Building a Banking Infrastructure with Microservices

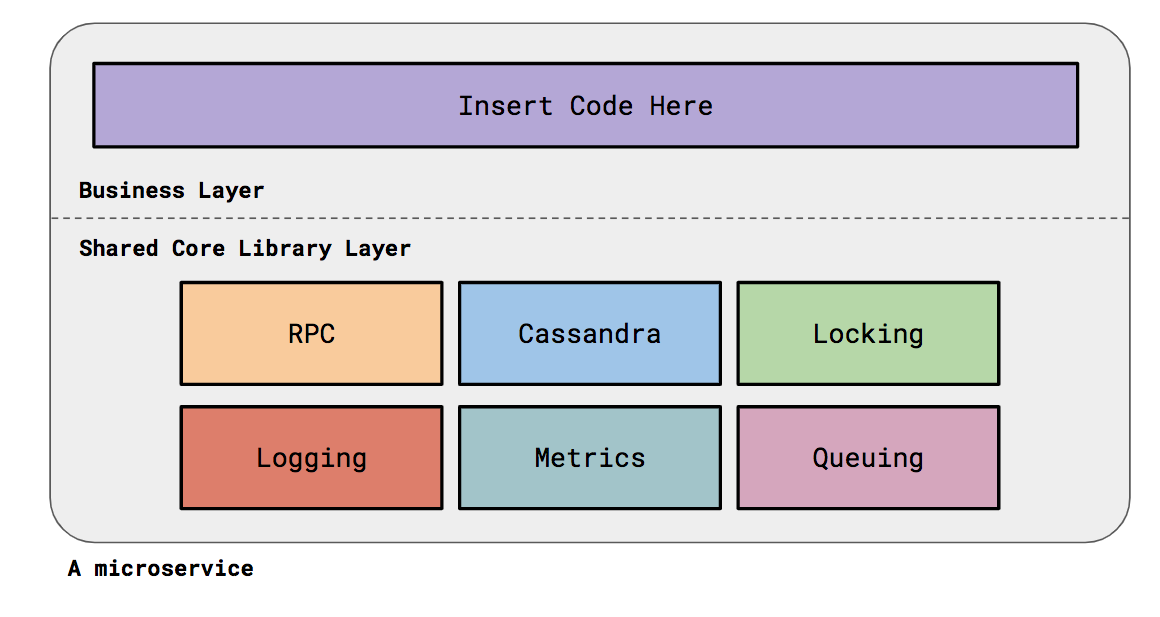

On the whole, the goal is to make engineers as autonomous as possible in organising their domain into the structure of the microservices they write and support. As a Platform Team, we provide the knowledge, documentation and tooling to support that. Each microservice has an owning team, which is responsible for the health of its services. When a service moves owners, other responsibilities like alerts and code review also move over automatically. ... Code generation starts from the very beginning of a service. An engineer will use a generator to create the skeleton structure of their service. This generates all the required folder structure and writes boilerplate code so that things like the RPC server are well configured and have appropriate metrics. Engineers can then define aspects like their RPC interface and use a code generator to produce implementation stubs for their RPC calls. Small reductions in cognitive overhead add up, allowing engineers to focus on business choices and reducing the paradox of choice. We do find cases where engineers need to deviate. That’s absolutely okay; our goal is not to prescribe this structure for every single service. We allow engineers to make the choice, with the knowledge that deviations need appropriate documentation or justification, and knowledge transfer.
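
The stub-generation step can be illustrated with a minimal Python sketch. It assumes a hypothetical interface description (a mapping from each RPC method name to its request and response type names) and emits a class with one unimplemented handler per method. The excerpt does not describe the real generator's input format or output, so every name here is illustrative rather than the team's actual tooling.

```python
from typing import Dict, Tuple

# Hypothetical interface description: RPC method name -> (request type, response type).
Interface = Dict[str, Tuple[str, str]]


def to_snake_case(name: str) -> str:
    """Convert a CamelCase RPC name such as 'GetBalance' into 'get_balance'."""
    out = []
    for i, ch in enumerate(name):
        if ch.isupper() and i > 0:
            out.append("_")
        out.append(ch.lower())
    return "".join(out)


def generate_stubs(service: str, interface: Interface) -> str:
    """Emit Python source containing one NotImplementedError stub per RPC method."""
    lines = [
        f"class {service}Handlers:",
        f'    """Generated stubs for {service}; fill in the bodies."""',
        "",
    ]
    for method in sorted(interface):  # sorted so regenerating gives identical output
        request, response = interface[method]
        # String annotations keep the generated code importable before the types exist.
        lines.append(f"    def {to_snake_case(method)}(self, request: '{request}') -> '{response}':")
        lines.append(f'        raise NotImplementedError("{method} is not implemented yet")')
        lines.append("")
    return "\n".join(lines)
```

Sorting the methods keeps the output deterministic, so regenerated stubs produce clean diffs in code review.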

Cybersecurity Skills Gap Worsens, Fueled by Lack of Career Development

The fundamental causes of the skills gap are myriad, starting with a lack of training and career-development opportunities. About 68 percent of the cybersecurity professionals surveyed by ESG/ISSA said they don’t have a well-defined career path, and basic growth activities, such as finding a mentor, getting basic cybersecurity certifications, taking on cybersecurity internships and joining a professional organization, are missing steps in their endeavors. The survey also found that many professionals start out in IT and find themselves working in cybersecurity without a complete skill set. ... The COVID-19 pandemic is not helping matters on this front: “Increasingly, lockdown has driven us all online and the training industry has been somewhat slow to respond with engaging, practical training supported by skilled practitioners who can share their expertise,” Steve Durbin, managing director of the Information Security Forum, told Threatpost. “Apprenticeships, on the job learning, backed up with support training packages are the way to go to tackle head on a shortage that is not going to go away.”

The Top 10 Digital Transformation Trends Of 2020: A Post Covid-19 Assessment

The use of big data and analytics has long been on a steady growth trajectory, and then COVID-19 exploded and made the need for data even greater. Companies and institutions like Johns Hopkins and SAS created COVID-19 health dashboards that compiled data from a myriad of sources to help governments and businesses make decisions to protect citizens, employees, and other stakeholders. Now, as businesses enter re-opening phases, we are using data and analytics for contact tracing and to help make other decisions in the workplace. Several big tech companies, including Microsoft, HPE, Oracle, Cisco and Salesforce, have recently announced data-driven tools to help bring employees back to work safely, some even offering them free to their customers. The need for data to drive all business decisions has grown, but this year we saw data analytics being used in real time to make critical business and life-saving decisions, and I am certain it won’t stop there. I expect massive continued investment from companies into data and analytics capabilities that power faster, leaner and smarter organizations in the wake of 2020’s global pandemic and economic strains.

How government policies are harming the IT sector | Opinion

Thanks to a series of misplaced policy choices, the government has systematically eroded the permitted operations of the Indian outsourcing industry to the point where it is no longer globally competitive. Foremost among these are the telecom regulations imposed on a category of companies broadly known as Other Service Providers (OSPs). Anyone who provides “application services” is an OSP, and the term “application services” is defined to mean “tele-banking, telemedicine, tele-education, tele-trading, e-commerce, call centres, network operation centres and other IT-enabled services”. When they were first introduced, these regulations were supposed to apply to the traditional outsourcing industry, focusing primarily on call centre operations. Over the years, however, they have been interpreted far more widely than originally intended. While OSPs do not require a licence to operate, they do have to comply with a number of telecom restrictions. The central regulatory philosophy behind these restrictions is the government’s insistence that voice calls terminated in an OSP facility over the regular Public Switched Telephone Network (PSTN) must be kept from intermingling with those carried over the data network.

Data science's ongoing battle to quell bias in machine learning

Data bias is tricky because it can arise from so many different things. As you have keyed into, there should be initial considerations of how the data is being collected and processed, to see whether operational or process-oversight fixes could prevent human bias from entering at the data creation phase. The next thing I like to look at is data imbalances between classes, features, etc. Oftentimes, models can be flagged as treating one group unfairly, but the reason is that there is not a large enough population of that class to really know for certain. Obviously, we shouldn't use models on people when there's not enough information about them to make good decisions. ... Machine learning interpretability [is about] how transparent model architectures are and increasing how intuitive and understandable machine learning models can be. It is one of the components that we believe makes up the larger picture of responsible AI. Put simply, it's really hard to mitigate risks you don't understand, which is why this work is so critical. By using things like feature importance, Shapley values and surrogate decision trees, we are able to paint a really good picture of why the model came to the conclusion it did, and whether the reason it came to that conclusion violates regulatory rules or makes common business sense.
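
Two of the checks mentioned here can be sketched in Python: counting rows per group to flag classes too small to judge fairly, and computing exact Shapley values for a single prediction. This is a minimal illustration under stated assumptions, not the interviewee's tooling: the `min_count` threshold is arbitrary, features "absent" from a coalition are replaced by a baseline input (one of several conventions), and exact enumeration only suits a handful of features; practical tools typically approximate for larger models.

```python
from collections import Counter
from itertools import combinations
from math import factorial
from typing import Callable, Hashable, Iterable, List, Sequence


def underrepresented_groups(groups: Iterable[Hashable], min_count: int) -> List[Hashable]:
    """Return groups with fewer than `min_count` rows (the threshold is an assumption)."""
    counts = Counter(groups)
    return sorted((g for g, c in counts.items() if c < min_count), key=str)


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values for one input; absent features take their baseline values.

    Every coalition is enumerated, so the cost grows as 2**n in the number of features.
    """
    n = len(x)

    def value(coalition: set) -> float:
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for each coalition S that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis
```

For a linear model the attributions reduce to weight times (value minus baseline) per feature, and for any model they sum to the prediction minus the baseline prediction, which makes a useful sanity check.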

Integration Testing ASP.NET Core Applications - Best Practices

Compared to unit tests, this allows much more of the application code to be tested together, which can rapidly validate the end-to-end behaviour of your service. These are also sometimes referred to as functional tests, since the definition of integration testing may be applied to more comprehensive multi-service testing as well. It’s entirely possible to test your applications in concert with their dependencies, such as databases or other APIs they expect to call. In the course, I show how boundaries can be defined using fakes to test your application without external dependencies, which allows your tests to be run locally during development. Of course, you can also skip such fakes and test with some real dependencies. This form of in-memory testing can then easily be expanded to broader testing of multiple services as part of CI/CD workflows. Producing these courses is a lot of work, but that effort is rewarded when people view the course and hopefully leave with new skills to apply in their work. If you have a subscription to Pluralsight already, I hope you’ll add this course to your bookmarks for future viewing.
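
The idea of in-memory testing behind a faked dependency boundary is not specific to ASP.NET Core. As a language-neutral illustration, here is a minimal Python analogue using the standard WSGI interface: a request is driven through the whole app in memory, with no socket, while a fake store stands in for the database. This is not the course's ASP.NET Core code; `FakeAccountStore`, `make_app`, `call` and the `/balance/` route are hypothetical names chosen for the sketch.

```python
import json
from typing import Dict, Optional, Tuple
from wsgiref.util import setup_testing_defaults


class FakeAccountStore:
    """Hypothetical in-memory stand-in for the real database dependency."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self._balances = dict(balances)

    def balance(self, account_id: str) -> Optional[int]:
        return self._balances.get(account_id)


def make_app(store):
    """Build a tiny WSGI service; the store is injected so tests can swap in a fake."""
    def app(environ, start_response):
        prefix = "/balance/"
        path = environ.get("PATH_INFO", "")
        if environ.get("REQUEST_METHOD") == "GET" and path.startswith(prefix):
            value = store.balance(path[len(prefix):])
            if value is not None:
                start_response("200 OK", [("Content-Type", "application/json")])
                return [json.dumps({"balance": value}).encode("utf-8")]
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"not found"]
    return app


def call(app, method: str, path: str) -> Tuple[str, bytes]:
    """Drive one request through the full app in memory and return (status, body)."""
    environ: dict = {}
    setup_testing_defaults(environ)  # fills in the mandatory WSGI keys with dummy values
    environ["REQUEST_METHOD"] = method
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        return lambda data: None  # WSGI requires a write() callable; unused here

    body = b"".join(app(environ, start_response))
    return captured["status"], body
```

Because the store is injected rather than constructed inside the app, the same test harness can later be pointed at a real database when broader CI/CD testing is wanted.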

How Robotic Process Automation (RPA) and digital transformation work together

RPA is not on its own an intelligent solution. As Everest Group explains in its RPA primer, “RPA is a deterministic solution, the outcome of which is known; used mostly for transactional activities and standardized processes.” Some common RPA use cases include order processing, financial report generation, IT support, and data aggregation and reconciliation. However, as organizations proceed along their digital transformation journeys, the fact that many RPA solutions are beginning to integrate cognitive capabilities increases their value proposition. For example, RPA might be coupled with intelligent character recognition (ICR) and optical character recognition (OCR). Contact center RPA applications might incorporate natural language processing (NLP) and natural language generation (NLG) to enable chatbots. “These are all elements of an intelligent automation continuum that allow a digital transformation,” Wagner says. “RPA is one piece of a lengthy continuum of intelligent automation technologies that, used together and in an integrated manner, can very dramatically change the operational cost and speed of an organization while also enhancing compliance and reducing costly errors.”
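
To make "deterministic, the outcome of which is known" concrete, here is a minimal Python sketch of one listed use case, reconciliation: two record sets are matched by transaction ID and their amounts compared, so the same inputs always produce the same report. It does not reflect how any particular RPA product works; the `Entry` record shape, the assumption that transaction IDs are unique within each set, and the exact-amount matching rule are all illustrative choices.

```python
from decimal import Decimal
from typing import List, NamedTuple


class Entry(NamedTuple):
    txn_id: str       # assumed unique within each record set
    amount: Decimal   # Decimal avoids binary floating-point rounding on money


class ReconciliationReport(NamedTuple):
    matched: List[str]
    amount_mismatch: List[str]
    missing_from_statement: List[str]
    missing_from_ledger: List[str]


def reconcile(ledger: List[Entry], statement: List[Entry]) -> ReconciliationReport:
    """Compare two record sets by transaction ID using a fixed, rule-based procedure."""
    ledger_by_id = {e.txn_id: e.amount for e in ledger}
    statement_by_id = {e.txn_id: e.amount for e in statement}
    matched, mismatched = [], []
    # Sorting makes the report order deterministic regardless of input order.
    for txn_id in sorted(ledger_by_id.keys() & statement_by_id.keys()):
        if ledger_by_id[txn_id] == statement_by_id[txn_id]:
            matched.append(txn_id)
        else:
            mismatched.append(txn_id)
    return ReconciliationReport(
        matched=matched,
        amount_mismatch=mismatched,
        missing_from_statement=sorted(ledger_by_id.keys() - statement_by_id.keys()),
        missing_from_ledger=sorted(statement_by_id.keys() - ledger_by_id.keys()),
    )
```

Anything that does not fit the fixed rules, such as a scanned remittance note, is where the cognitive add-ons mentioned above (OCR, NLP) would come in.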

It’s not about cloud vs edge, it’s about connections

“What is wanted is a new type of networking platform that establishes a reliable, high performance, zero trust connection across the Internet, meaning one that will only connect an authorised device and authorised user using an authorised application (i.e. ‘zero trust’),” he said. “With zero trust, every connection is continuously assessed to identify who or what is requesting access, have they properly authenticated, and are they authorised to use the resource or service being requested, before any network access is permitted.

“This can be achieved using software defined networking loaded into the edge device or embedding networking capabilities into applications with SDKs and APIs. This eliminates the need to procure, install and commission hardware. Unlike VPNs, these software-defined connections can be tightly segmented according to company policies (policy based access), determining which workgroups or devices can be connected, and what they can share and how.

“This suggests a new paradigm: an edge-core-cloud continuum, where apps and services will run wherever most needed, connected via zero trust networking access (ZTNA) capable of securing the edge to cloud continuum end to end....”
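
The per-connection check described in the quote can be sketched as a deny-by-default policy evaluation in Python. This is an illustrative model only, not any vendor's ZTNA API: the `ConnectionRequest` and `Policy` types, the workgroup-to-resource mapping and the reason strings are hypothetical, and a real deployment would establish authentication and device identity cryptographically rather than receive them as plain fields.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple


@dataclass(frozen=True)
class ConnectionRequest:
    user: str
    authenticated: bool  # assumed to come from an upstream identity check
    device_id: str
    application: str
    resource: str


@dataclass
class Policy:
    authorised_devices: Set[str]
    authorised_applications: Set[str]
    user_workgroups: Dict[str, str]           # user -> workgroup
    workgroup_resources: Dict[str, Set[str]]  # workgroup -> resources that segment may reach


def evaluate(request: ConnectionRequest, policy: Policy) -> Tuple[bool, str]:
    """Deny by default: every check runs on every connection, with no cached trust."""
    if not request.authenticated:
        return False, "user not authenticated"
    if request.device_id not in policy.authorised_devices:
        return False, "device not authorised"
    if request.application not in policy.authorised_applications:
        return False, "application not authorised"
    workgroup = policy.user_workgroups.get(request.user)
    allowed = policy.workgroup_resources.get(workgroup, set()) if workgroup else set()
    if request.resource not in allowed:
        return False, "resource outside the user's workgroup segment"
    return True, "allowed"
```

Calling `evaluate` on every new connection, rather than once per session as with a typical VPN login, is what the "continuously assessed" wording suggests; the workgroup mapping plays the role of the policy-based segmentation.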

Put Value Creation at the Center of Your Transformation

The leader of any transformation effort needs to be resilient and determined to deliver the program’s full potential. Yet that person also needs to understand and acknowledge the needs of employees during a radical upheaval. Sometimes leaders must be pragmatic, particularly when the company’s long-term survival is at stake. At other times, empathy and flexibility are more effective. One CEO brought determination and conviction to the company’s transformation, and he was able to tamp down dissent, gossip, and negative press. He was also willing to reverse his decisions on some matters. For example, one cost-reduction measure was a cutback in employee travel. Initially, the CEO told employees that they needed direct approval from him for any travel expenses above a certain amount. However, after about a year, he relaxed this policy after considering employees’ feedback. ... Transformations are a proving ground for leadership teams. They can be catalysts for long-term business success and financial performance, but companies undergoing a transformation underperform almost as often as they outperform. Our analysis shows that there is a systematic way to increase the odds of success.

The sinking fortunes of the shipping box

Another surprising problem for the global manufacturing model is that shipping has actually become less efficient, largely due to business decisions of the shippers. Maersk, the world-leading Danish firm, continued to order ever-larger container ships after the financial crisis, convinced that consumer demand would quickly resume its previous growth. When it did not, the firm and its competitors were forced to sail half-full megaships around the world. Because the ships were several meters wider than their predecessors, the process of removing containers took longer. And they were designed to travel more slowly to conserve fuel. Delays became much more common, undermining trust in the industry. Without reliable shipping, Levinson writes, firms have chosen to hold more inventory, which flies in the face of the prevailing orthodoxy. But things have changed. Inventories can act as a buffer when supply chains are in distress. For firms, “minimizing production costs was no longer the sole priority; making sure the goods were available when needed ranked just as highly.” It seems inevitable that the coronavirus pandemic will reinforce this drift back toward greater self-sufficiency in manufacturing.
