The most popular neural network styles and how they work

Feedforward networks are perhaps the most archetypal neural net. They offer a

much higher degree of flexibility than perceptrons but still are fairly

simple. The biggest difference is that a feedforward network uses more

sophisticated activation functions and usually incorporates more than one

layer. The activation function in a feedforward network is not just 0/1, or on/off:

the nodes output a continuously varying value. ... Recurrent neural networks, or RNNs,

are a style of neural network that involves data moving backward among layers.

The connections in this style of network form a cyclic graph. The backward

movement opens up a variety of more sophisticated learning techniques, and

also makes RNNs more complex than some other neural nets. We can say that RNNs

incorporate some form of feedback. ... Convolutional neural networks, or CNNs,

are designed for processing grids of data. In particular, that means images.

They are used as a component in the learning and loss phase of generative AI

models like Stable Diffusion, and for many image classification tasks. CNNs

use matrix filters that act like a window moving across the two-dimensional

source data, extracting the information in their view and relating it

together.
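
The three styles described above can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular framework implements them: the function names, the weights, and the choice of sigmoid and tanh activations are assumptions made for the example, and nothing here is trained. The filter function computes a cross-correlation, which is what most CNN libraries call convolution.

```python
import math

def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One feedforward layer: a weighted sum per node, then a smooth activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def recurrent_step(x, h_prev, w_in, w_back):
    """One recurrent step: the previous output h_prev is fed back in with the new input."""
    return math.tanh(w_in * x + w_back * h_prev)

def convolve2d(image, kernel):
    """Slide the kernel across the image like a window (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Feedforward: node outputs are continuous values, not just 0 or 1.
hidden = dense_layer([0.5, -1.0], [[0.4, 0.6], [-0.3, 0.8]], [0.0, 0.1])
print(hidden)

# Recurrent: each step's output feeds back into the next step.
h = 0.0
for x in [1.0, 0.5, -0.5]:
    h = recurrent_step(x, h, w_in=0.7, w_back=0.5)
print(h)

# Convolutional: a 2x2 filter that responds to a dark-to-bright vertical edge.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_filter = [[-1, 1],
               [-1, 1]]
print(convolve2d(image, edge_filter))  # [[0, 2, 0], [0, 2, 0]]
```

The filter output is non-zero only where the window straddles the edge between the dark and bright columns, which is the sense in which the window extracts local information from the grid.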

The startup CIO’s guide to formalizing IT for liquidity events

“You have to stop fixing problems in the data layer, relying on data

scientists to cobble together the numbers you need. And if continuing that

approach is advocated by the executives you work with, if it’s considered

‘good enough,’ quit,” he says. “Getting the numbers right at the source

requires that you straighten out not only the systems that hold the data, all

those pipelines of information, but also the processes whereby that data is

captured and managed. No tool will ever entirely erase the friction of getting

people to enter their data in a CRM.” The second piece to getting the numbers

right comes at the end: closing the books. While this process is a near

ubiquitous struggle for all growing companies, Hoyt offers two points of

optimism. “First,” he explains, “many teams struggle to close the books simply

because the company hasn’t invested in the proper tools. They’ve kicked the

can down the street. And second, you have a clear metric of improvement: the

number of days taken to close.” Hoyt suggests investing in the proper tools

and then trying to shave the days-to-close each quarter. Get your numbers

right, secure your company, bring it into compliance, and iron out your ops

and infrastructure.

Majority of commercial codebases contain high-risk open-source code

Advocates of open-source software have long argued that many eyes on code lead

to fewer bugs and vulnerabilities, and the report doesn’t disprove that

assertion, McGuire said. “If anything, the report supports that belief,” he

said. “The fact that there are so many disclosed vulnerabilities and CVEs serves

as a testament to how active, vigilant, and reactive the open-source community

is, especially when it comes to addressing security issues. It’s this very

community that is doing the discovery, disclosure, and patching work.” However,

users of open-source software aren’t doing a good job of managing it or

implementing the fixes and workarounds provided by the open-source community, he

said. The primary purpose of the report is to raise awareness about these issues

and to help users of open-source software better mitigate the risks, he said.

“We would never recommend any software producer avoid using, or tamp down their

usage, of open source,” he added. “In fact, we would argue the opposite, as the

benefits of open source far outweigh the risks.” Open-source software has

accelerated digital transformation and allowed companies to develop innovative

applications that consumers want, he said.

From gatekeeper to guardian: Why CISOs must embrace their inner business superhero

You, the CISO, are no longer just the security guard at the front gate. You're

the city planner, the risk management consultant, the chief resilience officer,

and the chief of police all rolled into one. You need to understand the flow of

traffic, the critical infrastructure, and the potential vulnerabilities lurking

in every alleyway. But how do we, the guardians of the digital realm, transform

into these business superheroes? Fear not, fellow CISOs, for the path to

upskilling and growth is paved with strategic learning, effective communication,

and more than a dash of inspirational or motivational leadership. ... As the

lone wolf days have ended, so too have the days when technical expertise alone

could guarantee a CISO’s success. Today's CISO needs to be a voracious learner,

constantly expanding their knowledge and skills. ... Failure to effectively

communicate is a career killer for any CXO. To be influential, especially with

the C-suite, CISOs must learn to speak in ways understood by their C-suite

peers. Imagine how your eyes may glaze over when a CFO starts talking capex,

opex, or EBITDA. Realize the same will happen for these cybersecurity

“outsiders.”

Looking good, feeling safe – data center security by design

For data centers in shared spaces, sometimes turning data halls into display

features is a way to make them secure. Keeping compute in a secure but openly

visible space means it’s harder to do anything unnoticed. It may also help

some engineers be more mindful about keeping the halls tidy and cabling neat.

“Some people keep data centers behind closed walls and keep them hidden and

private. Others use them as features,” says Nick Ewing, managing director at

UK modular data center provider EfficiencyIT. “The best ones are the ones

where the customers like to make a feature of the environment and use it

as a bit of a display.” An example he cites is the Wellcome Sanger

Institute in Cambridge, where they have four data center quadrants. Each

quadrant is about 100 racks; they have man traps at either end of the data

center corridor. But one end of the main quadrant is full of glass. “They have

an LED display, which is talking about how many cores of compute, how much

storage they’ve got, how many genomic sequences they've sequenced that

day,” he says. “They've used it as a feature and used it to their

advantage.”

Neuromorphic computing: The future of IoT

The adoption of neuromorphic computing in IoT promises many benefits, ranging

from enhanced processing power and energy efficiency to increased reliability

and adaptability. Here are some key advantages: More Powerful AI: Neuromorphic

chips enable IoT devices to handle complex tasks with unprecedented speed and

efficiency. By collocating memory and processing and leveraging parallel

processing capabilities, these chips overcome the limitations of traditional

architectures, resulting in near-real-time decision-making and enhanced

cognitive abilities. Lower Power Consumption: One of the most significant

advantages of neuromorphic computing is its energy efficiency. By adopting an

event-driven approach and utilizing components like memristors, neuromorphic

systems minimize energy consumption while maximizing performance, making them

ideal for power-constrained IoT environments. Extensive Edge Networks: With

the proliferation of edge computing, there is a growing need for IoT devices

that can process data locally in real-time. Neuromorphic computing addresses

this need by providing the processing power and adaptability required to run

advanced applications at the edge, reducing reliance on centralized servers

and improving overall system responsiveness.
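
The event-driven approach mentioned above can be illustrated with a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. The source does not name a specific model, so this choice, the function name `run_lif_neuron`, and the `leak` and `threshold` values are all assumptions for the sketch. The point it tries to show is that work happens only when an input event arrives; idle time costs nothing beyond one multiplication.

```python
def run_lif_neuron(events, leak=0.9, threshold=1.0):
    """Simulate a leaky integrate-and-fire neuron in an event-driven way.

    events: list of (time_step, charge) pairs, sorted by time_step.
    Returns the time steps at which the neuron fired.
    """
    potential = 0.0
    last_t = 0
    spikes = []
    for t, charge in events:
        # Apply the leak for the whole idle gap at once instead of
        # stepping through every tick where nothing happened.
        potential *= leak ** (t - last_t)
        potential += charge          # integrate the incoming event
        last_t = t
        if potential >= threshold:
            spikes.append(t)         # emit an output event (a spike)
            potential = 0.0          # reset after firing
    return spikes

# Two inputs close together push the neuron over the threshold.
print(run_lif_neuron([(1, 0.6), (2, 0.6), (8, 0.6)]))  # [2]

# The same two inputs spread apart leak away before they can add up.
print(run_lif_neuron([(0, 0.6), (5, 0.6)]))  # []
```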

Decentralizing the AR Cloud: Blockchain's Role in Safeguarding User Privacy

For devices to interpret the world, their cameras need access to some

kind of digital counterpart that they can cross-reference. And that digital

counterpart of the world is much too complex to fit inside one device.

Therefore, the AR cloud has been developed. The AR cloud is a network of

computers that work to help devices understand the physical world. ... The AR

cloud is akin to an API to the world. The implications for applications that

require knowledge about location, context, and more are considerable. In AR,

the data is intimate data about where we are, who we are with, what we’re

saying, looking at, and even what our living quarters look like. AR devices

can read our facial expressions, and more, similar to how the Apple Watch can

measure the heart rates of its wearers. Digital service providers will have

access to a bevy of information and also insight into our thinking, wants,

needs, and desires. Storing that data in a centralized server that is opaque

is cause for concern. Blockchain allows people to take that same intimate

private data, and put it on their own server from which they could access the

wondrous world of AR minus such egregious privacy concerns.

Five ways AI is helping to reduce supply chain attacks on DevOps teams

Attackers are using AI to penetrate an endpoint to steal as many forms of

privileged access credentials as they can find, then use those credentials to

attack other endpoints and move throughout a network. Closing the gaps between

identities and endpoints is a great use case for AI. A parallel development is

also gaining momentum across the leading extended detection and response (XDR)

providers. CrowdStrike co-founder and CEO George Kurtz told the keynote

audience at the company’s annual Fal.Con event last year, “One of the areas

that we’ve really pioneered is that we can take weak signals from across

different endpoints. And we can link these together to find novel detections.

We’re now extending that to our third-party partners so that we can look at

other weak signals across not only endpoints but across domains and come up

with a novel detection.” Leading XDR platform providers include Broadcom,

Cisco, CrowdStrike, Fortinet, Microsoft, Palo Alto Networks, SentinelOne,

Sophos, TEHTRIS, Trend Micro and VMware. Enhancing LLMs with telemetry and

human-annotated data defines the future of endpoint security.
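
The idea of linking weak signals across endpoints can be sketched roughly as follows. This is a hypothetical illustration only and not how CrowdStrike or any XDR product works: the function name, the tuple layout, the scores, and the thresholds are all invented for the example. Each signal is too weak to alert on by itself, but signals tied to the same identity on several endpoints add up.

```python
from collections import defaultdict

def correlate_weak_signals(signals, alert_threshold=1.0, min_endpoints=2):
    """Flag identities whose weak signals, combined across endpoints, look strong.

    signals: iterable of (identity, endpoint, score) tuples (hypothetical format).
    Returns a sorted list of flagged identities.
    """
    total_score = defaultdict(float)
    endpoints_seen = defaultdict(set)
    for identity, endpoint, score in signals:
        total_score[identity] += score
        endpoints_seen[identity].add(endpoint)
    return sorted(
        identity
        for identity in total_score
        if total_score[identity] >= alert_threshold
        and len(endpoints_seen[identity]) >= min_endpoints
    )

signals = [
    ("svc-build", "laptop-1", 0.4),     # unusual credential read
    ("svc-build", "ci-runner-7", 0.4),  # login from a new host
    ("svc-build", "db-2", 0.3),         # off-hours access
    ("alice", "laptop-3", 0.5),         # a single weak signal, one endpoint
]
print(correlate_weak_signals(signals))  # ['svc-build']
```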

Blockchain transparency is a bug

Transparency isn’t a feature of decentralization that is truly needed to

perform on-chain transactions securely — it’s a bug that forces Web3 users to

expose their most sensitive financial data to anyone who wants to see it.

Several blockchain marketing tools have emerged over the past few years,

allowing marketers and salespeople to use the freely flowing on-chain data for

user insights and targeted advertising. But this time, it’s not just

behavioral data that is analyzed. Now, your most sensitive financial

information is also added to the mix. Web3 will never become mainstream unless

we manage to solve this transparency problem. Blockchain and Web3 were an

escape from centralized power, making information transparent so that

centralized entities cannot own one’s data. Then 2020 came, Web3 and NFTs

boomed, and many started talking about how free-flowing, available-to-all data

is a clear improvement over having your data “stolen” by big data companies.

Some may think if everyone can see the data, transparency will

empower users to take ownership of and profit from their own data. Yet,

transparency does not mean data can’t be appropriated nor that users are

really in control.

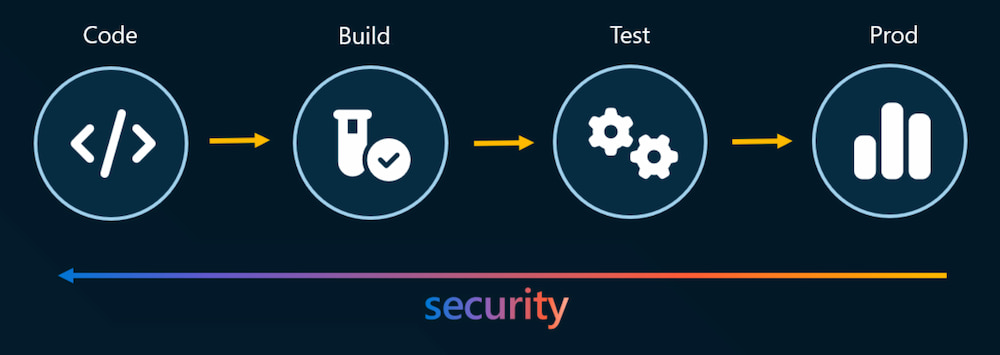

Key Considerations to Effectively Secure Your CI/CD Pipeline

Effective security in a CI/CD pipeline begins with the definition of clear and

project-specific security policies. These policies should be tailored to the

unique requirements and risks associated with each project. Whether it's

compliance standards, data protection regulations, or industry-specific

security measures (e.g., PCI DSS, HDS, FedRAMP), organizations need to define

and enforce policies that align with their security objectives. Once security

policies are defined, automation plays a crucial role in their enforcement.

Automated tools can scan code, infrastructure configurations, and deployment

artifacts to ensure compliance with established security policies. This

automation not only accelerates the security validation process but also

reduces the likelihood of human error, ensuring consistent and reliable

enforcement. In the DevSecOps paradigm, the integration of security gates

within the CI/CD pipeline is pivotal to ensuring that security measures are an

inherent part of the software development lifecycle. If you set up security

scans or controls that users can bypass, those methods become totally useless

— you want them to become mandatory.
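
A mandatory security gate of the kind described above might look something like this. It is a minimal sketch under stated assumptions: the finding format, the severity names, and the function names are hypothetical, and a real pipeline would read findings from whatever scanner it runs. The one design point it illustrates is that the gate offers no skip option, so a failing scan always fails the stage.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def blocking_findings(findings, max_allowed="medium"):
    """Return the findings whose severity is above the policy limit."""
    limit = SEVERITY_RANK[max_allowed]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] > limit]

def gate_exit_code(findings, max_allowed="medium"):
    """Return 0 if the build may proceed, 1 if the gate blocks it.

    There is deliberately no bypass parameter: the only way past the
    gate is to fix the findings or change the policy itself.
    """
    blocked = blocking_findings(findings, max_allowed)
    for f in blocked:
        print(f"BLOCKED: {f['id']} ({f['severity']})")
    return 1 if blocked else 0

# Hypothetical scanner output for one build.
example_findings = [
    {"id": "dep-outdated-lib", "severity": "medium"},
    {"id": "hardcoded-secret", "severity": "critical"},
]
print(gate_exit_code(example_findings))  # prints the blocked finding, then 1
```

In a CI job, the step would pass this return value to `sys.exit`, so that a non-zero code stops the pipeline before deployment.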

Quote for the day:

"It is better to fail in originality

than to succeed in imitation." -- Herman Melville
