Top 3 API Vulnerabilities: Why Apps are Pwned by Cyberattackers

2021 is already the year of the API security incident, and the year is not over. API flaws impact the entire business, not just development, security or the business groups. Finger-pointing has never fixed the problem. The fix begins with collaboration: development needs a full understanding from business groups of how the API should function. API coding is different, so a refresher on secure coding practices is warranted. And security needs to be involved upfront to help uncover gaps before publication. A great place to start is OWASP. It has published the API Security Top 10 and recently published the Completely Ridiculous API, which includes examples of bad APIs in an application. Organizations can use the Completely Ridiculous API online or in-house as an educational platform to train development and security teams on the errors to avoid when using APIs. Whether you are taking an “API-first approach” or just starting your journey into digital transformation aided by APIs, knowing the vulnerabilities that are out there, and what might happen if something is missed, is crucial.
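The excerpt does not name specific flaws, so the following is only one illustration: the first entry in the 2019 OWASP API Security Top 10 is broken object level authorization, where an endpoint returns an object by ID without checking that the caller is allowed to see it. The minimal Python sketch below shows the kind of ownership check that closes that gap. The `Invoice` type, the `INVOICES` store and the `get_invoice` function are hypothetical names invented for this example, not part of any real API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int
    amount: float


# Hypothetical in-memory store standing in for a real database.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, amount=25.0),
    2: Invoice(invoice_id=2, owner_id=200, amount=990.0),
}


def get_invoice(caller_id: int, invoice_id: int) -> Invoice:
    """Return the invoice only if the authenticated caller owns it."""
    invoice = INVOICES.get(invoice_id)
    # Treat "missing" and "not yours" the same way, so the error
    # does not reveal which invoice IDs exist.
    if invoice is None or invoice.owner_id != caller_id:
        raise PermissionError("invoice not found or not accessible")
    return invoice
```

In this sketch, `get_invoice(100, 1)` succeeds, while `get_invoice(100, 2)` raises `PermissionError` because invoice 2 belongs to a different user. The vulnerable variant is the same function without the ownership comparison.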

How Tech Leaders Can Leverage Their Mentoring and Teaching with Coaching

Putting the focus on the other person means that we are encouraging them to do all of the work of coming up with a solution. We refrain from asking information-gathering questions and instead ask questions that will help them solve the problem on their own. After all, anything that they have an answer to ... they already know! We want to help them make new connections in order to come up with new ideas that they didn’t have when they started talking to us. We also refrain from sharing our thoughts and opinions until they ask us for them directly or it is clear that they could benefit from some information that we have that they don’t. To aid in this, consider saying something early on in your conversation like, "I’m going to put my coaching hat on. I’m happy to share my expertise with you, but prefer to explore a bit first. If we get to the point where you really want to know my thoughts or I think of something that may be helpful to share, I can switch to my ‘expert’ hat."

All About Waymo’s AI-Powered Urban Driver

Security engineer job requirements, certifications, and salary

Why should I choose Quarkus over Spring for my microservices?

Quarkus can automatically detect changes made to Java and other resource and configuration files, then transparently recompile and redeploy the changes. Usually within a second, you can view your application’s output or compiler error messages. This feature can also be used with Quarkus applications running in a remote environment. The remote capability is useful where rapid development or prototyping is needed but provisioning services in a local environment isn’t feasible or possible. Quarkus takes this concept a step further with its continuous testing feature to facilitate test-driven development. As changes are made to the application source code, Quarkus can automatically rerun affected tests in the background, giving developers instant feedback about the code they are writing or modifying. ... From the beginning, Quarkus was designed around Kubernetes-native philosophies, optimizing for low memory usage and fast startup times. As much processing as possible is done at build time. Classes used only at application startup are invoked at build time and not loaded into the runtime JVM, reducing the size, and ultimately the memory footprint, of the application running on the JVM.

Sustainable transformation of agriculture with the Internet of Things

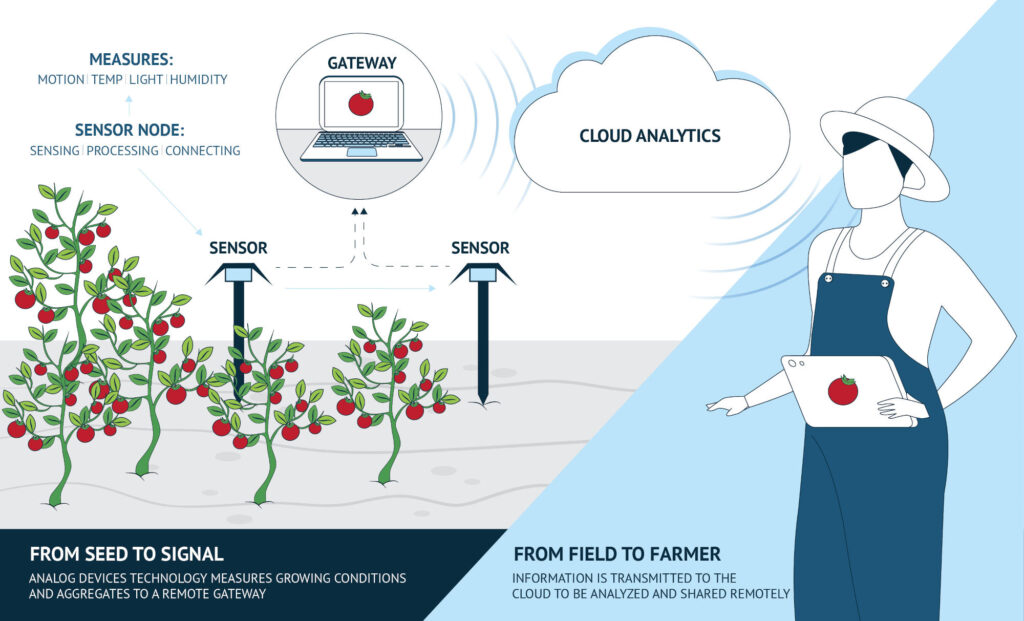

With the urgency to prevent environmental degradation, reduce waste and increase profitability, farmers around the globe are increasingly opting for more efficient crop management solutions supported by optimization and control technologies derived from the Industrial Internet of Things (IIoT). Intelligent information and communication technologies (IICT), such as machine learning (ML), AI, IoT, cloud-based analytics, actuators, and sensors, are being implemented to achieve greater control of spatial and temporal variability with the aid of satellite remote sensing. The use and application of this set of related technologies is known as “Smart Agriculture” (SA). In SA, real-time and continuous monitoring of weather, crop growth, plant physical/chemical variables, and other critical environmental factors allows the optimization of yield production, reduction of labor, and improvement of farming products. Practices such as irrigation management, resource management, production, or fertilization operations are being facilitated by integrating IoT systems capable of providing information about multiple crop factors.
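As a rough illustration of how sensor data can drive a practice like irrigation management, the sketch below averages soil-moisture readings from several probes and decides whether to irrigate. The 30% threshold, the `needs_irrigation` function and the rain-forecast flag are assumptions made for this example; real systems would calibrate such values per crop and soil type.

```python
from statistics import mean

# Hypothetical threshold: average volumetric soil moisture (%) below
# which the field is considered too dry.
MOISTURE_THRESHOLD_PCT = 30.0


def needs_irrigation(readings_pct, rain_expected,
                     threshold=MOISTURE_THRESHOLD_PCT):
    """Decide whether to irrigate from recent soil-moisture readings.

    readings_pct  -- moisture percentages reported by field sensors
    rain_expected -- True if the weather feed forecasts rain soon
    """
    if not readings_pct:
        raise ValueError("no sensor readings available")
    # Irrigate only when the field is dry and no rain is on the way.
    return mean(readings_pct) < threshold and not rain_expected
```

For example, `needs_irrigation([22.0, 25.5, 27.0], rain_expected=False)` returns `True`, while the same readings with `rain_expected=True` return `False`, which saves water when rain is forecast.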

Mainframes, ML and digital transformation

Moving from mainframes to client-server didn't just mean you went from renting one kind of box to buying another - it changed the whole way that computing worked. In particular, software became a separate business, and there were all sorts of new companies selling you new kinds of software, some of which solved existing problems but some of which changed how a company could operate. SAP made just-in-time supply chains a lot easier, and that enabled Zara, and Tim Cook’s Apple. New categories of software enabled new ways of doing business. The same shift is happening now, as companies move to the cloud - you go from owning boxes to renting them (perhaps), but more importantly you change what kinds of software you can use. If buying software means a URL, a login and a corporate credit card instead of getting onto the global IT department’s datacenter deployment schedule for sometime in the next three years, then you can have a lot more software from a lot more companies.

What’s next for data privacy in the UK?

Since the implementation of GDPR, there has been a surge in recruitment for roles like ‘head of data governance and privacy’. It’s time to seize this momentum and move to the next milestone – let’s call it GDPR+. GDPR+ needs to answer the question of how we protect and use data within the country and across borders. Ideally, we need a Data Privacy Act and a cross-party overseer of the whole process whose remit spans all government departments – a kind of ‘data privacy czar’. Ideally this would be an individual with a strong background in data. The question that needs to be answered is how we ensure that businesses align their practices with any new regulation and handle data responsibly rather than selling it for their own gain. Data fiduciaries could be part of the solution: third-party organisations that are given the legal right to handle private data. But it needs to be a non-political, government-funded third party. It’s most likely that the government would outsource any enforcement, but it’s pertinent to ask whether a private company would have the best interests of individual citizens at heart.

Why you want what you want

Great marketers are certainly masters of mimetic manipulation. Burgis points to Edward Bernays, the public relations pioneer, as a prime example. In 1929, when the American Tobacco Company realized that breaking the taboo against women smoking in public could generate beaucoup revenue, it hired Bernays’s firm. He convinced 30 New York City debutantes to join the Easter parade and light up Lucky Strikes, and arranged to have them photographed. The next day, the photos of the debs smoking their “torches of freedom” appeared in newspapers across the country. Sales of Lucky Strikes tripled by the following Easter. ... Much of Wanting is devoted to translating and illustrating Girard’s theories in a consumable way, and Burgis does a fine job at that task. The book’s most salient point, even if it is somewhat opaque, is that leaders choose to pursue what Burgis calls transcendent desire: “Magnanimous, great-spirited leaders are driven by transcendent desire – desire that leads outward, beyond the existing paradigm, because the models are external mediators of desire. These leaders expand everyone’s universe of desire and help them explore it.”

Getting ahead of a major blind spot for CISOs: Third-party risk

As the industry has seen firsthand, even mature and well-established enterprise security teams lack visibility into the network hygiene of their branches, offices and contractors abroad due to varying security policies and protocols, management hierarchy and known pain points in franchise-based businesses. The same applies to their supply chain, where the level of network hygiene is typically a “black box” or something the third party is simply not willing to discuss. Acquiring quantitative, historical and recent indicators of compromise is a vital component of third-party risk management (TPRM), giving enterprise organizations actionable information to determine whether a counterpart may be compromised with malware and which service may have been breached by it. This knowledge enables CISOs to make strategic and tactical decisions, as well as to communicate with other teams, including those responsible for vendor management and supply chain and the organization’s legal team.
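To make the idea of checking a counterpart against indicators of compromise more concrete, here is a minimal Python sketch that matches a vendor's known service addresses against a list of suspicious network ranges. The function name `find_compromised_hosts`, the service labels and the address ranges (taken from the reserved documentation blocks) are all hypothetical; a real TPRM workflow would draw on threat-intelligence feeds and far richer indicators than IP ranges.

```python
from ipaddress import ip_address, ip_network


def find_compromised_hosts(vendor_hosts, ioc_networks):
    """Map each vendor service to the IoC ranges its address falls in.

    vendor_hosts -- dict of service name -> IP address string
    ioc_networks -- iterable of CIDR strings flagged as malicious
    """
    networks = [ip_network(cidr) for cidr in ioc_networks]
    hits = {}
    for service, address in vendor_hosts.items():
        ip = ip_address(address)
        matched = [str(net) for net in networks if ip in net]
        if matched:
            hits[service] = matched
    return hits


# Hypothetical vendor inventory and indicator list.
vendor = {"vpn-gateway": "203.0.113.7", "mail": "198.51.100.20"}
indicators = ["203.0.113.0/24"]
flagged = find_compromised_hosts(vendor, indicators)
```

Here `flagged` contains only the `vpn-gateway` entry, which tells the reviewer both that the counterpart may be affected and which service is implicated.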

Quote for the day:

"Leadership is an ever-evolving position." -- Mike Krzyzewski
