4 Steps for Fostering Collaboration Between IT Network and Security Teams

Collaboration requires a single source of truth or shared data that's reliable

and accessible to all involved. If one team is working with outdated

information, or a different type of data entirely, it won't be on the same page

as the other team. Likewise, if one team lacks specific details, such as

visibility into a public cloud environment, it won't be an effective partner.

Unfortunately, many enterprise-level organizations struggle with data control

conflicts because individual teams can be overly protective of data they

extract. As a result, what is shared is sometimes inconsistent, irrelevant, or

out of date. At the same time, many network and security tools are already

leveraging the same data, such as network packets, flows, and robust sets of

metadata. This network-derived data, or "smart data," must support workflows

without requiring management tool architects to cobble together multiple

secondary data stores to prop it up. Consequently, network and security teams

should find ways to unify their data collection and the tools they use for

analysis wherever possible to overcome sharing issues.

A guide to sensor technology in IoT

There is still plenty of room for IoT sensor technology to grow, and further

disrupt multiple industries, in the coming years. With a hybrid working model

set to continue being common among businesses, the use of IoT sensors can enable

employees who choose not to work on company premises to carry out tasks

remotely. Meanwhile, as smart cities continue developing, IoT sensors will also

remain a big part of the lives of citizens. With national infrastructures

involving IoT sensors in the works around the world, businesses will be able to

benefit from increased connectivity and decreased costs, while being able to cut

carbon emissions as national and global sustainability targets loom. The

roll-out of 5G also promises to boost the IoT space, with more and more device

varieties set to be compatible with the burgeoning wireless technology. This

won’t mean that LPWAN will lose its relevance, however — organisations will

still find valuable uses for smaller amounts of data that may be easier to

manage and transfer between devices. There is the breakthrough of standards such

as LTE-M and NB-IoT to consider here, as well.

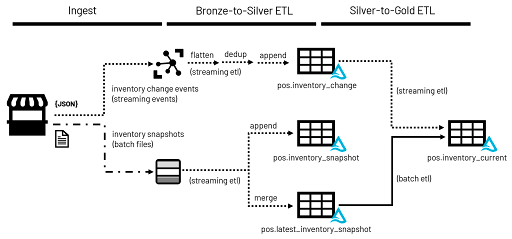

Real-time Point-of-Sale Analytics With a Data Lakehouse

Different processes generate data differently within the POS. Sales

transactions are likely to leave a trail of new records appended to relevant

tables. Returns may follow multiple paths triggering updates to past sales

records, the insertion of new, reversing sales records and/or the insertion of

new information in returns-specific structures. Vendor documentation, tribal

knowledge and even some independent investigative work may be required to

uncover exactly how and where event-specific information lands within the POS.

Understanding these patterns can help build a data transmission strategy for

specific kinds of information. Higher frequency, finer-grained,

insert-oriented patterns may be ideally suited for continuous streaming. Less

frequent, larger-scale events may best align with batch-oriented, bulk data

styles of transmission. But if these modes of data transmission represent two

ends of a spectrum, you are likely to find most events captured by the POS

fall somewhere in between. The beauty of the data lakehouse approach to data

architecture is that multiple modes of data transmission can be employed in

parallel.
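As a rough illustration of the spectrum described above, the sketch below assigns a transmission mode to each kind of POS event. The `EventProfile` fields, the threshold values, and the "micro-batch" label for the middle of the spectrum are all assumptions made for this example; none of them come from a particular POS or lakehouse product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventProfile:
    """Hypothetical summary of how one kind of POS event lands in the source system."""
    name: str
    events_per_hour: float  # assumed rough arrival rate
    rows_per_event: int     # assumed rough size of each event
    insert_only: bool       # True if the event only appends new records


def choose_transmission_mode(
    profile: EventProfile,
    high_frequency: float = 1000.0,   # assumed cut-off, tune per deployment
    large_event_rows: int = 10_000,   # assumed cut-off, tune per deployment
) -> str:
    """Pick a transmission mode from the event's frequency, size, and write pattern."""
    frequent = profile.events_per_hour >= high_frequency
    large = profile.rows_per_event >= large_event_rows

    # High-frequency, fine-grained, insert-oriented: continuous streaming.
    if frequent and profile.insert_only and not large:
        return "streaming"
    # Infrequent, larger-scale events: batch-oriented bulk transfer.
    if large and not frequent:
        return "batch"
    # Everything else sits between the two ends of the spectrum.
    return "micro-batch"


profiles = [
    EventProfile("sales", events_per_hour=5000, rows_per_event=3, insert_only=True),
    EventProfile("inventory_snapshot", events_per_hour=1, rows_per_event=50_000, insert_only=False),
    EventProfile("returns", events_per_hour=40, rows_per_event=2, insert_only=False),
]
modes = {p.name: choose_transmission_mode(p) for p in profiles}
```

With these assumed profiles, sales map to streaming, inventory snapshots to batch, and returns land in between, so all three modes end up running side by side.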

The human factor in cybersecurity

People are creatures of habit who seek out shortcuts and efficiencies. If I

write a 5-step process for logging in to my most secure system, at least one

person will email me explaining how they found a shortcut. And there will be

many who complain about having to wait 4 seconds as their login is verified. I

know this, and so I push back on my team when they establish new protocols.

Can we make this easier? Can we use a tool – like multi-factor authentication

or PIV cards? Can we eliminate irritating parts of cybersecurity? Yes, the

solutions might cost more, but the benefit is compliance. I never want to

create a system that has my people jotting weekly passwords on post-it notes.

So, I ask my security team to think like a busy employee, a hurried exec, and

a distracted engineer – and remove complexity from our routines. Cybersecurity

measures take time to work, but human brains process faster. We might accept

that implementing an excellent cyber program and maintaining cyber hygiene

—like basic email scanning or link scanning—adds a layer of inefficiency;

however, this is often a difficult concept for employees.

Now Is The Time To Update Your Risk Management Strategy And Prioritize Cybersecurity

It’s clear that cybersecurity threats are real for companies of all types and

sizes, and so if there is one area of risk management businesses can

strengthen this year, it should be this. The good news is that many companies

are doing just that. A recent OnePoll survey of 375 senior-level IT security

professionals, commissioned by my company, confirms this. Respondents in our

survey indicated that recent data breaches, like SolarWinds, are impacting the

way their organizations prioritize cybersecurity. Nearly all respondents

believe cybersecurity is considered a top business risk within their

organizations, and 82% say these breaches have either greatly or somewhat

impacted the way their organization prioritizes cybersecurity. The U.K.’s

Department for Digital, Culture, Media and Sport commissioned another survey

that underscores these findings. They found that 77% of businesses say

cybersecurity is “a high priority for their directors or senior managers.”

This prioritization is also turning into real investment in cybersecurity

measures by businesses, which means company leaders are walking the talk.

7 Microservices Best Practices for Developers

At times, it might seem to make sense for different microservices to access

data in the same database. However, a deeper examination might reveal that one

microservice only works with a subset of database tables, while the other

microservice only works with a completely different subset of tables. If the

two subsets of data are completely orthogonal, this would be a good case for

separating the database into separate services. This way, a single service

depends on its dedicated data store, and that data store's failure will not

impact any service besides that one. We could make an analogous case for file

stores. When adopting a microservices architecture, there's no requirement for

separate microservices to use the same file storage service. Unless there's an

actual overlap of files, separate microservices ought to have separate file

stores. With this separation of data comes an increase in flexibility. For

example, let's assume we had two microservices, both sharing the same file

storage service with a cloud provider. One microservice regularly touches

numerous assets, each of which is small in file size.
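The isolation argument can be shown with a small sketch in which each service owns a dedicated store, so an outage in one store leaves the other service untouched. `FileStore`, `ThumbnailService`, and `ArchiveService` are hypothetical names invented for this example, and the in-memory store merely stands in for a real file storage service.

```python
class StoreUnavailable(Exception):
    """Raised when a service's backing store cannot be reached."""


class FileStore:
    """In-memory stand-in for a file storage service (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.available = True
        self._files = {}

    def put(self, path: str, data: bytes) -> None:
        self._check()
        self._files[path] = data

    def get(self, path: str) -> bytes:
        self._check()
        return self._files[path]

    def _check(self) -> None:
        if not self.available:
            raise StoreUnavailable(self.name)


class ThumbnailService:
    """Touches many small assets; owns its own store."""

    def __init__(self, store: FileStore) -> None:
        self._store = store

    def save(self, name: str, data: bytes) -> None:
        self._store.put(f"thumbs/{name}", data)

    def load(self, name: str) -> bytes:
        return self._store.get(f"thumbs/{name}")


class ArchiveService:
    """Owns a separate store, so its outages stay local."""

    def __init__(self, store: FileStore) -> None:
        self._store = store

    def save(self, name: str, data: bytes) -> None:
        self._store.put(f"archives/{name}", data)

    def load(self, name: str) -> bytes:
        return self._store.get(f"archives/{name}")


thumb_store = FileStore("thumb-store")
archive_store = FileStore("archive-store")
thumbs = ThumbnailService(thumb_store)
archive = ArchiveService(archive_store)

thumbs.save("a.png", b"small")
archive.save("b.tar", b"large")

# Simulate an outage in the archive store only.
archive_store.available = False
still_served = thumbs.load("a.png")
```

Because neither service holds a reference to the other's store, each store can also be sized, priced, and tuned for the access pattern of the one service that uses it.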

Why the ‘accidental hybrid’ cloud exists, and how to manage it

Because many security tools were designed for an on-premises world, they can lack the

application-level insight needed to positively impact digital services.

Businesses are therefore inevitably becoming more vulnerable to cyber attacks,

especially as an ‘accidental hybrid’ environment makes it challenging to

accurately monitor traffic or detect potential threats. If a SecOps team has a

‘clouded’ vision into the cloud environment, they may be forced to rely only

on trace files or application logs that ultimately provide a less than perfect

view into the network. What’s more, with the pervasive issue of the digital

skills shortage, there is a significant lack of experts who truly understand

how to secure the hybrid cloud environment. As long as a visibility strategy

is prioritised, network automation becomes an invaluable solution to

overcoming the issues of overstretched security professionals and the

increasing ‘threatscape’. While it may have seemed a daunting process in the

past, automation of data analysis is now surprisingly simple and can be

integral for gaining better insight and, in turn, mitigating attacks.

How Quantifying Information Leakage Helps to Protect Systems

The first and most important step is to identify the high-value secrets that

your system is protecting. Not all assets need the same degree of protection.

The next step is to identify observable information that could be correlated

to your secret. Try to be as comprehensive as possible, considering time,

electrical output, cache states, and error messages. Once you have identified

what an attacker could observe, a good preventative measure is to disassociate

this observable information from your sensitive information. For example, if

you notice that a program processing some sensitive information takes longer

with one input than another, you can take steps to standardize the processing

time. You do not want to give an attacker any hints. Next, I suggest threat

modeling. Identify the goals, abilities, and rewards of possible attackers.

Establishing what your adversary considers "success" could inform your system

design. Finally, depending on your resources, you can approximate the

distribution of your secrets.
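Two of the steps above lend themselves to a short sketch: removing a timing hint from a comparison, and approximating how much uncertainty a secret's distribution carries. The function names and sample values are assumptions for illustration. `hmac.compare_digest` is a standard-library call whose running time does not depend on where the inputs differ, although it may still reveal their lengths. The entropy functions are one common way to summarise a distribution, not necessarily the method the author has in mind.

```python
import hmac
import math
from collections import Counter


def constant_time_equal(supplied: bytes, secret: bytes) -> bool:
    """Compare without exiting early at the first mismatching byte."""
    return hmac.compare_digest(supplied, secret)


def shannon_entropy_bits(samples) -> float:
    """Average uncertainty, in bits, of the empirical distribution of samples."""
    counts = Counter(samples)
    total = len(samples)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def min_entropy_bits(samples) -> float:
    """Worst-case uncertainty: based on the single most likely value."""
    counts = Counter(samples)
    p_max = max(counts.values()) / len(samples)
    return -math.log2(p_max)


# Hypothetical observed secrets, e.g. PINs chosen by users.
observed = ["1234", "1234", "0000", "9731"]
average_bits = shannon_entropy_bits(observed)
worst_case_bits = min_entropy_bits(observed)
```

A large gap between the two numbers suggests that a few values dominate, which means an attacker's first guess is worth more than the average figure implies.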

How to explain DevSecOps in plain English

DevSecOps extends the same basic principle to security: It shouldn’t be the

sole responsibility of a group of analysts huddled in a Security Operations

Center (SOC) or a testing team that doesn’t get to touch the code until just

before it gets deployed. That was the dominant model in the software delivery

pipelines of old: Security was a final step, rather than something considered

at every step. And that used to be at least passable, for the most part. As

Red Hat's DevSecOps primer notes, “That wasn’t as problematic when development

cycles lasted months or even years, but those days are over.” Those days are

most definitely over. That final-stage model simply didn’t account for cloud,

containers, Kubernetes, and a wealth of other modern technologies. And

regardless of a particular organization’s technology stack or development

processes, virtually every team is expected to ship faster and more frequently

than in the past. At its core, the role of security is quite simple: Most

systems are built by people, and people make mistakes.

End your meeting with clear decisions and shared commitment

In many cases, participants do the difficult, creative work of diagnosing

issues, analyzing problems, and brainstorming new ideas but don’t reap the

fruits of their labor because they fail to translate insights into action. Or,

with the end of the meeting looming—and team members needing to get to their

next meeting, pick up kids from school, catch a train, and so on—leaders rush

to devise a plan. They press people into commitments they have not had time to

think through—and then can’t (or won’t) keep to. Either of these mistakes can

result in an endless cycle of meetings without solutions, leaving people

feeling frustrated and cynical. Here are four strategies that can help leaders

avoid these detrimental outcomes, and instead foster a sense of clarity and

purpose. ... The key to this strategy: to prepare for an effective close,

leaders should “cue” the group to start narrowing the options, ideas, or

solutions on the table, whether it means going from ten job candidates to

three or selecting the top few messages pitched for a new brand campaign. The

timing for this cue varies based on the desired meeting outcomes, but it is

usually best to start narrowing about halfway through the allotted time.

Quote for the day:

People seldom improve when they have

no other model but themselves. -- Oliver Goldsmith
