AI as a service to solve your business problems? Guess again

Companies seeking to use AI as a differentiating technology to gain business advantages, and not merely because that’s what everyone else is doing, require planning and strategy, and that almost always means a customized solution. In the words of Sepp Hochreiter (co-inventor of LSTM, one of the world’s most famous and successful AI algorithms), “the ideal combination for the best time to market and lowest risk for your AI projects is to slowly build a team and use external proven experts as well. No one can hire the best talent quickly, and even worse, you cannot even judge the quality during hiring but will only find out years later.” That’s a far cry from what most online off-the-shelf AI services offer today. The artificial intelligence technology offered by AIaaS comes in two flavors, and the predominant one is a very basic AI system that claims to provide a “one-size-fits-all” solution for all businesses. Modules offered by AI service providers are meant to be applied, as-is, to anything from organizing a stockroom to optimizing a customer database to preventing anomalies in the production of a multitude of products.

Let’s Redefine “Productivity” for the Hybrid Era

Despite the burnout so many of us feel, the hybrid environment offers an opportunity to create a more sustainable approach to work. Remote and in-person work both have distinct advantages and disadvantages, and rather than expecting the same outcomes from each, we can build on what makes them unique. When in the office, prioritize relationships and collaborative work like brainstorming around a whiteboard. When working from home, encourage people to design their days to include other priorities such as family, fitness, or hobbies. They should take a nap if they need one and step outside between meetings. Brain studies show that even five-minute breaks between remote meetings help people think more clearly and reduce stress. Likewise, watch out for the risks each type of work carries with it. People can avoid the long commutes they used to have by staggering their schedules to avoid traffic. Encourage them to set boundaries at home so they don’t work every hour of the day just because they can. The trick is finding what works for each individual.

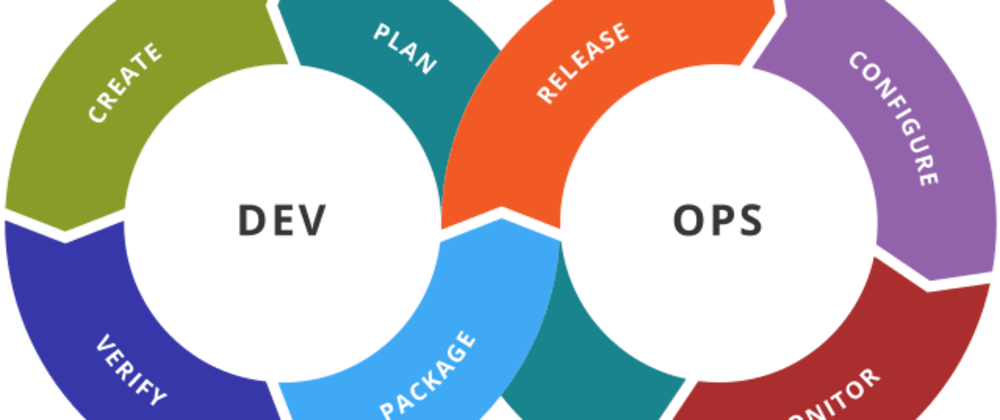

DevOps Is Not Automation

A highly evolved DevOps team isn’t just about automating processes; it’s about eliminating production roadblocks. Automating processes without changing how your teams communicate just moves the roadblocks around. A key first step to truly effective DevOps is to synchronize development and operations teams, which in traditional tech culture are siloed and often at odds. Forte Group points out that development teams are typically incentivized to push things forward (get their deliverables in on time), while quality assurance teams and system administrators are incentivized to minimize disruptions (which often means pushing back deadlines to focus on a quality product). To create a culture where continuous development is possible, these teams have to think of their work as sharing an objective. They also need to communicate frequently and effectively. Finally, DevOps requires a shift from one big deliverable at the end of a long development period to small, incremental deployments that happen regularly and are constantly monitored and adjusted.
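The shift to small, monitored increments can be sketched as a progressive rollout loop. This is a minimal illustration under assumed conditions. It is not Forte Group’s method or any deployment tool’s API. The function `progressive_rollout`, the traffic steps, the `error_rate_at` monitoring hook, and the 1% error threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RolloutResult:
    completed: bool
    steps_taken: List[int]          # traffic percentages actually served
    rolled_back_at: Optional[int]   # step at which monitoring tripped, if any


def progressive_rollout(
    steps: List[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> RolloutResult:
    """Shift traffic to a new version in small increments (hypothetical sketch).

    After each increment, check the observed error rate. If it exceeds the
    threshold, stop and report a rollback instead of continuing.
    """
    served: List[int] = []
    for percent in steps:
        served.append(percent)
        observed = error_rate_at(percent)  # hypothetical monitoring hook
        if observed > max_error_rate:
            return RolloutResult(False, served, percent)
    return RolloutResult(True, served, None)


healthy = progressive_rollout([5, 25, 50, 100], lambda p: 0.002)
print(healthy.completed, healthy.steps_taken)  # True [5, 25, 50, 100]

# Simulate a regression that only shows up under heavier load.
faulty = progressive_rollout([5, 25, 50, 100], lambda p: 0.002 if p < 50 else 0.05)
print(faulty.completed, faulty.rolled_back_at)  # False 50
```

Stopping at the first bad step confines a regression to a small share of traffic. That is the practical payoff of deploying in small increments rather than one big release.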

Who Should Own The Job Of Observability In DevOps?

Observability helps teams answer questions about their systems, including ones nobody anticipated. This applies to troubleshooting as well as to helping users address the unknowns inside today’s complex business systems. With observability, companies can continuously monitor and react to issues or faults. Although observability may seem like the new buzzword in IT, it actually isn’t new at all: the term came about as part of the evolution of monitoring. As organizations began to move toward the cloud and microservice applications, they needed a strategy that let them monitor at scale and also answer questions that were not defined when the monitoring system was implemented. Observability improves the way we collect data and provides the data necessary to drive digital businesses forward. ... Great monitoring tools count for little if people don’t know how to use them properly. Organizations can have too many tools, owned by different teams, which creates a challenge around the selection and ownership of specific tools. Organizations must clearly communicate to developers their roles and responsibilities, and the options available to them, for solving the observability challenge.

Tooling Network Detection & Response for Ransomware

If ransomware is given too much time on the network, it can disrupt day-to-day operations even if it never gains access to your most critical data. By tracking the ransomware’s lateral movement, organizations can see where it moved and, more importantly, which machines were infected. Acting on that information limits the number of infected machines and thus reduces the time to recovery. Tracking lateral movement is only as good as the data being collected. When new machines or new employees connect to the network, organizations should start monitoring those connections right away. Doing so provides the most visibility and enables the organization to track malicious movement from all devices on the network. Additionally, understanding how malicious software is connecting throughout your network requires a network detection and response (NDR) system capable of collecting and analyzing network flow data. By leveraging flow data, organizations can quickly determine where ransomware, and other malware, is moving across the network.
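Flow data is what makes this tracking possible. The sketch below assumes a simplified, hypothetical flow record of `(source, destination, timestamp)`. Real NDR systems work with much richer records, such as NetFlow or IPFIX exports. The sketch replays flows in time order. It flags every host that an already-flagged host contacted after being flagged itself. A flagged host is a candidate for investigation, not proof of infection.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical simplified flow record: (source host, destination host, timestamp in seconds)
Flow = Tuple[str, str, int]


def trace_lateral_movement(flows: Iterable[Flow], patient_zero: str, start: int) -> Dict[str, int]:
    """Map each potentially compromised host to the earliest time it was
    contacted by an already-compromised host.

    Flows are processed in time order, so a connection only spreads the
    compromise if its source was already compromised when it happened.
    """
    compromised = {patient_zero: start}
    for src, dst, ts in sorted(flows, key=lambda f: f[2]):
        if src in compromised and ts >= compromised[src] and dst not in compromised:
            compromised[dst] = ts
    return compromised


flows = [
    ("10.0.0.5", "10.0.0.7", 100),   # infected host reaches a file server
    ("10.0.0.9", "10.0.0.5", 50),    # earlier inbound connection: not spread
    ("10.0.0.7", "10.0.0.12", 160),  # second hop from the file server
    ("10.0.0.12", "10.0.0.9", 90),   # happened before .12 was reached: ignored
]
result = trace_lateral_movement(flows, "10.0.0.5", start=80)
print(result)  # {'10.0.0.5': 80, '10.0.0.7': 100, '10.0.0.12': 160}
```

Respecting time order matters. The connection from `10.0.0.12` at t=90 happened before that host was reached, so it does not incriminate `10.0.0.9`.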

Why do humans learn so much faster than machine learning models?

Strides have been made in enabling ML models to mimic the kind of understanding humans have. A great and frankly magical example is word embeddings. ... Word embeddings are a way to represent text data as numbers, which is necessary if you want to feed text into an ML model. Word embeddings represent each word using, say, 50 features. Words that are close together in this 50-dimensional space are similar in meaning, for example “apple” and “orange”. The challenge we face is how to construct these 50 features. Multiple approaches have been proposed, but in this article we focus on GloVe word embeddings. GloVe word embeddings are derived from a co-occurrence matrix of the words in a corpus. If words occur in the same textual context, GloVe assumes they are similar in meaning. This is already a first hint that word embeddings learn an understanding of the corpus they are trained on. If many fruits are mentioned in a given context, the word embeddings will know that “apple” would fit in that place.
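The co-occurrence intuition can be shown with plain counts. This is not GloVe itself. GloVe fits dense vectors to log co-occurrence statistics. This sketch only counts neighbors within a hypothetical two-word window and compares the raw count vectors with cosine similarity. The toy corpus is invented for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List


def cooccurrence(sentences: List[List[str]], window: int = 2) -> Dict[str, Dict[str, float]]:
    """Count how often each pair of words appears within `window` tokens of each other."""
    counts: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for tokens in sentences:
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[word][tokens[j]] += 1.0
    return counts


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


corpus = [
    "i ate an apple for breakfast".split(),
    "i ate an orange for breakfast".split(),
    "she peeled a juicy orange slowly".split(),
    "she sliced a juicy apple".split(),
    "the server crashed at midnight".split(),
]
m = cooccurrence(corpus)
print(round(cosine(m["apple"], m["orange"]), 2))  # 0.93
print(round(cosine(m["apple"], m["server"]), 2))  # 0.0
```

“apple” and “orange” score high because they share neighbors such as “ate”, “for”, and “juicy”. “server” shares no context with “apple” and scores zero. GloVe’s learned vectors compress this same kind of signal into a few dozen dense features.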

Observability is key to the future of software (and your DevOps career)

Observability platforms enable you to easily figure out what’s happening with every request and to identify the cause of issues fast. Learning the principles of observability and OpenTelemetry will set you apart from the crowd and give you a skill set that will be in increasing demand as more companies perform cloud migrations. From an end-user perspective, “telemetry” can be a scary-sounding word, but in observability it describes the three primary pillars of data: metrics, traces, and logs. This data from your applications and infrastructure is the foundation of any monitoring or observability system. OpenTelemetry is an industry standard for instrumenting applications to produce this telemetry, collecting it across the infrastructure, and emitting it to an observability system. ... As an engineer, the best way to get started with something is to get your hands dirty. As someone who works for a commercial observability vendor, I’d be remiss not to suggest a free trial of Splunk Observability Cloud: no credit card is required, and the integration wizards that walk you through setup actually have you integrate your architecture with OpenTelemetry.
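To make the idea of a trace concrete, here is a toy sketch that uses only the standard library. It models spans that share a trace ID and record their parent. It is not the OpenTelemetry API; the real SDK provides tracers, context propagation, and exporters. The names `span` and `finished_spans` are hypothetical. The ID lengths mirror W3C Trace Context (16-byte trace IDs, 8-byte span IDs). The sketch assumes a single thread.

```python
import time
import uuid
from contextlib import contextmanager
from typing import Dict, Iterator, List, Optional

finished_spans: List[Dict] = []  # hypothetical stand-in for an exporter/backend
_active: List[Dict] = []         # stack of currently open spans (single-threaded)


@contextmanager
def span(name: str) -> Iterator[Dict]:
    """Open a span; nested spans inherit the trace ID and point at their parent."""
    parent: Optional[Dict] = _active[-1] if _active else None
    record = {
        "name": name,
        "trace_id": parent["trace_id"] if parent else uuid.uuid4().hex,  # 32 hex chars
        "span_id": uuid.uuid4().hex[:16],                                # 16 hex chars
        "parent_id": parent["span_id"] if parent else None,
        "start": time.monotonic(),
    }
    _active.append(record)
    try:
        yield record
    finally:
        _active.pop()
        record["duration_s"] = time.monotonic() - record["start"]
        finished_spans.append(record)


with span("GET /checkout"):
    with span("query inventory"):
        pass
    with span("charge card"):
        pass

print([s["name"] for s in finished_spans])  # ['query inventory', 'charge card', 'GET /checkout']
```

Every span carries the same trace ID, so a backend can reassemble one request from spans emitted by different services. In a real system, that ID travels between services in request headers.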

Service Mesh Ultimate Guide - Second Edition: Next Generation Microservices Development

Broadly speaking, the data plane “does the work” and is responsible for “conditionally translating, forwarding, and observing every network packet that flows to and from a [network endpoint].” In modern systems, the data plane is typically implemented as a proxy (such as Envoy, HAProxy, or MOSN) that runs out-of-process alongside each service as a “sidecar.” Linkerd uses a micro-proxy approach that’s optimized for the service mesh sidecar use case. A control plane “supervises the work”: it takes all the individual instances of the data plane (a set of isolated, stateless sidecar proxies) and turns them into a distributed system. The control plane doesn’t touch any packets or requests in the system; instead, it allows a human operator to provide policy and configuration for all of the running data planes in the mesh. The control plane also enables the data plane telemetry to be collected and centralized, ready for consumption by an operator.
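The division of labor between the two planes can be modeled in a few classes. This is a conceptual sketch with hypothetical names (`SidecarProxy`, `ControlPlane`, and a caller allow-list standing in for “policy”). It is not how Envoy, Linkerd, or any real mesh is implemented; real control planes push configuration over APIs such as Envoy’s xDS. Only the proxy ever sees a request. The control plane only pushes configuration and gathers telemetry.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class SidecarProxy:
    """Data plane (hypothetical): sits next to one service and handles every request to it."""
    service: str
    handler: Callable[[str], str]
    allowed_callers: Set[str] = field(default_factory=set)
    stats: Dict[str, int] = field(default_factory=lambda: {"allowed": 0, "denied": 0})

    def apply_config(self, allowed_callers: Set[str]) -> None:
        self.allowed_callers = set(allowed_callers)

    def handle(self, caller: str, request: str) -> str:
        # Enforce the pushed policy and record telemetry for every request.
        if caller not in self.allowed_callers:
            self.stats["denied"] += 1
            return "403 denied by mesh policy"
        self.stats["allowed"] += 1
        return self.handler(request)


@dataclass
class ControlPlane:
    """Control plane (hypothetical): never sees requests; pushes config, gathers telemetry."""
    proxies: List[SidecarProxy] = field(default_factory=list)

    def register(self, proxy: SidecarProxy) -> None:
        self.proxies.append(proxy)

    def push_policy(self, policy: Dict[str, Set[str]]) -> None:
        for proxy in self.proxies:
            proxy.apply_config(policy.get(proxy.service, set()))

    def collect_telemetry(self) -> Dict[str, Dict[str, int]]:
        return {p.service: dict(p.stats) for p in self.proxies}


orders = SidecarProxy("orders", handler=lambda req: f"orders handled {req}")
mesh = ControlPlane()
mesh.register(orders)
mesh.push_policy({"orders": {"frontend"}})

print(orders.handle("frontend", "GET /orders/42"))   # orders handled GET /orders/42
print(orders.handle("batch-job", "GET /orders/42"))  # 403 denied by mesh policy
print(mesh.collect_telemetry())                      # {'orders': {'allowed': 1, 'denied': 1}}
```

Each proxy is isolated and keeps only its own config and counters. The control plane is what turns the proxies into one manageable system.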

‘Azurescape’ Kubernetes Attack Allows Cross-Container Cloud Compromise

In the multitenant architecture of Azure Container Instances (ACI), each customer’s container is hosted in a Kubernetes pod on a dedicated, single-tenant node virtual machine (VM), according to the analysis, and the boundaries between customers are enforced by this node-per-tenant structure. “Since practically anyone can deploy a container to the platform, ACI must ensure that malicious containers cannot disrupt, leak information, execute code or otherwise affect other customers’ containers,” the researchers explained. “These are often called cross-account or cross-tenant attacks.” The Azurescape version of such an attack has two prongs: first, malicious Azure customers/adversaries must escape their container; then, they must acquire a privileged Kubernetes service account token that can be used to take over the Kubernetes API server. The API server provides the frontend for a cluster’s shared state, through which all of the nodes interact, and it’s responsible for processing commands within each node by interacting with kubelets. Each node has its own kubelet, which is the primary “node agent” that handles all tasks for that specific node.

The impact of ransomware on cyber insurance driving the need for broader cybersecurity knowledge

Effective security operations are critical to minimizing both the likelihood and the impact of a cyberattack. Disparate tools will not fix the effectiveness problem facing organizations across the globe, nor will they stand up to risk assessments and external insurer requirements. An effective security operations strategy gives risk management leaders the foundation to confidently negotiate with insurance providers and set a long-term cybersecurity agenda that protects the entire business. For insurance providers, there is an opportunity to partner with security operations experts to expand their cybersecurity expertise, allowing for more precise and accurate calculations for policyholders. Cyber insurers and security operations professionals must break down silos and recognize that, together, they have a unique opportunity to coordinate effectively and better protect businesses. ... It’s paramount that insurance providers expand their knowledge of cybersecurity. The providers that do will be able to take full control over their policies.

Quote for the day:

“It is more productive to convert an opportunity into results than to solve a problem – which only restores the equilibrium of yesterday.” -- Peter Drucker
