The importance of today’s digital practitioner

The shift we are undergoing, from the physical to the digital, has led companies to embed technology into the products they sell. As a result, digital is moving out of a purely IT function and into a world where digital practitioners are embedded not only in every part of the business but also in the products that the vast majority of companies take to market. This includes companies that have historically been very physical, such as makers of aircraft engines or operators of oil refineries, along with any number of sectors where physical products are becoming digital. These products now generate much more information to consume, so more technology is integrated into what companies sell. A changing world requires new job roles that closely track this digital evolution, which explains the emergence and importance of digital practitioners. Speed and agility matter too: technology adoption now happens at a much faster rate, since most organisations operate digitally.

The Top 7 Technology Trends for 2019

Naturally, blockchain is part of my list of technologies for 2019. It has been on my list in one form or another since 2015. The difference from previous years is that in 2019 we will see the first real enterprise applications in use. I am not talking about the various blockchain startups developing decentralised applications (dApps), nor am I talking about proofs of concept. In 2019, I think we will see large corporations using blockchain to improve industry collaboration. These enterprise applications will predominantly use private blockchains, in which new actors have to be approved by existing participants, enabling more flexibility and efficiency when validating transactions. The organisations most likely to keep a shared ledger for settling transactions are in financial services and supply chains, and for the latter especially I see tremendous opportunities in 2019. In 2019, blockchain will become the gold standard for the supply chain, simply because it offers clear benefits to participants in a network who have to trust each other to make it work.
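To make the idea of a private blockchain more concrete, here is a minimal Python sketch of a permissioned, hash-linked ledger. It is a toy built on stated assumptions, not a description of how any enterprise platform works: the majority-vote admission rule, the `PermissionedLedger` class and its method names are all hypothetical, and there is no networking, consensus protocol or digital signing. What it does show are the two properties the paragraph relies on: only approved participants can write, and each block's SHA-256 hash covers the previous block's hash, so tampering with history is detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List, Set

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous block's hash plus a canonical JSON encoding of the payload."""
    data = prev_hash + json.dumps(payload, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


@dataclass
class PermissionedLedger:
    """Hypothetical toy ledger: only members may write; joining needs a majority vote."""
    members: Set[str]
    blocks: List[dict] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.members = set(self.members)  # copy so the caller's set is not mutated

    def _append(self, payload: dict) -> None:
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        self.blocks.append({"prev": prev, "payload": payload, "hash": block_hash(prev, payload)})

    def admit(self, candidate: str, approvals: Set[str]) -> bool:
        """Admit a new participant if a strict majority of existing members approve.

        Votes from non-members are ignored. The majority rule is an assumption
        made for this sketch; real networks define their own governance.
        """
        votes = approvals & self.members
        if 2 * len(votes) <= len(self.members):
            return False
        self.members.add(candidate)
        self._append({"type": "admit", "member": candidate, "approved_by": sorted(votes)})
        return True

    def record(self, sender: str, transaction: dict) -> bool:
        """Append a transaction to the shared ledger; writes from non-members are rejected."""
        if sender not in self.members:
            return False
        self._append({"type": "tx", "sender": sender, "tx": transaction})
        return True

    def verify(self) -> bool:
        """Recompute every link; editing any earlier block breaks the chain."""
        prev = GENESIS
        for block in self.blocks:
            if block["prev"] != prev or block["hash"] != block_hash(prev, block["payload"]):
                return False
            prev = block["hash"]
        return True
```

For example, a ledger started with the (illustrative) members `{"shipper", "carrier", "port"}` admits `"customs"` when two of the three approve, then accepts `record("carrier", {...})` but rejects a write from a non-member. Editing any stored payload afterwards makes `verify()` return `False`.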

Phishing at centre of cyber attack on Ukraine infrastructure

According to Carullo, phishing is one of the major attack vectors that cyber criminals and other attackers use to target critical infrastructure. “This was demonstrated in our recent study around GreyEnergy, another piece of malware which was targeting critical infrastructure in Ukraine via phishing,” he said. “Today’s determined attackers are showing no signs of slowing down, so teaching staff to ‘think before they click’ is key to defending against these types of attacks.” Defending critical national infrastructure (CNI) from cyber attacks is not only about resisting attacks but also about being resilient enough to recover quickly, according to Mike Gillespie, managing director and co-founder of security consultancy Advent IM. An unwillingness among UK political and CNI business leaders to accept that cyber attacks are a real threat to critical national infrastructure has resulted in a lack of resilience.

Powerful, extensible code with Tagless Final in … Java!

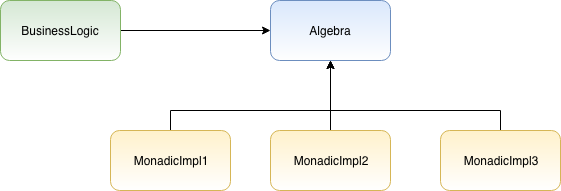

Tagless final is all the rage in the Scala community at the moment. It is a technique that lets you define the general structure of the code to be executed, so that different implementations (or interpreters) can be configured to inject effects such as optionality, asynchronicity, parallelism, non-determinism and error handling, depending on our needs. Need to simplify a highly concurrent piece of code for a test case so that you can debug a nasty production issue? With tagless final, no problem! ... The nice thing about monads is that they all implement the same general suite of methods (of, map, flatMap and so on), which behave in predictable ways. This means that if we define our programs to work with monads, and define our algebra generically so that the concrete monadic type is pluggable, we can reuse the same algebra and ‘program’ definition with many different monadic data structures. In fact, we aren’t even constrained to using monads.
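The article works in Java and Scala; the sketch below renders the same idea in Python under one stated compromise. Python cannot express higher-kinded types, so the monad is passed explicitly as an object with `pure` (the role of `of`) and `flat_map` (the role of `flatMap`), and effectful values are typed as `Any`. The `PriceAlgebra` algebra, the `total_price` program and the three interpreters are hypothetical names chosen for illustration. The point is that `total_price` is written once and then run unchanged with no effect, with optionality and with non-determinism.

```python
from typing import Any, Callable, Dict, List, Protocol


class Monad(Protocol):
    """Stand-in for Monad[F]; without higher-kinded types, F-values are typed as Any."""
    def pure(self, value: Any) -> Any: ...
    def flat_map(self, fa: Any, f: Callable[[Any], Any]) -> Any: ...


class PriceAlgebra(Protocol):
    """Hypothetical algebra: look up an item's price, wrapped in some effect F."""
    def price_of(self, item: str) -> Any: ...


def total_price(m: Monad, alg: PriceAlgebra, a: str, b: str) -> Any:
    """The 'program': written against the algebra and Monad, never a concrete effect."""
    return m.flat_map(
        alg.price_of(a),
        lambda pa: m.flat_map(
            alg.price_of(b),
            lambda pb: m.pure(pa + pb),
        ),
    )


# Interpreter 1: no effect (identity). F[A] is just A.
class IdentityMonad:
    def pure(self, value):
        return value

    def flat_map(self, fa, f):
        return f(fa)


class FixedPrices:
    def __init__(self, table: Dict[str, int]) -> None:
        self.table = table

    def price_of(self, item: str) -> int:
        return self.table[item]


# Interpreter 2: optionality. None means "absent" and short-circuits the program.
class OptionMonad:
    def pure(self, value):
        return value

    def flat_map(self, fa, f):
        return None if fa is None else f(fa)


class MaybePrices:
    def __init__(self, table: Dict[str, int]) -> None:
        self.table = table

    def price_of(self, item: str):
        return self.table.get(item)  # None when the item is unknown


# Interpreter 3: non-determinism. F[A] is a list of possible A values.
class ListMonad:
    def pure(self, value):
        return [value]

    def flat_map(self, fa, f):
        return [b for a in fa for b in f(a)]


class SupplierQuotes:
    def __init__(self, quotes: Dict[str, List[int]]) -> None:
        self.quotes = quotes

    def price_of(self, item: str) -> List[int]:
        return self.quotes.get(item, [])
```

With `ListMonad()` and `SupplierQuotes({"apple": [3, 4], "pear": [5]})`, `total_price(..., "apple", "pear")` returns every possible total, `[8, 9]`; with `OptionMonad()` and `MaybePrices({"apple": 3})` the same program returns `None` because the pear price is missing. Swapping the interpreter, not the program, is what changes the behaviour.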

IoT roundup: Retrofitting vehicle tracking, plus a new IoT standard

With millions of devices communicating different kinds of information via different kinds of networks, the IoT is crying out for some standardization to help unlock its true potential as a transformative technology. Unfortunately, there is also a profusion of competing standards, whether they come from industry umbrella groups, technical committees or vendors promoting their own connectivity frameworks as “standards.” Nevertheless, the International Organization for Standardization, a pan-industrial standards-setting body that’s been around in one form or another since the 1920s, has made its considerable presence felt in the world of IoT by ratifying the Open Connectivity Foundation’s OCF 1.0 specification as an international standard. The standard mandates public-key-based security, cloud management and interoperability for IoT systems in an attempt to create a useful, open framework for IoT.

Data protection, backup and replication in the age of the cloud

For data protection, we have to consider on-premises applications as well as those running on cloud software platforms like Office 365 or Salesforce.com. Public cloud services like these do not back up data by default, other than to recover from system failure, so getting emails back after deletion is the data owner’s responsibility and must be included in a data protection plan. With so much infrastructure accessible over the public internet, IT organisations also need to think about DLP: data loss prevention or, probably more accurately, data leakage protection. We’ll discuss this later when talking about security. Data protection aims to meet the needs of the business by setting service level objectives for data protection and restore. In other words, recovery objectives drive protection goals. The two main measures are RTO (Recovery Time Objective) and RPO (Recovery Point Objective). RTO defines how quickly data and applications must be restored to operation after a failure, while RPO defines how much data loss is tolerable, usually expressed as the time between the last recoverable copy and the failure.
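Because recovery objectives drive protection goals, a protection plan can be checked against them mechanically. The Python sketch below does that under a deliberately simple assumption: worst-case data loss equals the interval between recovery points, ignoring backup job duration and replication lag, and the restore time is an estimated or tested figure you supply. `RecoveryObjectives`, `ProtectionPlan` and `gaps` are hypothetical names, not part of any backup product.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import List


@dataclass(frozen=True)
class RecoveryObjectives:
    rpo: timedelta  # maximum tolerable data loss, measured back from the moment of failure
    rto: timedelta  # maximum tolerable time to get data and applications running again


@dataclass(frozen=True)
class ProtectionPlan:
    backup_interval: timedelta   # time between successive recovery points (backups, snapshots)
    expected_restore: timedelta  # estimated or tested time to restore from a recovery point


def worst_case_data_loss(plan: ProtectionPlan) -> timedelta:
    # Simplifying assumption: a failure just before the next backup loses everything
    # written since the previous one; backup duration and replication lag are ignored.
    return plan.backup_interval


def gaps(plan: ProtectionPlan, goals: RecoveryObjectives) -> List[str]:
    """Return the objectives this plan fails to meet; an empty list means it meets both."""
    problems = []
    loss = worst_case_data_loss(plan)
    if loss > goals.rpo:
        problems.append(f"RPO missed: up to {loss} of data could be lost (target {goals.rpo})")
    if plan.expected_restore > goals.rto:
        problems.append(f"RTO missed: restore takes {plan.expected_restore} (target {goals.rto})")
    return problems


# Illustrative figures only: a 1-hour RPO and a 4-hour RTO.
goals = RecoveryObjectives(rpo=timedelta(hours=1), rto=timedelta(hours=4))
nightly = ProtectionPlan(backup_interval=timedelta(hours=24), expected_restore=timedelta(hours=2))
half_hourly = ProtectionPlan(backup_interval=timedelta(minutes=30), expected_restore=timedelta(hours=2))
for plan in (nightly, half_hourly):
    print(plan, gaps(plan, goals))
```

With a one-hour RPO, a nightly backup fails the check however fast the restore is, while a 30-minute interval passes. That is the sense in which RPO sets how often recovery points must be taken and RTO sets how fast the restore path must be.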

How should CIOs manage data at the edge?

Because of this, it is imperative that CIOs build comprehensive security into any edge implementation proposal from the start. If security is bolted on after the business goals and ambitions of edge have been set, there will undoubtedly be trouble ahead. The need for processing at the edge comes from the sheer amount of data generated as our connected world expands over the coming years: according to Data Age 2025, a report sponsored by Seagate and conducted by IDC, 90% of the data created in 2025 will require security protection. More data, of course, means more vulnerability, which is why security, with intelligent data storage and data-at-rest encryption at its foundation, has to be at the heart of any business’s edge computing plans. Couple this with the increased physical concerns (more locations mean more sites to keep secure), and it’s clear that this is a complex challenge that must be managed methodically. Implementing edge is all about driving business growth: the new customer experiences and revenue streams that come with it will mean that your business expands and becomes more complex.

Defining RegTech: Why it Matters

As with many cases of disruption in tech, RegTech takes advantage of newer technologies. To provide scalability and convenient access, most RegTech offerings are cloud-based, which makes it easier to connect new customers and gives them an easy-to-access portal. RegTech also relies on artificial intelligence for a number of tasks, such as fraud detection. Machine learning in particular offers far better results than previous technologies, and RegTech companies can use data from their clients to improve their algorithms. RegTech is also emerging in the era of Big Data: thanks to modern tools and techniques for analyzing Big Data, RegTech companies can tap into large data sets, make sense of them and pass this value on to their clients. None of this is entirely new, however. Companies have long used software to aid in compliance, and RegTech startups weren’t the first to incorporate artificial intelligence into the field. The emergence of the term RegTech does, however, coincide with a time when companies are more inclined than ever to rely on cloud-based offerings.

10 predictions for the data center and the cloud in 2019

Virtualization is nice, but it’s resource-heavy. Each virtual machine requires a full instance of the operating system, and that can limit the number of VMs on a server, even one with a lot of memory. ... A container can be as small as 10MB, versus a few GB of memory for a full virtual machine, and serverless, where you run a single function app, is even smaller. As apps go from monolithic to smaller, modular pieces, containers and serverless will become more appealing, both in the cloud and on premises. Key to the success of containers and serverless is that both were designed with cloud and on-premises systems in mind, allowing easy migration between the two, which will add to their appeal. ... Bare metal means no software from the provider. You rent CPUs, memory capacity, and storage; after that, you provide your own software stack, all of it. So far, IBM has been the biggest proponent of bare-metal hosting, followed by Oracle, and with good reason. Bare metal is ideal for what’s called “lift and shift,” where you take your compute environment from the data center to a cloud provider unchanged: just put the OS, apps, and data in someone else’s data center.

Why interest in IT4IT is on the rise

Micro Focus distinguished technologist Lars Rossen, who wrote the original IT4IT specification, recognized that to manage the delivery of services in a digital enterprise, you need to have an operating model and a clear, end-to-end understanding of all the capabilities and systems surrounding it. That includes understanding everything about the key information artifacts you manage, such as service models, incidents, subscriptions, and plans. Prior to IT4IT's introduction (and particularly the release of its second version in 2015), there was no prescription for how to do this. "There were various frameworks like ITIL that described some of the processes you need to put in place, but none of them were comprehensive, prescriptive, and vendor-agnostic," Rossen said. "We created IT4IT to address this." The first version of IT4IT was constructed in collaboration with some large customers and consultancies, including Shell, PwC, HPE, AT&T, and Accenture, Rossen explained.

Quote for the day:

"Leadership is a process of mutual stimulation which by the interplay of individual differences controls human energy in the pursuit of a common goal." -- P. Pigors
