The fintech revolution: How digital disruption is reshaping the future of banking

Several pivotal trends have converged to accelerate fintech adoption. The JAM trinity of Jan Dhan, Aadhaar, and Mobile became the cornerstone of India’s fintech revolution, enabling seamless, paperless onboarding and verification for financial services. Aadhaar-enabled biometric authentication, for instance, has transformed how identity verification is conducted, making the process entirely mobile-based. Perhaps the most profound disruptor is the Unified Payments Interface (UPI). Introduced by the Indian government as part of its push for a cashless economy, UPI has redefined peer-to-peer (P2P) and person-to-merchant (P2M) transactions. As of September 2024, UPI was handling a staggering 15 billion transactions per month, with monthly transaction values surpassing INR 20.6 trillion, marking a 16x increase in volume and a 13x increase in value over five years. UPI’s convenience and speed have made it the default payment mode for millions, further marginalising the role of traditional banking infrastructure.
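The growth multiples above are easier to read as annual rates. The short sketch below turns them into an implied compound annual growth rate and an average ticket size. It assumes that growth compounded evenly over the five years and that the volume and value figures describe the same month; the constant and function names are illustrative only.

```python
"""Back-of-envelope arithmetic on the UPI figures quoted above (September 2024)."""

MONTHLY_TXNS = 15e9          # ~15 billion transactions per month
MONTHLY_VALUE_INR = 20.6e12  # ~INR 20.6 trillion per month
VOLUME_MULTIPLE = 16         # 16x growth in volume over five years
VALUE_MULTIPLE = 13          # 13x growth in value over five years
YEARS = 5


def cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate implied by `multiple`x growth over `years`."""
    return multiple ** (1 / years) - 1


def average_ticket(value: float, count: float) -> float:
    """Average value per transaction."""
    return value / count


if __name__ == "__main__":
    print(f"Implied volume CAGR: {cagr(VOLUME_MULTIPLE, YEARS):.1%}")
    print(f"Implied value CAGR:  {cagr(VALUE_MULTIPLE, YEARS):.1%}")
    print(f"Average ticket: INR {average_ticket(MONTHLY_VALUE_INR, MONTHLY_TXNS):,.0f}")
```

Under these assumptions, volume grew roughly 74% a year and value roughly 67% a year. Because value grew more slowly than volume (13/16 ≈ 0.81), the average transaction today, around INR 1,373, is about a fifth smaller than it was five years earlier, which is consistent with UPI spreading into everyday, small-ticket payments.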

At the same time, blockchain technology is emerging as a force that could dramatically reduce bank operational costs. Decentralised, secure, and transparent, blockchain allows financial institutions to overhaul their legacy systems.
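The "secure and transparent" property mostly comes from hash chaining: every record commits to the hash of the record before it, so quietly editing history invalidates everything that follows. The sketch below is a minimal single-node illustration under that assumption. It deliberately leaves out decentralisation and consensus, and the `Ledger` class and its field names are hypothetical rather than any bank's or platform's design.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Append-only ledger where each block commits to the hash of its predecessor."""
    blocks: list = field(default_factory=list)

    @staticmethod
    def _hash(payload: dict, prev_hash: str) -> str:
        # Canonical JSON (sorted keys) so identical content always yields the same digest.
        body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        # The first block points at an all-zero "genesis" hash.
        prev_hash = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        block_hash = self._hash(payload, prev_hash)
        self.blocks.append({"payload": payload, "prev": prev_hash, "hash": block_hash})
        return block_hash

    def verify(self) -> bool:
        """Recompute every hash; an edit to any earlier block breaks the chain."""
        prev_hash = "0" * 64
        for block in self.blocks:
            if block["prev"] != prev_hash:
                return False
            if self._hash(block["payload"], prev_hash) != block["hash"]:
                return False
            prev_hash = block["hash"]
        return True
```

For example, after appending two transfers, `verify()` returns `True`. If the amount in the first block is then changed in place, `verify()` returns `False`, because the recomputed hash no longer matches the stored one. In a real blockchain, many independent parties would hold copies of the chain, which makes such an edit detectable by everyone.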

Bridging the AI Skills Gap: Top Strategies for IT Teams in 2025

Daly explained that practical applications are key to learning, and creating cross-functional teams that include AI experts can facilitate knowledge sharing and the practical application of new skills. "To prepare for 2025 and beyond, it's crucial to integrate AI and ML into the core business strategy beyond R&D investment or technical roles, but also into broader organizational talent development," she said. "This ensures all employees understand the opportunity [and] potential impact, and are trained on responsible use." ...

Kayne McGladrey, IEEE senior member and field CISO at Hyperproof, said AI ethics skills are important because they ensure that AI systems are developed and used responsibly, aligning with ethical standards and societal values. "These skills help in identifying and mitigating biases, ensuring transparency, and maintaining accountability in AI operations," he explained. ... Scott Wheeler, cloud practice lead at Asperitas, said building a culture of innovation and continual learning is the first step in closing a skills gap, particularly for newer technologies like AI. "Provide access to learning resources, such as on-demand platforms like Coursera, Udemy, Whizlabs," he suggested. "Embed learning into IT projects by allocating time in the project schedule and monitor and adjust the various programs based on what works or doesn't work for your organization."

What Makes the Ideal Platform Engineer?

Platform engineers decide on a platform, consisting of many different tools, workflows and capabilities, that DevOps teams, developers and others in the business can use to build software and monitor its development. They base these decisions on what will work best for these users. ... The old adage that every business is unique applies here; platform engineering doesn’t look the same in every organization, nor do the platforms or portals that are used. But there are some key responsibilities that platform engineers will often have and skills that they require. Noam Brendel is a DevOps team lead at Checkmarx, an application security firm that has embraced platform engineering. He believes a platform engineer’s focus should be on improving developer excellence. “The perfect platform engineer helps developers by building systems that eliminate bottlenecks and increase collaboration,” he said. ... “Platform engineers need to have a strong understanding of how everything is connected and how the platform is built behind the scenes,” explained Zohar Einy, CEO of Port, a provider of open internal developer portals. He emphasized the importance of knowing how the company’s technical stack is structured and which development tools are used.

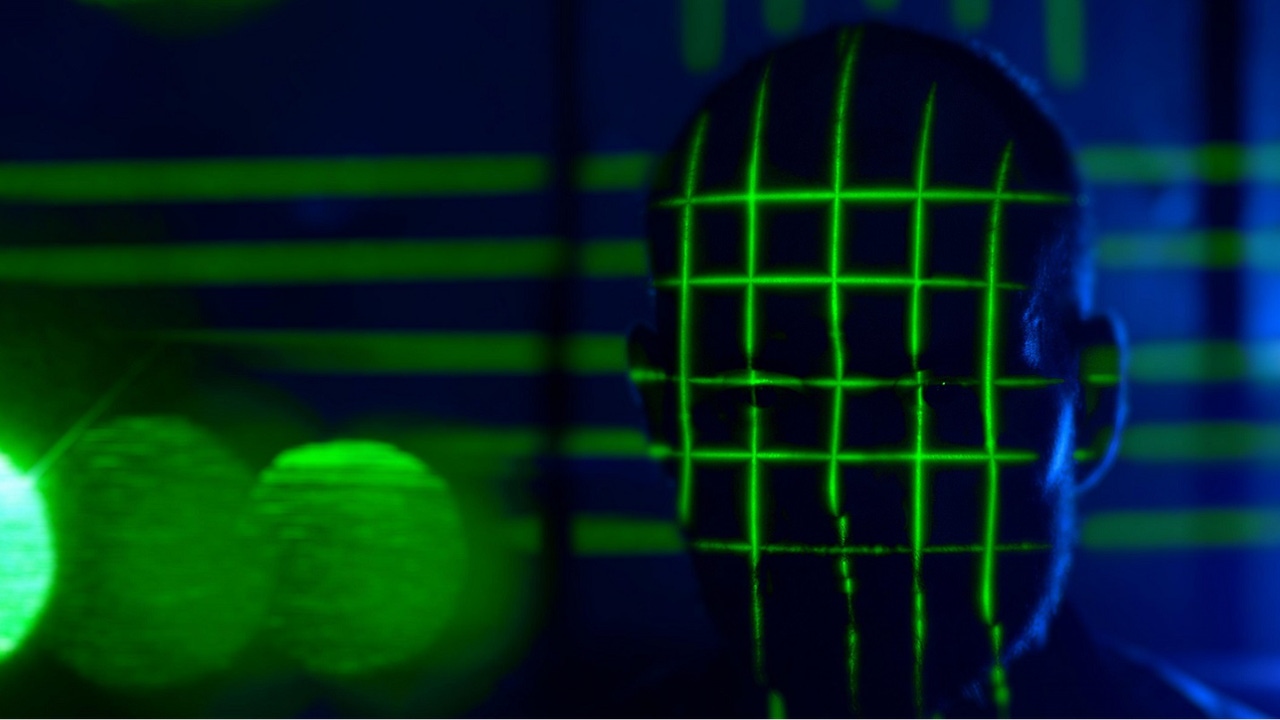

Biometrics and AI Knock Out Passwords in the Security Battle

Biometrics and AI-powered authentication have moved beyond concept to successful application. For instance, HSBC's Voice ID technology analyzes over 100 characteristics of an individual's voice, keeps a sample of the customer's voice on file, and compares it with the caller's voice. ... The success of implementing biometrics and AI in existing systems depends on organizations following best practices. Leaders can assess organizational needs by conducting a security audit to identify vulnerabilities that biometrics and AI can address. This information is then used to create an implementation roadmap that considers budget, resources, and timelines. Involving appropriate staff in these discussions is essential so that all stakeholders understand the factors behind each decision. Selecting the right technology calls for careful vendor evaluation and identification of solutions that align with the organization's requirements and compliance obligations. Once these decisions are made, it is prudent to begin integration with pilot programs: small-scale deployments test effectiveness and address unforeseen issues before large-scale implementation.
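The Voice ID description above boils down to comparing a stored voice sample with a live one. The sketch below illustrates that comparison step under simplifying assumptions. Each voice is taken to be already reduced to a numeric feature vector (the source mentions over 100 characteristics; the example uses three), and the caller is accepted if the cosine similarity to the enrolled vector clears a threshold. The 0.85 threshold and the function names are hypothetical. Production systems use learned embeddings, liveness detection, and carefully calibrated thresholds, none of which is shown here.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty or silent sample cannot match anything
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled: Sequence[float], caller: Sequence[float],
                   threshold: float = 0.85) -> bool:
    """Accept the caller if their features are close enough to the enrolled sample.

    The threshold is an illustrative assumption; real systems tune it to balance
    false accepts against false rejects.
    """
    return cosine_similarity(enrolled, caller) >= threshold
```

With `enrolled = [0.9, 0.1, 0.4]`, a caller vector of `[0.88, 0.12, 0.41]` passes and `[0.1, 0.9, 0.2]` is rejected. Raising the threshold makes impostor acceptance less likely, at the cost of turning away more genuine callers.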

CISA, Five Eyes issue hardening guidance for communications infrastructure

The joint guidance is a direct response to the breach of telecommunications infrastructure carried out by the Chinese government-linked hacking collective known as Salt Typhoon. ... “Although tailored to network defenders and engineers of communications infrastructure, this guide may also apply to organizations with on-premises enterprise equipment,” the guidance states. “The authoring agencies encourage telecommunications and other critical infrastructure organizations to apply the best practices in this guide.” “As of this release date,” the guidance says, “identified exploitations or compromises associated with these threat actors’ activity align with existing weaknesses associated with victim infrastructure; no novel activity has been observed. Patching vulnerable devices and services, as well as generally securing environments, will reduce opportunities for intrusion and mitigate the actors’ activity.” Visibility, a cornerstone of network defense for monitoring, detecting, and understanding activity within an organization's infrastructure, is pivotal in identifying potential threats, vulnerabilities, and anomalous behaviors before they escalate into significant security incidents.
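One simple way visibility turns into detection is to baseline each device's normal behavior and flag large deviations. The sketch below illustrates this idea using per-host connection counts and a z-score threshold. It is not taken from the CISA guidance. The host names, the metric, and the 3.0 cutoff are hypothetical, and a real deployment would draw on flow logs or a SIEM rather than in-memory dictionaries.

```python
import statistics
from typing import Dict, List


def flag_anomalies(history: Dict[str, List[int]], current: Dict[str, int],
                   z_threshold: float = 3.0) -> List[str]:
    """Return hosts whose current count deviates from their own baseline
    by more than `z_threshold` standard deviations."""
    flagged = []
    for host, count in current.items():
        baseline = history.get(host, [])
        if len(baseline) < 2:
            # No usable baseline (e.g. a device never seen before): surface it for review.
            flagged.append(host)
            continue
        mean = statistics.mean(baseline)
        stdev = statistics.stdev(baseline)
        if stdev == 0:
            # Perfectly flat history: any change at all is unusual.
            if count != mean:
                flagged.append(host)
            continue
        if abs(count - mean) / stdev > z_threshold:
            flagged.append(host)
    return sorted(flagged)
```

For example, if `router-a` usually makes about 100 connections and `router-b` about 40, a reading of 104 for `router-a` passes, while 400 for `router-b` is flagged. A previously unseen `switch-c` is also flagged. Treating unknown devices as suspicious reflects the point that you cannot defend what you cannot see.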

Tackling software vulnerabilities with smarter developer strategies

No two developers solve a problem or build a software product the same way. Some arrive at their career through formal college education, while others are self-taught with minimal mentorship. Styles and experiences vary wildly. Equally, we should expect them to approach secure coding practices and guidelines with a similar diversity of thought. Organizations must account for this diversity in their secure development practices: training, guidelines, and standards. These may be foreign concepts even to a highly proficient developer, and we need to give our developers the time and space to learn, ask questions, and build secure coding proficiency. ... Best-in-class organizations have established ‘security champions’ programs, in which highly skilled developers are empowered to act as a team-level resource for secure coding knowledge and best practice so that institutional knowledge spreads. This is particularly important in remote environments, where security teams may be unfamiliar or untrusted faces and internal development team leaders become all the more important in setting the tone and direction for adopting a security mindset and applying security principles.

Developing an AI platform for enhanced manufacturing efficiency

To power our AI Platform, we opted for a hybrid architecture that combines our on-premises infrastructure with cloud computing. The first objective was to promote agile development. The hybrid cloud environment, coupled with a microservices-based architecture and agile development methodologies, allowed us to rapidly iterate and deploy new features while maintaining robust security. The move to a microservices architecture arose from the need to respond flexibly to changes in services and libraries. As part of this shift, our team also adopted the Scrum development method, in which we release features incrementally in short cycles of a few weeks, ultimately resulting in streamlined workflows. ... The second objective was to use resources effectively. The manufacturing floor, where AI models are created, now also faces strict cost efficiency requirements. With a hybrid cloud approach, we can use on-premises resources during normal operations and scale to the cloud during peak demand, reducing GPU usage costs and optimizing performance. This also allows us to adapt flexibly to an expected future increase in the number of AI Platform users.
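The "on-premises first, cloud at peak" policy described above is often called cloud bursting. The sketch below shows it as a greedy first-fit placement. Jobs are considered in the order given, which stands in for priority. A job runs on-premises if enough local GPUs are still free; otherwise it overflows to the cloud. The `Job` type, `place_jobs`, and the example job names are hypothetical, not the author's actual scheduler, and the sketch ignores data locality, job duration, and cloud pricing.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    gpus: int  # GPUs the job needs for its whole run


def place_jobs(jobs: List[Job], on_prem_gpus: int) -> Tuple[List[str], List[str]]:
    """Fill on-premises GPU capacity first; burst the remainder to the cloud.

    Returns (jobs placed on-premises, jobs sent to the cloud).
    """
    on_prem: List[str] = []
    cloud: List[str] = []
    free = on_prem_gpus
    for job in jobs:
        if job.gpus <= free:
            on_prem.append(job.name)
            free -= job.gpus
        else:
            cloud.append(job.name)  # peak demand: pay for cloud GPUs only now
    return on_prem, cloud
```

With 8 local GPUs and jobs needing 4, 2, 4, and 1 GPUs, the first two jobs and the 1-GPU job stay on-premises, and only the second 4-GPU job bursts to the cloud. The cost saving comes from the fact that cloud GPUs are only paid for when local capacity is exhausted.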

Privacy is a human right, and blockchain is critical to securing it

While blockchain offers decentralized and secure transactions, the lack of privacy on public blockchains can expose users to risks ranging from theft to persecution. In October, details emerged of one of the largest in-person crypto thefts in US history, after kidnappers targeted a DC man they were able to identify as an early crypto investor. However, despite the case for on-chain privacy, it has proven difficult to advance any real-world implementations. Along with the regulatory challenges faced by segments such as privacy coins and mixers, certain high-profile missteps have done little to advance the case for on-chain privacy. Worldcoin, Sam Altman’s much-touted crypto identity project that collected biometric data from users, has also failed to live up to expectations due, perversely, to regulators' concerns about breaches of users’ data privacy. In August, the government of Kenya suspended Worldcoin’s operations following concerns about data security and consent practices. In October, the company announced it was pivoting away from the EU and towards Asian and Latin American markets, following regulatory wrangling over the European GDPR rules.

Transforming fragmented legacy controls at large banks

You’re not just talking about replacing certain components of a process with technology. There’s also a cost to this change, and it’s not always at the top of the list when budgets come around. Usually, spend goes on areas that are revenue-generating or more in the innovation space. It can be somewhat of a hard sell to the higher-ups as to why they should spend money to change something, and a lot of organisations aren’t great at articulating the business case for it. ... If you take an operational resilience perspective, for example, that’s about being able to get your arms around your important business services, to use the regulatory language. What is supporting them? What does it take to maintain them, keep them resilient and available, and recover them? The reality is that this used to be infinitely more straightforward. Most of the systems would have been in your own data centre in your own building. Now, the ecosystems that support most of these services are much more complex. You’ve obviously got cloud providers, SaaS providers, and third parties that you’ve outsourced to. And even for services you’ve bought and run in-house, there are a myriad of internal teams to navigate.
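Answering "what is supporting them?" for an important business service is essentially a dependency-graph walk. You start from the service and follow every "depends on" edge, covering cloud providers, SaaS vendors, outsourced third parties, and internal teams, until nothing new turns up. The breadth-first sketch below assumes the dependency map already exists as a dictionary. The service and component names are hypothetical, and building an accurate map is the hard part in practice.

```python
from collections import deque
from typing import Dict, List, Set


def supporting_components(service: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Return every component that `service` relies on, directly or transitively."""
    seen: Set[str] = set()
    queue = deque(depends_on.get(service, []))
    while queue:
        component = queue.popleft()
        if component in seen:
            continue  # shared dependencies and cycles are visited only once
        seen.add(component)
        queue.extend(depends_on.get(component, []))
    return seen
```

For example, if a hypothetical `payments` service depends on `core-banking` and a `fraud-saas` vendor, and both rely on a `cloud-db` provider, the walk returns all four supporting components. It also shows that a single cloud provider sits underneath both paths, which is exactly the kind of concentration risk an operational resilience review looks for.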

Why the Growing Adoption of IoT Demands Seamless Integration of IT and OT

Effective cybersecurity in OT environments requires a mix of skills and knowledge from both IT and OT teams. This includes professionals from IT infrastructure and cybersecurity, as well as control system engineers, field operations staff, and asset managers typically found in OT. ... The integration of IT and OT through advanced IoT protocols represents a major step forward in securing industrial and healthcare systems. However, this integration introduces significant challenges. I propose a new approach to IoT security that incorporates protocol-agnostic application layer security, lightweight cryptographic algorithms, dynamic key management, and end-to-end encryption, all based on zero-trust network architecture (ZTNA). ... In OT environments, remediation steps must go beyond traditional IT responses. While many IT security measures reset communication links and wipe volatile memory to prevent further compromise, additional processes are needed for identifying, classifying, and investigating cyber threats in OT systems. Furthermore, organizations can benefit from creating unified governance structures and cross-training programs that align the priorities of IT and OT teams.
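To make "protocol-agnostic application layer security" and "dynamic key management" concrete, the sketch below authenticates each message payload with HMAC-SHA256, using a key that rotates every hour. Because it operates on raw payload bytes, the same code works whether the message travels over MQTT, Modbus, or anything else. It reflects the zero-trust idea that every message is verified instead of trusted because of where it came from. This is an illustrative assumption, not the author's proposed design. The function names are hypothetical. Key derivation is simplified: a vetted KDF such as HKDF would be used in practice. The sketch also provides integrity and authenticity only. End-to-end encryption would additionally require an AEAD cipher (for example, AES-GCM from a maintained cryptography library), and replay protection would need nonces or counters.

```python
import hashlib
import hmac
import time
from typing import Optional


def current_epoch(period_s: int = 3600, now: Optional[float] = None) -> int:
    """Index of the current key-rotation window (hourly by default)."""
    t = time.time() if now is None else now
    return int(t // period_s)


def epoch_key(master_key: bytes, epoch: int) -> bytes:
    """Derive a per-epoch key so a leaked key only exposes one rotation window.

    Simplified derivation (HMAC of the epoch label); use a vetted KDF such as
    HKDF in a real system.
    """
    return hmac.new(master_key, f"epoch:{epoch}".encode(), hashlib.sha256).digest()


def sign(master_key: bytes, payload: bytes, epoch: int) -> bytes:
    """Authentication tag for `payload` under the key for `epoch`."""
    return hmac.new(epoch_key(master_key, epoch), payload, hashlib.sha256).digest()


def verify(master_key: bytes, payload: bytes, epoch: int, tag: bytes) -> bool:
    """Constant-time check that `tag` matches `payload` for `epoch`."""
    return hmac.compare_digest(sign(master_key, payload, epoch), tag)
```

A controller that receives `b'{"valve": 3, "cmd": "close"}'` along with its tag accepts it only if the tag verifies for the current epoch. If the payload is altered in transit (say `"close"` becomes `"open"`), or the tag was produced under an old epoch's key, verification fails.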

Quote for the day:

"There are three secrets to managing. The first secret is have patience. The second is be patient. And the third most important secret is patience." -- Chuck Tanner
