The promise and perils of synthetic data

Synthetic data is no panacea, however. It suffers from the same “garbage in,

garbage out” problem as all AI. Models create synthetic data, and if the data

used to train these models has biases and limitations, their outputs will be

similarly tainted. For instance, groups poorly represented in the base data will

be so in the synthetic data. “The problem is, you can only do so much,” Keyes

said. “Say you only have 30 Black people in a dataset. Extrapolating out might

help, but if those 30 people are all middle-class, or all light-skinned, that’s

what the ‘representative’ data will all look like.” To this point, a 2023 study

by researchers at Rice University and Stanford found that over-reliance on

synthetic data during training can create models whose “quality or diversity

progressively decrease.” Sampling bias — poor representation of the real world —

causes a model’s diversity to worsen after a few generations of training,

according to the researchers. Keyes sees additional risks in complex models such

as OpenAI’s o1, which he thinks could produce harder-to-spot hallucinations in

their synthetic data. These, in turn, could reduce the accuracy of models

trained on the data — especially if the hallucinations’ sources aren’t easy to

identify.

Federal Privacy Is Inevitable in The US (Prepare Now)

The writing’s on the wall for federal privacy. It’s simply not tenable for

almost half the states to have varying privacy thresholds and the other half to have

nothing. Our interconnected business and digital ecosystems need certainty and

consistency across the country. Congress can and should stand up for American

privacy. The good news? Recent history shows that sweeping reforms are possible.

From the CHIPS and Science Act to major pandemic stimulus, lawmakers have shown

their ability to meet moments with big regulations. While states deserve credit

for filling the privacy void, federal action must follow. For now, there’s no

time to waste. Enterprises that build privacy-ready operations today will be

better positioned to thrive under future regulations, maintain customer trust,

and turn compliance into a competitive advantage. On the other hand,

slow-to-move companies risk regulatory penalties and loss of customer confidence

in an increasingly privacy-conscious marketplace. Future-forward organizations

recognize that investing in privacy isn’t just about compliance; it’s about

building a sustainable competitive advantage in the data-driven economy. The

choice is clear: invest in privacy now or play catch-up when federal mandates

arrive.

AI use cases are going to get even bigger in 2025

Few sectors stand to gain more from AI advancements than defense. “We are

witnessing a surge in applications like autonomous drone swarms, electronic

spectrum awareness, and real-time battlefield space management, where AI, edge

computing, and sensor technologies are integrated to enable faster responses

and enhanced precision,” says Meir Friedland, CEO at RF spectrum intelligence

company Sensorz. ... “AI is transforming genome sequencing, enabling faster

and more accurate analyses of genetic data,” Khalfan Belhoul, CEO at the Dubai

Future Foundation, tells Fast Company. “Already, the largest genome banks in

the U.K. and the UAE each have over half a million samples, but soon, one

genome bank will surpass this with a million samples.” But what does this

mean? “It means we are entering an era where healthcare can truly become

personalized, where we can anticipate and prevent certain diseases before they

even develop,” Belhoul says. ... The potential for AI extends far beyond the

use cases dominating today’s headlines. As Friedland notes, “AI’s future lies

in multi-domain coordination, edge computing, and autonomous systems.” These

advancements are already reshaping industries like manufacturing, agriculture,

and finance.

2025 Will Be the Year That AI Agents Transform Crypto

The value of AI agents lies not just in their utility but in their potential

to scale human capabilities. Agents are no longer just tools — they are

emerging as participants in the on-chain economy, driving innovation across

finance, gaming and decentralized social platforms. With protocols such as

Virtuals and open-source frameworks like ELIZA, it’s becoming increasingly

simple for developers to build, deploy and iterate AI agents that serve an

increasingly diverse set of use cases. ... Unlike the core foundational AI

models that are developed behind the walled gardens of OpenAI and Anthropic,

AI agents are being innovated in the trenches of the crypto world. And for

good reason. Blockchains provide the ideal infrastructure as they offer

permissionless and frictionless financial rails, enabling agents to seed

wallets, transact and send funds autonomously — tasks that would be unfeasible

using traditional financial systems. In addition, the open-source nature of

crypto allows developers to leverage existing frameworks to launch and iterate

on agents faster than ever before. With more no-code platforms like Top Hat

gaining traction, it’s only getting easier for anyone to be able to launch an

agent in minutes.

Unpacking OpenAI's Latest Approach to Make AI Safer

OpenAI said it used an internal reasoning model to generate synthetic examples

of chain-of-thought responses, each referencing specific elements of the

company's safety policy. Another model, referred to as the "judge," evaluated

these examples to ensure they met quality standards. The approach looks to address the

challenges of scalability and consistency, OpenAI said. Human-labeled datasets

are labor-intensive and prone to variability, but properly vetted synthetic data

can theoretically offer a scalable solution with uniform quality. The method can

potentially optimize training and reduce the latency and computational overhead

associated with the models reading lengthy safety documents during inference.

OpenAI acknowledged that aligning AI models with human safety values remains a

challenge. Users continue to develop jailbreak techniques to bypass safety

restrictions, such as framing malicious requests in deceptive or emotionally

charged contexts. The o3 series models scored better than their peers Gemini 1.5

Flash, GPT-4o and Claude 3.5 Sonnet on the Pareto benchmark, which measures a

model's ability to resist common jailbreak strategies. But the results may be of

little consequence, as adversarial attacks evolve alongside improvements in

model defenses.

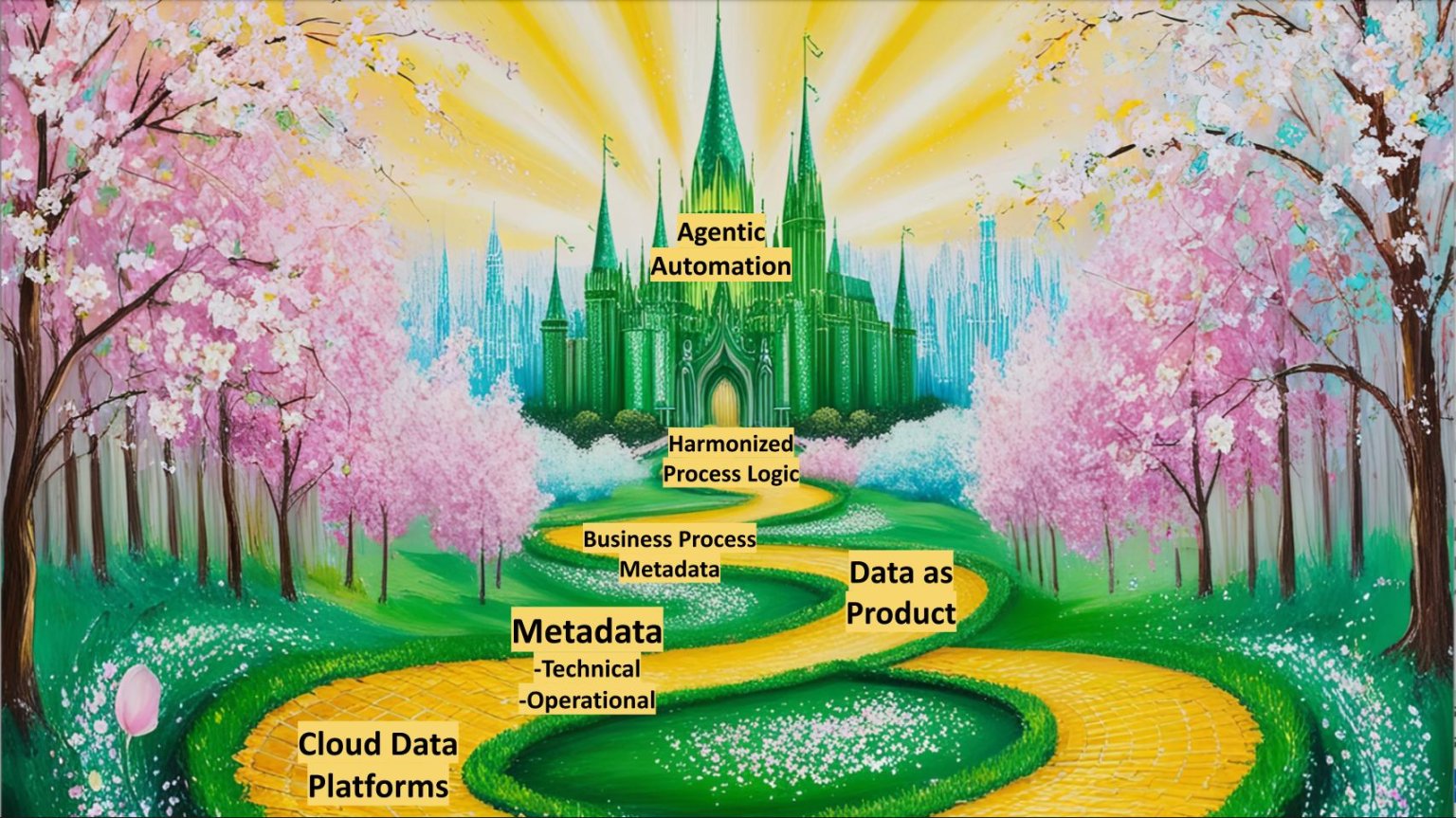

The yellow brick road to agentic AI

Many believe this AI era is the most profound we’ve ever seen in tech. We agree

and liken it to mobile’s role in driving on-premises workloads to the cloud and

disrupting information technology, though we see this as even more impactful. But

for AI agents to work, we have to reinvent the software stack and break down 50

years of silo building. The emergence of data lakehouses is not the answer as

they are just a bigger siloed asset. Rather, software as a service as we know it

will be reimagined. Two prominent chief executives agree. At Amazon Web Services

Inc.’s recent AWS re:Invent conference, we sat down with Amazon.com Inc. CEO

Andy Jassy. ... There is a clear business imperative behind this shift. We

believe companies will differentiate themselves by aligning end-to-end

operations with a unified set of plans — from three-year strategic assumptions

about demand to real-time, minute-by-minute decisions, such as how to pick, pack

and ship individual orders to meet long-term goals. The function of management

has always involved planning and resource allocation across various timescales

and geographies, but previously there was no software capable of executing on

these plans seamlessly across every time horizon.

The AI backlash couldn’t have come at a better time

Developers, engineers, operations personnel, enterprise architects, IT managers,

and others need AI to be as boring for them as it has become for consumers. They

need it not to be a “thing,” but rather something that is managed and integrated

seamlessly into — and supported by — the infrastructure stack and the tools they

use to do their jobs. They don’t want to endlessly hear about AI; they just want

AI to seamlessly work for them so it just works for customers. ... The models

themselves are also, rightly, growing more mainstream. A year ago they were

anything but, with talk of potentially gazillions of parameters and fears about

the legal, privacy, financial, and even environmental challenges such a data

abyss would create. Those LLMs are still out there, and still growing, but many

organizations are looking for their models to be far less extreme. They don’t

need (or want) a model that includes everything anyone ever learned about

anything; rather, they need models that are fine-tuned with data that is

relevant to the business, that don’t necessarily require state-of-the-art GPUs,

and that promote transparency and trust. As Matt Hicks, CEO of Red Hat, put it,

“Small models unlock adoption.”

Systems Thinking in Leading Transformation for the Future

The first step is aligning your internal goals with your external insights.

Leaders must articulate a clear vision that ties the organization's purpose to

broader societal and industry trends. For Nooyi and PepsiCo, that meant

“starting from the outside.” Nooyi tasked her senior leaders with identifying

external factors that would likely impact the company. She said, “They pointed

to several megatrends … including a preoccupation with health and wellness,

scarcity of water and other natural resources, constraints created by global

climate change … and a talent market characterized by shortages of key people.”

... Systems thinking involves understanding the interdependencies within and

outside an organization. For example, if you are embarking on any transformation

project, you’ll likely need to explore new partnerships with suppliers and

regional authorities and regulators. ... Using frameworks like OKRs (Objectives

and Key Results), you can evaluate how each initiative within your

transformation program contributes to the overarching objective. For example, a

laudable main aim such as a commitment to environmental sustainability would

likely involve numerous associated projects: for example, water conservation,

waste reduction, and reduced carbon footprint.

The 2024 cyberwar playbook: Tricks used by nation-state actors

While nation-state actors loved zero days for swift break-ins, phishing remained

a sly plan B. It let them craft sneaky schemes to worm into systems, proving

that 2024 was the year of both bold strikes and artful cons. Russian

nation-state actors leaned heavily on phishing in 2024, with other APTs, like

Iranian and Pakistani groups, dabbling in the tactic as well. The following are

some of the standout campaigns from 2024 where phishing was the go-to for

initial access. ... While credential harvesting through malware delivered via

phishing was fairly common, nation-state actors rarely resorted to scavenging

credentials from hack forums or drop sites as a primary tactic. When asked,

Hughes noted, “I’m not familiar with this being the primary MO by the APTs, who

instead are targeting devices, products and vendors with vulnerabilities and

misconfigurations, but once inside, they do compromise credentials and use those

to pivot, move laterally, persist in environments and more.” ... These actors

weren’t always about flashy, custom malware. Quite often, they used legit tools

like PowerShell, rootkits, RDP, and other off-the-shelf system features to sneak

in, stay undetected, and set up long-term access. This made their attacks

stealthy, persistent, and ready for future moves.

Generative AI is now a must-have tool for technology professionals

As part of this trend, "we are witnessing developers shift from writing code to

orchestrating AI agents," said Jithin Bhasker, general manager and vice

president at ServiceNow. The efficiency gained from gen AI adoption by

technologists isn't just about personal productivity; it's urgent "with the

projected shortage of half a million developers by 2030 and the need for a

billion new apps," he added. ... Still, as gen AI becomes a commonplace tool in

technology shops, Berent-Spillson advises caution. "The real game-changer here

is speed, but there's a catch," he said. "While AI can dramatically compress

cycle time, it will also amplify any existing process constraints. Think of it

like adding a supercharger to your car -- if your chassis isn't solid, you're

just going to get to the problem faster." Exercise caution "regarding code

quality, maintainability, and IP considerations," McDonagh-Smith advises. "While

syntactically correct, AI tools have been seen to create code that's logically

flawed or inefficient, leading to potential code degradation over time if not

reviewed carefully. We should also guard against software sprawl where the ease

of creating AI-generated code results in overly complex or unnecessary code that

might make projects more difficult to maintain over time."

Quote for the day:

"Difficulties in life are intended to make us better, not bitter." -- Dan Reeves
