Quote for the day:

"Great leaders do not desire to lead but to serve." -- Myles Munroe

Managing Technical Debt the Right Way

Here’s the uncomfortable truth: most executives don’t care about technical

purity, but they do care about value leakage. If your team can’t deliver new

features fast enough, if outages are too frequent, if security holes are piling

up, that is financial debt—just wearing a hoodie instead of a suit. The BTABoK

approach is to make debt visible in the same way accountants handle real

liabilities. Use canvases, views, and roadmaps to connect the hidden cost of

debt to business outcomes. Translate debt into velocity lost, time to market,

and risk exposure. Then prioritize it just like any other investment. ... If

your architects can’t tie debt decisions to value, risk, and strategy, then

they’re not yet professionals. Training and certification are not about passing

an exam. They are about proving you can handle debt like a surgeon handles

risk—deliberately, transparently, and with the trust of society. ... Let’s not

sugarcoat it: some executives will always see debt as “nerd whining.” But when

you put it into the lifecycle, into the transformation plan, and onto the

balance sheet, it becomes a business issue. This is the same lesson learned in

finance: debt can be a powerful tool if managed, or a silent killer if ignored.

BTABoK doesn’t give you magic bullets. It gives you a discipline and a language

to make debt a first-class concern in architectural practice. The rest is

courage—the courage to say no to shortcuts that aren’t really shortcuts, to show

leadership the cost of delay, and to treat architectural decisions with the

seriousness they deserve.
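
One way to picture the "prioritize it just like any other investment" step is a small scoring sketch. Everything in it is an assumption made for illustration: the `DebtItem` fields, the example items, and every number are hypothetical, and the ranking rule (estimated annual cost divided by remediation cost) is one simple choice, not a formula prescribed by BTABoK.

```python
from dataclasses import dataclass


@dataclass
class DebtItem:
    """A hypothetical record for one piece of technical debt."""
    name: str
    hours_lost_per_year: float   # velocity lost to working around the debt
    hourly_cost: float           # loaded cost of one engineering hour
    incident_probability: float  # estimated chance of an incident per year
    incident_impact: float       # estimated cost if the incident happens
    remediation_cost: float      # estimated one-off cost to pay the debt down

    def annual_cost(self) -> float:
        # Velocity lost plus risk exposure (probability times impact).
        velocity_cost = self.hours_lost_per_year * self.hourly_cost
        risk_exposure = self.incident_probability * self.incident_impact
        return velocity_cost + risk_exposure

    def payback_ratio(self) -> float:
        # Annual cost avoided per unit of money spent on remediation.
        return self.annual_cost() / self.remediation_cost


def prioritize(items):
    """Return items sorted from highest to lowest payback ratio."""
    return sorted(items, key=lambda item: item.payback_ratio(), reverse=True)


# Made-up example data, for illustration only.
items = [
    DebtItem("legacy auth module", 400, 100, 0.10, 500_000, 60_000),
    DebtItem("flaky build pipeline", 600, 100, 0.0, 0, 20_000),
    DebtItem("unpatched reporting server", 50, 100, 0.05, 200_000, 30_000),
]

for item in prioritize(items):
    print(f"{item.name}: ratio {item.payback_ratio():.2f}")
```

The point of the sketch is the framing rather than the arithmetic: each item carries the same kinds of figures an executive would expect for any other investment, so debt work can be ranked alongside feature work.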

How National AI Clouds Undermine Democracy

The rapid spread of sovereign AI clouds unintentionally creates a new form of

unchecked power. It combines state authority with corporate technology in

unclear public-private partnerships. This combination centralizes surveillance

and decision-making power, extending far beyond effective democratic oversight.

The pursuit of national sovereignty undermines the civic sovereignty of

individuals. ... The unique and overlooked danger is the rise of a permanent,

unelected techno-bureaucracy. Unlike traditional government agencies, these

hybrid entities are shielded from democratic pressures. Their technical

complexity acts as a barrier against public understanding and journalistic

inquiry. ... no sovereign cloud should operate without a corresponding

legislative data charter. This charter, passed by the national legislature, must

clearly define citizens' rights against algorithmic discrimination, set explicit

limits on data use, and create transparent processes for individuals harmed by

the system. It should recognize data portability as an essential right, not just

a technical feature. ... every sovereign AI initiative should be mandated

to serve the public good. These systems must legally demonstrate that they

fulfill publicly defined goals, with their performance measured and reported

openly. This directs the significant power of AI toward applications that

benefit the public, such as enhancing healthcare outcomes or building climate

resilience.

IT’s renaissance risks losing steam

IT-enabled value creation will etiolate without the sustained light of

stakeholder attention. CIOs need to manage IT signals, symbols, and suppositions

with an eye toward recapturing stakeholder headspace. Every IT employee needs to

get busy defanging the devouring demons of apathy and ignorance surrounding IT

operations today. ... We need to move beyond our “hero on horseback” obsession

with single actors. Instead we need to return our efforts forcefully to

l’histoire des mentalités — the study of the mental universe of ordinary people.

How is l’homme moyen sensuel (the man on the street) dealing with the

technological choices arrayed before him? ... The IT pundits’ much-discussed

promise of “technology transformation” will never materialize if appropriate

exothermic (i.e., behavior-inducing and energy-creating) IT ideas have no mass

following among those working at the screens around the world. ... As CIO,

have you articulated a clear vision of what you want IT to achieve during your

tenure? Have you calmed the anger of unmet expectations, repaired the wounds of

system outages, alleviated the doubts about career paths, charted a

filled-with-benefits road forward and embodied the hopes of all stakeholders?

... The cognitive elephant in the room that no one appears willing to talk about

is the widespread technological illiteracy of the world’s population.

How One Bad Password Ended a 158-Year-Old Business

KNP's story illustrates a weakness that continues to plague organizations across

the globe. Research from Kaspersky analyzing 193 million compromised passwords

found that 45% could be cracked by hackers within a minute. And when attackers

can simply guess or quickly crack credentials, even the most established

businesses become vulnerable. Individual security lapses can have

organization-wide consequences that extend far beyond the person who chose

"Password123" or left their birthday as their login credential. ... KNP's

collapse demonstrates that ransomware attacks create consequences far beyond an

immediate financial loss. Seven hundred families lost their primary income

source. A company with nearly two centuries of history disappeared overnight.

And Northamptonshire's economy lost a significant employer and service provider.

For companies that survive ransomware attacks, reputational damage often

compounds the initial blow. Organizations face ongoing scrutiny from customers,

partners, and regulators who question their security practices. Stakeholders

seek accountability for data breaches and operational failures, leading to legal

liabilities. ... KNP joins an estimated 19,000 UK businesses that suffered

ransomware attacks last year, according to government surveys. High-profile

victims have included major retailers like M&S, Co-op, and Harrods,

demonstrating that no organization is too large or established to be

targeted.
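
As a rough illustration of why short, simple passwords fall so quickly, the sketch below computes the size of a brute-force search space and how long it would take to exhaust it at a given guess rate. The rate of ten billion guesses per second is an assumed figure chosen for illustration, not one taken from the Kaspersky research, and the function names are hypothetical. Real attacks on choices like "Password123" typically use dictionaries and are faster still.

```python
def search_space(length: int, alphabet_size: int) -> int:
    """Number of possible passwords of exactly `length` characters."""
    return alphabet_size ** length


def seconds_to_exhaust(length: int, alphabet_size: int, guesses_per_second: float) -> float:
    """Worst-case time to try every candidate at a constant guess rate."""
    return search_space(length, alphabet_size) / guesses_per_second


ASSUMED_GUESSES_PER_SECOND = 10**10  # hypothetical attacker speed

# Eight lowercase letters: 26 possible characters per position.
worst_case = seconds_to_exhaust(8, 26, ASSUMED_GUESSES_PER_SECOND)
print(f"{worst_case:.1f} seconds")  # prints 20.9 seconds

# Twelve characters drawn from 94 printable ASCII symbols, same assumed rate.
years = seconds_to_exhaust(12, 94, ASSUMED_GUESSES_PER_SECOND) / (365 * 24 * 3600)
print(f"{years:,.0f} years")
```

Under these assumptions the eight-letter password is exhausted in well under a minute, while length and a larger character set push the worst case out by many orders of magnitude.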

Has the UK’s Cyber Essentials scheme failed?

There are several reasons why larger organisations may steer clear of CE in its

current form, explains Kearns. “They typically operate complex, often

geographically dispersed networks, where basic technical controls driven by CE

do not satisfy organisational appetite to drive down risk and improve

resilience,” she says. “The CE control set is also ‘absolute’ and does not allow

for the use of compensating controls. Large complex environments, on the other

hand, often operate legacy systems that require compensating controls to reduce

risk, which prevents compliance with CE.” The point-in-time nature of assessment

is also a poor fit for today’s dynamic IT infrastructure and threat

environments, argues Pierre Noel, field CISO EMEA at security vendor Expel. ...

“For large enterprises with complex IT environments, CE may not be comprehensive

enough to address their specific security needs,” says Andy Kays, CEO of MSSP

Socura. “Despite these limitations, it still serves a valuable purpose as a

baseline, especially for supply chain assurance where larger companies want to

ensure their smaller partners have a minimum level of security.” Richard Starnes

is an experienced CISO and chair of the WCIT security panel. He agrees that

large enterprises should require CE+ certification in their supplier contracts,

where it makes sense. “This requirement should also include a contract flow-down

to ensure that their suppliers’ downstream partners are also certified,” says

Starnes.

Is Your Data Generating Value or Collecting Digital Dust?

Economic uncertainty is prompting many companies to think about how to do more

with less. But what if they’re actually positioned to do more with more and just

don’t realize it? Many organizations already have the resources they need to

improve efficiency and resilience in challenging times. Close to two-thirds of

organizations manage 1 petabyte or more of data, which represents enough data

to cover 500 billion standard pages of text. More than 40% of companies store

even more data. Much of that data sits unanalyzed while it incurs costs related

to collection, compliance, and storage. It also poses data breach risks that

require expensive security measures to prevent. ... Engaging with too many apps

often makes employees less efficient than they could be. In 2024, companies used

an average of 21 apps just for HR tasks. Multiply that across different

functions, and it’s easy to see how finding ways to reduce the total could bring

down costs. Trimming the number of apps can also increase productivity by

reducing employee overwhelm. Constantly switching between different apps and

systems has been shown to distract employees while increasing their levels of

stress and frustration. Across the organization, switching among tasks and apps

consumes 9% of the average employee’s time at work by chipping away at their

attention and ability to focus a few seconds at a time with each of the

hundreds of task switches they perform every day.
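
The two figures in this excerpt are easier to judge with the arithmetic written out. The sketch below assumes a decimal petabyte (10^15 bytes) and an eight-hour working day; both are assumptions, since the excerpt states neither, and the function name is hypothetical.

```python
PETABYTE_BYTES = 10**15       # decimal petabyte (assumption; a binary PiB is 2**50)
PAGES_OF_TEXT = 500 * 10**9   # "500 billion standard pages", from the excerpt


def bytes_per_page(total_bytes: int, pages: int) -> float:
    """Average number of bytes each page would hold."""
    return total_bytes / pages


print(bytes_per_page(PETABYTE_BYTES, PAGES_OF_TEXT))  # prints 2000.0

WORKDAY_MINUTES = 8 * 60      # assumed eight-hour day
SWITCHING_PERCENT = 9         # share of time lost to switching, from the excerpt

minutes_lost = WORKDAY_MINUTES * SWITCHING_PERCENT / 100
print(minutes_lost)           # prints 43.2
```

So the page comparison implies roughly 2,000 bytes per page, a plausible size for a page of plain text, and 9% of an assumed eight-hour day is about 43 minutes.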

The history and future of software development

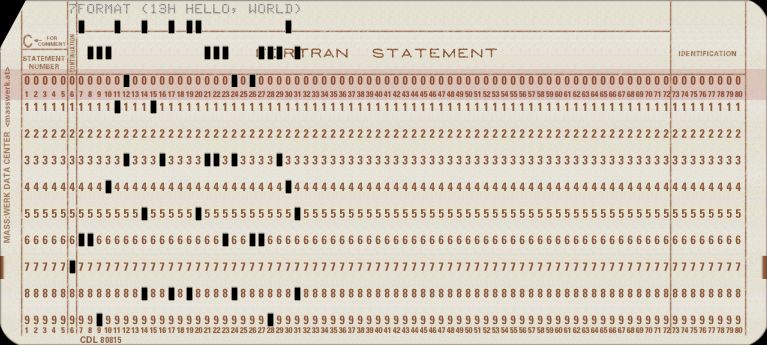

For any significant piece of software back then, you needed stacks of punch

cards. Yes, 1000 lines of code needed 1000 cards. And you needed to have them in

order. Now, imagine dropping that stack of 1000 cards! It would take me ages to

get them back in order. Devs back then experienced this a lot—so some of them

went ahead and had creative ways of indicating the order of these cards. ... By

the mid-1970s, affordable home computers were starting to become a reality.

Instead of a computer just being a work thing, hobbyists started using computers

for personal things—maybe we can call these, I don't know...personal computers.

... Assembler and assembly tend to be used interchangeably, but they are in reality

two different things. Assembly is the actual language: the syntax and instructions

being used, tightly coupled to the architecture. The assembler

is the piece of software that assembles your assembly code into machine code, the

thing your computer knows how to execute. ... What about writing the software?

Did they use git back then? No, git only came out in 2005, so back then software

version control was quite the manual effort. It ranged from developers having their own

way of managing source code locally to wall charts where developers

could "claim" ownership of certain source code files. For those that were able to

work on a shared (multi-user) system, or have an early version of some networked

storage—Source code sharing was as easy as handing out floppy disks.
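
To make the assembly versus assembler distinction from this excerpt concrete, here is a minimal sketch. The `program` string plays the role of assembly (the language), and the `assemble` function plays the role of the assembler (the software that turns it into bytes). The instruction set, opcodes, and syntax are invented for this example and do not correspond to any real architecture.

```python
# Hypothetical 8-bit toy instruction set, invented for illustration only.
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "STORE": 0x03, "HALT": 0xFF}


def assemble(source: str) -> bytes:
    """Translate toy assembly text into toy machine code."""
    machine_code = bytearray()
    for raw_line in source.splitlines():
        line = raw_line.split(";", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown instruction: {mnemonic}")
        machine_code.append(OPCODES[mnemonic])
        if mnemonic == "HALT":
            if len(parts) != 1:
                raise ValueError("HALT takes no operand")
            continue
        if len(parts) != 2:
            raise ValueError(f"{mnemonic} takes exactly one operand")
        operand = int(parts[1], 0)  # accepts decimal or 0x-prefixed hex
        if not 0 <= operand <= 255:
            raise ValueError(f"operand out of range: {operand}")
        machine_code.append(operand)
    return bytes(machine_code)


program = """
LOAD 10     ; put the value 10 in the accumulator
ADD 0x05    ; add 5 to it
STORE 32    ; write the result to address 32
HALT
"""

print(assemble(program).hex())  # prints 010a02050320ff
```

A different architecture would need a different opcode table and operand rules, which is what "tightly coupled to the architecture" means in practice.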

Why the operating system is no longer just plumbing

Many enterprises still think of the operating system as a “static” or background layer that doesn’t need active evolution. The reality is that modern operating systems like Red Hat Enterprise Linux (RHEL) are dynamic, intelligent platforms that actively enable and optimize everything running on top of them. Whether you're training AI models, deploying cloud-native applications, or managing edge devices, the OS is making thousands of critical decisions every second about resource allocation, security enforcement, and performance optimization. ... With image mode deployments, zero-downtime updates, and optimized container support, RHEL ensures that even resource-constrained environments can maintain enterprise-grade reliability. We’ve also focused heavily on security (confidential computing, quantum-resistant cryptography, and compliance automation) because edge environments are often exposed to greater risk. These choices allow RHEL to deliver resilience in conditions where compute power, space, and connectivity are limited. ... We don't just take community code and ship it; we validate, harden, and test everything extensively. Red Hat bridges this gap by being an active contributor upstream while serving as an enterprise-grade curator downstream. Our ecosystem partnerships ensure that when new technologies emerge, they work reliably with RHEL from day one.

Ransomware now targeting backups, warns Google’s APAC security chief

Backups often contain sensitive data such as personal information, intellectual

property, and financial records. Pereira warned that attackers can use this data

as extra leverage or sell it on the dark web. The shift in focus to backup

systems underscores how ransomware has become less about disruption and more

about business pressure. If an organisation cannot restore its systems

independently, it has little choice but to consider paying a ransom. ... Another

troubling trend is “cloud-native extortion,” where attackers abuse built-in

cloud features, such as encryption or storage snapshots, to hold systems

hostage. Pereira explained that many organisations in the region are adapting by

shifting to identity-focused security models. “Cloud environments have become

the new perimeter, and attackers have been weaponising cloud-native tools,” he

said. “We now need to enforce strict cloud security hygiene, such as robust MFA,

least privilege access, proactively monitoring of role access changes or

credential leaks, using automation to detect and remediate misconfigurations,

and anomaly detection tools for cloud activities.” He pointed to rising

investments in identity and access management tools, with organisations

recognising their role in cutting down the risk of identity-based attacks. For

APAC businesses, this means moving away from legacy perimeter defences and

embracing cloud-native safeguards that assume breaches are inevitable but limit

the damage.
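
The "monitoring of role access changes" item in the quote above can be pictured as a comparison of two access snapshots. The sketch below is a minimal illustration under stated assumptions: the snapshot format (a mapping from principal name to a set of role names), the principal and role names, and the `added_roles` function are all hypothetical and do not reflect any particular cloud provider's API.

```python
def added_roles(before: dict, after: dict) -> dict:
    """Return roles present in `after` but not in `before`, per principal."""
    changes = {}
    for principal, roles in after.items():
        newly_granted = set(roles) - set(before.get(principal, ()))
        if newly_granted:
            changes[principal] = newly_granted
    return changes


# Made-up snapshots, for illustration only.
yesterday = {
    "alice": {"viewer"},
    "bob": {"editor"},
}
today = {
    "alice": {"viewer", "admin"},     # privilege escalation worth reviewing
    "bob": {"editor"},                # unchanged
    "svc-backup": {"storage.admin"},  # new principal with a powerful role
}

for principal, roles in sorted(added_roles(yesterday, today).items()):
    print(f"{principal}: newly granted {sorted(roles)}")
```

In this sketch only grants are reported, since new or expanded access is what a least-privilege review would look at first; revoked roles could be found by swapping the two arguments.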
