How To Build Out a Successful Multi-Cloud Strategy

While a multi-cloud approach can deliver serious value in terms of resiliency, flexibility and cost savings, choosing the right providers requires a comprehensive assessment. Luckily, all the major cloud vendors offer free trials, so you can establish which ones best fit your needs and see how they work with each other. It pays to conduct proofs of concept using the free trials, running your own data and code on each provider. During the trials, you also need to make sure that you can move your data and code between providers easily. It’s also important to remember that each cloud provider has different strengths: one company’s best option is not necessarily the best choice for you. For example, if your startup relies heavily on artificial intelligence (AI) and machine learning (ML) applications, you might opt for Google Cloud’s open-source AI platform. Or perhaps your globally used app requires an international network of data centers, minimal latency and data privacy compliance for certain geographies. Here’s where AWS could step in. On the other hand, you might need your cloud applications to integrate seamlessly with the Microsoft tools you already use, which would make the case for Microsoft Azure.
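Moving data and code between providers during a trial is easier when application code talks to a provider-neutral interface rather than to one vendor’s SDK. The following is a minimal sketch under that assumption. `ObjectStore`, `InMemoryStore` and `migrate` are hypothetical names, and each `InMemoryStore` stands in for one provider’s bucket. In a real proof of concept, each adapter would wrap the corresponding provider’s SDK, which is not shown here.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Hypothetical provider-neutral storage interface used during a trial."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...

    @abstractmethod
    def keys(self) -> list[str]: ...


class InMemoryStore(ObjectStore):
    """Stand-in for one provider's bucket; a real adapter would wrap that provider's SDK."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def keys(self) -> list[str]:
        return sorted(self._objects)


def migrate(src: ObjectStore, dst: ObjectStore) -> int:
    """Copy every object from src to dst, verify each copy, and return how many moved."""
    moved = 0
    for key in src.keys():
        data = src.get(key)
        dst.put(key, data)
        if dst.get(key) != data:  # read back to confirm the copy is intact
            raise RuntimeError(f"verification failed for {key!r}")
        moved += 1
    return moved


if __name__ == "__main__":
    trial_a = InMemoryStore("provider-a")  # hypothetical trial accounts
    trial_b = InMemoryStore("provider-b")
    trial_a.put("datasets/enquiries.csv", b"id,age\n1,34\n")
    trial_a.put("models/churn.bin", b"\x00\x01")
    print(migrate(trial_a, trial_b))  # 2
    print(trial_b.keys())  # ['datasets/enquiries.csv', 'models/churn.bin']
```

Because application code depends only on `ObjectStore`, switching the trial from one provider to another means swapping the adapter, not rewriting the callers.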

How data and technology can strengthen company culture

Remote working exposed another potential weakness holding teams back from realising their potential: employee expertise that isn’t being shared. During lockdown, many companies realised that the knowledge and experience within their workforce were highly concentrated in specific offices, regions, teams or employees. How can these valuable insights be shared seamlessly across internal networks? A truly collaborative company culture must go beyond limited solutions such as excessive video calls, which risk burning people out. Collaboration tools that support culture have to be chosen for their effectiveness at improving interactions, bridging gaps and simplifying knowledge sharing. ... While revamping their strategies in recent months, many companies have started to prioritise customer retention and expansion over new customer acquisition, given the state of the economy. Data and technology can help employees adapt to this transition. Investing in tools that empower employees gives them the confidence, knowledge and skills they need to deliver maximum customer value. This in turn boosts customer satisfaction, as staff deliver an engaging and consistent experience every time they connect with customers.

Cloud environment complexity has surpassed human ability to manage

“The benefits of IT and business automation extend far beyond cost savings. Organizations need this capability – to drive revenue, stay connected with customers, and keep employees productive – or they face extinction,” said Bernd Greifeneder, CTO at Dynatrace. “Increased automation enables digital teams to take full advantage of the ever-growing volume and variety of observability data from their increasingly complex, multicloud, containerized environments. With the right observability platform, teams can turn this data into actionable answers, driving a cultural change across the organization and freeing up their scarce engineering resources to focus on what matters most – customers and the business.” ... 93% of CIOs said AI assistance will be critical to IT’s ability to cope with increasing workloads and deliver maximum value to the business. CIOs expect automation in cloud and IT operations to reduce the time spent ‘keeping the lights on’ by 38%, saving organizations $2 million per year on average. Despite this advantage, just 19% of all repeatable operations processes for digital experience management and observability have been automated. “History has shown successful organizations use disruptive moments to their advantage,” added Greifeneder.

A New Risk Vector: The Enterprise of Things

The ultimate goal should be a process for formal review of cybersecurity risk, with a readout to the governance, risk and compliance (GRC) and audit committee. Each of these steps must be undertaken on an ongoing basis instead of being viewed as a point-in-time exercise. Today's cybersecurity landscape, with new technologies and evolving adversary tradecraft, demands a continuous review of risk by boards, as well as constant re-evaluation of security budget allocation against rising risk areas, to ensure that every dollar spent on cybersecurity directly buys down the areas of greatest risk. We are beginning to see some positive trends in this direction. Nearly every large public company's board of directors today has made cyber-risk part of the remit of the audit committee, the risk committee, or the safety and security committee. The CISO is also gaining visibility at board level, in many cases presenting at least once, if not several times, a year. Meanwhile, shareholders are beginning to ask tough questions at annual meetings about which cybersecurity measures are being implemented. In today's landscape, given the materiality of the risk, every one of these board-level conversations about cyber-risk must include a discussion of the Enterprise of Things.
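One way to make "every dollar spent directly buys down the areas of greatest risk" concrete is to score each risk area and allocate budget in proportion to its score. This is a minimal sketch built on assumptions that do not come from the article. Risk is scored as likelihood × impact (expected loss). `RiskArea` and `allocate` are hypothetical names. The figures in the example are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RiskArea:
    name: str
    likelihood: float  # assumed probability (0..1) that the risk materialises this year
    impact: float      # assumed loss in dollars if it does

    @property
    def score(self) -> float:
        # Expected loss: an assumed scoring rule, not one given in the article.
        return self.likelihood * self.impact


def allocate(budget: float, areas: list[RiskArea]) -> dict[str, float]:
    """Split a security budget across risk areas in proportion to their scores."""
    if not areas:
        return {}
    total = sum(area.score for area in areas)
    if total == 0:
        # Nothing to prioritise: fall back to an even split.
        return {area.name: budget / len(areas) for area in areas}
    return {area.name: budget * area.score / total for area in areas}


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    areas = [
        RiskArea("unmanaged IoT devices", 0.3, 1_000_000),
        RiskArea("phishing", 0.5, 200_000),
        RiskArea("legacy servers", 0.1, 1_000_000),
    ]
    for name, amount in allocate(100_000, areas).items():
        print(f"{name}: ${amount:,.0f}")
```

Re-running the allocation whenever likelihood or impact estimates change is one simple way to make the review continuous rather than a point-in-time exercise.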

FreedomFi: Do-it-yourself open-source 5G networking

FreedomFi offers a couple of options through its website for getting started with open-source private cellular. All proceeds will be reinvested in building up the Magma project's open-source software code. Sponsors contributing $300 towards the project will receive a beta FreedomFi gateway and limited, free access to the Citizens Broadband Radio Service (CBRS) shared spectrum in the 3.5 GHz "innovation band." Despite the similar name, CBRS has nothing to do with the "good buddy" CB radio service used by amateurs and truckers for two-way voice communications; CB lives on in the United States in the 27 MHz band. Those contributing $1,000 will get support with a "network up" guarantee, with FreedomFi offering guidance over a series of Zoom sessions. The company guarantees it won't give up until you get a connection. FreedomFi will be demonstrating an end-to-end private cellular network deployment during its upcoming keynote at the Open Infrastructure Summit and publishing step-by-step instructions on the company blog. This isn't just a hopeful idea being launched on a wing and a prayer: WiConnect Wireless is already working with it. "We operate hundreds of towers, providing fixed wireless access in rural areas of Wisconsin," said Dave Bagett, WiConnect's president.
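The gap between the two services can be made concrete with the wavelength relation λ = c/f. This rough worked comparison uses c ≈ 3.0 × 10^8 m/s and the 3.5 GHz and 27 MHz figures from the text, rounded for readability:

```latex
\lambda = \frac{c}{f}, \qquad
\lambda_{\mathrm{CBRS}} \approx \frac{3.0 \times 10^{8}\,\mathrm{m/s}}{3.5 \times 10^{9}\,\mathrm{Hz}} \approx 0.086\,\mathrm{m},
\qquad
\lambda_{\mathrm{CB}} \approx \frac{3.0 \times 10^{8}\,\mathrm{m/s}}{2.7 \times 10^{7}\,\mathrm{Hz}} \approx 11\,\mathrm{m}
```

CBRS therefore sits roughly 130 times higher in frequency than CB, with wavelengths measured in centimetres rather than metres.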

Why We Must Unshackle AI From the Boundaries of Human Knowledge

Artificial intelligence (AI) has made astonishing progress in the last decade. AI can now drive cars, diagnose diseases from medical images, recommend movies (and even whom you should date), make investment decisions, and create art that people have sold at auction. A lot of research today, however, focuses on teaching AI to do things the way we do them. For example, computer vision and natural language processing, two of the hottest research areas in the field, deal with building AI models that can see like humans and use language like humans. But instead of teaching computers to imitate human thought, the time has come to let them evolve on their own, so that instead of becoming like us, they have a chance to become better than us. Supervised learning has thus far been the most common approach to machine learning: algorithms learn from datasets containing pairs of samples and labels. For example, consider a dataset of enquiries (not conversions) for an insurance website, with information about each person’s age, occupation, city, income, etc., plus a label indicating whether the person eventually purchased the insurance.
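A supervised learner is trained on exactly such (sample, label) pairs. This sketch uses k-nearest neighbours, chosen only for brevity; the article does not name an algorithm. The `Enquiry` fields mirror the ones listed above. The records, the distance scaling and the value of k are all made-up assumptions for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Enquiry:
    age: int
    occupation: str
    city: str
    income: float  # annual income in thousands (assumed unit)


# Hypothetical labelled pairs: (sample, label); True means the person bought the policy.
TRAINING: list[tuple[Enquiry, bool]] = [
    (Enquiry(25, "student", "Leeds", 12), False),
    (Enquiry(31, "engineer", "London", 55), True),
    (Enquiry(45, "teacher", "Leeds", 38), True),
    (Enquiry(22, "barista", "London", 18), False),
    (Enquiry(52, "manager", "Bristol", 70), True),
    (Enquiry(28, "designer", "Bristol", 30), False),
]


def distance(a: Enquiry, b: Enquiry) -> float:
    # Numeric features scaled by rough, assumed ranges; each differing category adds 1.
    d = ((a.age - b.age) / 40) ** 2 + ((a.income - b.income) / 60) ** 2
    d += (a.occupation != b.occupation) + (a.city != b.city)
    return math.sqrt(d)


def predict(query: Enquiry, k: int = 3) -> bool:
    """Majority vote among the k labelled examples closest to the query."""
    nearest = sorted(TRAINING, key=lambda pair: distance(query, pair[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes[True] > votes[False]


if __name__ == "__main__":
    print(predict(Enquiry(48, "manager", "Bristol", 65)))  # True
    print(predict(Enquiry(23, "student", "London", 15)))   # False
```

The model never receives a rule for who buys insurance. It generalises only from the labels it was given, which is the dependence on human-provided answers that the article goes on to question.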

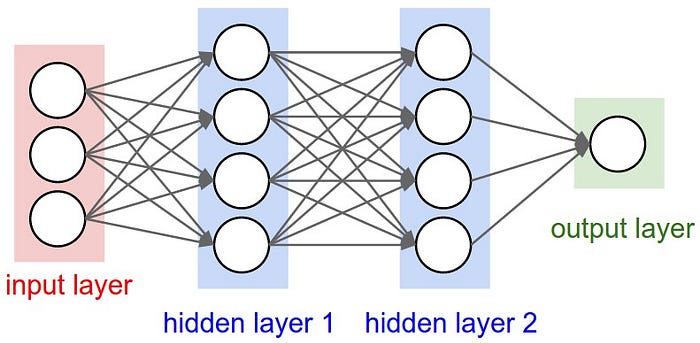

The Race for Intelligent AI

The key takeaway is that BERT and GPT-3 fundamentally have the same structure in terms of information flow. Although the attention layers in transformers can distribute information in a way that a normal neural network layer cannot, they still retain the fundamental property of passing information forward from input to output. The first problem with feed-forward neural nets is that they are inefficient. When processing information, the processing chain can often be broken down into multiple small, repetitive tasks. For example, addition is a cyclical process in which single-digit adders (or, in a binary system, full adders) can be used together to compute the final result. In a linear information system, adding three numbers would require three adders chained together. This is inefficient, especially for neural networks, which would have to learn each adder unit separately when it is possible to learn one unit and reuse it. It is also not how backpropagation tends to learn: the neural network would try to create a hierarchical decomposition of the process, which in this case would not ‘scale’ to more digits. Another issue with using feed-forward neural networks to simulate “human level intelligence” is thinking. Thinking is an optimization process.
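The reuse argument can be illustrated with ordinary code. A single full-adder unit applied in a loop, one bit position after another, adds numbers of any width. A fixed chain would instead need a separate unit for every position. This is a plain-Python sketch of that idea (a ripple-carry adder), not a model of BERT, GPT-3 or any neural network. The function names are hypothetical.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """The single reusable unit: add two bits plus a carry, return (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def add_binary(x: list[int], y: list[int]) -> list[int]:
    """Add two little-endian bit lists by looping the same full adder over every position."""
    width = max(len(x), len(y))
    x = x + [0] * (width - len(x))
    y = y + [0] * (width - len(y))
    carry = 0
    out = []
    for a, b in zip(x, y):
        s, carry = full_adder(a, b, carry)  # same unit, reused at each step
        out.append(s)
    if carry:
        out.append(carry)
    return out


def to_bits(n: int) -> list[int]:
    """Non-negative integer to little-endian bits."""
    return [int(c) for c in reversed(bin(n)[2:])]


def from_bits(bits: list[int]) -> int:
    return sum(bit << i for i, bit in enumerate(bits))


if __name__ == "__main__":
    print(from_bits(add_binary(to_bits(13), to_bits(29))))  # 42
    # Adding a third number reuses the same unit again rather than a new one.
    print(from_bits(add_binary(add_binary(to_bits(5), to_bits(6)), to_bits(7))))  # 18
```

The loop handles 5-bit or 500-bit inputs with the same single unit. This is the kind of scaling that a network with one learned unit per position would lack.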

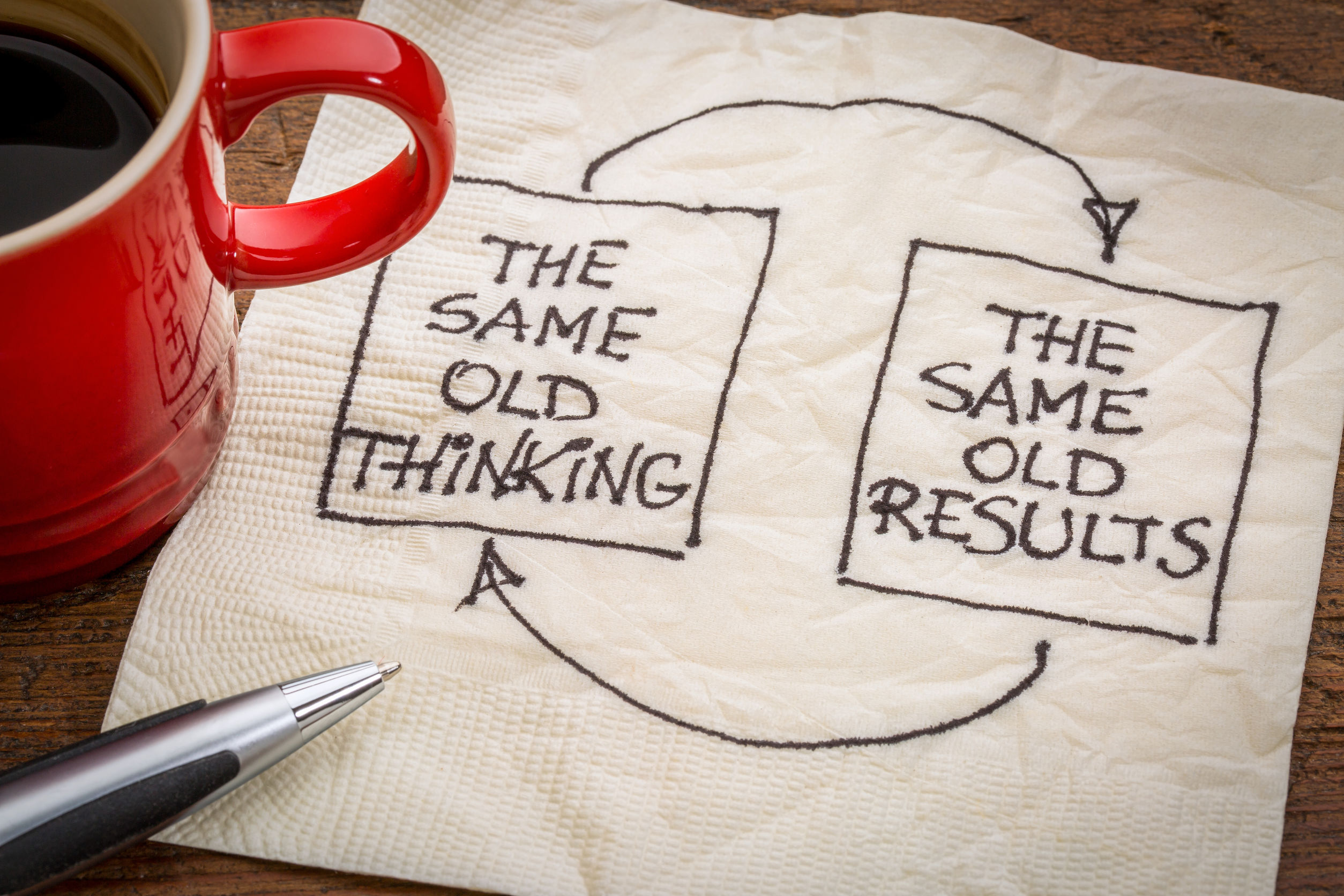

Why Agile Transformations sometimes fail

Top management's attitude towards Agile adoption affects the whole process, and disengaged management is the most common reason in my ranking. In my career, I have seen few examples of a successful bottom-up Agile transformation. Usually, top management is at some point aware of Agile activities in the company, such as Scrum adoption, but leaves them to the teams. One frequent reason for this behavior is that top management does not understand that Agility benefits the business, the product and, most importantly, customers/users. They consider Agile, Scrum and Lean to be things that might improve delivery and teams' productivity. Imagine a situation in which a large number of Scrum Masters report the same impediment. It becomes an organizational impediment. How would you resolve it when the decision-makers are not interested in it? What would be the impact on teams' engagement and product delivery? Active management that fosters Agility and an empirical way of working, and that actively participates in the whole process, is a secret ingredient that makes the transition more realistic. Another observation I have made is a strong focus on delivery, productivity, technology and effectiveness.

The encryption war is on again, and this time government has a new strategy

So what's going on here? With the addition of two new countries, Japan and India, the statement suggests that more governments are getting worried, but the tone is slightly different now. Perhaps governments are trying a less direct approach this time, hoping to put pressure on tech companies in a different way. "I find it interesting that the rhetoric has softened slightly," says Professor Alan Woodward of the University of Surrey. "They are no longer saying 'do something or else'." What this note tries to do, Woodward says, is put the ball firmly back in the tech companies' court by implying that big tech is putting people at risk by not acceding to governments' demands. That is a potentially effective tactic for building a public consensus against the tech companies. "It seems extraordinary that we're having this discussion yet again, but I think that the politicians feel they are gathering a head of steam with which to put pressure on the big tech companies," he says. Even if police and intelligence agencies can't always get encrypted messages from tech companies, they certainly aren't without other powers. The UK recently passed legislation giving law enforcement wide-ranging powers to hack into computer systems in search of data.

Code Security: SAST, Shift-Left, DevSecOps and Beyond

One of the most important elements in DevSecOps is a project’s branching strategy. In addition to the main branch, every developer uses their own separate branch: they develop their code there and then merge it back into the main branch. A key requirement is that the main branch maintain zero unresolved warnings and pass all functional tests. Therefore, before a developer can submit work from an individual branch, that work must also pass all functional tests and all static analysis tests. When a pull request or merge request has unresolved warnings, whether functional test failures or static analysis warnings, it is rejected and must be fixed and resubmitted. Functional test failures must be fixed, but the root cause of a failure may be hard to find. A functional test error might say, “Input A should generate output B,” when C comes out instead, with no indication of which piece of code to change. Static analysis, on the other hand, will reveal exactly where there is a problem such as a memory leak and will provide a detailed explanation for each warning. This is one way in which static analysis can help DevSecOps deliver the best and most secure code. Finally, let’s review Lean and shift-left, and see how they are connected.
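The merge rule described above can be expressed as a small gate. In this sketch, `MergeRequest`, `Finding` and `review` are hypothetical names rather than any CI system's API. Test and analyzer results are assumed to have been collected already. A failing functional test contributes only its name, while a static analysis finding carries a file and line, mirroring the contrast the article draws.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    """A static analysis warning; file and line tell the developer where to look."""
    rule: str
    file: str
    line: int
    message: str


@dataclass
class MergeRequest:
    branch: str
    failed_tests: list[str] = field(default_factory=list)
    findings: list[Finding] = field(default_factory=list)


def review(mr: MergeRequest) -> tuple[bool, list[str]]:
    """Hypothetical merge gate: accept only with no failing tests and no open warnings."""
    reasons = [f"functional test failed: {name}" for name in mr.failed_tests]
    reasons += [f"{f.file}:{f.line} [{f.rule}] {f.message}" for f in mr.findings]
    return (not reasons, reasons)


if __name__ == "__main__":
    mr = MergeRequest(
        branch="feature/parser",
        failed_tests=["test_input_a_gives_output_b"],
        findings=[Finding("memory-leak", "src/parser.c", 42, "buffer is never freed")],
    )
    accepted, reasons = review(mr)
    print("accepted" if accepted else "rejected")  # rejected
    for reason in reasons:
        print(reason)
```

Only the static analysis line points at a location (`src/parser.c:42`). The functional test failure tells the developer that something is wrong, not where.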

Quote for the day:

"The mediocre leader tells; the good leader explains; the superior leader demonstrates; and the great leader inspires." -- Buchholz and Roth
