Egregor Ransomware Adds to Data Leak Trend

As with other ransomware gangs, such as Maze and Sodinokibi, the operators

behind the Egregor ransomware are threatening to leak victims' data if the

ransom demands are not met within three days, according to an Appgate alert.

The cybercriminals linked to Egregor are also taking a page from the Maze

playbook, creating a "news" site on the darknet that offers a list of victims

that have been targeted and updates about when stolen and encrypted data will

be released, according to the alert. "Egregor's ransom note also says that

aside from decrypting all the files in the event the company pays the ransom,

they will also provide recommendations for securing the company's network,

'helping' them to avoid being breached again, acting as some sort of 'black

hat pentest team,'" according to Appgate. It's not clear how much ransom the

operators behind Egregor are demanding or if any data has been leaked,

according to Appgate. A copy of one ransom note posted online states that the

cybercriminals plan to release stolen data through what they call "mass

media." While Appgate released an alert to customers on Friday, the Egregor

ransomware variant was first spotted in mid-September by several independent

security researchers, including Michael Gillespie, who posted samples of the

ransom note on Twitter.

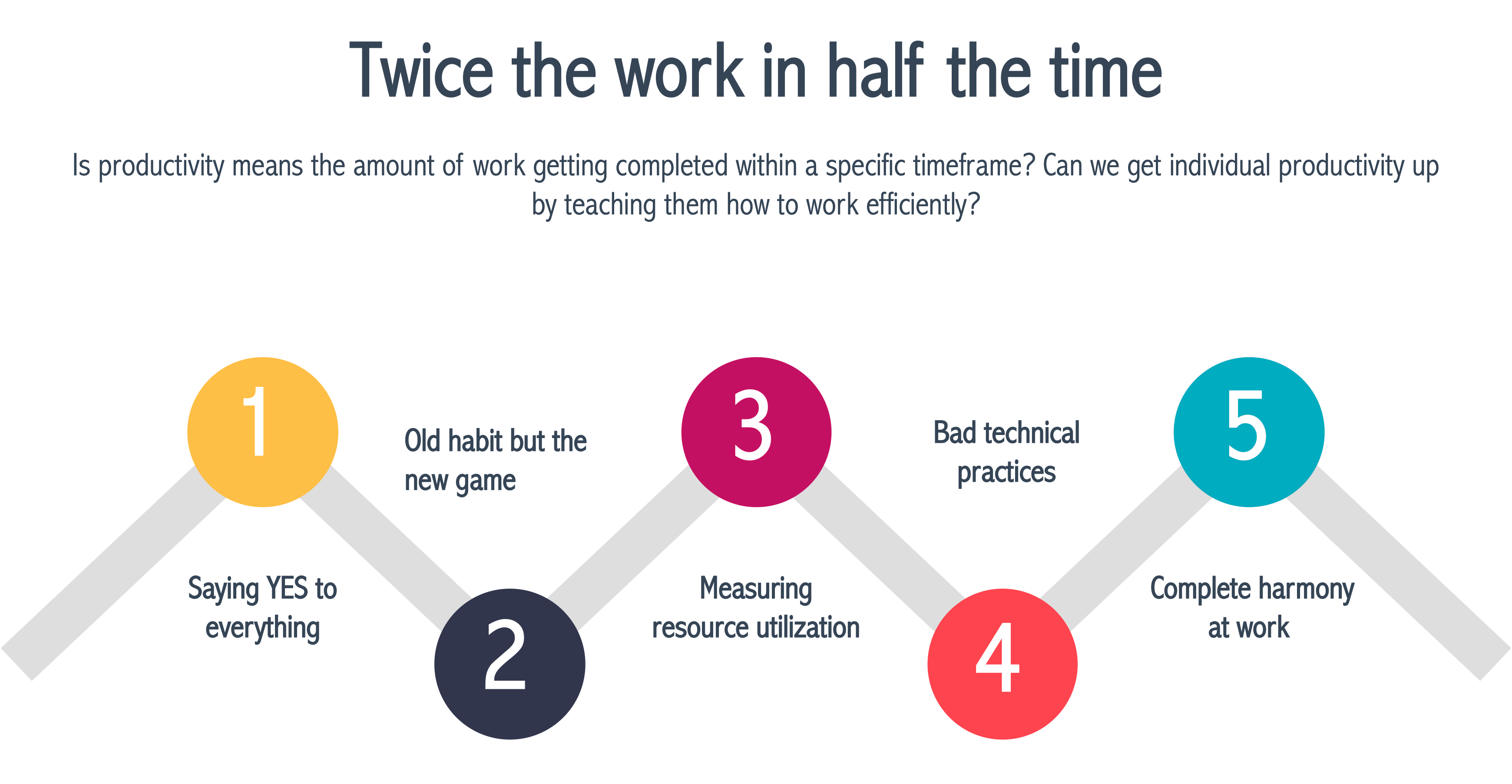

Five reasons why Scrum is not helping in getting twice the work done in half the time

Do you measure the velocity of the team? Do you calculate how long a person

was busy doing something? Do you measure estimated time for a task vs. actual

time spent? Or do you measure things like defects per story, defect removal

efficiency, and code coverage? None of the above is harmful as long as

it is used for the right purposes, like velocity for forecasting and code

coverage for quality of code. But it makes more sense to measure time to

market, customer satisfaction, NPS, usage index, response time, and

innovation rate. If you were releasing once a year and are now releasing every

quarter, you are already releasing four times as often, but would you like to stop here?

Look at how much time your team takes from development to deployment in

production. ... We wanted people to reach their destinations faster by driving faster. We

taught them how to drive, manage traffic well, and put instructions

everywhere, but people are still not going above 40 km an hour, although

overall travel time has improved because there are fewer problems while driving. When

we checked, people complained about the 20-year-old cars they have been driving.

Our teams have a similar story.
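
The release-cadence arithmetic and the development-to-deployment time mentioned above can be checked with a few lines of Python. This is only a minimal sketch: the function names and the sample dates are hypothetical, not taken from the article. It shows that moving from one release a year to four is four times the old rate, which is the same as a 300% increase.

```python
from datetime import datetime


def release_rate_change(old_per_year, new_per_year):
    """Return (multiple, percent_increase) between two release rates."""
    multiple = new_per_year / old_per_year
    percent_increase = (multiple - 1) * 100
    return multiple, percent_increase


def lead_time_days(dev_started, deployed):
    """Days elapsed between starting development and deploying to production."""
    return (deployed - dev_started).total_seconds() / 86400


# Yearly releases (1 per year) versus quarterly releases (4 per year).
multiple, percent_increase = release_rate_change(1, 4)

# Hypothetical timestamps for a single change, for illustration only.
sample_lead_time = lead_time_days(datetime(2020, 9, 1), datetime(2020, 9, 15))
```

Tracking the lead time per change, rather than how busy each person was, puts the focus on the time-to-market measures the excerpt recommends.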

How technology will shape the future of the workplace

Organisations often find it challenging to carry out business transformation

projects successfully — and shaping the future of the workplace is no

different. While there may be a willingness to change, there are many ways

that change projects become stuck in the mire, their momentum stalled by

hundreds of micro-actions taken (and not taken) throughout the organisation.

The pandemic changed things. Businesses have learned that a major change

project that would normally have taken six months to a year — such as enabling

everyone to work remotely — can be done much faster. Necessity is indeed the

mother of invention; innovation happens when people and organisations realise

they have to act fast to stay competitive. ... As virtual working becomes less

novel, more businesses will explore ways that they can support their employees

and keep the team working efficiently. We’ll also start to see a re-evaluation

of what working means. The days when it was defined by who sat at their desk

the longest had already started to wane before the pandemic hit. Now, with the

freedom to be creative that lockdown granted business leaders, companies are

starting to look beyond hours worked and things produced and towards the

quality of that work and the effect it has on the goals of the business.

Data Management skills

Nowadays, the digital transformation is actually about applying a data-driven

approach to every aspect of the business in an effort to create a competitive

advantage. That's why more and more companies want to build their own data

lake solutions. This trend continues, and those skills are still in

demand. The most popular tools here are still HDFS for the on-prem solution and

cloud data storage solutions from AWS, GCP, and Azure. Aside from that, there

are also some data platforms that are trying to fill several niches and create

integrated solutions, for example, Cloudera, Apache Hudi, and Delta Lake. ...

There are Data Warehouses where the information is sorted, ordered, and

presented in the form of final conclusions (the rest is discarded), and Data

Lakes — "dump everything here, because you never know what will be useful".

Data Hub is focused on those who do not belong to either the first or the

second category. The Data Hub architecture allows you to leave your data where

it is, providing centralization of the processing but not the storage. The

data is searched and accessed right where it is located at the moment. But,

because the Data Hub is planned and managed, organizations must invest

significant time and energy determining what their data means, where it comes

from and what transformations it must complete before it can be put into the

Data Hub.
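
The idea of centralizing processing while leaving storage where it is can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: `InMemorySource`, `DataHub`, and the sample records are hypothetical and do not correspond to any particular product. The hub holds only references to the sources, sends each search to the place where the data lives, and combines the matches.

```python
class InMemorySource:
    """Hypothetical stand-in for a system that keeps its own data."""

    def __init__(self, name, records):
        self.name = name
        self._records = records  # the data never leaves the source wholesale

    def search(self, predicate):
        """Run the search where the data lives and return only the matches."""
        return [record for record in self._records if predicate(record)]


class DataHub:
    """Central processing layer: stores references to sources, not their data."""

    def __init__(self):
        self._sources = {}

    def register(self, source):
        self._sources[source.name] = source

    def search(self, predicate):
        """Send the query to every source and tag each hit with its origin."""
        hits = []
        for name, source in self._sources.items():
            for record in source.search(predicate):
                hits.append({"source": name, **record})
        return hits


hub = DataHub()
hub.register(InMemorySource("crm", [{"customer": "acme", "tier": "gold"}]))
hub.register(InMemorySource("billing", [
    {"customer": "acme", "balance": 120},
    {"customer": "globex", "balance": 0},
]))

acme_hits = hub.search(lambda record: record.get("customer") == "acme")
```

The sketch leaves out the planning work the excerpt warns about: agreeing on what each field means, where it comes from, and how it must be transformed before the hub can expose it.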

These 10 tech predictions could mean huge changes ahead

According to Ashenden, the need to support creativity and innovation is urgent

for businesses in the current context. As a result, the tools that enable

collaboration are getting a huge boost – and not a short-term one. "Those

areas will become much more central going forward," she said. "A lot of work

processes that once relied on face-to-face have gone digital now, and that

won't go back. Even when people are back in the office – once these things

live in a digital world, that's where they live." Connectivity, according to

CCS Insight, will also change as a result of the switch to remote work. From

next year, the firm expects network operators to offer dedicated "work from

home" packages to businesses, differentiating between corporate and personal

usage, so that employers can provide staff with appropriate services such as

security, collaboration tools and IT support. Operators will also increase

their focus on connectivity in suburban zones, rather than city centers, as

the workforce becomes increasingly established outside of the office. And as

connectivity becomes ever more important, the research firm predicts that the

next three years will be rocked by governments' actions to better protect

their national telecom infrastructure.

Improving WebAssembly and Its Tooling -- Q&A with Wasmtime’s Nick Fitzgerald

It’s about discovering otherwise hidden and hard-to-find bugs. There’s a ton

that we miss with basic unit testing, where we write out some fixed set of

inputs and assert that our program produces the expected output. We overlook

some code paths or we fail to exercise certain program states. The reliability

of our software suffers. We are fallible, but at least we can recognize our

limitations and compensate for them. Testing pseudo-random inputs helps us avoid

our own biases by feeding our system “unexpected” inputs. It helps us find

integer overflow bugs or pathological inputs that allow (untrusted and

potentially hostile) users to trigger out-of-memory bugs or timeouts that could

be leveraged as part of a denial of service attack. Some people are familiar

with testing pseudo-random inputs via “property-based testing” where you assert

that some property always holds and then the testing framework tries to find

inputs where your invariant is violated. For example, if you are implementing

the reverse method for an array, you might assert the property that reversing an

array twice yields the original array.
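
The reverse-twice property described above can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard library's `random` module rather than a real property-based testing framework; the `reverse` implementation, the generator, and the trial count are assumptions chosen for the example, not anything from the interview.

```python
import random


def reverse(items):
    """Return a new list with the elements of `items` in reverse order."""
    result = []
    for item in items:
        result.insert(0, item)
    return result


def random_int_list(rng, max_len=20):
    """Generate a pseudo-random list of integers, possibly empty."""
    length = rng.randint(0, max_len)
    return [rng.randint(-1000, 1000) for _ in range(length)]


def find_counterexample(trials=1000, seed=0):
    """Return the first input that violates the property, or None."""
    rng = random.Random(seed)  # fixed seed so a failure can be reproduced
    for _ in range(trials):
        original = random_int_list(rng)
        if reverse(reverse(original)) != original:
            return original
    return None


counterexample = find_counterexample()
```

A dedicated framework would additionally shrink a failing input to a smaller one and explore edge cases more systematically; the loop here only shows the core idea of asserting an invariant over generated inputs.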

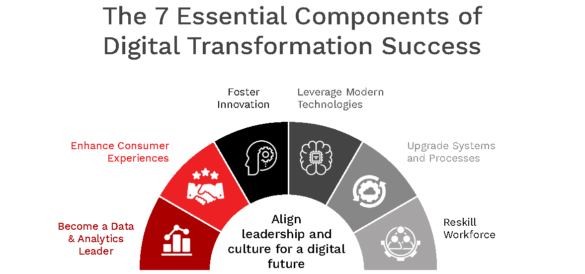

7 Essentials of Digital Transformation Success

Consumers have come to expect organizations to use their personal

information to create custom solutions. Especially during the pandemic,

consumers have become accustomed to the benefits of Netflix and Spotify

using machine learning for entertainment recommendations, Zoom using just a

couple clicks to create video engagement, and Google Home or Amazon Alexa

using voice for everything from answering inquiries to simplifying shopping.

These same consumers expect their bank or credit union to use their

relationship data, behaviors and preferences the same way … or better. But,

advanced analytics and AI should not be a goal in and of itself. These tools

should be used to support broader strategies. According to Wharton, “Instead

of exhaustively looking for all the areas AI could fit in, a better approach

would be for companies to analyze existing goals and challenges with a close

eye for the problems that AI is uniquely equipped to solve.” Some solutions

include everything from fraud detection to facilitating predictive solution

recommendations for customers. Now more than ever, AI needs to be used to

deliver human-like intelligence across the entire organization.

Inadequate skills and employee burnout are the biggest barriers to digital transformation

The ongoing disruption of the pandemic has shown how important it can be for

businesses to be built for change. Many executives are facing demand

fluctuations, new challenges to support employees working remotely and

requirements to cut costs. In addition, the study reveals that the majority

of organizations are making permanent changes to their organizational

strategy. For instance, 94% of executives surveyed plan to participate in

platform-based business models by 2022, and many reported they will increase

participation in ecosystems and partner networks. Executing these new

strategies may require a more scalable and flexible IT infrastructure.

Executives are already anticipating this: the survey showed respondents plan

a 20 percentage point increase in prioritization of cloud technology in the

next two years. What’s more, executives surveyed plan to move more of their

business functions to the cloud over the next two years, with customer

engagement and marketing being the top two cloudified functions. COVID-19

has disrupted critical workflows and processes at the heart of many

organizations’ core operations. Technologies like AI, automation and

cybersecurity that could help make workflows more intelligent, responsive

and secure are increasing in priority across the board for responding global

executives.

Is Cloud Migration a Path to Carbon Footprint Reduction?

Energy efficiency within an enterprise may go hand in hand with other

organizational traits, according to the report. Accenture’s research from

2013 to 2019 found that companies that consistently earned high marks on

environmental, social, and governance performance also saw operating margins

4.7x higher than organizations with lower performance in those areas. There

were also indications of higher annual returns to shareholders among those

environmentally minded enterprises. In addition to the potential benefit

cloud migration presents for the environment, Accenture’s report shows there

can be total cost of ownership savings of up to 30-40% when organizations

migrate to more cost-efficient public clouds. The report also shed light on

how cloud migration affected Accenture’s expenses. The firm runs 95% of its

applications in the cloud, the report says. After its third year of

migration, Accenture saw $14.5 million in benefits, plus another $3 million

in annualized costs saved by right-sizing its service consumption. Moving to

the cloud might not mean much in terms of cutting energy consumption if the

service provider does not take steps to be more energy efficient.

Neuromorphic computing could solve the tech industry's looming crisis

Rather than separate out the memory and computing like most chips in use

today, neuromorphic hardware keeps both together, with processors having their

own local memory -- a more brain-like arrangement -- that saves energy and

speeds up processing. Neuromorphic computing could also help spawn a new wave

of artificial intelligence (AI) applications. Current AI is usually narrow and

developed by learning from stored data, developing and refining algorithms

until they reliably match a particular outcome. Using neuromorphic tech's

brain-like strategies, however, could allow AI to take on new tasks. Because

neuromorphic systems can work like the human brain -- able to cope with

uncertainty, adapt, and use messy, confusing data from the real world -- it

could lay the foundations for AIs to become more general. "The more brain-like

workloads approximate computing, where there's more fuzzy associations that

are in play -- this rapid adaptive behaviour of learning and self modifying

the programme, so to speak. These are types of functions that conventional

computing is not so efficient at and so we were looking for new architectures

that can provide breakthroughs," says Mike Davies.

Quote for the day:

"It's not the position that makes the leader. It's the leader that makes the position." -- Stanley Huffty
