5 best practices for software development partnerships

“The key to successful co-creation is ensuring your partner is not just doing

their job, but acting as a true strategic asset and advisor in support of your

company’s bottom line,” says Mark Bishopp, head of embedded payments/finance and

partnerships at Fortis. “This begins with asking probing questions during the

prospective stage to ensure they truly understand, through years of experience

on both sides of the table, the unique nuances of the industries you’re working

in.” Beyond asking questions about skills and capabilities, evaluate the

partner’s mindset, risk tolerance, approach to quality, and other areas that

require alignment with your organization’s business practices and culture. ...

To eradicate the us-versus-them mentality, consider shifting to more open,

feedback-driven, and transparent practices wherever feasible and compliant.

Share information on performance issues and outages, have everyone participate

in retrospectives, review customer complaints openly, and disclose the most

challenging data quality issues.

Revolutionizing Algorithmic Trading: The Power of Reinforcement Learning

The fundamental components of a reinforcement learning system are the agent, the

environment, states, actions, and rewards. The agent is the decision-maker, the

environment is what the agent interacts with, states are the situations the

agent finds itself in, actions are what the agent can do, and rewards are the

feedback the agent gets after taking an action. One key concept in reinforcement

learning is the idea of exploration vs exploitation. The agent needs to balance

between exploring the environment to find out new information and exploiting the

knowledge it already has to maximize the rewards. This is known as the

exploration-exploitation tradeoff. Another important aspect of reinforcement

learning is the concept of a policy. A policy is a strategy that the agent

follows while deciding on an action from a particular state. The goal of

reinforcement learning is to find the optimal policy, which maximizes the

expected cumulative reward over time. Reinforcement learning has been

successfully applied in various fields, from game playing (like the famous

AlphaGo) to robotics (for teaching robots new tasks).
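
As a minimal sketch of the exploration-exploitation tradeoff described above, the snippet below runs an epsilon-greedy agent on a toy multi-armed bandit. The function name, the reward means, the noise level, and the epsilon value are all assumptions chosen for illustration; none of them come from the article, and a real trading environment would be far more complex.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, noise=0.1, seed=0):
    """Run an epsilon-greedy agent on a toy multi-armed bandit.

    true_means: hypothetical expected reward per action (hidden from the agent).
    Returns the agent's value estimates and how often it took each action.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # the agent's current knowledge
    counts = [0] * n_actions

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action to gather new information.
            action = rng.randrange(n_actions)
        else:
            # Exploit: pick the action with the highest estimated reward.
            action = max(range(n_actions), key=lambda a: estimates[a])

        # The environment returns a noisy reward for the chosen action.
        reward = rng.gauss(true_means[action], noise)

        # Incremental average keeps a running estimate of each action's value.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.1, 0.5, 0.9])
# The learned (greedy) policy: always take the action with the best estimate.
greedy_policy = max(range(len(estimates)), key=lambda a: estimates[a])
```

With epsilon set to 0 the agent would only exploit and could lock onto the first action it tries; with epsilon set to 1 it would only explore and never use what it has learned.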

Data Governance Roles and Responsibilities

Executive-level roles include leadership in the C-suite at the organization’s

top. According to Seiner, people at the executive level support, sponsor, and

understand Data Governance and determine its overall success and traction.

Typically, these managers meet periodically as part of a steering committee to

cover broadly what is happening in the organization, so they would add Data

Governance as a line item, suggested Seiner. These senior managers take

responsibility for understanding and supporting Data Governance. They keep up to

date on Data Governance progress through direct reports and communications from

those at the strategic level. ... According to Seiner, strategic members take

responsibility for learning about Data Governance, reporting to the executive

level about the program, being aware of Data Governance activities and

initiatives, and attending meetings or sending alternates. Moreover, this group

has the power to make timely decisions about Data Governance policies and how to

enact them.

Effective Test Automation Approaches for Modern CI/CD Pipelines

Design is not just about unit tests though. One of the biggest barriers to test

automation executing directly in the pipeline is that the team that deals with

the larger integrated system only starts a lot of their testing and automation

effort once the code has been deployed into a bigger environment. This wastes

critical time in the development process, as certain issues will only be

discovered later. The design should contain enough detail to allow testers to at

least start writing the majority of their automated tests while the developers

are coding on their side. This doesn’t mean that manual verification, exploratory

testing, and actually using the software shouldn’t take place. Those are

critical parts of any testing process and are important steps to ensuring

software behaves as desired. These approaches are also effective at finding

faults with the proposed design. However, automating the integration tests

allows the process to be streamlined.
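
One way to start writing integration tests before the code is deployed is to test against an interface agreed at design time and use a stand-in for the part that does not exist yet. The sketch below illustrates that idea only; `PaymentGateway`, `FakeGateway`, and `checkout` are hypothetical names invented for this example and are not taken from the article.

```python
from typing import List, Protocol, Tuple


class PaymentGateway(Protocol):
    """Hypothetical interface agreed at design time, before the real service exists."""

    def charge(self, account_id: str, amount_cents: int) -> bool: ...


class FakeGateway:
    """Test double standing in for the integration that is not deployed yet."""

    def __init__(self) -> None:
        self.charges: List[Tuple[str, int]] = []

    def charge(self, account_id: str, amount_cents: int) -> bool:
        # Record the call so the test can check what was sent to the gateway.
        self.charges.append((account_id, amount_cents))
        return True


def checkout(gateway: PaymentGateway, account_id: str, item_prices_cents: List[int]) -> str:
    """Hypothetical code under test: charge the cart total through the gateway."""
    total = sum(item_prices_cents)
    if total <= 0:
        return "rejected"
    return "paid" if gateway.charge(account_id, total) else "failed"


def test_checkout_charges_cart_total() -> None:
    gateway = FakeGateway()
    assert checkout(gateway, "acct-1", [500, 250]) == "paid"
    assert gateway.charges == [("acct-1", 750)]


def test_empty_cart_is_rejected_without_charging() -> None:
    gateway = FakeGateway()
    assert checkout(gateway, "acct-1", []) == "rejected"
    assert gateway.charges == []


# Run the tests directly; in a pipeline a test runner would collect them.
test_checkout_charges_cart_total()
test_empty_cart_is_rejected_without_charging()
```

Once the real gateway is available in the larger environment, the same tests could be pointed at it by swapping the fake for the real implementation.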

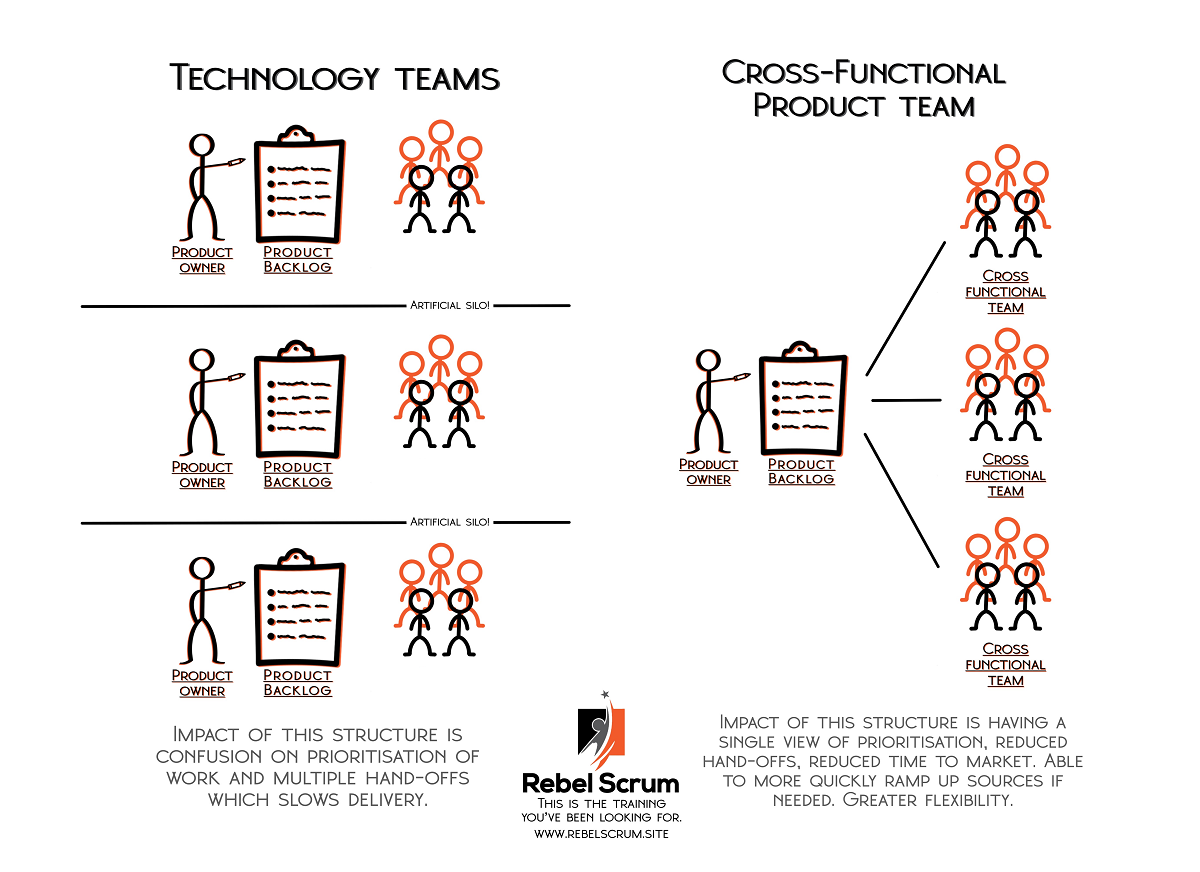

What Does Being a Cross-Functional Team in Scrum Mean?

By bringing together individuals with different skills and perspectives, these

teams promote innovation, problem-solving, and a holistic approach to project

delivery. They reduce the handoffs, bottlenecks, and communication barriers that

often plague traditional development models. Moreover, cross-functional teams enable

faster feedback cycles and facilitate continuous improvement. With all the

necessary skills in one team, there's no need to wait for handoffs or external

dependencies. This enables quicker decision-making, faster iterations, and the

ability to respond to customer feedback promptly. In short, being a

cross-functional Scrum Team means having a group of individuals with diverse

skills, a shared sense of responsibility, and a collaborative mindset. They work

together autonomously, leveraging their varied expertise to deliver high-quality

software increments. ... Building genuinely cross-functional Scrum Teams starts

with product definition. This means identifying and understanding the scope,

requirements, and goals of the product the team will work on.

The strategic importance of digital trust for modern businesses

Modern software development processes, like DevOps, are highly automated. An

engineer clicks a button that triggers a sequence of complicated, but automated,

steps. If a part of this sequence (e.g., code signing) is manual, that step is

likely to be missed because everything else is automated.

Mistakes like using the wrong certificate or the wrong command line options can

happen. However, the biggest danger is often that the developer will store

private code signing keys in a convenient location (like their laptop or build

server) instead of a secure location. Key theft, misused keys, server breaches,

and other insecure processes can permit code with malware to be signed and

distributed as trusted software. Companies need a secure, enterprise-level code

signing solution that integrates with the CI/CD pipeline and automated DevOps

workflows but also provides key protection and code signing policy

enforcement.

Managing IT right starts with rightsizing IT for value

IT financial management — sometimes called FinOps — is overlooked in many

organizations. A surprising number of organizations do not have a very good

handle on the IT resources being used. Another way of saying this is: Executives

do not know what IT they are spending money on. CIOs need to make IT spend

totally transparent. Executives need to know what the labor costs are, what the

application costs are, and what the hardware and software costs are that support

those applications. The organization needs to know everything that runs — every

day, every month, every year. IT resources need to be matched to business units.

IT and the business unit need to have frank discussions about how important that

IT resource really is to them — is it Tier One? Tier Two? Tier Thirty? In the

data management space — same story. Organizations have too much data. Stop

paying to store data you don’t need and don’t use. Atle Skjekkeland, CEO at

Norway-based Infotechtion, and John Chickering, former C-level executive at

Fidelity, both insist that organizations, “Define their priority data, figure

out what it is, protect it, and get rid of the rest.”

Implementing Risk-Based Vulnerability Discovery and Remediation

A risk-based vulnerability management program is a complex preventative

approach used for swiftly detecting and ranking vulnerabilities based on

their potential threat to a business. By implementing a risk-based

vulnerability management approach, organizations can improve their security

posture and reduce the likelihood of data breaches and other security

events. ... Organizations should still have a methodology for testing and

validating that patches and upgrades have been appropriately implemented and

would not cause unanticipated flaws or compatibility concerns that might

harm their operations. Also, remember that there is no "silver bullet":

automated vulnerability management can help identify and prioritize

vulnerabilities, making it easier to direct resources where they are most

needed. ... Streamlining your patching management is another crucial

part of your security posture: an automated patch management system is a

powerful tool that may assist businesses in swiftly and effectively applying

essential security fixes to their systems and software.
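
To make the idea of ranking vulnerabilities by their potential threat to the business concrete, here is a minimal sketch. The scoring formula (severity multiplied by asset criticality and exploit likelihood), the field names, and the sample findings are all assumptions for illustration; the article does not prescribe a particular formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vulnerability:
    identifier: str            # hypothetical finding ID
    severity: float            # technical severity on a 0-10 scale
    asset_criticality: float   # 0-1, how important the affected asset is
    exploit_likelihood: float  # 0-1, how likely exploitation is


def risk_score(vuln: Vulnerability) -> float:
    """Assumed scoring rule: weight technical severity by business context."""
    return vuln.severity * vuln.asset_criticality * vuln.exploit_likelihood


def rank_by_risk(vulns: List[Vulnerability]) -> List[Vulnerability]:
    """Return findings ordered from highest to lowest risk score."""
    return sorted(vulns, key=risk_score, reverse=True)


findings = [
    Vulnerability("VULN-A", severity=9.8, asset_criticality=0.2, exploit_likelihood=0.1),
    Vulnerability("VULN-B", severity=6.5, asset_criticality=1.0, exploit_likelihood=0.8),
    Vulnerability("VULN-C", severity=7.5, asset_criticality=0.6, exploit_likelihood=0.5),
]

ranked_ids = [v.identifier for v in rank_by_risk(findings)]
```

In this made-up data, the finding with the highest technical severity ends up last because it sits on a low-value asset and is unlikely to be exploited, which is the point of a risk-based ordering.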

Upskilling the non-technical: finding cyber certification and training for internal hires

“If you are moving people into technical security from other parts of the

organization, look at the delta between the employee's transferrable skills

and the job they’d be moving into. For example, if you need a product

security person, you could upskill a product engineer or product manager

because they know how the product works but may be missing the security

mindset,” she says. “It’s important to identify those who are ready for a

new challenge, identify their transferrable skills, and create career paths

to retain and advance your best people instead of hiring from outside.” ...

While upskilling and certifying existing employees would help the

organization retain talented people who already know the company, Deidre

Diamond, founding CEO of cyber talent search company CyberSN, cautions

against moving skilled workers to entry-level roles in security that don’t

pay what the employees are used to earning. Upskilling financial analysts

into compliance, either as a cyber risk analyst or GRC analyst, will require

higher-level certifications, but the pay for those upskilled positions may

be more equitable for those higher-paid employees, she adds.

Data Engineering in Microsoft Fabric: An Overview

Fabric makes it quick and easy to connect to Azure Data Services, as well as

other cloud-based platforms and on-premises data sources, for streamlined

data ingestion. You can quickly build insights for your organization using

more than 200 native connectors. These connectors are integrated into the

Fabric pipeline and utilize the user-friendly drag-and-drop data

transformation with dataflow. Fabric standardizes on the Delta Lake format,

which means all the Fabric engines can access and manipulate the same

dataset stored in OneLake without duplicating data. This storage system

provides the flexibility to build lakehouses using a medallion architecture

or a data mesh, depending on your organizational requirements. You can choose

a low-code or no-code experience for data transformation using pipelines and

dataflows, or a code-first experience using notebooks and Spark.

Power BI can consume data from the Lakehouse for reporting and

visualization. Each Lakehouse has a built-in TDS/SQL endpoint, for easy

connectivity and querying of data in the Lakehouse tables from other

reporting tools.

Quote for the day:

"Be willing to make decisions.

That's the most important quality in a good leader." -- General George S. Patton, Jr.
