Law enforcement crackdowns and new techniques are forcing cybercriminals to pivot

Because of stepped-up law enforcement efforts, cybercriminals are also facing a crisis in cashing out their cryptocurrencies, with only a handful of laundering vehicles left in place after actions against the crypto-mixers that help obfuscate the money trail. "Eventually, they'll have to cash out to pay for their office space in St. Petersburg to pay for their Lambos. So, they're going to need to find an exchange," Burns Coven said. For now, many cybercriminals are simply sitting on their money, the digital equivalent of stuffing cash under the mattress. "It's been a tumultuous two years for the threat actors," she said. "A lot of law enforcement takedowns, challenging operational environments, and harder to get funds. And we're seeing this sophisticated laundering technique called absolutely nothing doing, just sitting on it." Despite the rising number of challenges, "I don't think there's a mass exodus of threat actors from ransomware," Burns Coven told CSO, adding that threat actors are shifting tactics rather than exiting the business altogether.

5 IT management practices certain to kill IT productivity

Holding people accountable is a form of root cause analysis predicated on the assumption that if something goes wrong, it must be someone’s fault. It’s a flawed assumption because, more often than not, when something goes wrong it’s the result of bad systems and processes, not someone screwing up. When a manager holds someone accountable, they’re really just shifting blame. Managers are, after all, accountable for their organization’s systems and processes, aren’t they? Second problem: If you hold people accountable when something goes wrong, they’ll do their best to conceal the problem from you, and the longer nobody deals with a problem, the worse it gets. One more: If you hold people accountable whenever something doesn’t work, they’re unlikely to take any risks, because why would they? Why it’s a temptation: Compared to serious root cause analysis, finding someone to blame is easy, and compared to improving systems and practices, fixing the “problem” is child’s play. As someone once said, hard work pays off sometime in the indefinite future, but laziness pays off right now.

How AI ethics is coming to the fore with generative AI

The discussion of AI ethics often starts with a set of principles guiding the moral use of AI, which are then applied in responsible AI practices. The most common ethical principles include being human-centric and socially beneficial, being fair, offering explainability and transparency, being secure and safe, and showing accountability. ... “But it’s still about saving lives and while the model may not detect everything, especially the early stages of breast cancer, it’s a very important question,” Sicular says. “And because of its predictive nature, you will not have everyone answering the question in the same fashion. That makes it challenging because there’s no right or wrong answer.” ... “With generative AI, you will never be able to explain 10 trillion parameters, even if you have a perfectly transparent model,” Sicular says. “It’s a matter of AI governance and policy to decide what should be explainable or interpretable in critical paths. It’s not about generative AI per se; it’s always been a question for the AI world and a long-standing problem.”

Design Patterns Are A Better Way To Collaborate On Your Design System

You probably don’t think of your own design activities as a “pattern-making” practice, but the idea overlaps usefully with the practice of making a design system. The trick is to collaborate with your team to find the design patterns in your own product design: the parts that repeat, in different variations, and that you can reuse. Once you find them, they are a powerful tool for making design systems work with a team. ... All designers and developers can make their design system better and more effective by focusing on patterns first (instead of individual elements), making sure that each is completely reusable and polished for any context in their product. Pattern work can be a fully integrated part of both getting some immediate work done and maintaining a design system. ... This kind of design pattern activity can be a direct path for designers and developers to collaborate, to align the way things are designed with the way they are built, and vice versa. For that purpose, a pattern does not have to be a polished design. It can be a rough outline or wireframe that designers and developers make together. It needs no special skills and can be started and iterated on by anyone.

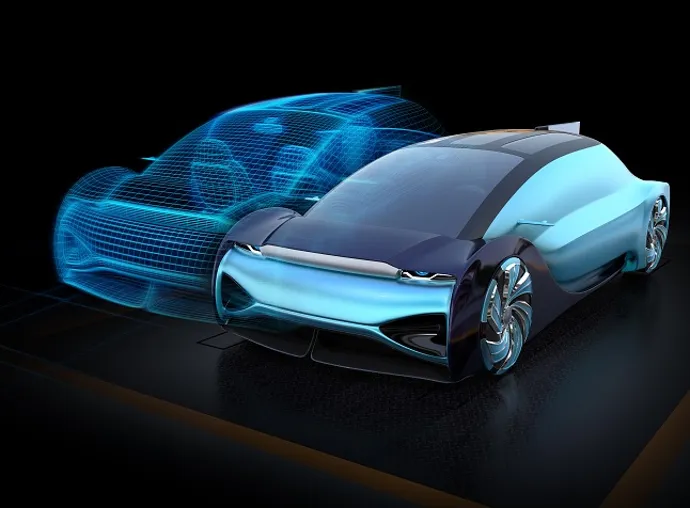

Digital Twin Technology: Revolutionizing Product Development

Digital twin technology accelerates product development, reducing time to market and improving product performance, Norton says. The ability to design and develop products using computer-aided design and advanced simulation techniques can also facilitate collaboration, enable data-driven decision-making, engineer a market advantage, and reduce design churn. “Furthermore, developing an integrated digital thread can enable digital twins across the product lifecycle, further improving product design and performance by utilizing feedback from manufacturing and the field.” Using digital twins and generative design upfront allows better-informed product design, enabling teams to generate a variety of possible designs based on ranked requirements and then run simulations on their proposed design, Marshall says. “Leveraging digital twins during the product use-cycle allows them to get data from users in the field in order to get feedback for better development,” she adds. Digital twin investments should always be aimed at driving business value.

DevEx, a New Metrics Framework From the Authors of SPACE

Organizations can improve developer experience by identifying the top points of friction that developers encounter, and then investing in improvements that will increase developers' capacity or satisfaction. For example, an organization can focus on reducing friction in development tools so that developers can complete tasks more seamlessly. Even a small reduction in wasted time, when multiplied across an engineering organization, can have a greater impact on productivity than hiring additional engineers. ... The first task for organizations looking to improve their developer experience is to measure where friction exists across the three previously described dimensions. The authors recommend selecting topics within each dimension to measure, capturing both perceptual and workflow metrics for each topic, and also capturing KPIs to stay aligned with the intended higher-level outcomes. ... The DevEx framework offers a practical way to understand developer experience, while the accompanying measurement approaches help guide improvement systematically.
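The measurement approach described in this piece can be sketched as a small data model. This is a minimal Python sketch under stated assumptions, not the authors' tooling: the dimension names (feedback loops, cognitive load, flow state) are assumed from the published DevEx framework, while the `Topic` and `DevExPlan` types, the topic names, metric names, KPI name, and all numbers are hypothetical. Each topic pairs perceptual metrics (for example, survey scores) with workflow metrics (for example, system-measured times), and the plan also records KPIs for the higher-level outcomes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Topic:
    """One measurable topic within a DevEx dimension (hypothetical structure)."""
    name: str
    perceptual: dict[str, float] = field(default_factory=dict)  # e.g. survey scores on a 1-5 scale
    workflow: dict[str, float] = field(default_factory=dict)    # e.g. system-measured durations


@dataclass
class DevExPlan:
    # Dimension name -> topics selected for measurement in that dimension.
    dimensions: dict[str, list[Topic]]
    # KPIs that keep the measurements aligned with higher-level outcomes.
    kpis: dict[str, float] = field(default_factory=dict)

    def incomplete_topics(self) -> list[str]:
        """Topics still missing either a perceptual or a workflow metric."""
        return [
            f"{dim}/{t.name}"
            for dim, topics in self.dimensions.items()
            for t in topics
            if not t.perceptual or not t.workflow
        ]

    def friction_ranking(self, metric: str) -> list[tuple[str, float]]:
        """Rank topics by a shared perceptual metric, lowest score (most friction) first."""
        scored = [
            (f"{dim}/{t.name}", t.perceptual[metric])
            for dim, topics in self.dimensions.items()
            for t in topics
            if metric in t.perceptual
        ]
        return sorted(scored, key=lambda item: item[1])


# Hypothetical example data; dimension names assumed from the DevEx framework.
plan = DevExPlan(
    dimensions={
        "feedback loops": [
            Topic("code review", {"satisfaction": 2.8}, {"median_review_hours": 20.0}),
        ],
        "cognitive load": [
            Topic("documentation", {"satisfaction": 3.9}, {"doc_searches_per_day": 6.0}),
        ],
        "flow state": [
            Topic("interruptions", {"satisfaction": 3.1}),  # no workflow metric yet
        ],
    },
    kpis={"change_failure_rate": 0.12},
)

print(plan.friction_ranking("satisfaction"))
# → [('feedback loops/code review', 2.8), ('flow state/interruptions', 3.1), ('cognitive load/documentation', 3.9)]
print(plan.incomplete_topics())
# → ['flow state/interruptions']
```

Keeping perceptual and workflow metrics side by side makes gaps visible: in this sketch, `incomplete_topics()` flags the interruptions topic because it has a survey score but no workflow measurement yet, while `friction_ranking()` points to the lowest-scoring topic as a candidate for investment.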

While many companies have altruistic intentions, the reality is that most organizations are beholden to stakeholders whose chief interests are profit and growth. If AI tools help achieve those objectives, some companies will undoubtedly be indifferent to the tools' downstream consequences, negative or otherwise. Therefore, efforts to address corporate accountability around AI will likely start outside the industry, in the form of regulation.

Currently, corporate regulation is fairly straightforward. Discrimination, for instance, is unlawful and definable. We can make clean judgments about matters of discrimination because we understand the difference between male and female, or a person’s origin or disability. But AI presents a new wrinkle. How do you define these things in a world of virtual knowledge? How can you control them? Additionally, a serious evaluation of what a company is deploying is necessary. What kind of technology is being used? Is it critical to the public? How might it affect others? Consider airport security.

Prepare for generative AI with experimentation and clear guidelines

Your first step should be deciding where to put generative AI to work in your company, both in the short term and into the future. In a recent report, Boston Consulting Group (BCG) calls these your “golden” use cases: “things that bring true competitive advantage and create the largest impact” compared to using today’s tools. Gather your corporate brain trust to start exploring these scenarios. Look to your strategic vendor partners to see what they’re doing; many are planning to incorporate generative AI into software ranging from customer service to freight management. Some of these tools already exist, at least in beta form. Offer to help test these apps; doing so will teach your teams about generative AI technology in a context they’re already familiar with. ... To help discern which applications will benefit most from generative AI in the next year or so, get the technology into the hands of key user departments, whether marketing, customer support, sales, or engineering, and crowdsource some ideas. Give people the time and tools to start trying it out, to learn what it can do and what its limitations are.

Cyberdefense will need AI capabilities to safeguard digital borders

Speaking at CSIT's twentieth anniversary celebrations, where he announced the launch of the training scheme, Teo said: "Malign actors are exploiting technology for their nefarious goals. The security picture has, therefore, evolved. Malicious actors are using very sophisticated technologies and tactics, whether to steal sensitive information or to take down critical infrastructure for political reasons or for profit.

"Ransomware attacks globally are bringing down digital government services for extended periods of time. Corporations are not spared. Hackers continue to breach sophisticated systems and put up stolen personal data for sale, and classified information." Teo also said that deepfakes and bot farms are generating fake news to manipulate public opinion, and that increasingly sophisticated content blurring the line between fact and fiction is likely to emerge as generative AI tools, such as ChatGPT, mature and become widely available. "Threats like these reinforce our need to develop strong capabilities that will support our security agencies and keep Singapore safe," the minister said.

Five key signs of a bad MSP relationship – and what to do about them

Red flags to look out for here include overly long and unnecessarily complicated contracts. These are often signs of MSPs making lofty promises, trying to tie you into a longer project, and pre-emptively raising bureaucratic walls that make it harder to access the services you are entitled to. The advice here is simple: don’t rush the contract signing. Instead, ensure that the draft contract is passed through the necessary channels so that all stakeholders have complete oversight. Also, do not be tempted by outlandish promises; think pragmatically about what you want to achieve with your MSP relationship, and make sure the contract reflects your goals. If you’re already locked into a contract, consider renegotiating specific terms. ... If projects are running behind schedule and issues are coming up regularly, this is a sign that your project lacks proper project management leadership. Of course, both parties will need some time at the start of a project to get processes running smoothly, but if you’re deep into a contract and still experiencing delays and setbacks, this is a sign that all is not well at your MSP.

Quote for the day:

"The greatest thing is, at any moment, to be willing to give up who we are in order to become all that we can be." -- Max de Pree
