From Agile to DevOps to DevSecOps: The Next Evolution

While Agile and DevOps share common goals, they have not always agreed on how to achieve them. DevOps differs from Agile in many respects, but at its best, DevOps applies Agile methodologies, along with lean manufacturing principles, to speed up software deployment. One area of particular tension is that DevOps relies heavily on tools, particularly for automating testing and deployment. But DevOps can overcome Agile developers’ resistance to tools simply by applying Agile principles themselves. Effectively, DevOps proponents must convince Agile teams that dogmatic adherence to Agile’s underlying principles is itself inconsistent with Agile. Ironically, Agile developers who insist that processes and tools are always bad violate Agile principles by refusing to acknowledge the benefits of change, since welcoming change is another Agile principle. The challenge is to get Agile development teams to trust DevOps automation efforts while encouraging DevOps teams to consider the business goals of deployment rather than pursuing deployment speed for its own sake.

Geoff Hinton’s Latest Idea Has The Potential To Break New Ground In Computer Vision

According to Dr. Hinton, the obvious way to represent the part-whole hierarchy is by combining dynamically created graphs with neural network learning techniques. But if the whole computer is a neural network, he explained, it is unclear how to represent part-whole hierarchies that differ for every image if we want the structure of the neural net to be identical for all images. Capsule networks, which Dr. Hinton introduced a couple of years ago, offer one solution: a group of neurons, called a capsule, is set aside for each possible type of object or part in each region of the image. However, the problem with capsules is that they use a mixture to model the set of possible parts. The computer will have a hard time answering questions such as “Is the headlight different from the tyres?” and others like it.

Recent work on neural fields offers a simple way to represent values such as the depth or intensity of an image. It uses a neural network that takes as input a code vector representing the image, along with an image location, and outputs the predicted value at that location.
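To make the input/output shape of a neural field concrete, here is a toy Python sketch, assuming a tiny two-layer network with random, untrained weights. The class name `NeuralField`, the layer sizes and the tanh/sigmoid activations are illustrative assumptions, not details from Dr. Hinton's work; the point is only that one shared network, queried with different code vectors, yields different images.

```python
import math
import random


class NeuralField:
    """Toy neural field: maps (image code vector, x, y) to a predicted value.

    The same weights are shared by every image; only the code vector changes.
    Weights are random (untrained) here, purely to show the input/output shape.
    """

    def __init__(self, code_dim: int, hidden: int = 16, seed: int = 0):
        rng = random.Random(seed)
        self.code_dim = code_dim
        in_dim = code_dim + 2  # code vector plus the (x, y) location
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def __call__(self, code: list[float], x: float, y: float) -> float:
        if len(code) != self.code_dim:
            raise ValueError(f"expected a code vector of length {self.code_dim}")
        inputs = list(code) + [x, y]
        # Hidden layer: tanh(W1 . inputs + b1)
        hidden = [
            math.tanh(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        # Output squashed by a sigmoid so the value lies in (0, 1), like an intensity
        z = sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
        return 1.0 / (1.0 + math.exp(-z))

    def render(self, code: list[float], width: int, height: int) -> list[list[float]]:
        """Query the field at each pixel centre (normalised to [0, 1]) to rebuild an image."""
        return [
            [self(code, (col + 0.5) / width, (row + 0.5) / height) for col in range(width)]
            for row in range(height)
        ]


field = NeuralField(code_dim=4)
image_a = field.render([1.0, 0.0, 0.0, 0.0], width=3, height=2)
image_b = field.render([0.0, 1.0, 0.0, 0.0], width=3, height=2)
```

Training (fitting the shared weights and the per-image codes to real pixels) is deliberately left out; in practice it would be done by gradient descent in a deep learning framework.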

Addressing Security Throughout the Infrastructure DevOps Lifecycle

Keep in mind that developer-first security doesn’t preclude “traditional” cloud security methods, namely monitoring running cloud resources for security and compliance misconfigurations. First of all, unless you’ve achieved 100% parity between IaC and the cloud (unlikely), runtime scanning is essential for complete coverage. You probably still have teams or parts of your environment, perhaps legacy resources, that are provisioned manually through legacy systems or directly in the console, and these need to be monitored continuously. Even if you are mostly covered by IaC, humans make mistakes and SREmergencies are bound to happen. We recently wrote about the importance of cloud drift detection for catching manual changes that create unintended deltas between the configuration in code and the running cloud resources. Insight into those production resources is essential for finding such potentially risky gaps. Runtime scanning has other advantages, too. Because it tracks the actual state of configurations, it’s the only viable way to evaluate configuration changes over time when configuration is managed through multiple methods. Relying solely on build-time findings, without tying them to the actual configuration state at runtime, could result in configuration clashes.
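A drift check boils down to diffing the state declared in IaC against what a runtime scan actually observes. The sketch below assumes both sides have already been normalized into plain dictionaries keyed by resource ID; the function `detect_drift`, the resource IDs and the attribute names are hypothetical, and real tools must also handle defaults, computed attributes and provider-specific formats.

```python
from dataclasses import dataclass, field


@dataclass
class Drift:
    resource: str
    kind: str  # "missing" (declared, not running), "unmanaged" (running, not declared) or "changed"
    details: dict = field(default_factory=dict)


def detect_drift(declared: dict[str, dict], observed: dict[str, dict]) -> list[Drift]:
    """Compare IaC-declared resources with the state observed by a runtime scan."""
    drifts: list[Drift] = []
    for rid in sorted(set(declared) | set(observed)):
        if rid not in observed:
            drifts.append(Drift(rid, "missing"))
        elif rid not in declared:
            # e.g. a legacy resource provisioned by hand in the console
            drifts.append(Drift(rid, "unmanaged", dict(observed[rid])))
        else:
            want, have = declared[rid], observed[rid]
            # Record every attribute whose declared and running values disagree
            changed = {
                key: (want.get(key), have.get(key))
                for key in sorted(set(want) | set(have))
                if want.get(key) != have.get(key)
            }
            if changed:
                drifts.append(Drift(rid, "changed", changed))
    return drifts


# Hypothetical example data
declared = {
    "bucket/logs": {"public": False, "encryption": "AES256"},
    "sg/web": {"ingress_port": 443},
}
observed = {
    "bucket/logs": {"public": True, "encryption": "AES256"},  # flipped manually
    "vm/legacy-01": {"size": "m5.large"},
}

for d in detect_drift(declared, observed):
    print(d)
```

Each of the three drift kinds maps to a case from the text: manual console changes ("changed"), legacy resources outside IaC ("unmanaged"), and declared resources that never reached production ("missing").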

Privacy breaches in digital transactions: Examination under Competition Law or Data Protection Law?

As long as search engines keep their search data secret, no rival, would-be rival or new entrant will have access to this critical ‘raw material’ for search innovation. Further, when transactions take place in the digital economy, firms generally collect both personal and non-personal data from users in exchange for the services they provide. While it can be argued that personal data is collected with the user’s consent, non-personal data is usually collected without consumers’ consent or knowledge. Data is further compromised when businesses holding large amounts of data merge or amalgamate, and when dominant firms abuse their market position and resort to unethical practices. Traditional Competition Law analysis focuses largely on ‘pricing models’, i.e., the methods businesses use to set the prices of their goods or services. User data forms part of the ‘non-pricing model’. With the Competition Act, 2002 undergoing a number of changes owing to technological developments, non-pricing models may also come to be considered within the ambit of the Act.

GraphQL: Making Sense of Enterprise Microservices for the UI

GraphQL has become an important tool for enterprises looking for a way to expose services via connected data graphs. This graph-oriented way of thinking offers new advantages to partners and customers who want to consume data in a standardized way. Beyond these external consumption benefits, using GraphQL at Adobe has given our UI engineering teams a way to grapple with the challenges of an increasingly complicated world of distributed systems. Adobe Experience Platform itself offers dozens of microservices to its customers, and our engineering teams also rely on a fleet of internal microservices for things like secret management, authentication, and authorization. Breaking services into smaller components in a service-oriented architecture (SOA) brings a lot of benefits to our teams, but some drawbacks need to be mitigated to realize those advantages. More layers mean more complexity. More services mean more communication. GraphQL has been a key component for the Adobe Experience Platform user experience engineering team: one that allows us to embrace the advantages of SOA while helping us navigate the complexities of microservice architecture.
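The core idea that lets a GraphQL layer hide a fleet of microservices is that each field in the schema has a resolver that knows which backend to call, and a query only triggers the resolvers for the fields it selects. The stdlib-only Python sketch below imitates that resolver pattern; it is not a GraphQL implementation (no query parsing, schema or type checking), and the `User` fields and the two stub services are hypothetical.

```python
from typing import Any, Callable

CALLS: list[str] = []  # records which backend services a query touched


# Hypothetical backend microservices, stubbed as plain functions.
def profile_service(user_id: str) -> dict:
    CALLS.append("profile")
    return {"id": user_id, "name": "Ada", "email": "ada@example.com"}


def permissions_service(user_id: str) -> list[str]:
    CALLS.append("permissions")
    return ["read:datasets"] if user_id == "u1" else []


# One resolver per field of a hypothetical `User` type; each knows which service to call.
USER_RESOLVERS: dict[str, Callable[[str], Any]] = {
    "id": lambda uid: uid,  # already known, no service call needed
    "name": lambda uid: profile_service(uid)["name"],
    "email": lambda uid: profile_service(uid)["email"],
    "permissions": permissions_service,
}


def resolve_user(user_id: str, selection: list[str]) -> dict:
    """Return exactly the requested fields, calling only the services they need."""
    unknown = set(selection) - set(USER_RESOLVERS)
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")
    return {name: USER_RESOLVERS[name](user_id) for name in selection}


print(resolve_user("u1", ["id", "permissions"]), CALLS)
```

Requesting both `name` and `email` calls the profile stub twice; real GraphQL servers usually avoid this with a parent resolver or request batching (for example, the DataLoader pattern).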

Serverless Functions for Microservices? Probably Yes, but Stay Flexible to Change

When serverless functions are idle, they cost nothing (the “pay per use” model). If a serverless function is called by 10 clients at the same time, 10 instances of it are spun up almost immediately (at least in most cases). Infrastructure provisioning and management, high availability (at least up to a certain level) and scaling (from 0 up to the limits defined by the client) are all provided out of the box by teams of specialists working behind the scenes. Serverless functions provide elasticity on steroids and allow you to focus on what differentiates your business. ... A “new service” needs to go to market fast, with the lowest possible upfront investment, and needs to be a “good service” from the start. When we want to launch a new service, a FaaS model is likely the best choice. Serverless functions can be set up fast and minimise infrastructure work. Their “pay per use” model means no upfront investment. Their scaling capabilities provide consistently good response times under different load conditions. If, after some time, the load becomes more stable and predictable, then the story can change, and a more traditional model based on dedicated resources, whether Kubernetes clusters or VMs, can become more convenient than FaaS.
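The point at which dedicated resources become “more convenient” is partly a matter of arithmetic: pay-per-use cost grows linearly with traffic, while dedicated capacity costs roughly the same every month. The sketch below computes that break-even volume under a deliberately simplified pricing model (a per-request fee plus a per-GB-second compute fee). The prices are made-up placeholders, not any provider’s, and free tiers, cold starts and the operational cost of running Kubernetes or VMs are ignored.

```python
def faas_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float,
                      price_per_million_requests: float, price_per_gb_second: float) -> float:
    """Pay-per-use cost: zero when idle, linear in traffic."""
    request_fee = requests / 1_000_000 * price_per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_fee + compute_fee


def break_even_requests(dedicated_monthly: float, avg_duration_s: float, memory_gb: float,
                        price_per_million_requests: float, price_per_gb_second: float) -> float:
    """Monthly request volume above which fixed, dedicated capacity becomes cheaper."""
    cost_per_request = faas_monthly_cost(1, avg_duration_s, memory_gb,
                                         price_per_million_requests, price_per_gb_second)
    return dedicated_monthly / cost_per_request


# Placeholder prices, NOT any provider's real price list.
PRICES = dict(price_per_million_requests=0.20, price_per_gb_second=0.00001)

idle = faas_monthly_cost(0, 0.1, 0.5, **PRICES)
threshold = break_even_requests(70.0, 0.1, 0.5, **PRICES)
print(f"idle month: {idle:.2f}, break-even at ~{threshold:,.0f} requests/month")
```

With these placeholder numbers, a function averaging 100 ms at 0.5 GB costs 0.0000007 per call, so it breaks even with a 70-per-month dedicated setup at about 100 million requests per month; below that volume FaaS is cheaper, and an idle month costs nothing.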

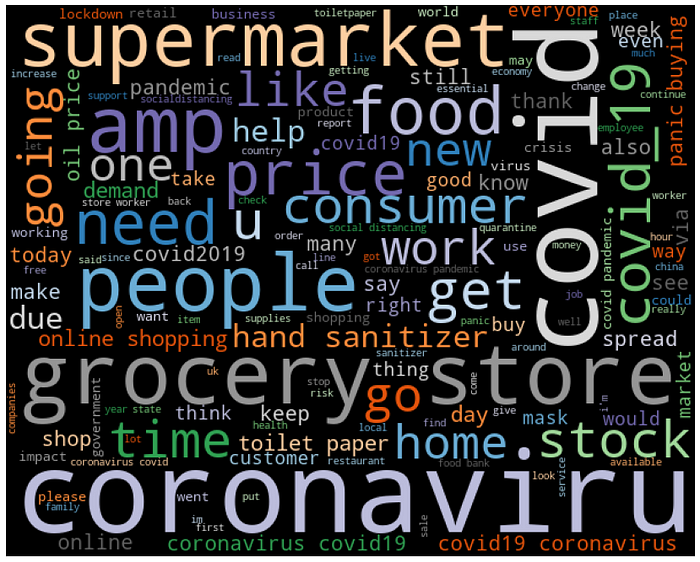

Unconventional Sentiment Analysis: BERT vs. Catboost

Sentiment analysis is a Natural Language Processing (NLP) technique used to determine whether text is positive, negative, or neutral. Sentiment analysis is fundamental because it helps reveal the emotional tone within language. This, in turn, makes it possible to automatically sort the opinions behind reviews, social media discussions, etc., allowing you to make faster, more accurate decisions. Although sentiment analysis has become extremely popular in recent times, work on it has been progressing since the early 2000s. Traditional machine learning methods such as Naive Bayes, Logistic Regression, and Support Vector Machines (SVMs) are widely used for large-scale sentiment analysis because they scale well. Deep learning (DL) techniques have now been shown to provide better accuracy for various NLP tasks, including sentiment analysis; however, they tend to be slower and more expensive to train and use. ... CatBoost is a high-performance, open-source library for gradient boosting on decision trees. From release 0.19.1, it supports text features for classification on GPU out of the box. The main advantage is that CatBoost can handle categorical features and text features in your data without additional preprocessing.
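As a point of comparison with the heavier models above, here is a minimal multinomial Naive Bayes sentiment classifier, one of the traditional methods named in the text, written in plain Python. The class name, the tiny training set and the whitespace tokenizer are illustrative assumptions; CatBoost itself is not used here, to keep the sketch dependency-free.

```python
import math
from collections import Counter


class NaiveBayesSentiment:
    """Multinomial Naive Bayes over whitespace tokens, with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha

    @staticmethod
    def tokenize(text: str) -> list[str]:
        return text.lower().split()

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesSentiment":
        self.classes = sorted(set(labels))
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(self.tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        best_label, best_score = None, -math.inf
        for c in self.classes:
            # log P(class) + sum of log P(word | class), in log space to avoid underflow
            score = math.log(self.class_counts[c] / n_docs)
            for word in self.tokenize(text):
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((self.word_counts[c][word] + self.alpha)
                                      / (self.totals[c] + self.alpha * v))
            if score > best_score:
                best_label, best_score = c, score
        return best_label


# Tiny, made-up training set for illustration only
texts = [
    "great product love it",
    "excellent quality great value",
    "terrible waste of money",
    "awful broke after a day",
    "love the excellent service",
    "bad terrible experience",
]
labels = ["positive", "positive", "negative", "negative", "positive", "negative"]
model = NaiveBayesSentiment().fit(texts, labels)
print(model.predict("love this great phone"))
```

Smoothing stops a single word that never appeared with a class from vetoing that label outright, which matters with training sets this small.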

How to gain advanced cyber resilience and recovery across the IT ops and SecOps divide

The legacy IT world was all about protecting what they knew about, and that’s hard to change. The new world is all about automation, right? It impacts everything we want to do and everything that we can do. Why wouldn’t we try to make our jobs as simple and easy as possible? When I first got into IT, one of my friends told me that the easiest thing you can do is script everything you possibly can, just to make your life simpler. Nowadays, with the way digital workflows are going, it’s not just the simple things we automate; now we can easily automate the complex ones, too. We’re making it so anybody can jump in and get this automation going as quickly as possible. ... The security challenge has changed dramatically. What’s the impact of the Internet of Things (IoT) and edge computing? We’ve essentially created a much larger attack surface, right? What’s changed in a very positive way is that this expanded surface has driven automation and the capability not only to secure workflows but to collaborate on them. We have to have the capability to quickly detect, respond, and remediate.

Compute to data: using blockchain to decentralize data science and AI with the Ocean Protocol

If algorithms run where the data is, how fast they run depends on the resources available at the host. So training algorithms this way may take longer than in the centralized scenario, once the overhead of communication and cryptography is factored in. In a typical scenario, compute needs move from the client side to the data host side, said McConaghy: "Compute needs don't get higher or lower, they simply get moved. Ocean Compute-to-Data supports Kubernetes, which allows massive scale-up of compute if needed. There's no degradation of compute efficiency if it's on the host data side. There's a bonus: the bandwidth cost is lower, since only the final model has to be sent over the wire, rather than the whole dataset. There's another flow where Ocean Compute-to-Data is used to compute anonymized data, for example using Differential Privacy or Decoupled Hashing. Then that anonymized data would be passed to the client side for model building there. In this case most of the compute is client-side, and bandwidth usage is higher because the (anonymized) dataset is sent over the wire. Ocean Compute-to-Data is flexible enough to accommodate all these scenarios."
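The bandwidth argument McConaghy makes can be illustrated with a single-process simulation: a data host runs a client-supplied algorithm next to its private rows and returns only the serialized result. `DataHost`, `compute` and `fit_line` are hypothetical names, not Ocean Protocol APIs, and the sketch leaves out everything that makes the real system trustworthy (access control, on-chain orchestration, Kubernetes execution). For convenience the "private" data is generated in the same script.

```python
import json
import random


class DataHost:
    """Hypothetical data host: keeps rows private and returns only an algorithm's result."""

    def __init__(self, rows: list[tuple[float, float]]):
        self._rows = rows  # never sent to the client

    def compute(self, algorithm) -> bytes:
        # The client's algorithm runs next to the data; only its (small) output is serialized.
        return json.dumps(algorithm(self._rows)).encode()


def fit_line(rows: list[tuple[float, float]]) -> dict[str, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = sxy / sxx
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


rng = random.Random(42)
private_rows = [(float(x), 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in range(1000)]
host = DataHost(private_rows)

model_bytes = host.compute(fit_line)                    # what crosses the wire
dataset_bytes = len(json.dumps(private_rows).encode())  # what centralized training would ship
model = json.loads(model_bytes)
print(model, len(model_bytes), "bytes vs", dataset_bytes, "bytes")
```

Here the fitted model is two numbers while the dataset is a thousand rows, which is the asymmetry behind “only the final model has to be sent over the wire”.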

Exchange Server Attacks Spread After Disclosure of Flaws

Beyond the U.S. federal government, the impact of the vulnerabilities continues to grow, and not just among the targeted sectors named by Microsoft. The company says those sectors include infectious disease researchers, law firms, higher education institutions, defense contractors, policy think tanks and nongovernmental organizations. Volexity, which contributed research to the vulnerability findings, first noticed exploitation activity against its customers around Jan. 6. That activity has suddenly ticked up now that the vulnerabilities are public, says Adair, CEO and founder of the firm. "The exploit already looks like it has spread to multiple Chinese APT groups who have become rather aggressive and noisy, quite a marked change from how it started with what we were seeing," he says. Threat detection company Huntress says it has seen compromises of unpatched Exchange servers at small hotels, an ice cream company, a kitchen appliance manufacturer and what it terms "multiple senior citizen communities." "We have also witnessed many city and county government victims, healthcare providers, banks/financial institutions and several residential electricity providers," writes John Hammond, a senior threat researcher at Huntress.

Quote for the day:

"Leadership development is a lifetime journey, not a quick trip." -- John Maxwell
