Proptech disruption trends: innovation in the real estate space

Users have found that robotic process automation (RPA) can learn how to complete administrative tasks, leaving more time for duties that require a more human touch, such as customer service. Tom Reiss, CEO of Roby AI, explained: “By learning how a user carries out a task, RPA can then be custom built and combined with tasks which require an element of human touch. This approach means that companies can become hugely efficient, and staff are no longer weighed down with laborious tasks.

“Whilst some companies have traditionally feared proptech, this kind of clever technology can be implemented easily alongside existing structures. In turn, creating minimal disruption to the business and maximum output when it comes to efficiency, cost saving and employee satisfaction.” ...

“Video surveillance can be performed in real time, or data can be collected and stored for the purpose of evaluation when required.” Lodhia went on to explain how the cloud has further facilitated safety measures, which have benefitted from remote monitoring and management, particularly during the Covid-19 pandemic. “The impact of cloud technology has had a dramatic impact on proptech, and there are two main benefits,” Lodhia said.

How the Digital Twin Drives Smart Manufacturing

One of the initial areas of focus for implementing the digital twin has been asset lifecycle management (ALM). Maintaining assets in the field has traditionally been a time-consuming and costly task, but one that is critical to equipment and system uptime. Today, maintenance technicians can leverage technologies like augmented reality (AR), which lets them access virtual engineering models and, using specialized AR goggles or glasses, overlay those models on the physical equipment they are maintaining. This enables them to work from the most accurate and up-to-date engineering data, helping ensure that the correct maintenance procedures and performance specifications are carried out efficiently. These same maintenance methods, based on merging virtual and physical environments, can be applied to factory production systems, machines, and work cells. In addition, products, production systems, machines, and work cells can be simulated virtually to test and validate physical systems prior to assembly and installation. Moreover, the virtual commissioning of production automation, an established technology and process, is merging with the more expansive scope of the digital twin.

What's between your clouds? That's key to multi-cloud performance

First, you need management and monitoring layers. These include AIOps, security managers, governance tooling, and other technologies that can manage and control heterogeneous cloud deployments. The management and monitoring layers are just as important as the native services that run on those public clouds, perhaps even more so. These layers of software systems become the jumping-off point for modern cloud operations, and they can operate without leveraging cloud-specific systems as you move forward. Second, public cloud providers are beginning to invest in cross-cloud solutions. Most won't mention the word multi-cloud, but they plan to support this architecture nonetheless. This puts the final nail in the coffin of less complex, single-cloud deployments that do not take advantage of best-of-breed services. Some people remain skeptical that public cloud providers will build technology that integrates with the competition, but the providers really have no other choice. Remember when Apple and Microsoft devices could not communicate? Cloud vendors remember it too. This is not a new trend. Enterprises will continue to move to multi-cloud as the preferred cloud deployment platform, and that move is to the middle: the layers that sit between the clouds.
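The claim that a management and monitoring layer can operate across heterogeneous clouds without depending on cloud-specific systems amounts to programming against a provider-neutral interface. Below is a minimal Python sketch of that idea, not any vendor's product: the `CloudAdapter` interface, the `Instance` model, the stand-in adapters `FakeCloudA` and `FakeCloudB`, and the 80% CPU threshold are all hypothetical. A real adapter would presumably wrap the provider's own SDK.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Instance:
    """Provider-neutral view of a compute instance (hypothetical model)."""
    provider: str
    instance_id: str
    cpu_percent: float


class CloudAdapter(Protocol):
    """Hypothetical interface that each cloud-specific adapter implements."""
    name: str

    def list_instances(self) -> list[Instance]: ...


class FakeCloudA:
    """Stand-in adapter with canned data; a real one would call provider A's SDK."""
    name = "cloud-a"

    def list_instances(self) -> list[Instance]:
        return [Instance(self.name, "a-1", 91.0), Instance(self.name, "a-2", 35.5)]


class FakeCloudB:
    """Stand-in adapter with canned data; a real one would call provider B's SDK."""
    name = "cloud-b"

    def list_instances(self) -> list[Instance]:
        return [Instance(self.name, "b-1", 88.2)]


class MonitoringLayer:
    """Cross-cloud layer that depends only on the CloudAdapter interface,
    so supporting another provider means adding an adapter, not editing this class."""

    def __init__(self, adapters: list[CloudAdapter]) -> None:
        self.adapters = adapters

    def hot_instances(self, threshold: float = 80.0) -> list[Instance]:
        # Aggregate instances from every cloud and keep those above the CPU threshold.
        return [
            inst
            for adapter in self.adapters
            for inst in adapter.list_instances()
            if inst.cpu_percent > threshold
        ]


if __name__ == "__main__":
    layer = MonitoringLayer([FakeCloudA(), FakeCloudB()])
    for inst in layer.hot_instances():
        print(inst.provider, inst.instance_id, inst.cpu_percent)
```

The design choice worth noting is that `MonitoringLayer` never imports anything provider-specific; all cloud differences are pushed into the adapters.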

How We’ll Conduct Algorithmic Audits in the New Economy

Lack of transparent accountability for algorithm-driven decision making tends to raise alarms among impacted parties. Many of the most complex algorithms are authored by an ever-changing, seemingly anonymous cavalcade of programmers over many years. Algorithms’ apparent anonymity, coupled with their daunting size, complexity, and obscurity, presents the human race with a seemingly intractable problem: How can public and private institutions in a democratic society establish procedures for effective oversight of algorithmic decisions? Much as complex bureaucracies tend to shield the instigators of unwise decisions, convoluted algorithms can obscure the factors that drove a particular piece of software to operate in a specific way under specific circumstances. In recent years, popular calls for auditing enterprises’ algorithm-driven business processes have grown. Regulations such as the European Union (EU)’s General Data Protection Regulation (GDPR) may force your hand in this regard. GDPR restricts “automated individual decision-making” that “significantly affects” individuals, permitting it only under limited exceptions such as a contract, legal authorization, or explicit consent. In particular, this covers profiling: algorithmic approaches that factor a wide range of personal data, including behavior, location, movements, health, interests, preferences, and economic status, into automated decisions.

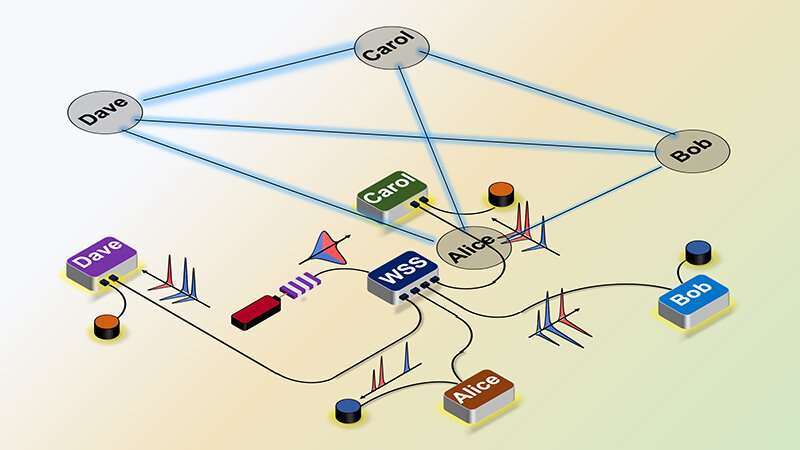

A quantum internet is closer to reality, thanks to this switch

In a quantum internet, forming connections between users and adjusting bandwidth means distributing entanglement (the ability of photons to maintain a fixed quantum mechanical relationship with one another no matter how far apart they are) to connect users in a network. Entanglement plays a key role in quantum computing and quantum information processing. "When people talk about a quantum internet, it's this idea of generating entanglement remotely between two different stations, such as between quantum computers," said Navin Lingaraju, a Purdue Ph.D. student in electrical and computer engineering. "Our method changes the rate at which entangled photons are shared between different users. These entangled photons might be used as a resource to entangle quantum computers or quantum sensors at the two different stations." Purdue researchers performed the study in collaboration with Joseph Lukens, a research scientist at Oak Ridge National Laboratory. The wavelength-selective switch that the team deployed is based on technology similar to that used to adjust bandwidth in today's classical communication networks.

What is a solutions architect? A vital role for IT-business alignment

A solutions architect is responsible for evaluating an organization’s business needs and determining how IT can support those needs by leveraging software, hardware, or infrastructure. Aligning IT strategy with business goals has become paramount, and a solutions architect can help determine, develop, and improve technical solutions in support of business goals. A solutions architect also bridges communication between IT and business operations to ensure everyone is aligned in developing and implementing technical solutions for business problems. The process requires regular feedback, adjustments, and problem-solving in order to properly design and implement potential solutions. Solution architecture itself encompasses business, system, information, security, application, and technology architecture. Some examples of solutions architecture include developing cloud infrastructure for efficiency, implementing microservices for ecommerce, or adopting security measures for data, systems, and networks. While the scope of the job can vary depending on a business’s specific needs, there are certain responsibilities, skills, and qualifications that solutions architects will need to meet to get the job.

Digital transformation: 5 new realities for CIOs

We’re not just working from home but also attending school, shopping, and conducting all essential communications without ever walking out the front door. Many jobs that we previously thought could only be done on site can now be done remotely. Product development teams now have living rooms and garages full of parts, equipment, etc., harkening back to the early start-up era for companies like Apple, HP, Microsoft, and others. Of course, the more we do from home, the more our finite bandwidth resources are taxed. Traditional peak hours for internet usage were in the evening, but with everyone home 24/7, streaming everything simultaneously, Wi-Fi is straining to remain stable during employees’ preferred working hours. We must equip work-from-home (WFH) employees with the technology and bandwidth they need to be productive and efficient. Allocate budget to upgrade employees’ home networks to premium bandwidth. Nothing causes more headaches than choppy bandwidth on Zoom when you are trying to support clients. ...

With the move to the cloud and WFH, we’re now forced to manage a high-threat environment every time an employee fires up a laptop or mobile phone and taps into the company network or cloud resources.

AI in Hydroponics: The Future Of Smart Farming

AI-driven ‘Smart Hydroponics’ can determine optimum growth for a plant through a combination of a hardware setup and a software tool that can recreate its growth trajectory. Insights are generated from data obtained by sensors in the hardware. The sensing hardware is divided into three categories, each of which is strategically placed within the hydroponics farm. The first sits near the plant roots and collects data about crop vitals, pH levels, electrical conductivity levels, and nutrient supply. The second detects light intensity, temperature, and humidity levels. The third, a visual camera, checks the growing plants for colouration and feeds the data to the AI software. Insights about the precise nature and needs of the crops are then generated through machine learning. The AI software system works like the brain behind the entire hydroponic farm. It can choose between different types of LED lighting and modulate light intensity. It can even turn on a suitable irrigation system. It drives end-to-end automation so that fewer tasks need to be performed manually. Currently, AI in hydroponics in India may still be in a fledgeling state.
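The sense-then-act loop described above can be sketched as a small rule table. This is a minimal Python sketch, not the system the article describes: the field names, units, target ranges, and action strings are hypothetical, and fixed thresholds stand in for the machine-learning component, which would instead learn what each crop needs.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One snapshot of sensor data (hypothetical field names and units)."""
    ph: float
    ec_ms_cm: float      # electrical conductivity of the nutrient solution, mS/cm
    light_lux: float
    humidity_pct: float


# Each rule: (reading field, (low, high) target range, action if below, action if above).
# The ranges are hypothetical placeholders; real values depend on the crop.
RULES = [
    ("ph", (5.5, 6.5), "dose pH up", "dose pH down"),
    ("ec_ms_cm", (1.2, 2.0), "irrigate with nutrient solution", "flush with fresh water"),
    ("light_lux", (10_000, 25_000), "raise LED intensity", "dim LEDs"),
    ("humidity_pct", (50, 80), "run humidifier", "increase ventilation"),
]


def decide_actions(reading: Reading) -> list[str]:
    """Rule-based stand-in for the AI controller: compare each reading with
    its target range and return the corrective actions to take."""
    actions = []
    for field, (low, high), low_action, high_action in RULES:
        value = getattr(reading, field)
        if value < low:
            actions.append(low_action)
        elif value > high:
            actions.append(high_action)
    return actions


if __name__ == "__main__":
    snapshot = Reading(ph=6.9, ec_ms_cm=1.0, light_lux=15_000, humidity_pct=65)
    print(decide_actions(snapshot))  # ['dose pH down', 'irrigate with nutrient solution']
```

Keeping the rules as data rather than hard-coded branches makes it easy to swap the fixed ranges for ones produced by a learned model.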

Fintech disruption trends: a changing payment landscape on the horizon

With such a dramatic shift to digital commerce, largely accelerated by the impact of Covid-19, demand for software-based payment technology will increase exponentially, according to Justin Pike, founder and chairman of MYPINPAD, the software-based payments company. In this digital world, consumers have access to a variety of choice that can’t be replicated in the physical world, and competition is fierce. “Consequently, consumer facing brands have recognised the criticality of technology that can significantly improve the customer experience,” says Pike. He believes that software-based payment technology forms the missing piece of the puzzle in terms of innovating and improving the customer experience in a remote environment, where the customer experience is completed on mobile devices. “Standardisation of the payment experience through software, across all channels (both online and offline) is where we are rapidly heading. This innovation will bring a myriad of benefits for both consumer and brand, but it absolutely must be built on a foundation of security,” Pike continues. “For merchants, the opportunity to reach new markets and customers is too good to miss.”

Arguing your way to better strategy

Iterative visualization is achieved by creating a strategy map, which tracks a proposed strategy’s causal path backward from its desired outcome to the factors required to make it happen. The authors illustrate this process by producing a strategy map based on statements about Walmart’s low-cost model, which enabled the retailer to attract customers and vanquish competitors in the pre-digital economy. Working backward from the desired outcome of low costs, they map two of its enablers: operational efficiencies and a bargaining advantage over suppliers. In turn, they enumerate the enablers of those enablers, which for bargaining include high-volume purchasing, negotiating prowess, and private labels. And so on. A strategy map is only the first step in making a strategy argument. “At this stage,” Sørensen and Carroll explain, “these statements are just unfounded claims in the strategy argument, and their veracity and importance have yet to be demonstrated.” That work begins in the second set of activities: logical formalization. Logical formalization entails testing the validity and soundness of the premises underlying the statements in a strategy map.
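The backward mapping from outcome to enablers can be pictured as a small tree. Below is a minimal Python sketch that stores the map as a dictionary from each statement to its enablers, using only the Walmart links named above; the data structure and the `causal_paths` helper are illustrative assumptions, not Sørensen and Carroll's own method, and the sketch assumes the map contains no cycles.

```python
# Each statement maps to the enablers the strategy map lists for it.
# Only the links named in the text are included; "operational efficiencies"
# has no enablers here because the text does not list them.
STRATEGY_MAP: dict[str, list[str]] = {
    "low costs": ["operational efficiencies", "bargaining advantage over suppliers"],
    "operational efficiencies": [],
    "bargaining advantage over suppliers": [
        "high-volume purchasing",
        "negotiating prowess",
        "private labels",
    ],
}


def causal_paths(outcome: str, strategy_map: dict[str, list[str]]) -> list[list[str]]:
    """Walk backward from the desired outcome and return every path that ends
    at a statement with no listed enablers (a claim still to be examined)."""
    enablers = strategy_map.get(outcome, [])
    if not enablers:
        return [[outcome]]
    paths = []
    for enabler in enablers:
        for path in causal_paths(enabler, strategy_map):
            paths.append([outcome] + path)
    return paths


if __name__ == "__main__":
    # "<-" reads as "is enabled by".
    for path in causal_paths("low costs", STRATEGY_MAP):
        print(" <- ".join(path))
```

Each printed path is a chain of claims; in the authors' terms, every link in it is still an unfounded claim until logical formalization tests it.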

Quote for the day:

"If you only read the books that everyone else is reading, you can only think what everyone else is thinking." -- Haruki Murakami
