Why disaster recovery preparation is even more important during a pandemic

From a cyber perspective, disaster recovery during a pandemic raises new challenges as well. The rapid expansion of remote work introduces new vulnerabilities. Many organizations have relaxed perimeter security controls to allow remote connectivity, opening new attack vectors that threat actors can exploit to gain access to networks. Lately, many of these attacks have involved ransomware and data destruction, which encrypt or wipe data and often corrupt critical backup systems, rendering existing disaster recovery plans unusable. An "all hands on deck" approach to manual recovery is often the only response to these conditions. Unfortunately, social distancing protocols and remote work arrangements can make such manual recovery efforts impossible. ... IT disaster recovery generally falls into one of two categories: a natural disaster (earthquake, flood, etc.) or a system failure (such as a hardware, software, or electrical failure). This year, the actual DR responses we have witnessed have involved local or regional power outages or power infrastructure issues. We have seen this across multiple industries, including financial services, with outages during peak customer windows and prolonged recovery times.

Iranian hacker group developed Android malware to steal 2FA SMS codes

In a report published today, Check Point researchers said they also discovered a potent Android backdoor developed by the group. The backdoor could steal the victim's contacts list and SMS messages, silently record the victim via the microphone, and show phishing pages. But the backdoor also contained routines specifically focused on stealing 2FA codes. Check Point said the malware would intercept and forward to the attackers any SMS message containing the string "G-", which usually prefixes the 2FA codes Google sends to users via SMS. The likely scenario is that Rampant Kitten operators would use the Android trojan to show a Google phishing page, capture the user's account credentials, and then access the victim's account. If the victim had 2FA enabled, the malware's SMS-intercepting functionality would silently send copies of the 2FA code to the attackers, allowing them to bypass 2FA. But that was not all. Check Point also found evidence that the malware would automatically forward all incoming SMS messages from Telegram and other social network apps. These messages also contain 2FA codes, and it's very likely that the group was using this functionality to bypass 2FA on more than just Google accounts.

Clean Coders: Why India isn’t on the List

A more vexing factor behind the problem is that most Indian software companies look at software purely as a business. It’s mostly about getting deliverables ready within the quoted time and almost never about striving for quality results. Consequently, teams treat coding as a task to be ticked off by the numbers rather than one that demands quality, the kind of attention that would actually teach people to avoid future mistakes. It’s a chain reaction, really. When the organization itself does not prioritize clean, quality code while a product is being developed, most coders lose the urge to be curious about better practices and approaches, since they have to direct all their efforts into meeting deadlines. Even today, many skilled professionals in the industry cannot convey their ideas and pain points effectively in client meetings or within their teams. Organizations need to establish that coding is only one aspect of the job and that communication is equally important. Especially in the service sector, where we constantly collaborate on large-scale projects, it’s absolutely crucial for clients and internal teams to be on the same page.

6 big data blunders businesses should avoid

Thanks to modern technologies, businesses of every size have access to rich, granular data about their operations and customers. The major hurdle is handling massive quantities of data that are both challenging to maintain and costly to manage. Even with the appropriate tools, working with such data is cumbersome, and given the layers of complexity involved, errors are common. However, Big Data offers businesses many advantages. ... Thus, Big Data becomes the defining lever for innovative enterprises seeking an edge over their competitors. Worldwide big data revenue is projected to reach $274.3 billion by 2022, with each individual generating approximately 1.7 megabytes of information per second. With so much at stake, can you really afford to make mistakes with Big Data? Here are some big data blunders that businesses need to avoid to harness its full capabilities and reap the advantages it brings.
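
To put the per-person figure in perspective, the quick calculation below converts 1.7 MB per second into a daily volume. It is only a back-of-the-envelope sketch: it assumes decimal units (1 GB = 1,000 MB) and a rate that holds constant around the clock, neither of which the statistic itself specifies.

```python
# Per-person data generation rate quoted in the text, in megabytes per second.
MB_PER_SECOND = 1.7
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def daily_volume_gb(mb_per_second: float) -> float:
    """Convert a constant per-second rate in MB into a daily volume in GB.

    Assumes decimal units (1 GB = 1,000 MB) and that the rate holds all day.
    """
    return mb_per_second * SECONDS_PER_DAY / 1000


print(f"{daily_volume_gb(MB_PER_SECOND):.2f} GB per person per day")
```

Under those assumptions, 1.7 MB per second works out to 146,880 MB, or about 146.88 GB, per person per day.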

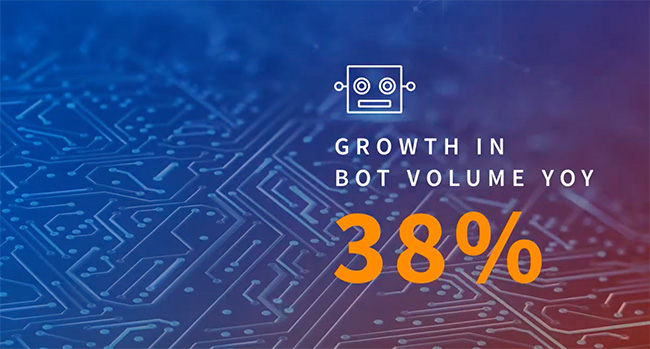

Tracking global cybercrime activity and the impact on the digital economy

The EMEA region saw lower overall attack rates than most other global regions from January through June 2020. This is due to a high volume of trusted login transactions across relatively mature mobile apps. Attack patterns in EMEA were also more benign, with less volatility and fewer spikes in attack rates. However, there are some notable exceptions. Desktop transactions conducted from EMEA had a higher attack rate than the global average, and automated bot attack volume grew 45% year over year. The UK originates the highest volume of human-initiated cyberattacks in EMEA, with Germany and France second and third in the region. The UK is also the second largest contributor to global bot attacks, behind the U.S. In one example, a UK banking fraud network exposed more than $17 million to fraud across 10 financial services organizations. This network alone consisted of 7,800 devices, 5,200 email addresses and 1,000 telephone numbers. The overall human-initiated attack rate fell through the first half of 2020, showing a 33% decline year over year. By sector, attack rates declined 23% in financial services and 55% in e-commerce.

Load Testing APIs and Websites with Gatling: It’s Never Too Late to Get Started

If this is your first time load testing, whether or not you already know the target user behavior, you should start with a capacity test. Stress testing is useful, but analyzing the metrics under such a load is really tricky: since everything fails at the same time, the analysis becomes difficult, even impossible. Capacity testing offers the luxury of approaching failure gradually, which makes a first analysis more comfortable. To get started, just run a capacity test that makes your application crash as soon as possible. You only need to add complexity to the scenario once everything seems to run smoothly.
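
A capacity test typically raises the load in steps and holds each level long enough to see how the system behaves, so the level at which it starts to fail stands out. The sketch below models such a stepped profile in plain Python. Its shape loosely mirrors Gatling's `incrementUsersPerSec(...).times(...).eachLevelLasting(...).separatedByRampsLasting(...).startingFrom(...)` meta DSL, but it is not Gatling code: the `CapacityProfile` class, the `first_failing_level` helper, the 1% error-rate threshold and the simulated error function are all hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class CapacityProfile:
    """Hypothetical stepped open-workload profile: hold a level, ramp, repeat."""
    start_rate: float   # users per second at the first level
    increment: float    # users per second added at each new level
    steps: int          # number of levels
    level_seconds: int  # how long each level is held
    ramp_seconds: int   # length of the linear ramp between consecutive levels

    def levels(self) -> List[float]:
        """Arrival rate of each plateau, lowest first."""
        return [self.start_rate + i * self.increment for i in range(self.steps)]

    def schedule(self) -> List[float]:
        """Target arrival rate (users per second) for every second of the test."""
        rates: List[float] = []
        levels = self.levels()
        for i, level in enumerate(levels):
            rates.extend([level] * self.level_seconds)  # hold the plateau
            if i + 1 < len(levels):  # no ramp after the last plateau
                nxt = levels[i + 1]
                for k in range(self.ramp_seconds):
                    rates.append(level + (nxt - level) * k / self.ramp_seconds)
        return rates


def first_failing_level(
    levels: List[float],
    error_rate_at: Callable[[float], float],
    max_error_rate: float = 0.01,
) -> Optional[float]:
    """Walk the plateaus in order and return the first one whose error rate
    exceeds the threshold, or None if every level passes."""
    for level in levels:
        if error_rate_at(level) > max_error_rate:
            return level
    return None


def simulated_error_rate(rate: float) -> float:
    """Stand-in for real measurements: errors appear above 20 users/sec."""
    return 0.0 if rate <= 20 else 0.05


profile = CapacityProfile(start_rate=10.0, increment=5.0, steps=5,
                          level_seconds=10, ramp_seconds=10)
print(profile.levels())
print(len(profile.schedule()), "seconds")
print(first_failing_level(profile.levels(), simulated_error_rate))
```

With these settings the plateaus are 10, 15, 20, 25 and 30 users per second over a 90-second schedule, and the simulated system first exceeds the 1% threshold at 25 users per second. In a real test the error rate per level would come from the load-testing tool's reports rather than from a function.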

... While an average can give you a quick overview of what happened in a run, it sweeps under the rug all the things you actually want to look at. This is where percentiles come in handy. Think of it this way: if the average response time is some number of milliseconds, how does the experience feel for the worst-off 1% of your user base? Better or worse? How does it feel for the worst 0.1%? And so on, getting closer and closer to zero. The higher the number of users and requests, the closer you’ll need to get to zero to study extreme behaviors.
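
The sketch below illustrates the point with made-up numbers: 1,000 hypothetical response times where most requests take 100 ms but a small tail is much slower. It uses the simple nearest-rank definition of a percentile; Gatling and other tools may compute percentiles differently (for example with interpolation or histogram-based approximations), so treat this as an illustration of the idea rather than of how any particular tool reports them.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical run: 985 fast requests, 10 slow ones, 5 very slow ones (ms).
response_times_ms = [100.0] * 985 + [1000.0] * 10 + [5000.0] * 5

print(f"mean : {mean(response_times_ms):.1f} ms")
for p in (50, 99, 99.9):
    print(f"p{p}: {percentile(response_times_ms, p):.0f} ms")
```

Here the mean comes out at 133.5 ms, which looks harmless, while the 99th percentile is 1,000 ms and the 99.9th is 5,000 ms: the slowest users wait roughly 37 times longer than the average suggests.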

Who Should Own Data Governance?

Many organizations position data governance under the Chief Financial Officer (CFO). Others position it under the Chief Risk Officer (CRO) or the Chief Operating Officer (COO). Still others place it under the Chief Privacy Officer (CPO) or the Chief Information Security Officer (CISO). These days there are so many C-levels. Placing data governance under any one of these C-level executives is never wrong. Data governance must reside somewhere, and having a C-level person as your Executive Sponsor is always a good thing. In fact, many organizations state that senior leadership’s support, sponsorship and understanding of data governance is the number one best practice for starting and sustaining their program. Having a C-level person as your Executive Sponsor often dictates where data governance will reside in the organization. Is it better for data governance to be placed in Finance and report through the CFO than to reside in Operations and report through the COO? The answer to that question is, “It depends.” It depends on the interest and ability of that person, and that part of the organization, to provide the proper level of resources to operationalize the program and engage the organization.

Why Are Some Cybersecurity Professionals Not Finding Jobs?

Simply stated, these good people cannot get hired into a cyber job. Going much further, they argue that select organizations (those that talk about millions of unfilled jobs) are pushing their own training agendas or the certifications they offer, want to boost certain companies' stock prices, or have other reasons to encourage this “abundance of cyber jobs remain vacant” narrative, even though, in their opinion, it is not true. I want to be clear up front that I disagree with this narrative. I do believe that many global cybersecurity job vacancies exist (perhaps millions, but we can argue the numbers in another blog). Nevertheless, I truly sympathize with the people who disagree, and I want to help as many of them as I can find employment. I also want hiring managers to set proper expectations. In addition to my blogs and articles, I have personally mentored and helped dozens of people find cyber jobs, from high school students to new college graduates to CISOs and CSOs. (Note: this is not my "day job" but one way I try to give back to the security community, just as so many others do.) I also highlight the ways government CISOs struggle in this area, and how tech leaders can find more cyber talent.

Seven Steps to Realizing the Value of Modern Apps

With organizations running a multitude of environments to meet the demands of their applications, each with unique technological requirements, finding the platform isn’t the only challenge. What’s hard is that development and management are more complex than ever before, with IT and developers navigating traditional apps, cloud-native, SaaS, services and on-prem, for example. This is where you need common ground between IT teams, Lines of Business and developers, and where a single digital platform is critical: it removes the potential for silos to spring up, enables better deployment of resources, and provides a consistent approach to managing applications, infrastructure and business needs together. It’s about creating one common platform to ‘run all the things’. One software-defined digital foundation that provides the platform, and the choice of where to run IT, to drive business value, create the best environment for developers and help IT effectively manage existing and new technology via any cloud, for any application, on any device, with intrinsic security. One platform that can deliver all apps, enabling developers to use the latest development methodologies and container technologies for faster time to production.

4 measures to counteract risk in financial services

Financial services regulators across jurisdictions have identified concentration risk as a factor to consider when assessing outsourcing risk. That risk has two components: (i) micro-risk, where reliance on a single provider for core operations may present an undue operational risk if there is a single point of failure; and (ii) macro-risk, where financial firms within the ecosystem are so reliant on one vendor that a single point of failure could pose a broad systemic risk to the operations of the financial services sector. Notably, this risk is not unique to cloud services. As the Bank of England commented in its Consultation Paper on Outsourcing and Third Party Risk Management, “a small number of third parties have traditionally dominated the provision of certain functions, products, or services to firms, such as cash machines or IT mainframes.” In other words, concentration risk is not new; it has been a feature of the financial services industry for decades. While cloud remains relatively nascent compared to entrenched providers of legacy systems such as the mainframe, its increasing adoption means that financial institutions must account for, and mitigate, micro-risk issues of concentration in their use of cloud providers.

Quote for the day:

“When we are no longer able to change a situation, we are challenged to change ourselves.” -- Viktor E. Frankl
