Brain-Inspired Electronic System Could Make AI 1,000 Times More Energy Efficient

In the new study, published in Nature Communications, engineers at UCL found

that accuracy could be greatly improved by getting memristors to work together

in several sub-groups of neural networks and averaging their calculations,

meaning that flaws in each of the networks could be canceled out. Memristors,

described as “resistors with memory,” as they remember the amount of electric

charge that flowed through them even after being turned off, were considered

revolutionary when they were first built over a decade ago, a “missing link”

in electronics to supplement the resistor, capacitor, and inductor. They have

since been manufactured commercially in memory devices, but the research team

say they could be used to develop AI systems within the next three years.
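
The averaging idea described above can be illustrated with a small simulation. This is only a sketch and not the UCL team's method: the weights, the Gaussian noise model, and the function names (`noisy_dot`, `committee_output`) are assumptions made for illustration. Each "network" computes the same weighted sum with randomly perturbed weights, standing in for device flaws, and the committee answer is the mean of the individual outputs.

```python
import random

def noisy_dot(weights, inputs, noise, rng):
    """Weighted sum in which every weight is perturbed, mimicking an imperfect device."""
    return sum((w + rng.gauss(0.0, noise)) * x for w, x in zip(weights, inputs))

def committee_output(weights, inputs, n_networks, noise, rng):
    """Run several independently flawed copies and average their outputs."""
    outputs = [noisy_dot(weights, inputs, noise, rng) for _ in range(n_networks)]
    return sum(outputs) / len(outputs), outputs

rng = random.Random(0)            # fixed seed so the run is repeatable
weights = [0.5, -1.2, 0.8]        # hypothetical ideal weights
inputs = [1.0, 2.0, -1.0]         # hypothetical input vector
ideal = sum(w * x for w, x in zip(weights, inputs))

average, outputs = committee_output(weights, inputs, n_networks=8, noise=0.2, rng=rng)

# Compare the error of the averaged answer with the typical error of one network.
mean_single_error = sum(abs(o - ideal) for o in outputs) / len(outputs)
committee_error = abs(average - ideal)

print(f"mean single-network error: {mean_single_error:.4f}")
print(f"committee error:           {committee_error:.4f}")
```

The committee error can never exceed the mean single-network error, because errors of opposite sign partly cancel when averaged. How much they cancel in real hardware depends on how independent the flaws are.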

Memristors offer vastly improved efficiency because they operate not just in a

binary code of ones and zeros, but at multiple levels between zero and one at

the same time, meaning more information can be packed into each bit. Moreover,

memristors are often described as a neuromorphic (brain-inspired) form of

computing because, like in the brain, processing and memory are implemented in

the same adaptive building blocks, in contrast to current computer systems

that waste a lot of energy in data movement.

Management skills: Five ways building your network will help you get ahead

Mark Gannon, director of business change and information solutions at

Sheffield City Council, says smart digital leaders make sure they carry on

learning – even once they get to the very top. Gannon says developing

experiences outside the day job has always been important to him, both as

full-time CIO and in his stint as a consultant before joining the council.

"There's the basic stuff about just getting out there and understanding your

customers and spending time to speak with them. Consulting was interesting

because it gave me the opportunity to look outside my own experience and see

what other organisations were doing. I think it's really important to be

constantly learning," he says. Gannon suggests his determination to develop

new skills might be something to do with having completed a doctorate prior to

joining the IT profession. His interest in education continues to this day –

Gannon is a school parent governor. "Being a governor is interesting and

getting out and engaging with other networks in the city is something I do a

lot. We've developed a cross-community network, called dotSHF, which is about

how we bring together the work that's being done by sole traders, and private

and public sector organisations around digital," says Gannon.

Telling tales: using behavioural AI to reconstruct attack storylines

Behavioral AI can be used to mitigate threats automatically, a seriously powerful

gamechanger. The technology is capable of making a decision on the device,

without relying on the cloud, or on humans, to tell it what to do. Monitoring

behaviour is a tricky, complex problem, and you want to feed your algorithm

robust, informative, context-rich data which really captures the essence of a

program’s execution. To do this, you need to monitor the operating system at a

very low level and, most importantly, link individual behaviours together to

create full “storylines”. For example, if a program executes another program,

or uses the operating system to schedule itself to execute on boot up, you

don’t want to treat these as different, isolated executions, but as a single

story. Training AI models on behavioural data is similar to training static

models, but with the added complexity of the time dimension. In other words,

instead of evaluating all features at once, you need to consider cumulative

behaviours up to various points in time. Interestingly, if you have good

enough data, you don’t really need an AI model to convict an execution as

malicious. For example, if the program starts executing but has no user

interaction, then it tries to register itself to start when the machine is

booted, then it starts listening to keystrokes, you could say it’s very likely

a keylogger and should be stopped.
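
The storyline idea and the keylogger rule above can be sketched roughly as follows. The event format `(pid, parent_pid, action)`, the action names, and both function names are hypothetical, chosen only for illustration; a real product would collect far richer low-level data.

```python
from dataclasses import dataclass, field

@dataclass
class Storyline:
    root_pid: int
    events: list = field(default_factory=list)

def build_storylines(events):
    """Group time-ordered (pid, parent_pid, action) events by originating process."""
    root_of = {}   # pid -> pid of the process that started the whole story
    stories = {}
    for pid, parent_pid, action in events:
        if pid not in root_of:
            # A child inherits its parent's story; an unknown parent starts a new one.
            root_of[pid] = root_of.get(parent_pid, pid)
        root = root_of[pid]
        stories.setdefault(root, Storyline(root)).events.append(action)
    return stories

def looks_like_keylogger(story):
    """Rule from the text: no user interaction, then autostart, then key capture."""
    seen = story.events
    if "user_interaction" in seen:
        return False
    try:
        persisted_at = seen.index("register_autostart")
        seen.index("listen_keystrokes", persisted_at)  # must occur after persistence
    except ValueError:
        return False
    return True

events = [
    (100, 1, "start"),
    (100, 1, "register_autostart"),
    (101, 100, "start"),              # child launched by pid 100
    (101, 100, "listen_keystrokes"),
    (200, 1, "start"),
    (200, 1, "user_interaction"),
    (200, 1, "listen_keystrokes"),
]

stories = build_storylines(events)
verdicts = {root: looks_like_keylogger(story) for root, story in stories.items()}
print(verdicts)
```

Because the child process 101 is folded into the story of process 100, the rule sees the persistence step and the key capture as one sequence, even though neither process performed both steps on its own.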

Microsoft Updates Edge With Exciting New Features To Beat Chrome

Microsoft’s Edge browser is growing in popularity, reaching the number two

position in the desktop browser market, even beating privacy-focused option

Firefox. Now Microsoft has just unveiled a bunch of new features that make it

a valid alternative to Google Chrome as an increasing number of people work

from home. One very useful update, which would be great if it comes to fruition

and was spotted by Windows Latest in the Edge Canary developer build, is a new

feature called “Web Capture” which allows you to take a screenshot of a

webpage—in full or cropped—and copy it to the clipboard or preview it. ...

Meanwhile, more new features to boost your security are expected in Edge 86,

which is due to drop in the next few weeks, Microsoft has confirmed. This

includes new alerts for the Edge password monitor if a compromised password is

detected. At the same time, Edge will add the option to show or hide the

favorites bar from the favorites management page. Edge will also add policy

improvements for enterprises using the browser for various users and

applications. Just last week, Microsoft started to roll out Edge 85 with

multiple features aiming to help those working from home during the

coronavirus pandemic.

How AI will automate cybersecurity in the post-COVID world

At a basic level, AI uses data to make predictions and then automates actions.

This automation can be used for good or evil. Cybercriminals take AI designed

for legitimate purposes and use it for illegal schemes. Consider one of the

most common defenses attempted against credential stuffing – CAPTCHA. Invented

a couple of decades ago, CAPTCHA tries to protect against unwanted bots by

presenting a challenge (e.g., reading distorted text) that humans should find

easy and bots should find difficult. Unfortunately, cybercriminal use of AI

has inverted this. Google did a study a few years ago and found that

machine-learning based optical character recognition (OCR) technology could

solve 99.8% of CAPTCHA challenges. This OCR, as well as other CAPTCHA-solving

technology, is weaponized by cybercriminals who include it in their credential

stuffing tools. Cybercriminals can use AI in other ways too. AI technology has

already been created to make cracking passwords faster, and machine learning

can be used to identify good targets for attack, as well as to optimize

cybercriminal supply chains and infrastructure. We see incredibly fast

response times from cybercriminals, who can shut off and restart attacks with

millions of transactions in a matter of minutes.

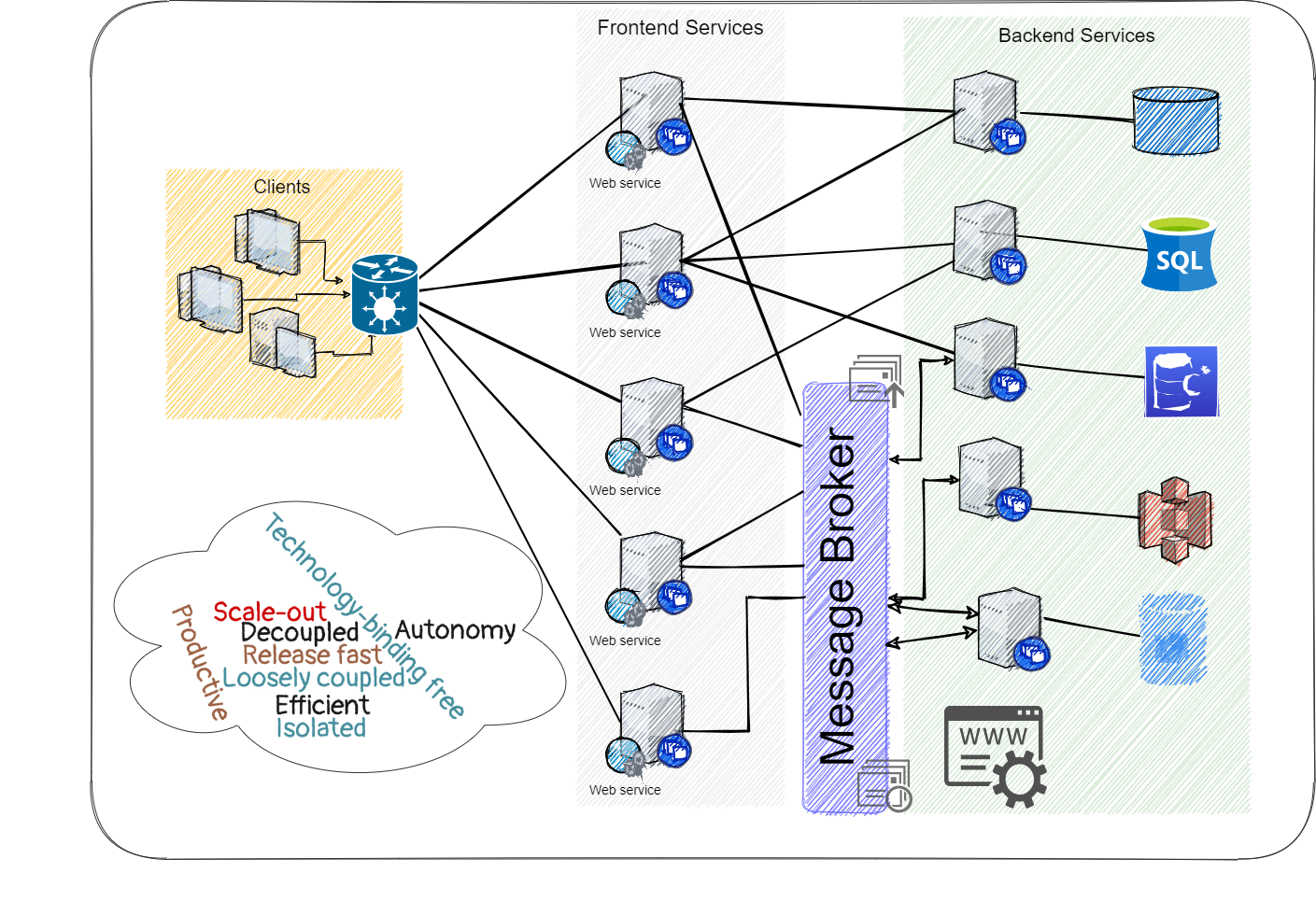

The Principles of Planning and Implementing Microservices

Each service should have a version, which updates regularly in every release.

Versioning makes it possible to identify a service and deploy a specific version of it.

It also enables the consumers of the service to be aware when the service has

changed, and by that avoid breaking the existing contract and the

communication between the services. Different versions of the same service can

coexist. With that, the migration from the old version to the new version can

be gradual without having too much impact on the whole application. ... In a

microservices environment, there are many small services that communicate

constantly with each other, so it is easy to get lost in what a service

does or how to use its API. Documentation can help here. Keeping valid

up-to-date documentation is a tedious and time-consuming task. Naturally,

developers tend to give it a low priority. Therefore,

automation is required instead of documenting manually (readme files, notes,

procedures). There are various tools to codify and automate tasks to keep the

documentation updated while the code continues to change. Tools like Swagger

UI or API Blueprint can do the job. They can generate a web UI for your

microservices API, which eases orientation. Once again,

standardization is an advantage; for example, Swagger implements the OpenAPI

specification, which is an industry standard.
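
As a rough illustration of how versioning lets consumers avoid breaking the contract while several versions coexist, here is a minimal sketch. It assumes semantic versioning (`major.minor.patch`, with a major bump signalling a breaking change), which the article does not prescribe; the function names are hypothetical.

```python
def parse_version(text):
    """Turn '1.4.2' into the tuple (1, 4, 2) so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch

def is_compatible(consumer_expects, service_offers):
    """Same major version, and the service is at least as new as the consumer expects."""
    expected = parse_version(consumer_expects)
    offered = parse_version(service_offers)
    return offered[0] == expected[0] and offered[1:] >= expected[1:]

def pick_version(consumer_expects, deployed):
    """Choose the newest deployed version that still honours the consumer's contract."""
    compatible = [v for v in deployed if is_compatible(consumer_expects, v)]
    return max(compatible, key=parse_version) if compatible else None

deployed = ["1.1.0", "1.4.2", "2.0.0"]      # versions running side by side
print(pick_version("1.2.0", deployed))      # -> 1.4.2
print(pick_version("3.0.0", deployed))      # -> None
```

A consumer built against 1.2.0 keeps working with 1.4.2 while 2.0.0 is rolled out next to it, which is what makes the gradual migration possible.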

How Cybercriminals Take the Fun Out of Gaming

The underground market is also active. In a recent blog, Singer broke down the

world of cybercrime in games. "The first thing to understand about the

criminals who attack the games industry is that they participate in a working,

fluid, day-to-day economy that they manage completely themselves," he wrote.

"Cybercriminals have built informal structures that mirror the efficiencies of

standard enterprise operations. They have developers, QA folks, middle

managers, project managers, salespeople, and even marketing and PR people who

hype vendors and products." Austin Francisco, security analyst at Key Cyber

Solutions (KCS) – who has "been gaming since the '90s" – says hackers

advertise stolen goods and cheats as "a product and not like a hack," offering

player values such as the ability to "have 100% accuracy aim" or "see people

through walls," for example. Singer doesn't understand the appeal, but "there

are enough people who enjoy it that there's a thriving industry," he says. One

popular attack is account takeover (ATO), which is used to steal other

players' goods. It's a large market due to the sheer amount of value tied to a

player account: from in-game currencies to achievements unlocked to player

status and "skins"

“Enterprise-Class Open-Source Data Tools” Is Not an Oxymoron

Open source may bring up pictures of dark alleys and bug-ridden software, but

in today’s data-driven world, there’s a new class of solutions. These

open-source tools are the basis for inquiries into the deepest complexities of

artificial intelligence and big data, designed around the massive data load we

create each day. The open-source community works fast, addressing bugs,

security loopholes, and the simple need to make streamlined tools for

real-time insight. Today’s open-source tools result from years of research and

a generation of developers who don’t remember a time when data wasn’t the new

oil. Data itself is coming unlocked from previous silos and repositories,

existing in a continuous state—data in motion. Leveraging open-source tools

allows companies to dream of a reality in which company decisions are

data-driven by the second. Every person in the organization has access to the

data they need. Enterprises must find open-source tools with layers of

capability explicitly designed for their unique data picture. These tools

facilitate complex governance without creating pipeline bottlenecks. They

provide automated documentation of changes, usage, and authorship.

Threat identification is IT ops' role in SecOps

Identifying important assets helps focus SecOps efforts. Additionally, IT

operations teams should base threat identification practices on workflows. The

goal is to understand workflows and their properties, as well as the

statistical results of valid workflow patterns. IT ops teams can thus

recognize the ways in which a workflow deviates from the norm, and the potential

threats that such a deviation signals. There are generally two pieces to this

process: threat incident logging and tracking, and workflow monitoring for

abnormal patterns. Many security threats to IT systems require multiple

attempts by the attacker. At least some of these attempts get recognized,

reported and logged as violations. However, logging tools often ignore a low

volume of incidents. These tools use pattern analysis to indicate an active

threat. To help the tools find these patterns, classify threat incidents. For

example, a series of incidents from a single location or individual that has

rarely generated an incident -- imagine someone entering the wrong password --

is a potential threat indicator. While multiple incidents stemming from one

source are suspect, so is a series of incidents generated by different sources.

Intruders might try several different IP addresses in an attack, for example.

In this example, a pattern of events in the threat incident log will be

obvious.
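
The two patterns described above, repeated incidents from one source and a series of incidents spread across different sources, can be sketched as a simple grouping over a classified incident log. The tuple layout `(source, target, kind)`, the threshold of three, and the sample addresses are assumptions for illustration only.

```python
from collections import defaultdict

def find_suspect_patterns(incidents, threshold=3):
    """Return (repeated, distributed) patterns from (source, target, kind) incidents."""
    targets_by_source = defaultdict(list)   # (source, kind) -> targets hit
    sources_by_target = defaultdict(set)    # (target, kind) -> distinct sources
    for source, target, kind in incidents:
        targets_by_source[(source, kind)].append(target)
        sources_by_target[(target, kind)].add(source)

    # One source generating the same class of incident again and again.
    repeated = sorted(k for k, hits in targets_by_source.items() if len(hits) >= threshold)
    # Many different sources generating the same class of incident on one target.
    distributed = sorted(k for k, srcs in sources_by_target.items() if len(srcs) >= threshold)
    return repeated, distributed

incident_log = [
    ("10.0.0.5", "vpn", "bad_password"),
    ("10.0.0.5", "vpn", "bad_password"),
    ("10.0.0.5", "vpn", "bad_password"),
    ("203.0.113.1", "admin", "bad_password"),
    ("203.0.113.2", "admin", "bad_password"),
    ("203.0.113.3", "admin", "bad_password"),
    ("10.0.0.9", "mail", "bad_password"),   # a single slip, not a pattern
]

repeated, distributed = find_suspect_patterns(incident_log)
print("repeated:", repeated)
print("distributed:", distributed)
```

Classifying each incident by kind before grouping is what lets the low-volume events line up into a visible pattern instead of being ignored one by one.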

Demystifying Behavior Driven Development with Cucumber-JVM

Keeping aside the fancy terms for end-to-end test writing such as reusability,

maintainability, and scalability, I always prefer to have a simple definition

for writing them. That is, test cases should be written and arranged in a way

that they can run any number of times, in any sequence, and with a variety of

different datasets. However, it is not as simple as it sounds. This kind of

test writing approach requires different teams to collaborate and discuss

product behavior from the very first day. Therefore, Behavior Driven

Development is based on fair collaboration among the three amigos (Business

Analyst, Developer, and Tester) throughout. Intriguingly, the primary

reason for the popularity of BDD testing is its non-technical, clear, and

concise plain-English [or any other international language of your choice]

language. This way, a business owner can play a significant yet prompt role by

specifying the requirement in a language which is understood not just by

different teams (developers and testers) but also by the testing framework.

In our case of Cucumber-JVM, the commonly understandable language is

Gherkin, which shapes the overall concept. Gherkin is a language with no

technical barriers;

Quote for the day:

"Hold yourself responsible for a higher standard than anybody expects of you. Never excuse yourself." -- Henry Ward Beecher
