The Importance Of Predictive AI In Cybersecurity

The use of AI systems in cybersecurity can have three kinds of impact, as is

repeatedly stated in the work: «AI can: expand cyber threats (quantity);

change the typical character of these threats (quality); and introduce new

and unknown threats (quantity and quality)». Artificial intelligence could

expand the set of actors capable of carrying out malicious cyber activities,

the speed at which these actors can carry out those activities, and the set

of plausible targets. Fundamentally, AI-powered cyber attacks could also take

the form of more effective, finely targeted and sophisticated actions because

of the efficiency, scalability and adaptability of these solutions. Potential

targets become more easily identifiable and controllable. In a mix of

defensive techniques and cyber threat detection, AI will move towards

predictive techniques that can support Intrusion Detection Systems (IDS)

aimed at recognizing illegal activity within a computer or network, or spam

or phishing with two-factor authentication systems. The defensive use of AI

will also soon focus on automated vulnerability testing, also known as

fuzzing.

Top 9 ways RPA and analytics work together

Increasingly, organizations are using robotic process automation in analytics

tasks from assembling data spread across the company to analyzing how business

processes work and how they can improve. "RPA is helping streamline the

processes that create valuable insights, changing what areas analytics are

measuring and helping to find new domains of time-consuming tasks to focus

on," said Michael Shepherd, engineer at Dell Technologies Services. When it

comes to RPA and analytics, the automation tool should be a complement to,

rather than a replacement for, an integrated data platform across the company,

said Jonathan Hassell, content director for data and AI at O'Reilly Media.

When companies lack an integrated data platform, it makes analytics more

difficult overall. "RPA can help in some ways, but the potential for RPA to

unlock insights in data and further output and processes requires a good data

platform with great health and hygiene," he said. Hassell recommended

organizations look at three key ways RPA can change analytics. First, it helps

create better data from the outset. Second, the organization can deploy it in

the context of machine learning to sift through large quantities of data and

identify useful information for humans to look at.

Rethinking AI Ethics - Asimov has a lot to answer for

AI per se is neither ethical nor unethical; it is how practitioners apply it.

But if an AI model is introduced into the market that routinely disrupts

ethical concepts, what is it? A neutrally ethical artifact produced by

unethical people? Think about the judicial system COMPAS that routinely made

bail and sentencing recommendations two to three times more severe for

African-Americans. That is clearly an unethical AI application. Instead of

teaching people about the ethics of Plato, Aristotle and Kant and trying

to draw a line from that to build AI applications, a better approach is to

start with identifying developers who are simply good people. People who show

forbearance, who have the backbone to resist their organization's directive to

deliver wrong things. People who have prudence can look beyond the results of

a model and project how it will affect the world. Only good people should

develop AI. A quick search turns up over a hundred AI Ethics proclamations

from governments, non-profit special interest groups, government committees,

etc., and it's consuming too much energy and bandwidth. In fact, the cynical

view is that all of this is just a way to keep authorities from issuing rules

for AI.

Designing for Privacy — An Emerging Software Pattern

The first thing to do is to separate, and consider differently, the user data

that your system needs to “know” from the user data that the system can

collect and treat without actually having access to it. We call those two

categories: Known and Unknown User Data. There is no magic recipe for this

separation as it depends on your system’s functionality. There are systems

where all user data can be considered Unknown — where the system has no

knowledge of the user to whom it delivers its functionality. Yet, most systems

need to identify the user in order to charge for a service that they

deliver. A ride sharing platform might want to consider the identity of riders

and drivers “known” but the destination of the ride and any messages exchanged

between drivers and riders “unknown”. A hotel booking platform might perform

the split in such a way as to connect users with hotels, get a fee from hotels,

but ignore the dates of the booking that reveal the user’s whereabouts. Once

you segment which user data to treat as “known” and which as “unknown” you can

adopt a new flavor of client-server architecture: one where you treat the

“known” data as you normally would, but where the “unknown” user data is kept

at the user’s endpoint.
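
The known/unknown split described above can be illustrated with a minimal sketch. It assumes the ride-sharing example from the text; the field names, the `fare` field, the `KNOWN_FIELDS` set and the `Endpoint` class are all hypothetical, chosen only to show the idea that "unknown" data never leaves the user's device.

```python
from dataclasses import dataclass, field

# Hypothetical field names for a ride-sharing example. Which fields count as
# "known" is a per-system design decision, not something the pattern fixes.
KNOWN_FIELDS = {"rider_id", "driver_id", "fare"}


@dataclass
class Endpoint:
    """Stands in for the user's device, where "unknown" data stays."""
    local_store: dict = field(default_factory=dict)

    def submit(self, record: dict) -> dict:
        """Keep unknown fields locally; return only what the server may see."""
        known = {k: v for k, v in record.items() if k in KNOWN_FIELDS}
        unknown = {k: v for k, v in record.items() if k not in KNOWN_FIELDS}
        self.local_store.update(unknown)
        return known


ride = {
    "rider_id": "r-17",
    "driver_id": "d-42",
    "fare": 12.5,
    "destination": "Airport",
    "message": "I'm at the side entrance",
}
device = Endpoint()
server_payload = device.submit(ride)  # contains identities and fare only
```

In this sketch the server would receive `server_payload`, while the destination and messages remain in `device.local_store`. A real system would also need a way for riders and drivers to exchange the unknown data directly, which is outside the scope of this example.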

Blockchain may break EU privacy law—and it could get messy

This technology’s transparency and immutability, meaning it cannot be edited

later, are among its biggest selling points. It’s also why massive enterprises

have been drawn to using it. But if someone wanted to invoke their right to be

forgotten—and order entries about themselves to be erased from the

blockchain—networks may be duty bound to comply under court order. Here’s the

kicker: in some cases, it may be near impossible to obey these orders because

of the sheer levels of computing power required to edit a blockchain. And in

others, the decentralized nature of some networks may mean it’s impossible to

pin down someone who can be held responsible for fulfilling the court order.

As part of her research, Dr Wahlstrom looked at a variation of blockchain

technology that is known as Holochain. She concluded that it could be more

compatible with the “right to be forgotten” legislation because of how its

distributed database breaks the blockchain up—meaning it is easier for a

smaller node to prevent contested data from being reshared. “This allows

individuals to verify data without disclosing all its details or permanently

storing it in the cloud,” she added.

RBI seeks exemption from data protection law

The banking regulator has also gone a step further and suggested that instead

of the Central government, “sectoral regulators be given the power to classify

personal data as critical.” Any critical data, according to the proposed act,

can be processed only in India. Objecting to classification of financial data

as sensitive personal data, RBI’s note maintained that this would lead to

higher compliance and explicit consent, which “would translate to increase in

costs for providing services to customers. Financial inclusion efforts rely on

lower service charges for offering basic banking services. The increase in

costs would compel banks to increase the charges associated with offering

banking services.” RBI’s note also pointed out that countries such as the UK,

France, Germany and Italy do not make such a classification. Privacy experts

said the RBI cannot legally claim an exemption from the obligations that stem

from the 2017 Supreme Court ruling known as the Puttaswamy judgment, which upheld

privacy as a fundamental right for Indian citizens. “What the bill does is

flesh out that right in terms of the actual actions that need to be

done.”

Digital transformation takes more than the wave of a wand

Championing digital transformation requires more than a magic wand or “plug

and play” approach. Even the best technology is virtually worthless if

everyone isn’t able, available and on board to use it. Without the proper

talent, employee training and company-wide desire to evolve in place, people

will inevitably revert to their old ways, using antiquated and siloed tools

like Excel. Plus, the systems you already have in place have to keep running

smoothly as you roll out digitization plans. Digital transformation does not

happen with the flip of a switch. It requires ongoing strategic efforts to

create a balance among new technologies, strategic solutions and traditional

systems. This is why the aforementioned culture shift is essential before

starting your transformation journey. As Raconteur author Ben Rossi says in

this “Digital innovation and the supply chain” report, “A top-down mandate

from board level to drive supply chain transformation is critical to getting

the rest of the company to collaborate and change their mindset. Without that,

heads of supply chain will run into resistance to change, in turn reducing the

chance of achieving the broader transformation goals.”

Genetic Algorithms: a developer perspective

There are various interesting theories regarding convergence in evolutionary

algorithms, but these are of no concern to us here. Our interest is in

understanding how these algorithms may be used to solve Artificial

Intelligence problems, rather than in understanding why they actually work.

One important class of evolutionary algorithms used in practical applications

is genetic algorithms: these stress the importance of the data representation

used to encode possible solutions to our optimization problem. The class name

is inspired by an analogy with genetic code – the material that encodes our

‘phenotype’ or physical appearance. The use of the adjective ‘genetic’

reflects the fact that evolving solutions are represented by data structures,

usually strings, reminiscent of biological genetic code. ... The goal of a

genetic algorithm is to discover a phenotype that maximises fitness, by

allowing a certain population to evolve across several generations. The next

question is: how does the evolution of individuals happen? Genetic algorithms

apply a set of ‘genetic operations’ to chromosomes of each generation to allow

them to reproduce and, in the process, introduce random mutations, much as

occurs in most living beings.
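
The evolutionary loop described above can be sketched in a few lines. This is a toy example under stated assumptions, not the article's own implementation: chromosomes are bit strings, fitness is simply the number of 1 bits, and the operators (tournament selection, single-point crossover, bit-flip mutation, keeping the best individual each generation) as well as all parameter values are choices made here for illustration.

```python
import random

# All names and parameter values below are illustrative assumptions.
GENOME_LENGTH = 20
POPULATION_SIZE = 30
GENERATIONS = 40
MUTATION_RATE = 0.02


def fitness(chromosome):
    """Toy fitness: the number of 1 bits in the chromosome."""
    return sum(chromosome)


def select(population, rng):
    """Tournament selection: the fitter of two randomly drawn individuals."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randint(1, GENOME_LENGTH - 1)
    return parent_a[:point] + parent_b[point:]


def mutate(chromosome, rng):
    """Flip each bit independently with probability MUTATION_RATE."""
    return [bit ^ 1 if rng.random() < MUTATION_RATE else bit
            for bit in chromosome]


def evolve(seed=0):
    """Evolve a population for a fixed number of generations."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(POPULATION_SIZE)]
    for _ in range(GENERATIONS):
        best = max(population, key=fitness)  # carried over unchanged
        children = [
            mutate(crossover(select(population, rng),
                             select(population, rng), rng), rng)
            for _ in range(POPULATION_SIZE - 1)
        ]
        population = [best] + children
    return max(population, key=fitness)


best = evolve()
print(fitness(best), best)
```

Because the best individual of each generation is copied into the next one, the best fitness in the population never decreases. The bit string here plays the role of the genetic code, and the fitness function is where a real optimization problem would be plugged in.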

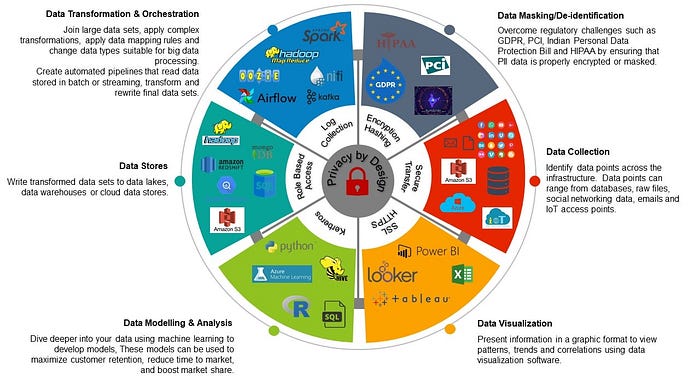

The Story of Data — Privacy By Design

Every byte of data has a story to tell. The question is whether the story is

being narrated accurately and securely. Usually, we focus sharply on the

trends around data with a goal of revenue acceleration but commonly forget

about the vulnerabilities caused by poor data management. Data possesses

immense power, but immense power comes with increased responsibility. In

today’s world, collecting and analyzing data and building prediction models is simply not

enough. I keep reminding my students that we are in a generation where the

requirements for data security have perhaps surpassed the need for data

correctness. Hence the need for Privacy By Design is greater than ever. ...

Until recently businesses have focused on looking at data over long stretches

of time, made possible by Big Data. With the advent of Internet of Things

(IoT) analyzing real-time data has gained immense importance. It is very

common these days to have devices in our homes that collect personal data and

transmit it to external locations for either monitoring or analytical

purposes. In many cases the poor consumer is finding it difficult to

balance the benefits they get from surrendering their personal data against

the risk involved with providing it.

Enterprise Data Literacy: Understanding Data Management

Sandwell believes that the Data Literacy problem stems from specialized

information needs and a lack of shared context. He remarked: “Data Literacy

affects all organizational levels. Everyone uses data for different reasons,

including senior managers and the Chief Data Officer (CDO). The CDO tends to

come from the business side and takes that perspective. However, he or she may

have a steep learning curve about making technical infrastructure ready to serve

and deliver.” On the technical side, workers have a good data inventory;

however, they have less of an understanding of what the data contents mean to

the business. Meanwhile the more data literate data scientists and business

analysts put business and technical information together faster, with more

direct data querying and manipulation. So, across the enterprise, everyone has a

different Data Literacy perspective and talks at cross purposes to one another.

Add to the situation various data maturity levels across departments and

enterprises. Some ask about “the data on-hand, where to access it, and how it

gets used and by whom.” Others have figured out these basics and have different

questions on how to do Metadata Management and create a data catalog of all the

data sets. Since everyone has different data requirements at different times,

getting to a uniform Enterprise Data Literacy remains elusive.

Quote for the day:

"Leading people is like cooking. Don't stir too much; it annoys the ingredients and spoils the food." -- Rick Julian
