RPA use cases that take RPA to the next level

"Start with a small piece of a larger process and take on more," he said. "Then, look upstream and downstream, and ask, 'How do we take that small use case and expand the scope of what the bot is automating? Can we take on more steps in the process, or can we initiate the automation earlier in the process to grow?'" At the same time, Abel said CIOs should create an RPA center of excellence and develop the tech talent needed to take on bigger RPA use cases. He agreed that a strong RPA governance program, one that ensures the bots are monitored and addresses change control procedures, is crucial. It's also essential to maintain a strong data governance program, he said, as the bots need good data to operate accurately. Additionally, Abel said he advises CIOs to work with other enterprise executives to develop RPA use cases that align with business objectives so that RPA deployments have long-term value. Abel pointed to one client's experience as a cautionary tale. He said that company jumped right into RPA, deploying bots to automate various tasks.

The underlying blockchain transactional network will be able to handle thousands of transactions per second; data about the financial transactions will be kept separate from data about the social network, according to David Marcus, the former president of PayPal. He is now leading Facebook's new digital wallet division, Calibra. Aside from limited cases, Calibra will not share account information or financial data with Facebook or any third party without customer consent, the social network said in a statement. "This means Calibra customers' account information and financial data will not be used to improve ad targeting on the Facebook family of products," Facebook said. Calibra and its underlying blockchain distributed ledger will scale to meet the demands of "billions," Marcus said in an interview with Fox Business News this morning. Libra is different from other cryptocurrencies, such as bitcoin, in that it is backed by fiat currency, so its value is not simply determined by supply and demand. Bitcoin is "not a good medium of exchange today because [fiat] currency is actually very stable and bitcoin is volatile," Marcus said in the Fox Business News interview.

Western Digital launches open-source zettabyte storage initiative

With this project, Western Digital is targeting cloud and hyperscale providers and anyone building a large data center who has to manage a large amount of data, according to Eddie Ramirez, senior director of product marketing for Western Digital. Western Digital is changing how data is written and stored, from the traditional random 4K block writes to large blocks of sequential data, like Big Data workloads and video streams, which are rapidly growing in size and use in the digital age. “We are now looking at a one-size-fits-all architecture that leaves a lot of TCO [total cost of ownership] benefits on the table if you design for a single architecture,” Ramirez said. “We are looking at workloads that don’t rely on small block randomization of data but large block sequential write in nature.” Because drives use 4K write blocks, that leads to over-provisioning of storage, especially around SSDs. This is true of consumer and enterprise SSDs alike. My 1TB SSD has only 930GB available. And that loss scales. An 8TB SSD has only 6.4TB available, according to Ramirez.
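The capacity figures quoted above can be turned into a percentage with simple arithmetic. The sketch below is illustrative only: the function name `unavailable_fraction` is hypothetical, and it treats the gap between advertised and available capacity as a single number, without separating over-provisioning from other overheads such as unit conversion or file-system metadata.

```python
def unavailable_fraction(advertised_gb: float, available_gb: float) -> float:
    """Return the share of advertised capacity the user cannot use."""
    if advertised_gb <= 0:
        raise ValueError("advertised capacity must be positive")
    return (advertised_gb - available_gb) / advertised_gb


# Figures quoted in the article (1TB taken as 1,000GB for simplicity).
small = unavailable_fraction(1000, 930)   # 1TB drive with 930GB available
large = unavailable_fraction(8000, 6400)  # 8TB drive with 6.4TB available

print(f"1TB drive: {small:.0%} of capacity unavailable")
print(f"8TB drive: {large:.0%} of capacity unavailable")
```

On these numbers the unavailable share grows from roughly 7 percent on the 1TB drive to 20 percent on the 8TB drive, which is the scaling loss the article describes.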

'Extreme But Plausible' Cyberthreats

A new report from Accenture highlights five key areas where cyberthreats in the financial services sector will evolve. Many of these threats could commingle, making them even more disruptive, says Valerie Abend, a managing director at Accenture who's one of the authors of the report. The report, "Future Cyber Threats: Extreme But Plausible Threat Scenarios in Financial Services," focuses on credential and identity theft; data theft and manipulation; destructive and disruptive malware; cyberattackers' use of emerging technologies, such as blockchain, cryptocurrency and artificial intelligence; and disinformation campaigns. In an interview with Information Security Media Group, Abend offers an example of how attackers could commingle threats. If attackers were to wage "a multistaged attack using credential theft against multiple parties that then used disruptive or destructive malware, so that they actually change the information at key points in the business process of critical financial functions ... and then used misinformation outside of that entity using various parts of social media ... they could really do some serious damage," Abend says.

How to prepare for and navigate a technology disaster

Two key developments will have the largest impact on business continuity and disaster recovery planning. The first is serverless architecture. Using this term very loosely, the adoption of these capabilities will dramatically increase application and data portability and enable workloads to be executed virtually anywhere. We're still some way from this being the default way you build applications, but it's coming, and it's coming fast. The second is edge computing. As modern applications and business intelligence are moved to the edge, the ability to 'fail over' to additional resources will increase, minimizing (if not eliminating) real and perceived downtime. The more identical places you can run your application, the better the level of availability and performance is going to be. This definitely isn't simple, but we're seeing (and developing) applications each and every day that are built with this architecture in mind, and it's game changing for enterprise and application architecture and planning.
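The point about running an application in more identical places can be illustrated with the standard availability calculation for redundant replicas. This is a rough sketch under two assumptions that the article does not state: replicas fail independently of each other, and any single healthy replica is enough to serve traffic. The function name `combined_availability` is hypothetical.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability of a service that is up when at least one replica is up.

    Assumes replicas fail independently, which real deployments only approximate.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single-replica availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    # The service is down only when every replica is down at the same time.
    return 1.0 - (1.0 - single) ** replicas


for n in (1, 2, 3):
    print(f"{n} replica(s): {combined_availability(0.99, n):.6f}")
```

Correlated failures, such as a shared region or a shared dependency, reduce the benefit, so the result is best read as an upper bound.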

Q&A on the Book Risk-First Software Development

The Risk Landscape is the idea that whenever we do something to deal with one risk, what’s actually happening is that we’re going to pick up other risks as a result. For example, hiring new developers into a team might mean you can clear more Feature Risks (by building features the customers need), but it also means you’re going to pick up Coordination and Agency Risk, because of your bigger team. So, you’re moving about on a Risk Landscape, hoping to find a nice position where the risks are better for you. This first volume of Risk-First Software Development was all about that landscape, and the types of risks you’ll find on it. I am planning a second volume, which again will all be available to read on riskfirst.org. This will focus more on the tools and techniques you can use to navigate the Risk Landscape. For example, if I have a distributed team, I might face a lot of Coordination Risk, where work is duplicated, or people step on each other’s toes. What are the techniques I can use to address that? I could introduce a chat tool like Slack, but it might end up wasting developer time and causing more Schedule Risk.

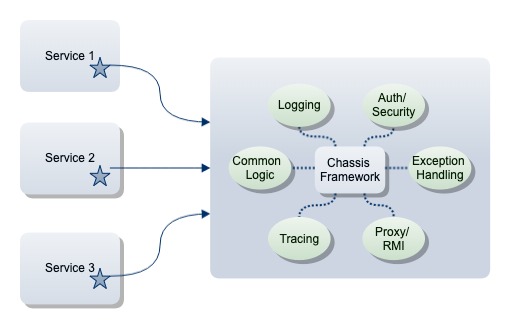

This is not something new. Reusability is something we learn at the very beginning of our developer lives. This pattern cuts down on the redundancy factor and complexity across services by abstracting the common logic to a separate layer. If you have a very generic chassis, it could even be used across platforms or organizations and wouldn't need to be limited to a specific project. It depends on how you write it and what piece of logic you move to this framework. Chassis are a part of your microservices infrastructure layer. You can move all sorts of connectivity, configuration, and monitoring to a base framework. ... When you start writing a new service by identifying a domain (DDD) or by identifying the functionality, you might end up writing lots of common code. As you progress and create more and more services, it could result in code duplication, or even chaos, to manage such common concerns and redundant functionalities. Moving such logic to a common place and reusing it across different services would improve the overall lifecycle of your service. You might spend some initial effort in creating this component but it will make your life easier later on.
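As a minimal sketch of the idea, the common concerns named above (configuration, monitoring) can live in a base class that each service inherits. Everything here is hypothetical and for illustration only: the class names `ServiceChassis` and `OrderService`, the environment-variable prefix convention, and the shape of the health payload are assumptions, not part of any particular chassis framework.

```python
import json
import logging
import os
from typing import Any, Dict


class ServiceChassis:
    """Hypothetical base class holding concerns shared by every service."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config = self._load_config()
        self.logger = self._build_logger()

    def _load_config(self) -> Dict[str, Any]:
        # Configuration: collect environment variables that carry a
        # service-specific prefix, e.g. ORDERS_PORT (an assumed convention).
        prefix = f"{self.name.upper()}_"
        return {
            key[len(prefix):].lower(): value
            for key, value in os.environ.items()
            if key.startswith(prefix)
        }

    def _build_logger(self) -> logging.Logger:
        # Monitoring: every service gets a logger named after itself.
        logger = logging.getLogger(self.name)
        logger.setLevel(logging.INFO)
        return logger

    def health(self) -> str:
        # A uniform health payload that any service can expose.
        return json.dumps({"service": self.name, "status": "UP"})


class OrderService(ServiceChassis):
    """A concrete service that only adds its own domain logic."""

    def __init__(self) -> None:
        super().__init__("orders")

    def place_order(self, item: str) -> Dict[str, str]:
        self.logger.info("placing order for %s", item)
        return {"item": item, "status": "accepted"}


if __name__ == "__main__":
    service = OrderService()
    print(service.health())
    print(service.place_order("book"))
```

A second service would subclass `ServiceChassis` in the same way and inherit configuration loading, logging, and the health check without repeating them.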

Remember, data is used for dealing with your customers, making decisions, generating reports, understanding revenue and expenditures. Everyone from the customer service team to your senior executive team uses data and relies on it being good enough to use. Data governance provides the foundation so that everything else can work. This will include obvious “data” activities like master data management, business intelligence, big data analytics, machine learning and artificial intelligence. But don’t get stuck thinking only in terms of data. Lots of processes in your organization can go wrong if the data is wrong, leading to customer complaints, damaged stock, and halted production lines. Don’t limit your thinking to only data activities. If your organization is using data (and to be honest, which companies aren’t?) you need data governance. Some people may not believe that data governance is sexy, but it is important for everyone. It need not be an overly complex burden that adds controls and obstacles to getting things done. Data governance should be a practical thing, designed to proactively manage the data that is important to your organization.

A well-managed cloud storage service ties directly into the apps you use to create and edit business documents, unlocking a host of collaboration scenarios for employees in your organization and giving you robust version tracking as a side benefit. Any member of your organization can, for example, create a document (or a spreadsheet or presentation) using their office PC, and then review comments and changes from co-workers using a phone or tablet. A cloud-based file storage service also allows you to share files securely, using custom links or email, and it gives you as administrator the power to prevent people in your organization from sharing your company's secrets without permission. With the assistance of sync clients for every major desktop and mobile platform, employees have access to key work files anytime, anywhere, on any device. You might already have access to full-strength cloud collaboration features without even knowing it. If you use Microsoft's Office 365 or Google's G Suite, cloud storage isn't a separate product, it's a feature.

Boost QA velocity with incremental integration testing

There are several strategies for incremental integration testing, including bottom-up, top-down and a hybrid approach blending elements of both, as well as automation. Each method has benefits and limitations, and gets incorporated into an overall test strategy in different ways. These incremental approaches help enable shift-left testing, which means automation shapes how teams can perform the practice. ... Often, the best approach is hybrid, or sandwich, integration testing, which combines both top-down and bottom-up techniques. Hybrid integration testing exploits bottom-up and top-down during the same test cycles. Testers use both drivers and stubs in this scenario. The hybrid approach is multilayered, testing at least three levels of code at the same time. Hybrid integration testing offers the advantages of both approaches, all in support of shift left. Some of the disadvantages remain, especially as the test team must work on both drivers and stubs.
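The roles of drivers and stubs in a hybrid cycle can be sketched with three small layers. This is an illustrative example only: the class names (`CheckoutController`, `OrderService`, `InventoryRepository`), the stub, and the driver function are all hypothetical, and a real project would usually run such checks through a test framework.

```python
class InventoryRepository:
    """Bottom layer: holds stock levels."""

    def __init__(self) -> None:
        self._stock = {"widget": 3}

    def count(self, sku: str) -> int:
        return self._stock.get(sku, 0)


class OrderService:
    """Middle layer: business rule built on the repository."""

    def __init__(self, repository: InventoryRepository) -> None:
        self._repository = repository

    def can_fulfil(self, sku: str, quantity: int) -> bool:
        return self._repository.count(sku) >= quantity


class CheckoutController:
    """Top layer: turns the service decision into a user-facing result."""

    def __init__(self, service) -> None:
        self._service = service

    def checkout(self, sku: str, quantity: int) -> str:
        return "confirmed" if self._service.can_fulfil(sku, quantity) else "rejected"


class OrderServiceStub:
    """Stub: stands in for the middle layer with a canned answer."""

    def can_fulfil(self, sku: str, quantity: int) -> bool:
        return True


def drive_repository(sku: str) -> int:
    """Driver: test code that calls the bottom layer directly."""
    return InventoryRepository().count(sku)


def test_top_down() -> None:
    # The top layer is exercised against a stub of the layer below it.
    assert CheckoutController(OrderServiceStub()).checkout("widget", 99) == "confirmed"


def test_bottom_up() -> None:
    # The bottom layer is exercised through a driver.
    assert drive_repository("widget") == 3


def test_integrated() -> None:
    # Once both sides pass, the real layers are wired together.
    controller = CheckoutController(OrderService(InventoryRepository()))
    assert controller.checkout("widget", 2) == "confirmed"


if __name__ == "__main__":
    test_top_down()
    test_bottom_up()
    test_integrated()
    print("all integration checks passed")
```

The first two checks can run in the same test cycle before the middle layer is finished, which is what makes the approach a sandwich; the cost is that the team maintains both the stub and the driver.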

Quote for the day:

"What you do makes a difference, and you have to decide what kind of difference you want to make." -- Jane Goodall
