MongoDB CEO tells hard truths about commercial open source

As ugly as that sentiment may seem, it's (mostly) true. Not completely, because MongoDB has had some external contributions. For example, Justin Dearing responded to Ittycheria's claim thus: "As someone that has made a (very tiny) contribution to the [MongoDB] server source code, this is kind of insulting to hear [it] said this way." There's also the inconvenient truth that part of MongoDB's popularity has been the broad array of drivers available. While the company writes the primary drivers used with MongoDB, it relies on third-party developers to pick up the slack on lesser-used drivers. Those drivers, though less used, still contribute to the overall value of MongoDB. But it's largely true, all the same. And it's probably even more true of all the other open source companies that have been lining up to complain about public clouds like AWS "stealing" their code. None of these companies is looking for code contributions. Not really. When AWS, for example, has tried to commit code, it has been rebuffed.

Wyden also recommends implementing new technology and better training for government workers to help ensure that sensitive documents can be sent securely with better encryption. "Many people incorrectly believe that password-protected .zip files can protect sensitive data," Wyden writes in the letter. "Indeed, many password-protected .zip files can be easily broken with off-the-shelf hacking tools. This is because many of the software programs that create .zip files use a weak encryption algorithm by default. While secure methods to protect and share data exist and are freely available, many people do not know which software they should use." Wyden notes that the increasing number of data breaches, as well as nation-state attacks, points to the need to develop new standards, protocols and guidelines to ensure that sensitive files are encrypted and can be securely shared. He also asked NIST to develop easy-to-use instructions so that the public can take advantage of newer technologies. A spokesperson for NIST tells Information Security Media Group that the agency is reviewing Wyden's letter and will provide a response to the senator's concerns and questions.

Q&A on the Book Empathy at Work

Emotional empathy is inherent in us; when we see someone laughing, we smile. When we see someone crying, we feel sad. Cognitive empathy is understanding what a person is thinking or feeling; this one is often referred to as “perspective taking” because we are actively engaged in attempting to “get” where the person is coming from. Empathic concern is being so moved by what another person is going through that we are empowered to act. The majority of the time when people are talking about empathy, they are referring to empathic concern. These definitions of empathy are all accurate and informative. But a big point that I always try to make is that empathy is a verb; it’s a muscle that must be worked consistently for any real change to occur. That’s why everyone’s definition of what empathy is in their own lives is going to be a little bit different. We all feel understood in a different way, so each person truly has to define what empathy looks and feels like for themselves. For me, it’s allowing me to finish my thoughts. I’m a stutterer, and it sometimes takes me a bit to get a word or a thought out.

A Developer's Journey into AI and Machine Learning

There are challenges. Microsoft really has to convince developers and data engineers that data science, AI and ML are not some big, scary, hyper-nerd technology. There are corners where that is true, but this field is open to anyone who's willing to learn. And Microsoft is certainly doing its part with streamlined services and tools like Cognitive Services and ML.NET. At the end of the day, anyone who is already a developer/engineer clearly has the core capabilities to be successful in this field. All people need to do is level up some of their skills and add new ones. In some cases, people will need to unlearn what they've already learned, particularly around certainty. The way I like to put it, a DBA (database admin) will always give a precise answer. Inaccurate maybe, but never imprecise. "There are 864,782 records in table X," for example. But a data science/ML/AI practitioner deals with probabilities. "There's an 86.568% chance there's a cat in this picture." It's a change of mindset as much as a change in technologies.
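
To make the DBA-versus-data-scientist contrast concrete, here is a small sketch of both kinds of answer side by side. The table contents and the classifier scores are made up for illustration, and softmax is just one common way a classifier turns raw scores into probabilities; it is not necessarily what Cognitive Services or ML.NET do internally.

```python
import math
import sqlite3

# Deterministic world: a database count is exact and repeatable.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE x (id INTEGER PRIMARY KEY)")
conn.executemany("INSERT INTO x (id) VALUES (?)", ((i,) for i in range(864_782)))
(row_count,) = conn.execute("SELECT COUNT(*) FROM x").fetchone()


def softmax(scores):
    """Turn raw classifier scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Probabilistic world: hypothetical scores a model might emit for one picture.
labels = ["cat", "dog", "other"]
probs = dict(zip(labels, softmax([2.5, 0.7, 0.1])))

print(f"There are {row_count:,} records in table X")
print(f"There's a {probs['cat']:.3%} chance there's a cat in this picture")
```

The count comes back identical on every run; the cat probability is only as good as the (here, invented) model behind it.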

Break free from traditional network security

While it can be argued that perimeterless network security will become essential to keep the wheels of commerce turning, Simon Persin, director at Turnkey Consulting, says: “A lack of network perimeters needs to be matched with technology that can prevent damage.” In a perimeterless network architecture, the design and behaviour of the network infrastructure should aim to prevent IT assets from being exposed to threats such as rogue code. Persin says that by understanding which protocols are allowed to run on the network, a software-defined network (SDN) can allow people to perform the legitimate tasks required by their role. Within a network architecture, an SDN separates the forwarding and control planes. Paddy Francis, chief technology officer for Airbus CyberSecurity, says this means routers essentially become basic switches, forwarding network traffic in accordance with rules defined by a central controller. What this means from a monitoring perspective, says Francis, is that packet-by-packet statistics can be sent back to the central controller from each forwarding element.
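
A toy model can show the split between the control and forwarding planes that Persin and Francis describe. In this sketch the roles, protocols and default-deny policy are hypothetical, and pushing a Python dict stands in for a real southbound protocol such as OpenFlow: the controller decides which protocols each role may use, the switch only applies the rules it was given, and per-rule packet counts flow back to the controller for monitoring.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Packet:
    src_role: str  # hypothetical role tag, e.g. "finance"
    protocol: str  # e.g. "https", "ssh"


@dataclass
class Switch:
    """Forwarding element: holds no policy of its own, only rules pushed to it."""
    rules: dict = field(default_factory=dict)  # (role, protocol) -> "forward"
    stats: Counter = field(default_factory=Counter)

    def handle(self, pkt: Packet) -> str:
        # Anything the controller did not explicitly allow is dropped.
        action = self.rules.get((pkt.src_role, pkt.protocol), "drop")
        self.stats[(pkt.src_role, pkt.protocol, action)] += 1
        return action


class Controller:
    """Central control plane: defines policy and gathers statistics."""

    def __init__(self, allowed: dict):
        self.allowed = allowed  # role -> set of protocols that role needs
        self.switches = []

    def attach(self, switch: Switch) -> None:
        switch.rules = {
            (role, proto): "forward"
            for role, protos in self.allowed.items()
            for proto in protos
        }
        self.switches.append(switch)

    def collect_stats(self) -> Counter:
        total = Counter()
        for sw in self.switches:
            total.update(sw.stats)
        return total


ctrl = Controller({"finance": {"https"}, "engineering": {"https", "ssh"}})
edge = Switch()
ctrl.attach(edge)
print(edge.handle(Packet("finance", "ssh")))  # finance has no need for ssh
print(ctrl.collect_stats())
```

Keeping the policy in one place means changing what a role may do is a single controller update, while every forwarding element reports what it actually saw.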

The Unreasonable Effectiveness of Software Analytics

Software analytics distills large amounts of low-value data into small chunks of very-high-value data. Such chunks are often predictive; that is, they can offer a somewhat accurate prediction about some quality attribute of future projects—for example, the location of potential defects or the development cost. In theory, software analytics shouldn’t work because software project behavior shouldn’t be predictable. Consider the wide, ever-changing range of tasks being implemented by software and the diverse, continually evolving tools used for software’s construction (for example, IDEs and version control tools). Let’s make that worse. Now consider the constantly changing platforms on which the software executes (desktops, laptops, mobile devices, RESTful services, and so on) or the system developers’ varying skills and experience. Given all that complex and continual variability, every software project could be unique. And, if that were true, any lesson learned from past projects would have limited applicability for future projects.
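
As a rough illustration of "distilling low-value data into high-value chunks", the sketch below condenses a pile of per-commit records into a short list of likely defect hotspots. The commit data is invented, and the ranking rule (files with more past bug fixes and more churn are likelier to hold future defects) is a common heuristic in defect prediction, assumed here rather than taken from the article.

```python
from collections import defaultdict

# Hypothetical raw data: (file touched, lines changed, was it a bug-fix commit?)
commits = [
    ("parser.c", 120, True),
    ("parser.c", 45, False),
    ("ui.c", 10, False),
    ("net.c", 300, True),
    ("net.c", 80, True),
    ("util.c", 5, False),
]


def rank_defect_hotspots(records, top_n=2):
    """Return the top_n files ranked by past bug fixes, then by total churn."""
    churn = defaultdict(int)
    fixes = defaultdict(int)
    for path, lines_changed, is_bugfix in records:
        churn[path] += lines_changed
        fixes[path] += int(is_bugfix)
    ranked = sorted(churn, key=lambda p: (fixes[p], churn[p]), reverse=True)
    return ranked[:top_n]


print(rank_defect_hotspots(commits))  # ['net.c', 'parser.c']
```

Whether such a ranking transfers from one project to the next is exactly the question the paragraph raises.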

Robots can now decode the cryptic language of central bankers

But robots aren’t that smart yet, according to Dirk Schumacher, a Frankfurt-based economist at French lender Natixis SA, which this month started publishing an automated sentiment index of European Central Bank meeting statements. “The question is how intelligent it can become,” he said. “Maybe in a few years’ time we’ll have algorithms which get everything right, but at this stage I find it a nice crosscheck to verify one’s own assessments.” The main edge humans still have over machines is being able to read and understand ambiguity, Schumacher said. While Natixis’ system can quantify how optimistic or pessimistic ECB policy makers are by looking at word choice and intensity, it can’t discern if a policy maker said something ironic, although arguably not all humans could either. “It’s not a perfect science and it’s hard to see that humans will be replaced by these methods anytime soon,” said Elisabetta Basilico, an investment adviser who writes about quantitative finance. Prattle, which was recently acquired by Liquidnet, claims its software accurately predicts G10 interest rate moves 9.7 times out of 10.
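
A minimal lexicon-based scorer shows what "quantifying sentiment from word choice and intensity" can look like. The word list, weights and intensifiers below are hypothetical and far cruder than anything Natixis or Prattle would use; the sketch also inherits the blind spot described above, since it scores words, not meaning, and so cannot detect irony.

```python
import re

# Hypothetical weights: positive = hawkish, negative = dovish.
LEXICON = {
    "inflation": 1.0,
    "tighten": 1.5,
    "hike": 1.5,
    "robust": 0.5,
    "accommodative": -1.5,
    "stimulus": -1.0,
    "downside": -1.0,
    "weak": -0.5,
}
# Hypothetical intensity modifiers that scale the next scored word.
INTENSIFIERS = {"very": 1.5, "significantly": 1.5, "slightly": 0.5}


def sentiment_score(statement: str) -> float:
    """Average weighted sentiment per scored word; > 0 hawkish, < 0 dovish."""
    words = re.findall(r"[a-z]+", statement.lower())
    total, hits, boost = 0.0, 0, 1.0
    for word in words:
        if word in INTENSIFIERS:
            boost = INTENSIFIERS[word]
            continue
        if word in LEXICON:
            total += LEXICON[word] * boost
            hits += 1
        boost = 1.0  # an intensifier only applies to the word right after it
    return total / hits if hits else 0.0


print(sentiment_score("The outlook remains weak and policy stays very accommodative."))
```

For that statement the scorer finds "weak" (-0.5) and an intensified "accommodative" (-1.5 × 1.5), giving an average of -1.375, a dovish reading.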

Error-Resilient Server Ecosystems for Edge and Cloud Datacenters

Realizing our proposed error-resilient, energy-efficient ecosystem faces many challenges, in part because it requires the design of new technologies and the adoption of a system operation philosophy that departs from the current pessimistic one. The UniServer Consortium (www.uniserver2020.eu), consisting of academic institutions and leading companies such as AppliedMicro Circuits, ARM, and IBM, is working toward such a vision. Its goal is the development of a universal system architecture and software ecosystem for servers used for cloud- and edge-based datacenters. The European Community’s Horizon 2020 research program is funding UniServer (grant no. 688540). The consortium is already implementing our proposed ecosystem in a state-of-the-art X-Gene2 eight-core, ARMv8-based microserver with 28-nm feature sizes. The initial characterization of the server’s processing cores shows that there is a significant safety margin in the supply voltage used to operate each core. Results show that some cores could operate at 10 percent below the nominal supply voltage that the manufacturer advises. This could lead to a 38 percent power savings.
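
For a rough sense of why undervolting saves power, the textbook first-order model of dynamic CMOS power helps, where α is the switching activity, C the switched capacitance, V the supply voltage and f the clock frequency. This is a standard approximation, not the UniServer team's own model, and it ignores leakage.

```latex
P_{\text{dyn}} = \alpha C V^{2} f
\qquad\Longrightarrow\qquad
\frac{P_{\text{dyn}}(0.9\,V)}{P_{\text{dyn}}(V)} = 0.9^{2} = 0.81
```

At a fixed clock frequency, running 10 percent below nominal voltage therefore cuts dynamic power by about 19 percent under this model. The 38 percent figure reported above is larger, so it presumably also reflects effects the formula leaves out, such as leakage power, which also falls as voltage drops; the excerpt does not say how the figure was derived.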

What is edge computing, and how can you get started?

Edge computing architecture is a modernized version of data center and cloud architectures with the enhanced efficiency of having applications and data closer to sources, according to Andrew Froehlich, president of West Gate Networks. Edge computing also seeks to eliminate bandwidth and throughput issues caused by the distance between users and applications. Edge computing is not the same as the network edge, which is more similar to a town line. A network edge is one or more boundaries within a network that divide the enterprise-owned and third-party-operated parts of the network, Froehlich said. This distinction enables IT teams to designate control of network equipment. Edge computing's ability to bring compute and storage into or near enterprise branches is attractive to those who require quick response times and support for large amounts of data. Edge computing can bring several benefits to enterprise networks with centralized management, lights-out operations and cloud-style infrastructure, according to John Burke.
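
Distance alone puts a floor under response time, which is part of why moving compute closer helps. The back-of-the-envelope sketch below assumes light in optical fiber covers roughly 200,000 km per second (about two-thirds of its vacuum speed) and uses made-up distances for a remote cloud region and an on-site edge node.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed.
FIBER_KM_PER_SECOND = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Propagation-only lower bound on round-trip time, in milliseconds.

    Ignores queuing, processing and the fact that real fiber routes are
    longer than straight-line distance, so real round trips take longer.
    """
    return 2 * distance_km * 1000 / FIBER_KM_PER_SECOND


# Hypothetical distances: a distant cloud region vs. an on-site edge node.
for label, km in [("cloud region", 1500), ("edge site", 15)]:
    print(f"{label:>12}: >= {min_round_trip_ms(km):.2f} ms")
```

No amount of bandwidth removes that propagation floor; only shortening the distance does.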

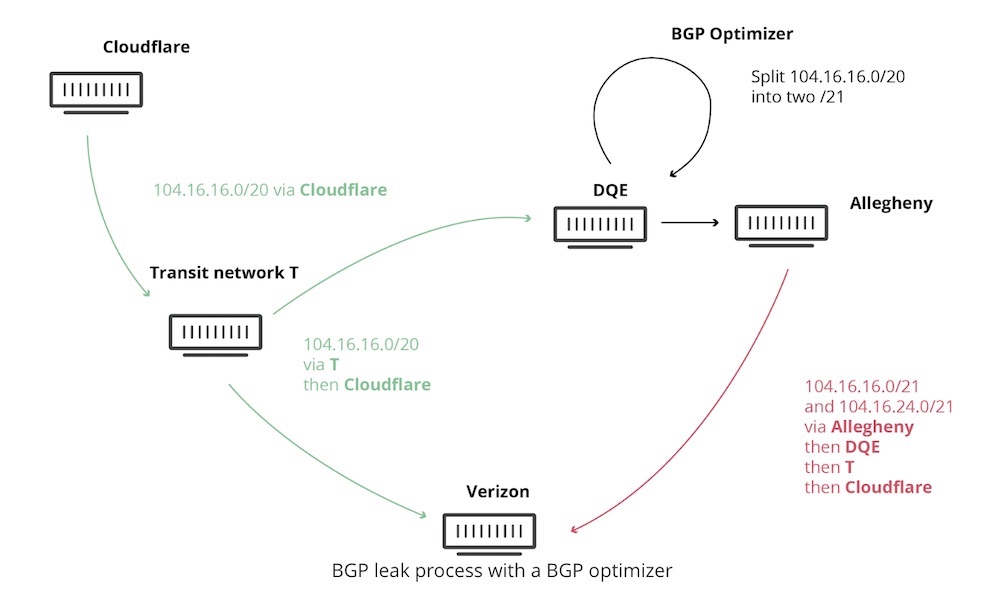

Cloudflare Criticizes Verizon Over Internet Outage

Cloudflare put the blame squarely on Verizon for not adequately filtering erroneous routes announced by an ISP, DQE Communications, in Pennsylvania. It pulled no punches, saying there was no good reason for Verizon's failure other than "sloppiness or laziness." "The leak should have stopped at Verizon," writes Tom Strickx, a Cloudflare network software engineer, in the blog post. "However, against numerous best practices outlined below, Verizon's lack of filtering turned this into a major incident that affected many Internet services such as Amazon, Linode and Cloudflare." DQE used a BGP optimizer, which splits IP prefixes into smaller, more specific BGP routes, Strickx writes. Those more specific routes win out over more general ones, because routers forward traffic along the most specific matching prefix. DQE announced the routes to one of its customers, Allegheny Technologies, a metals manufacturing company. Then, those routes went to Verizon. To be fair, the ultimate responsibility falls on DQE for announcing the wrong routes. Allegheny is somewhat to blame for passing those routes on. But then Verizon, one of the largest transit providers in the world, propagated the routes.
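
The sketch below shows why more-specific leaked routes are so damaging, and how customer prefix filtering of the kind the quoted post says Verizon lacked would have contained them. It is a simplification under stated assumptions: it models only the longest-prefix-match forwarding lookup, not BGP's full best-path selection, and the documentation prefixes 203.0.113.0/24 and 192.0.2.0/24 stand in for real address space.

```python
import ipaddress


def best_route(table, destination):
    """Longest-prefix match: of the routes covering destination, pick the most specific."""
    dest = ipaddress.ip_address(destination)
    covering = [(prefix, via) for prefix, via in table if dest in prefix]
    return max(covering, key=lambda route: route[0].prefixlen, default=None)


def filter_customer(announcements, allowed):
    """Accept a customer's routes only if they fall inside prefixes it may originate."""
    return [
        (prefix, via)
        for prefix, via in announcements
        if any(prefix.subnet_of(ok) for ok in allowed)
    ]


legitimate = [(ipaddress.ip_network("203.0.113.0/24"), "legitimate origin")]
# Hypothetical leak: a customer passes on a more specific slice of someone else's space.
from_customer = [
    (ipaddress.ip_network("203.0.113.0/25"), "leaked via customer"),
    (ipaddress.ip_network("192.0.2.0/24"), "customer's own prefix"),
]
customer_allowed = [ipaddress.ip_network("192.0.2.0/24")]

unfiltered = legitimate + from_customer
filtered = legitimate + filter_customer(from_customer, customer_allowed)

print(best_route(unfiltered, "203.0.113.10")[1])  # leaked via customer
print(best_route(filtered, "203.0.113.10")[1])    # legitimate origin
```

Without the filter, the /25 beats the legitimate /24 for every address it covers; with it, the customer's genuine prefix still gets through while the leak is dropped at the first hop.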

Quote for the day:

"Defeat is not the worst of failures. Not to have tried is the true failure." -- George Woodberry