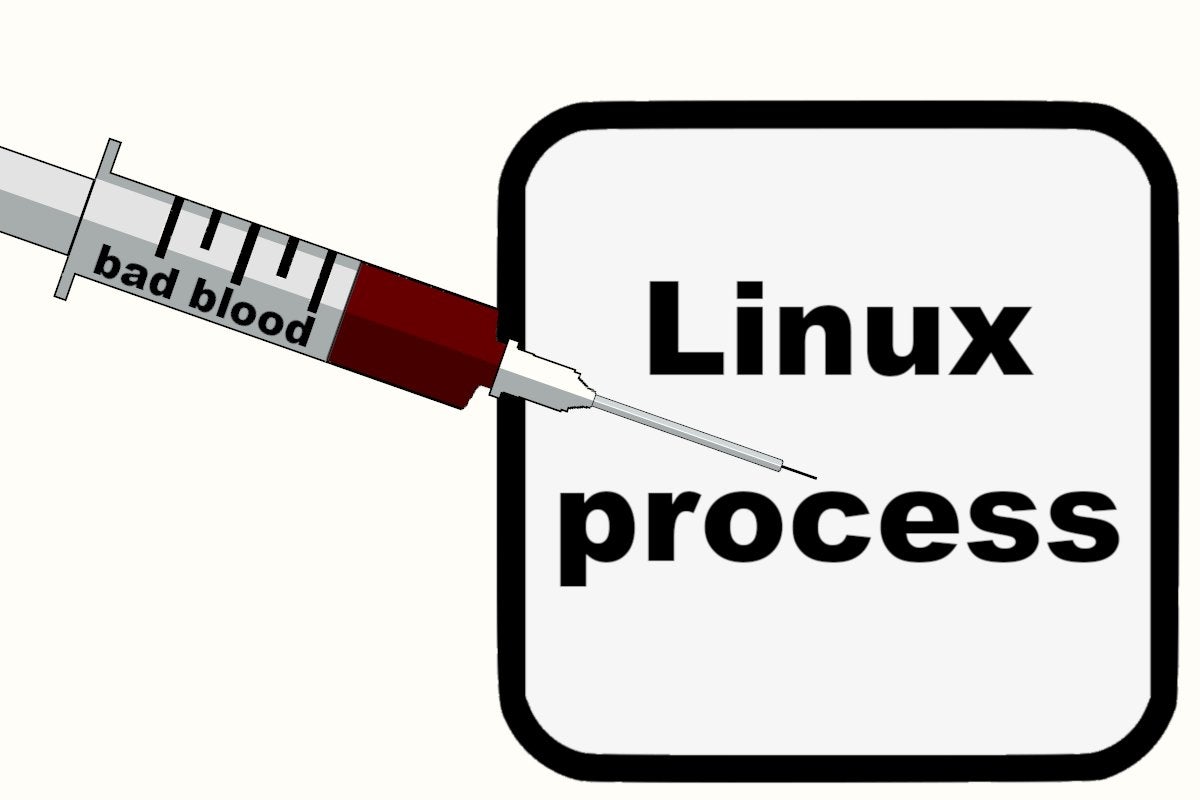

Tracking down library injections on Linux

The linux-vdso.so.1 file (which may have a different name on some systems) is a virtual shared object that the kernel automatically maps into the address space of every process, so that a few kernel routines such as clock_gettime can be called without the cost of a full system call. Finding and loading the other shared libraries a process requires is the job of the dynamic linker (ld.so). One way this library-loading mechanism is exploited is through an environment variable called LD_PRELOAD. As Jaime Blasco explains in his research, "LD_PRELOAD is the easiest and most popular way to load a shared library in a process at startup. This environmental variable can be configured with a path to the shared library to be loaded before any other shared object." ... Note that LD_PRELOAD is sometimes used legitimately: security monitoring tools, for example, may use it, as might developers who are troubleshooting, debugging or doing performance analysis. However, its use is still quite uncommon and should be viewed with some suspicion. It's also worth noting that osquery can be used interactively or run as a daemon (osqueryd) for scheduled queries. See the reference at the bottom of this post for more on this.
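
A minimal sketch of one way to look for LD_PRELOAD on a live system, written in plain Python as an illustration rather than the osquery-based method the post refers to. It assumes a Linux host with /proc mounted: it reads each process's /proc/<pid>/environ (NUL-separated variables, reflecting the environment the process was started with) and the system-wide /etc/ld.so.preload file, which the dynamic linker also honours. The function names are hypothetical; seeing other users' processes requires root, and a hit is a lead to investigate rather than proof of compromise, since LD_PRELOAD has legitimate uses.

```python
from pathlib import Path
from typing import Dict, List, Optional


def preload_from_environ(raw: bytes) -> Optional[str]:
    """Return the LD_PRELOAD value from a NUL-separated environ blob, if set."""
    prefix = b"LD_PRELOAD="
    for entry in raw.split(b"\0"):
        if entry.startswith(prefix):
            return entry[len(prefix):].decode(errors="replace")
    return None


def scan_processes(proc_root: str = "/proc") -> Dict[int, str]:
    """Map PID -> LD_PRELOAD value for every readable process that sets it."""
    hits: Dict[int, str] = {}
    root = Path(proc_root)
    if not root.is_dir():  # not Linux, or /proc is not mounted
        return hits
    for pid_dir in root.iterdir():
        if not pid_dir.name.isdigit():
            continue  # skip non-process entries such as /proc/sys
        try:
            raw = (pid_dir / "environ").read_bytes()
        except OSError:
            continue  # process exited, or we lack permission (run as root to see all)
        value = preload_from_environ(raw)
        if value:
            hits[int(pid_dir.name)] = value
    return hits


def global_preloads(path: str = "/etc/ld.so.preload") -> List[str]:
    """Libraries listed in the system-wide preload file (whitespace-separated)."""
    try:
        return Path(path).read_text().split()
    except FileNotFoundError:
        return []  # the file is normally absent


if __name__ == "__main__":
    for pid, libs in sorted(scan_processes().items()):
        print(f"PID {pid}: LD_PRELOAD={libs}")
    for lib in global_preloads():
        print(f"/etc/ld.so.preload: {lib}")
```

Checking /etc/ld.so.preload alongside the per-process environment matters because a library listed there is loaded into every dynamically linked program without LD_PRELOAD ever appearing in any process's environment.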

Responsible Data Management: Balancing Utility With Risks

To mitigate the risks of data sharing, good protocols for information exchange need to be in place. Such protocols currently exist bilaterally between certain organisations, but to maximise impact they should be extended multilaterally, to an entire sector or an entire response. Another way to improve inter-agency data sharing is to use contemporary cryptographic solutions, which allow data to be used without giving up data governance. In other words, one organisation can run analyses on another organisation’s data and get aggregate outputs without ever accessing the data directly. A number of other data-management practices can reduce the risk of data falling into the wrong hands, such as ensuring that all computers in the field are password-protected and have firewalls and up-to-date antivirus software, operating systems and browsers. Additionally, the data files themselves should be encrypted. Open-source programs exist for all of these tasks, so addressing them may be a matter of competence inside organisations rather than of funding.
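
The idea of getting aggregate outputs without seeing the underlying data can be illustrated with additive secret sharing, one of several techniques used in secure multiparty computation; the passage does not say which cryptographic solution it has in mind, so this is a swapped-in example. The sketch below simulates, in a single process, several organisations computing the total of their private counts. It assumes honest-but-curious participants, non-negative integer inputs and a total smaller than the hypothetical MODULUS; the function names and sample figures are illustrative only.

```python
import secrets
from typing import List

# Hypothetical modulus: it only needs to exceed the largest possible total.
MODULUS = 2**61 - 1


def split_into_shares(value: int, n_parties: int) -> List[int]:
    """Split value into n_parties random shares that sum to value mod MODULUS.

    Any n_parties - 1 of the shares look uniformly random and reveal nothing."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: List[int]) -> int:
    """Simulate n organisations computing the total of their private values.

    Organisation i sends share j of its value to organisation j, so each
    organisation only ever sees one random-looking share from every other."""
    n = len(private_values)
    dealt = [split_into_shares(v, n) for v in private_values]
    # Organisation j adds up the shares it received (column j of `dealt`).
    partial_sums = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals the aggregate, not the individual inputs.
    return sum(partial_sums) % MODULUS


if __name__ == "__main__":
    # Hypothetical example: three agencies each holding a private count.
    print(secure_sum([120, 45, 310]))  # 475
```

The random shares cancel out only when all of them are added together, which is why the total is recoverable while no single party learns another's input.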

Insurer: Breach Undetected for Nine Years

But despite the common challenges in detecting data breaches, the nine-year lag time at Dominion National is unusually high, some experts note. "Dominion National's notification of a breach nine years after the unauthorized access may be an unenviable record for detection," says Hewitt of CynergisTek. "This is unusual because it strongly suggests that they may not have been performing comprehensive security audits or performing system activity reviews." Tom Walsh, president of the consultancy tw-Security, notes: "I am surprised that they detected it dating that far back. Most organizations do not retain audit logs or event logs for that long. Most disturbing is that an intruder or a malicious program or code could be into the systems and not previously detected. Nine years is beyond the normal refresh lifecycle for most servers. I would have thought that it could have been detected during an upgrade or a refresh of the hardware." Walsh adds that it is still unclear whether the incident is reportable under the HIPAA Breach Notification Rule. "They were careful in stating that there is no evidence to indicate that data was even accessed," he notes.

Going Beyond GDPR to Protect Customer Data

GDPR was something of a superstar in 2018. Searches on the regulation hit Beyoncé and Kardashian territory periodically throughout the year. In the United States, individual states began either exploring their own versions of the GDPR or, in the case of California, enacting their own regulations. Other states that enacted or strengthened data governance laws similar to the GDPR include Alabama, Arizona, Colorado, Iowa, Louisiana, Nebraska, Oregon, South Carolina, South Dakota, Vermont and Virginia. At this point, a growing number of companies based outside the EU are also ceasing operations in the EEA rather than making expensive changes to their business applications and practices and becoming subject to possible fines. GDPR prosecutions continue, as do complaints and investigations. Each member state has its own listing of court cases in progress, so it’s difficult to quantify just how many investigations and cases are active.

Juniper’s Mist adds WiFi 6, AI-based cloud services to enterprise edge

“Mist's AI-driven Wi-Fi provides guest access, network management, policy applications and a virtual network assistant as well as analytics, IoT segmentation, and behavioral analysis at scale,” Gartner stated. “Mist offers a new and unique approach to high-accuracy location services through a cloud-based machine-learning engine that uses Wi-Fi and Bluetooth Low Energy (BLE)-based signals from its multielement directional-antenna access points. The same platform can be used for Real Time Location System (RTLS) usage scenarios, static or zonal applications, and engagement use cases like wayfinding and proximity notifications.” Juniper bought Mist in March for $405 million to acquire this AI-based Wi-Fi technology. For Juniper, the purchase was significant because it had previously depended on agreements with partners such as Aerohive and Aruba to deliver wireless, according to Gartner. Mist, too, has partners and recently announced joint product development with VMware that integrates Mist WLAN technology with VMware’s VeloCloud-based NSX SD-WAN. “Mist has focused on large enterprises and has won some very well known brands,” said Chris Depuy

DevSecOps Keys to Success

The most important elements of a successful DevSecOps implementation are automation and collaboration.

1) With DevSecOps, the goal is to embed security into every phase of the development and deployment lifecycle from the start. When the strategy is designed with automation in mind, security is no longer an afterthought; it becomes part of the process from the beginning. This lets security keep pace with the speed and agility of DevOps without slowing business outcomes.

2) As in DevOps, where developers and technology operations engineers are closely aligned, collaboration is crucial in DevSecOps. Rather than treating security as “someone else’s job,” developers, technology operations and security teams all work toward a common goal. Collaborating around shared goals lets DevSecOps teams make informed decisions in a workflow that gives them the most context about how changes will affect production, so that corrective action can be taken with the least business impact.
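
One common way to build security into the pipeline through automation is a gate step that fails the build when a scanner reports serious findings. The sketch below is a hypothetical example, not a specific product's integration: it assumes the scanner writes a JSON list of objects with "id", "severity" and "title" fields, and that "high" is the blocking threshold. Real scanners use their own report formats and severity labels, so the parsing would need to be adapted.

```python
import json
import sys
from typing import Any, Dict, List

# Assumed severity scale; real scanners define their own labels.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: List[Dict[str, Any]], threshold: str = "high") -> List[Dict[str, Any]]:
    """Return findings at or above the threshold severity (case-insensitive)."""
    limit = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if SEVERITY_RANK.get(str(f.get("severity", "")).lower(), 0) >= limit
    ]


def gate(report_path: str, threshold: str = "high") -> int:
    """Exit status for the pipeline step: 0 lets the change through, 1 blocks it."""
    with open(report_path, encoding="utf-8") as fh:
        # Assumed report format: a JSON list of {"id", "severity", "title"} objects.
        findings = json.load(fh)
    blocked = blocking_findings(findings, threshold)
    for f in blocked:
        print(f"BLOCKING {f.get('id', '?')} [{f.get('severity')}]: {f.get('title', '')}")
    return 1 if blocked else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(gate(sys.argv[1]))
```

Because the gate runs on every change and reports exactly which findings blocked it, developers, operations and security teams see the same evidence at the same point in the workflow, which supports the collaboration point as well.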

Microsoft beefs up OneDrive security

![Microsoft > OneDrive [Office 365]](https://images.idgesg.net/images/article/2019/02/cw_microsoft_office_365_onedrive-100787148-large.jpg)

The new feature - dubbed OneDrive Personal Vault - was trumpeted as a special protected partition of OneDrive where users could lock their "most sensitive and important files." They would access that area only after a second step of identity verification, ranging from a fingerprint or face scan to a self-made PIN, a one-time code texted to the user's smartphone or the use of the Microsoft Authenticator mobile app. The idea behind OneDrive Personal Vault, said Seth Patton, general manager for Microsoft 365, is to create a failsafe so that "in the event that someone gains access to your account or your device," the files within the vault would remain sacrosanct. Access to the vault will also be on a timer, Patton said, that locks the partition after a user-set period of inactivity. Files opened from the vault will also close when the timer expires. As the feature's name implied, the vault is only for OneDrive Personal, the consumer-grade storage service, not for the OneDrive for Business available to commercial customers. Although OneDrive Personal is a free service - albeit with a puny 5GB of storage - many come to it from the Office 365 subscription service.

Disaster planning: How to expect the unexpected

Larger organisations will typically have the advantages of a greater resource reserve and multiple premises in different regions, but they can be slow to react and their communications can struggle. Smaller organisations can be much more adaptable and swifter to react, but rarely have resources in reserve and are usually based in one fixed location. As with all things, preparation is key, and therefore it is worth taking time to prepare business continuity strategies and disaster plans. Rather than being scenario-specific – having dedicated plans for different eventualities – organisations should take an agnostic approach with their business continuity strategies. “If your business recovery plan is written strictly for recovery, regardless of scenario, then you will be in the best shape, as you will know that whatever happens, the plan has been tested,” says Dan Johnson, director of global business continuity and disaster recovery at Ensono. “You will go to your backup procedures that keep your daily processes moving and make sure business is flowing.”

An IoT maturity model and tips for IoT deployment success

Nemertes compiled these results into an IoT maturity model that companies can use as a roadmap to success (see figure). The maturity model comprises four levels: unprepared -- the organization lacks the tools and processes to address the IoT initiative; reactive -- the organization has platforms and processes to respond to, but not proactively address, the issue; proactive -- the organization has the tools and processes to proactively deliver on the issue as it is currently understood; and anticipatory -- the organization has the tools, processes and people to handle future requirements. The one-third of surveyed organizations with successful IoT deployments were likely to be at Level 2 or Level 3 IoT maturity, and in several areas -- namely executive sponsorship, budgeting and architecture -- successful organizations far outshone less successful ones. ... In addition, key IoT team members include the IoT architect, IoT security architect, IoT network architect and IoT integration architect. Most large organizations already have architects who cover these responsibilities, though their job titles may not reflect it.

AI presents host of ethical challenges for healthcare

With respect to ethics, she observed that the massive volumes of health data being leveraged by AI must be carefully protected to preserve privacy. “The sheer volume, variability and sensitive nature of the personal data being collected require newer, extensive, secure and sustainable computational infrastructure and algorithms,” according to Tourassi’s testimony. She also told lawmakers that data ownership and use when it comes to AI continues to be a sensitive issue that must be addressed. “The line between research use and commercial use is blurry,” said Tourassi. To maintain a strong ethical AI framework, Tourassi believes fundamental questions need to be answered, such as: Who owns the intellectual property of data-driven AI algorithms in healthcare? The patient or the medical center collecting the data by providing the health services? Or the AI developer? “We need a federally coordinated conversation involving not only the STEM sciences but also social sciences, economics, law, public policy and patient advocacy stakeholders” to “address the emerging domain-specific complexities of AI use,” she added.

Quote for the day:

"Nobody in your organization will be able to sustain a level of motivation higher than you have as their leader." -- Danny Cox
