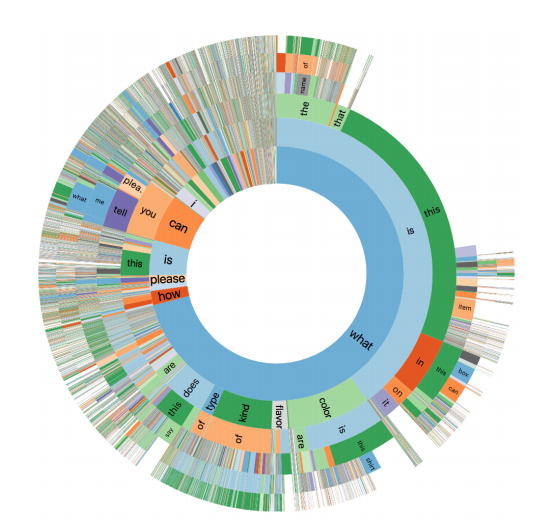

The questions are sometimes simple, but by no means always. Many questions can be summarized as “What is this?” However, only 2 percent call for a yes-or-no answer, and fewer than 2 percent can be answered with a number. And there are other unexpected features. It turns out that while most questions begin with the word “what,” almost a quarter begin with a much more unusual word. This is almost certainly the result of the recording process clipping the beginning of the question. But answers are often still possible. Take questions like “Sell by or use by date of this carton of milk” or “Oven set to thanks?” Both are straightforward to answer if the image provides the right information. The team also analyzed the images. More than a quarter are unsuitable for eliciting an answer, because they are not clear or do not contain the relevant info. Being able to spot these quickly and accurately would be a good start for a machine vision algorithm.

Memcached Servers Being Exploited in Huge DDoS Attacks

Security researchers have previously warned about Internet-facing Memcached servers being open to data theft and other security risks. Desler theorizes one reason why attackers have not used Memcached as an amplification vector in DDoS attacks previously is simply because they have not considered it and not because of any technical limitations. Exploiting Memcached servers is new as far as real-world DDoS attacks are concerned, says Chad Seaman, senior engineer with Akamai's Security Intelligence Response Team. "A researcher had theorized this could be done previously," Seaman says. "But as Memcached isn't meant to run on the Internet and is a LAN-scoped technology that is wide open, he thought it could really only be impactful in a LAN environment." But the use of default settings and reckless administration overall among many enterprises has resulted in a situation where literally tens of thousands of boxes running Memcached are on the public-facing Internet, Seaman says.
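To make the "default settings" point concrete, here is a minimal sketch of a check an administrator might run against a Memcached start-up command line. It relies only on the standard `memcached` options `-l` (listen address) and `-U` (UDP port, where `0` turns UDP off). The helper name `audit_memcached_args` and the warning texts are hypothetical, and the check conservatively assumes an older build in which UDP stays enabled unless `-U 0` is given.

```python
import ipaddress
import shlex


def audit_memcached_args(command_line: str) -> list[str]:
    """Return warnings for a memcached command line (hypothetical helper)."""
    args = shlex.split(command_line)
    listen = None    # value passed to -l, if any
    udp_port = None  # value passed to -U, if any
    for i, arg in enumerate(args):
        if arg == "-l" and i + 1 < len(args):
            listen = args[i + 1]
        elif arg == "-U" and i + 1 < len(args):
            udp_port = args[i + 1]

    warnings = []
    if listen is None:
        warnings.append("no -l given: server may listen on all interfaces")
    else:
        for host in (part.strip() for part in listen.split(",")):
            try:
                ip = ipaddress.ip_address(host)
            except ValueError:
                # Hostnames and host:port forms are not resolved in this sketch.
                warnings.append(f"-l {host}: not a plain IP, check it manually")
                continue
            if ip.is_unspecified:
                warnings.append(f"-l {host}: listens on all interfaces")
            elif not (ip.is_loopback or ip.is_private):
                warnings.append(f"-l {host}: looks like a public address")

    # Assumption: treat UDP as enabled unless it is explicitly switched off.
    if udp_port != "0":
        warnings.append("UDP not explicitly disabled (expected -U 0)")
    return warnings


if __name__ == "__main__":
    for line in ("memcached -p 11211 -l 127.0.0.1 -U 0", "memcached -l 0.0.0.0"):
        print(line, "->", audit_memcached_args(line))
```

A check like this only inspects configuration; whether a host is actually reachable from the Internet also depends on firewalls and routing.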

Firms failing to learn from cyber attacks

The survey findings suggest security inertia has infiltrated many organisations, with an inability to repel or contain cyber threats and the resultant impact on the business. This inertia is reflected in the fact that 46% of respondents said their organisation cannot prevent attackers from breaking into internal networks every time it is attempted, 36% said that administrative credentials are stored in Word or Excel documents on company PCs, and half admitted their customers’ privacy or PII (personally identifiable information) could be at risk because their data is not secured beyond the legally-required basics. The report notes that the automated processes inherent in cloud and DevOps mean that privileged accounts, credentials and secrets are being created at a prolific rate. If compromised, the report said these can give attackers a crucial jumping-off point to achieve lateral access to sensitive data across networks, data and applications or to use cloud infrastructure for illicit crypto mining activities.

While the “shift to Teal” is more of a big-picture view, there is an interesting perspective on self-organization in teams and organizations that states basically that organizations with self-organizing teams actually still have leaders / leadership. This perspective brings the big-picture view above more into focus in individual organizations and companies. This is discussed in a book by Lex Sisney titled “Organizational Physics - The Science of Growing a Business”. Sisney proposes that in reality, instead of having top-down or bottom-up organization, some of the newest and most adaptable organizations are actually “Design-Centric” organizations. ... So the leadership shift is not a choice of top-down or bottom-up, but rather one where the leader designs a system within the organization that allows teams to self-organize and to be empowered to deliver the organization’s objectives. If this is done well, there is little need for the leader to intervene in the organization or system because the people and teams are able to effectively lead and guide the organization themselves.

14 top tools to assess, implement, and maintain GDPR compliance

The European Union’s General Data Protection Regulation (GDPR) goes into effect in May 2018, which means that any organization doing business in or with the EU has six months from this writing to comply with the strict new privacy law. The GDPR applies to any organization holding or processing personal data of EU citizens, and the penalties for noncompliance can be stiff: up to €20 million (about $24 million) or 4 percent of annual global turnover, whichever is greater. Organizations must be able to identify, protect, and manage all personally identifiable information (PII) of EU residents even if those organizations are not based in the EU. Some vendors are offering tools to help you prepare for and comply with the GDPR. What follows is a representative sample of tools to assess what you need to do for compliance, implement measures to meet requirements, and maintain compliance once you reach it.
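The "whichever is greater" rule is easy to misread, so here is a small worked sketch of the ceiling described above. The function name `max_gdpr_fine_eur` is made up for illustration, and the result is only the upper bound quoted in the excerpt, not a prediction of any actual penalty.

```python
def max_gdpr_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper bound on the fine: EUR 20 million or 4% of turnover, whichever is greater."""
    flat_cap = 20_000_000
    turnover_cap = annual_global_turnover_eur * 4 / 100
    return max(flat_cap, turnover_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover {turnover:>13,} EUR -> ceiling {max_gdpr_fine_eur(turnover):,.0f} EUR")
```

The two caps meet at a turnover of €500 million; below that the flat €20 million figure is the ceiling, above it the 4 percent figure takes over.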

Chris Webber, a security strategist with SafeBreach, says configuration errors are one of the most frequently occurring issues with NGFWs. “Many users get tripped up if they only rely on vendor-supplied defaults,” Webber said. “A next-generation firewall can be like having a Swiss army knife on your network, but many times its features aren’t turned on, which lets attackers gain access.” Webber also noted that most vendors provide auto-migration tools to help new customers migrate from their legacy firewalls to NGFWs but that errors may occur during this process, as vendor features and architecture can vary. SafeBreach said it has discovered breach scenarios due to these policy gaps and errors resulting from assumptions about new NGFW vendor default policies and auto-migration challenges. Another issue is that many users don’t decrypt encrypted traffic like SSL, TLS, and SSH, which can become a major blind spot for customers, Webber said.

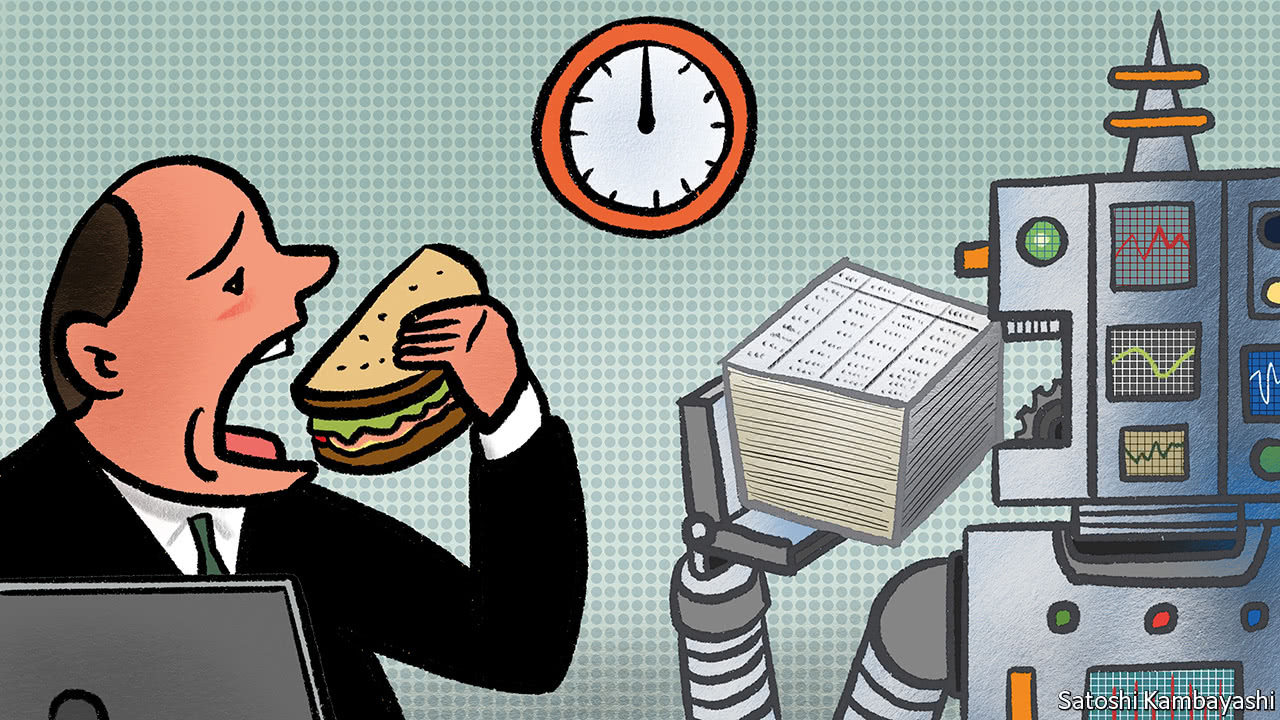

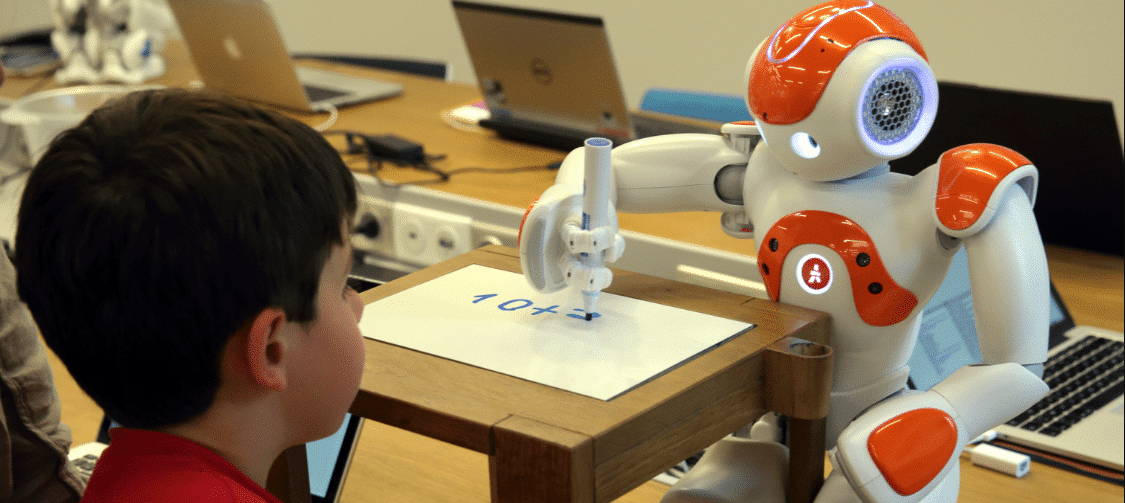

The future of every type of ambitious commercial business, whether it’s a factory making products, a bank loaning money, an IT support shop helping users, a grocery store selling goods, a law firm prepping available information for its client cases, an analyst firm producing insight… is to perform its business operations with the optimum balance of talent, so it can maximise its immediate profits, with an eye on the future to stay ahead of the competition. As soon as someone’s output is predictable, taking inputs from various sources to produce outputs, you can start to figure out how to program software and machines to perform said tasks – and computers will always be cheaper than humans, once they are functional and can do the job. So our goal has to be about furthering our abilities, not only to get the basics of our jobs done, but to immerse ourselves into helping our colleagues and bosses figure out the what next. Because if we only focus on the now, we are eventually going to render ourselves predictable and replaceable.

Virtual Private Networks: Why Their Days Are Numbered

VPNs require an array of equipment, protocols, service providers and topologies to be successfully implemented across an enterprise network – and the complexity is only perpetuated as networks grow. Purchasing the excess capacity and new Multiprotocol Label Switching (MPLS) connections needed to support effective VPNs can weigh heavily on IT budgets, while managing these networks will require greater reliance on personnel. Rather than limit the number of devices on their networks, organizations need to seek out solutions that simplify network management as companies continue embracing mobile and remote workforces. Even businesses that continue to rely on VPN or backhaul networks to protect their data need to employ a defense-in-depth approach to security, since VPNs, on their own, only offer the baseline protections of a standard web proxy. As more solutions move to the cloud and enterprises rely less and less on physical servers and network connections, the need for VPNs will eventually evolve, if not disappear altogether.

From a security standpoint, what you really want is to be alerted when employees do something suspicious. User behavior analytics (UBA) are a smarter way to sniff out anomalies in users' actions and flag them for further investigation. Companies like IBM and Varonis have developed advanced UBA tools that can detect unusual activity. Is an employee trying to access a file they shouldn’t? Maybe they’re downloading something at 3:00am from a location that isn’t their home. Perhaps they’re trying to move laterally between systems. The beauty of UBA is that it highlights malicious insiders and outsiders using stolen credentials equally well, though it may require further investigation to determine which is which. If you’re going to go to the trouble of monitoring your employees, then maybe you should extract more value from the data you collect. There’s a new breed of software that offers the same potential security protections to ensure compliance but focuses on the end user experience and how it might be improved to remediate issues as they happen.
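As a rough illustration of the kind of checks described above, the sketch below flags an access event that falls outside a user's usual hours, locations, or resources. It is a toy, rule-based stand-in: commercial UBA products build their baselines statistically, and every name here (`AccessEvent`, `Baseline`, `flag_anomalies`) is hypothetical rather than taken from any vendor's tool.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AccessEvent:
    user: str
    timestamp: datetime
    location: str
    resource: str


@dataclass
class Baseline:
    usual_hours: range       # hours of the day the user is normally active
    usual_locations: set     # places the user normally connects from
    expected_resources: set  # files or systems the user normally touches


def flag_anomalies(event: AccessEvent, baseline: Baseline) -> list[str]:
    """Return the reasons an event deserves a closer look (empty if none)."""
    reasons = []
    if event.timestamp.hour not in baseline.usual_hours:
        reasons.append("unusual hour")
    if event.location not in baseline.usual_locations:
        reasons.append("unusual location")
    if event.resource not in baseline.expected_resources:
        reasons.append("unexpected resource")
    return reasons


if __name__ == "__main__":
    baseline = Baseline(range(8, 19), {"office", "home"}, {"crm", "wiki"})
    event = AccessEvent("alice", datetime(2018, 3, 1, 3, 0), "unknown-cafe", "payroll-db")
    print(flag_anomalies(event, baseline))
```

A flagged event says nothing about intent: the same output could come from a malicious insider or from an outsider with stolen credentials, which is why follow-up investigation is still needed.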

Monitoring the state of an application is important during development and in production. With a monolithic application, this is rather straightforward, since one can attach a native debugger to the process and get a complete picture of the state of the application and its evolution. Monitoring a microservice-based application poses a greater challenge, particularly when the application is composed of tens or hundreds of microservices. Because any request may be processed by many microservices, each possibly invoked multiple times and potentially on different servers, it is exceptionally difficult to follow the “story” of the application and identify the causes of problems when they arise. Currently, the main methodology relies on obtaining a trace of all transactions and dependencies using tools that, for example, implement the OpenTracing standard. These tools capture timing, events, and tags, and collect this data out-of-band (asynchronously).
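To show what "timing, events, and tags, collected out-of-band" can look like in practice, here is a minimal standard-library sketch. It is not the OpenTracing API: the `Tracer` class, its `span` method, and the `handle_request` function are hypothetical, and a real tracer would ship records to a remote collector rather than to an in-memory list.

```python
import queue
import threading
import time
from contextlib import contextmanager


class Tracer:
    """Toy tracer: spans are queued and gathered by a background thread."""

    def __init__(self):
        self._finished = queue.Queue()
        self.collected = []
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def _drain(self):
        # Runs off the request path, so recording a span never blocks the caller.
        while True:
            record = self._finished.get()
            if record is None:  # sentinel pushed by close()
                return
            self.collected.append(record)

    @contextmanager
    def span(self, name, trace_id, **tags):
        record = {"trace_id": trace_id, "name": name, "tags": tags, "events": []}
        start = time.monotonic()
        try:
            yield record
        finally:
            record["duration_s"] = time.monotonic() - start
            self._finished.put(record)

    def close(self):
        self._finished.put(None)
        self._worker.join()


def handle_request(tracer, trace_id):
    # The shared trace_id is what lets spans from different services be stitched together.
    with tracer.span("checkout", trace_id, service="frontend") as outer:
        outer["events"].append((time.time(), "cart validated"))
        with tracer.span("charge-card", trace_id, service="payments"):
            time.sleep(0.01)  # stand-in for a call to another microservice


if __name__ == "__main__":
    tracer = Tracer()
    handle_request(tracer, "req-1")
    tracer.close()
    for rec in tracer.collected:
        print(rec["name"], rec["tags"], f'{rec["duration_s"]:.3f}s')
```

In a real deployment the trace identifier would be propagated between services in request headers, which this single-process sketch does not attempt.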

Quote for the day:

"The mark of a great man is one who knows when to set aside the important things in order to accomplish the vital ones." -- Brandon Sanderson
