Organizations are investing more money in their analytics programs, and those programs now do more than recommend a new blouse or suggest what to watch next on Netflix. If you are SpaceX and your data is incorrect, it could result in the loss of a multi-million-dollar rocket, Biltz said. That's a big deal. The Accenture report, drawn from survey responses from more than 6,300 business and IT executives worldwide, found that 82% of those executives are using data to drive critical and automated decisions. What's more, 97% of business decisions are made using data that managers consider to be of unacceptable quality, Accenture notes, citing a study published in HBR. "Now it becomes vitally important that the data you have is as true, as correct, as you can make it," Biltz said. "Right now, organizations don't have the systems in place to do that." Plus, there is more data now, coming from a wider variety of sources, than ever before.

9 ways to overcome employee resistance to digital transformation

While it's easy to assume technology changes would cause the most issues in the transformation process, tech isn't actually the root of the problem, said R/GA Austin's senior technology director Katrina Bekessy. "Rather, it's usually organizing the people and processes around the new tech that's difficult," Bekessy said. "It's hard to change the way people work, and realign them to new roles and responsibilities. In short, digital transformation is not only a transformation of tech, but it also must be a transformation in a team's (or entire company's) culture and priorities." Inertia and ignorance are two key parts of employee resistance to transformation, according to Michael Dortch, principal analyst and managing editor at DortchOnIT.com. "Inertia results in the 'but we've always done it this way' response to any proposed change in operations, process, or technology, while ignorance limits the ability of constituents to see the necessity and benefits of digital transformation," Dortch said.

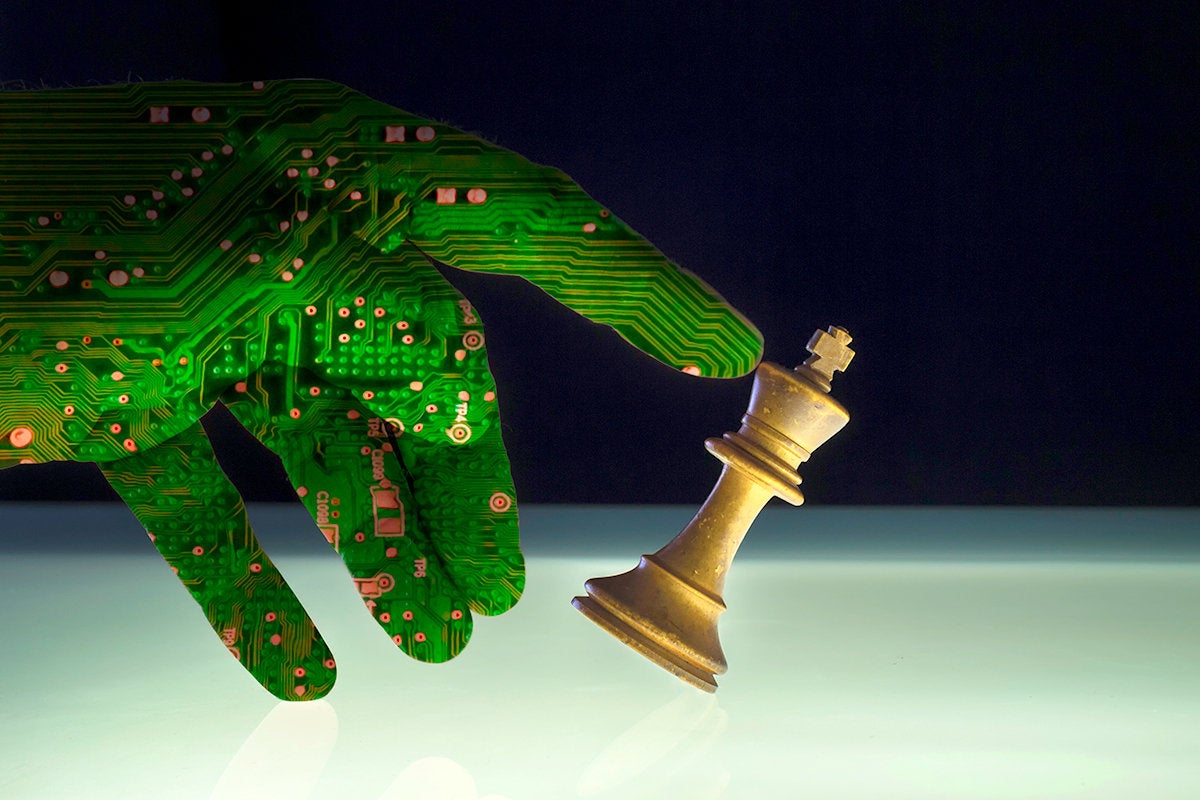

8 Machine Learning Algorithms explained in Human language

What we call “Machine Learning” is nothing other than the meeting of statistics and the incredible computing power available today (in terms of memory, CPUs, and GPUs). The field has become increasingly visible and important because the digital transformation of companies is producing massive amounts of data of different forms and types, at ever-increasing rates: Big Data. On a purely mathematical level, most of the algorithms used today are already several decades old. ... You are looking for a good travel destination for your next vacation. You ask your best friend for his opinion. He asks you questions about your previous trips and makes a recommendation. You then decide to ask a group of friends, who each ask you questions at random. They each make a recommendation. The chosen destination is the one most recommended by your friends. Both your best friend and the group will make good destination choices. But while the first recommendation method works very well for you, the second will be more reliable for other people.
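
This analogy is commonly used to contrast a single decision tree (the best friend) with a random forest (the group of friends). The sketch below is a deliberately simplified toy, not code from the article: each "friend" is a one-question decision stump rather than a full tree, trained on a bootstrap sample of past trips with one randomly chosen question, and the group's answer is a majority vote. The features, destinations, and function names are all hypothetical.

```python
import random
from collections import Counter

# Hypothetical past trips: (likes_beach, likes_hiking, likes_museums) -> destination that worked.
ROWS = [(1, 0, 0), (1, 1, 0), (0, 1, 0), (0, 1, 1), (0, 0, 1), (1, 0, 1)]
LABELS = ["Bali", "Bali", "Alps", "Alps", "Paris", "Bali"]


def train_stump(rows, labels, feature):
    """One 'friend': asks a single yes/no question (one feature) and recommends
    the destination most often associated with each answer."""
    by_answer = {}
    for row, label in zip(rows, labels):
        by_answer.setdefault(row[feature], []).append(label)
    rule = {ans: Counter(ls).most_common(1)[0][0] for ans, ls in by_answer.items()}
    fallback = Counter(labels).most_common(1)[0][0]  # used if an answer never appeared
    return lambda row: rule.get(row[feature], fallback)


def train_forest(rows, labels, n_trees=25, seed=0):
    """A group of friends: each one hears about a bootstrap sample of past trips
    and picks one question at random."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))           # random question for this friend
        forest.append(train_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest


def predict(forest, row):
    """The chosen destination is the one recommended most often."""
    votes = Counter(tree(row) for tree in forest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    forest = train_forest(ROWS, LABELS)
    print(predict(forest, (1, 0, 1)))
```

Real random forests grow deeper trees and sample candidate features at every split; the stump version only keeps the two ideas the analogy is about, randomness per friend and voting across the group.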

3 Things You Need to Know (and Do) Before Adopting AI

AI enables machines to learn and act, either in place of humans or to supplement the work of humans. We’re already seeing widespread use of AI in our daily lives, such as when brands like Netflix and Amazon present us with options based on our buying behaviors, or when chatbots respond to our queries. AI is used to pilot airplanes and even to streamline our traffic lights. And that’s just the beginning as we enter the age of AI and machine learning, with these technologies replacing traditional manufacturing as drivers of economic growth. A McKinsey Global Institute study found that technology giants, including Baidu and Google, spent up to $30 billion on AI in 2016, with 90 percent of those funds going to research, development, and deployment, and 10 percent to AI acquisitions. In 2018, AI adoption is expected to jump from 13 percent to 30 percent, according to Spiceworks' 2018 State of IT report.

Is the IoT backlash finally here?

As pretty much everyone knows, the Internet of Things (IoT) hype has been going strong for a few years now. I’ve done my part, no doubt, covering the technology extensively for the past 9 months. As vendors and users all scramble to cash in, it often seems like nothing can stop the rise of IoT. Maybe not, but there have been rumblings of a backlash for several years. Consumers and experts worry that the IoT may not easily fulfill its heavily hyped promise, or that it will turn out to be more cumbersome than anticipated, allow serious security issues, and compromise our privacy. Others fear the technology may succeed too well, eliminating jobs and removing human decision-making from many processes in unexamined and potentially damaging ways. As New York magazine put it early last year, “We’re building a world-size robot, and we don’t even realize it.” Worse, this IoT robot “can only be managed responsibly if we start making real choices about the interconnected world we live in.”

Intel expects PCs with fast 5G wireless to ship in late 2019

Intel will show off a prototype of the new 5G-connected PC at the Mobile World Congress show in Barcelona. In addition, the company will demonstrate data streaming over the 5G network. Intel said that at its stand it will also show off eSIM technology (the replacement for actual, physical SIM cards) and a thin PC running 802.11ax Wi-Fi, the next-gen Wi-Fi standard. Though 5G technology is the mobile industry’s El Dorado, it always seems to be just over the next hill. Intel has promoted 5G for several years, saying it will handle everything from a communications backbone for intelligent cars to swarms of autonomous drones talking amongst themselves. Carriers, though, have started nailing down when and where customers will be able to access 5G technology. AT&T said Wednesday, for example, that a dozen cities including Dallas and Waco, Texas, and Atlanta, Georgia, will receive their first 5G deployments by year’s end. Verizon has plans for three to five markets, including Sacramento, California.

Who's talking? Conversational agent vs. chatbot vs. virtual assistant

A conversational agent is more focused on what it takes to maintain a conversation. The terms virtual agent and personal assistant tend to be more relevant in cases where you're trying to create the sense that the conversational agent you're dealing with has its own personality and is somehow uniquely associated with you. At least for me, the term virtual assistant metaphorically conjures the idea of your own personal butler -- someone who is there with you all the time, knows you deeply, and is dedicated to just you and serving your needs. ... I think there is an intersection between the two ideas. To serve you on a personal level, any good personal or virtual assistant needs to retain a great deal of context about you and then use that context when interacting with you -- using conversational agent techniques not just to anticipate your needs but to respond to them, and to get to know you better so it can respond to those needs even better in the future.
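
The point about retaining context can be illustrated with a very small sketch. Everything here is hypothetical: the `PersonalAssistant` class, its `remember` and `respond` methods, and the keyword matching that stands in for real language understanding. It only shows the shape of the idea, an assistant that stores what it learns about one user and uses it in later replies.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalAssistant:
    """Hypothetical assistant that retains context about one user across turns."""
    context: dict = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        # Store something learned about the user so later replies can use it.
        self.context[key] = value

    def respond(self, message: str) -> str:
        # Tiny keyword matcher standing in for real natural-language understanding.
        if "coffee" in message.lower():
            usual = self.context.get("coffee_order")
            if usual:
                return f"Ordering your usual {usual}."
            return "What would you like to order?"
        return "Sorry, I can't help with that yet."


if __name__ == "__main__":
    assistant = PersonalAssistant()
    print(assistant.respond("Get me a coffee"))   # What would you like to order?
    assistant.remember("coffee_order", "flat white")
    print(assistant.respond("Coffee, please"))    # Ordering your usual flat white.
```

The same request gets a different, more personal answer once context has been stored, which is the distinction the interview draws between a bare conversational agent and a personal assistant.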

Why the GDPR could speed up DevOps adoption

One of the key trends happening now, especially with changing demographics and changes in technology, is that most people interact with businesses digitally: via their phones, via their computers, and so on. For a lot of businesses, whether retail, banking, insurance, or what have you, the face of the business has started to become digital, and where they're not becoming digital, new companies are springing up to disrupt them. The single biggest thing about DevOps as a movement is agility, the ability to bring applications to market quicker, so that this new demographic can interact with these businesses the way they're used to interacting with everything else, and so that these businesses can protect themselves against disruption from others.

Cisco Report Finds Organizations Relying on Automated Cyber-Security

Among the high-level findings in the 68-page report is that 39 percent of organizations stated they rely on automation for their cyber-security efforts. Additionally, according to Cisco's analysis of over 400,000 malicious binary files, approximately 70 percent made use of some form of encryption. Cisco also found that attackers are increasingly evading defender sandboxes with sophisticated techniques. "I'm not surprised attackers are going after supply chain, using cryptography and evading sandboxed environments, we've seen all these things coming for a long time," Martin Roesch, Chief Architect in the Security Business Group at Cisco, told eWEEK. "I've been doing this for so long, it's pretty hard for me to be surprised at this point." Roesch did note, however, that he was pleasantly surprised that so many organizations are now relying on automation, as well as machine learning and artificial intelligence, for their cyber-security operations.

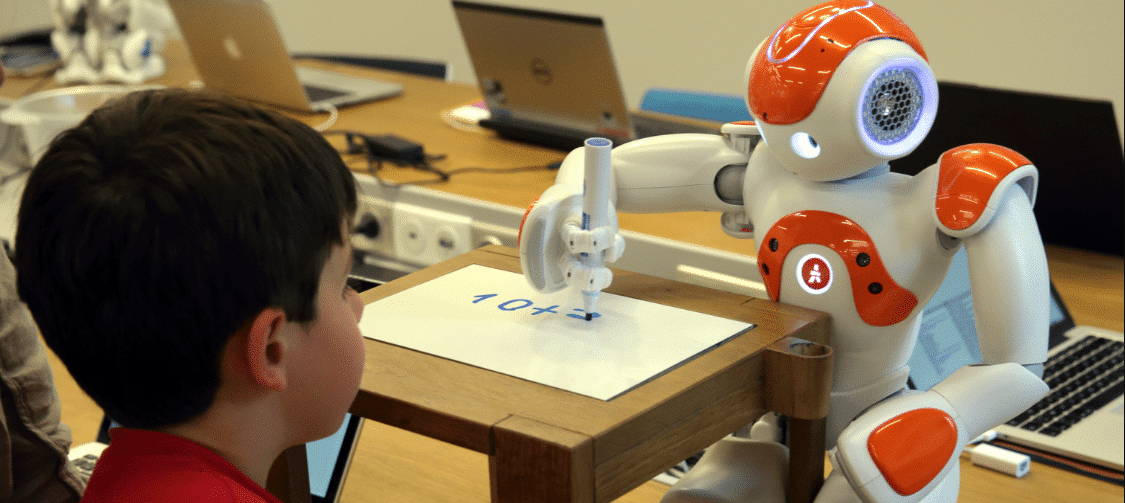

Artificial general intelligence (AGI): The steps to true AI

AI lets a relatively dumb computer do what a person would do using a large amount of data. Tasks like classification, clustering, and recommendations are done algorithmically. No one paying close attention should be fooled into thinking that AI is more than a bit of math. AGI is where the computer can “generally” perform any intellectual task a person can and even communicate in natural language the way a person can. This idea isn’t new. While the term “AGI” harkens back to 1987, the original vision for AI was basically what is now AGI. Early researchers thought that AGI (then AI) was closer to becoming reality than it actually was. In the 1960s, they thought it was 20 years away. So Arthur C. Clarke was being conservative with the timeline for 2001: A Space Odyssey. A key problem was that those early researchers started at the top and went down. That isn’t actually how our brain works, and it isn’t the methodology that will teach a computer how to “think.” In essence, if you start with implementing reason and work your way down to instinct, you don’t get a “mind.”
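
To make "a bit of math" concrete, here is a nearest-centroid classifier. The choice of algorithm is ours for illustration (the article names none), and the function names, points, and labels are hypothetical. "Training" is just averaging the examples of each class, and "classification" is picking the class whose average is closest by Euclidean distance.

```python
from math import dist  # Euclidean distance, Python 3.8+


def fit_centroids(points, labels):
    """'Training' is averaging: one mean point per class label."""
    sums, counts = {}, {}
    for p, y in zip(points, labels):
        acc = sums.setdefault(y, [0.0] * len(p))
        for i, v in enumerate(p):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: tuple(v / counts[y] for v in acc) for y, acc in sums.items()}


def classify(centroids, point):
    """'Prediction' is choosing the class whose mean is nearest to the point."""
    return min(centroids, key=lambda y: dist(centroids[y], point))


if __name__ == "__main__":
    pts = [(1, 1), (1, 2), (8, 8), (9, 8)]
    ys = ["small", "small", "large", "large"]
    model = fit_centroids(pts, ys)
    print(model)                    # {'small': (1.0, 1.5), 'large': (8.5, 8.0)}
    print(classify(model, (2, 2)))  # small
```

Nothing in it "understands" size; it adds, divides, and compares distances, which is the article's point about narrow AI.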

Quote for the day:

"A man's character may be learned from the adjectives which he habitually uses in conversation." -- Mark Twain
