An introduction to data science and machine learning with Microsoft Excel

To most people, MS Excel is a spreadsheet application that stores data in tabular format and performs very basic mathematical operations. But in reality, Excel is a powerful computation tool that can solve complicated problems. Excel also has many features that allow you to create machine learning models directly in your workbooks. While I’ve been using Excel’s mathematical tools for years, I didn’t come to appreciate its use for learning and applying data science and machine learning until I picked up Learn Data Mining Through Excel: A Step-by-Step Approach for Understanding Machine Learning Methods by Hong Zhou. Learn Data Mining Through Excel takes you through the basics of machine learning step by step and shows how you can implement many algorithms using basic Excel functions and a few of the application’s advanced tools. While Excel will in no way replace Python-based machine learning, it is a great window for learning the basics of AI and solving many basic problems without writing a line of code.

Linear regression is a simple machine learning algorithm that has many uses for analyzing data and predicting outcomes. It is especially useful when your data is neatly arranged in tabular format. Excel has several features that enable you to create regression models from tabular data in your spreadsheets.
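As a rough illustration of what Excel’s regression tools compute, the snippet below fits a one-variable least-squares line, the same arithmetic behind the worksheet functions `SLOPE` and `INTERCEPT`. It is a minimal Python sketch, not a method taken from Zhou’s book; the function names and the sample data (a hypothetical ad-spend vs. sales table) are made up for illustration.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = slope * x + intercept for one predictor."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length columns with at least 2 rows")
    x_bar, y_bar = mean(xs), mean(ys)
    # Sum of cross-deviations (covariance numerator) and of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar  # the fitted line passes through the means
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Forecast y for a new x from the fitted line."""
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical data: ad spend (column A) vs. sales (column B).
    spend = [1.0, 2.0, 3.0, 4.0, 5.0]
    sales = [3.1, 4.9, 7.2, 8.8, 11.0]
    m, b = fit_line(spend, sales)
    print(f"slope={m:.3f} intercept={b:.3f}")
    print(f"forecast at 6: {predict(m, b, 6.0):.2f}")
```

Assuming the x values sit in A2:A6 and the y values in B2:B6 of a worksheet, `=SLOPE(B2:B6, A2:A6)` and `=INTERCEPT(B2:B6, A2:A6)` should return the same slope and intercept.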

The Four Mistakes That Kill Artificial Intelligence Projects

Humans have a “complexity bias,” or a tendency to view things we don’t understand well as complex problems, even when the complexity is just our own naïveté. Marketers take advantage of our preference for complexity. Most people would pay more for an elaborate coffee ritual with specific timing, temperature, bean grinding and water pH than for a pack of instant coffee. Even Apple advertises part of its new chip as a “16-core neural engine” rather than a processor, and its screen as a “retina display” rather than high-definition. It’s not a keyboard; it’s a “magic keyboard.” It’s not gray; it’s “space gray.”

The same bias applies to artificial intelligence, which has the unfortunate side effect of leading to overly complex projects. Even the term “artificial intelligence” is a symptom of complexity bias, because it really just means “optimization” or “minimizing error with a composite function.” There’s nothing intelligent about it. Many people overcomplicate AI projects by thinking that they need a big, expensive team skilled in data engineering, data modeling and deployment, plus a host of tools, from Python to Kubernetes to PyTorch. In reality, you don’t need any experience in AI or code.
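To make the “minimizing error” description concrete, here is a minimal sketch of gradient descent fitting a line by minimizing mean squared error. The model, learning rate, step count and data are illustrative assumptions; a neural network applies the same idea to a composite function (layers nested inside one another), with the gradients obtained via the chain rule.

```python
def mse(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of the line y_hat = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(
    xs: list[float], ys: list[float], lr: float = 0.05, steps: int = 2000
) -> tuple[float, float]:
    """Fit w and b by repeatedly stepping against the gradient of the MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move downhill; lr (the learning rate) controls the step size.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]  # hypothetical data lying on y = 2x + 1
    w, b = gradient_descent(xs, ys)
    print(f"w={w:.4f} b={b:.4f} loss={mse(w, b, xs, ys):.2e}")
```

The learning rate is the main design choice here: for this particular data, a value above roughly 0.24 would make each update overshoot the minimum and the fit would diverge.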

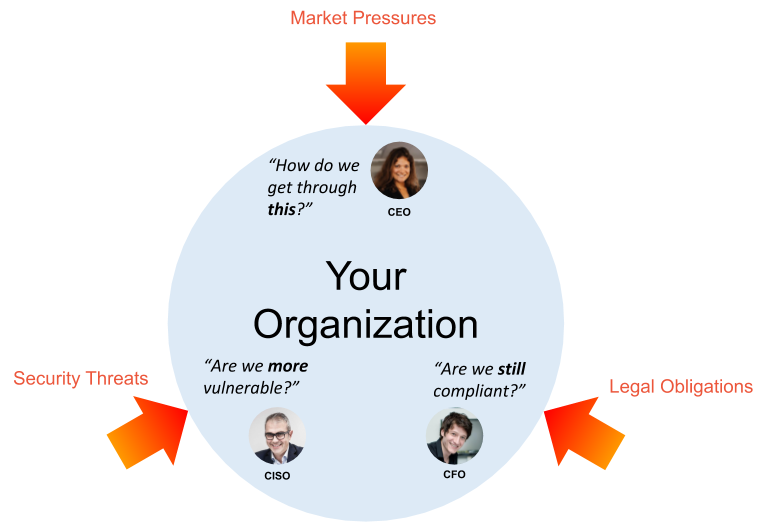

Three reasons why context is key to narrowing your attack surface

Security has become too complex to manage without a contextual understanding of the infrastructure, all assets and their vulnerabilities. Today’s typical six-layer enterprise technology stack consists of networking, storage and physical server layers, as well as virtualization, management and application layers. Tech stacks can involve more than 1.6 billion versions of tech installations for 300+ products provided by 50+ vendors, per Aberdeen Research. This sprawl is on top of the 75 security products that an enterprise uses on average to secure its network. Now, imagine carrying over this identical legacy system architecture but with thousands of employees all shifting to remote work and relying on cloud-based services at the same time. Because security teams implemented new network configurations and security controls essentially overnight, there is a high potential for new risks to be introduced through misconfiguration. Security teams have more ingress and egress points to configure, more technologies to secure and more changes to properly validate. The only way to meaningfully address increased risk while balancing limited staff and increased business demands is to gain contextual insight into the exposure of the enterprise environment, which enables smarter, targeted risk reduction.

How to Really Improve Your Business Processes with “Dashboards”

A manager’s success is measured mainly by this task. That is true in most cases, as he usually receives a bonus based on how well the KPIs under his responsibility perform over a given period. Our goal is to design an information system to support him with this task. The best way to do that is to help him answer, based on the data we have, the main questions related to each step: Is there a problem? What caused the problem? Which actions should we take? Were the actions successful? From the questions above, you can already tell that the dashboard described at the beginning of this article only helps answer the first question. Most of the value that an automated analytics solution could provide is left out. Let’s look at how a more sophisticated solution could answer these questions. I took the screenshots from one of the actual dashboards we implemented at my company. ... The main idea is that a bad result is, in most cases, not caused by the average. Most of the time, outliers drag down the overall result. Consequently, showing a top-level KPI without allowing quick root cause analysis leads to ineffective actions, because the vast majority of dimension members are not a problem.
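The outlier argument suggests a simple drill-down: split the KPI by a dimension and rank the members by how much each contributes to the shortfall. The sketch below assumes an additive KPI with a per-member target (for example, revenue by region); the `Member` type, the 80% coverage threshold and the sample figures are hypothetical, and the article’s own dashboard logic is not described here in enough detail to reproduce.

```python
from dataclasses import dataclass


@dataclass
class Member:
    """One dimension member (e.g. a region) with its KPI value and target."""
    name: str
    actual: float
    target: float

    @property
    def gap(self) -> float:
        # Negative gap means the member is below target.
        return self.actual - self.target


def top_contributors(members: list[Member], share: float = 0.8) -> list[Member]:
    """Smallest set of underperformers that covers `share` of the total shortfall."""
    # Largest shortfalls first, so greedy picking minimizes the number of members.
    losers = sorted((m for m in members if m.gap < 0), key=lambda m: m.gap)
    total = sum(-m.gap for m in losers)
    picked: list[Member] = []
    covered = 0.0
    for m in losers:
        if covered >= share * total:
            break
        picked.append(m)
        covered += -m.gap
    return picked


if __name__ == "__main__":
    # Hypothetical regional revenue vs. target.
    regions = [
        Member("North", 98.0, 100.0),
        Member("South", 101.0, 100.0),
        Member("East", 99.0, 100.0),
        Member("West", 70.0, 100.0),
        Member("Central", 100.0, 100.0),
    ]
    print(f"overall gap: {sum(m.gap for m in regions):+.0f}")
    for m in top_contributors(regions):
        print(f"{m.name}: {m.gap:+.0f}")
```

In this made-up example the overall result is 32 below target, yet a single region accounts for 30 of it; acting on the top-level number alone would spread effort across regions that are essentially fine.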

The top 5 open-source RPA frameworks—and how to choose

RPA has the potential to reduce costs by 30% to 50%. It is a smart investment that can significantly improve the organization's bottom line. It is very flexible and can handle a wide range of tasks, including process replication and web scraping. RPA can help predict errors and reduce or eliminate entire processes. It also helps you stay ahead of the competition by using intelligent automation. And it can improve the digital customer experience by creating personalized services. One way to get started with RPA is to use open-source tools, which have no up-front licensing fees. Below are five options to consider for your first RPA initiative, with the pros and cons of each one, along with advice on how to choose the right tool for your company. ... When compared to commercial RPA tools, open source reduces your software licensing costs. On the other hand, it may require additional implementation expense and preparation time, and you'll need to rely on the open-source community for support and updates. Yes, there are trade-offs between commercial and open-source RPA tools; I'll get to those in a minute. But when used as an operational component of your RPA implementations, open-source tools can improve the overall ROI of your enterprise projects. Here's our list of contenders.

What happens when you open source everything?

If you start giving away the product for free, it’s natural to assume sales will slow. The opposite happened. (Because, as Ranganathan pointed out, the product wasn’t the software, but rather the operationalizing of the software.) “So on the commercial side, we didn’t lose anybody in our pipeline [and] it increased our adoption like crazy,” he said. I asked Ranganathan to put some numbers on “crazy.” Well, the company tracks two things closely: creation of Yugabyte clusters (an indication of adoption) and activity on its community Slack channel (engagement being an indication of production usage). At the beginning of 2019, before the company opened up completely, Yugabyte had about 6,000 clusters (and no Slack channel). By the end of 2019, the company had roughly 64,000 clusters (a 10x boom), with 650 people in the Slack channel. The Yugabyte team was happy with the results. The company had hoped to see a 4x improvement in cluster growth in 2020. As of mid-December, clusters have grown to nearly 600,000, which could well give Yugabyte another 10x growth year before 2020 closes. As for Slack activity, membership is now at 2,200, with people asking about use cases, feature requests, and more.
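The growth figures above are easy to sanity-check. This short calculation uses only the numbers quoted in the excerpt; the 2020 figure is “nearly 600,000,” so that multiple is approximate.

```python
def multiple(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


GOAL_2020 = 4.0  # the hoped-for 4x cluster growth in 2020

clusters_2019 = multiple(6_000, 64_000)    # start vs. end of 2019
clusters_2020 = multiple(64_000, 600_000)  # end of 2019 vs. mid-December 2020
slack_members = multiple(650, 2_200)       # end of 2019 vs. now

print(f"Cluster growth in 2019: {clusters_2019:.1f}x")
print(f"Cluster growth in 2020 so far: {clusters_2020:.1f}x (goal {GOAL_2020:.0f}x)")
print(f"Slack membership growth: {slack_members:.1f}x")
```

That works out to roughly 10.7x in 2019 and about 9.4x in 2020 so far, well past the 4x goal, while Slack membership grew about 3.4x.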

Quote for the day:

"If something is important enough, even if the odds are against you, you should still do it." -- Elon Musk

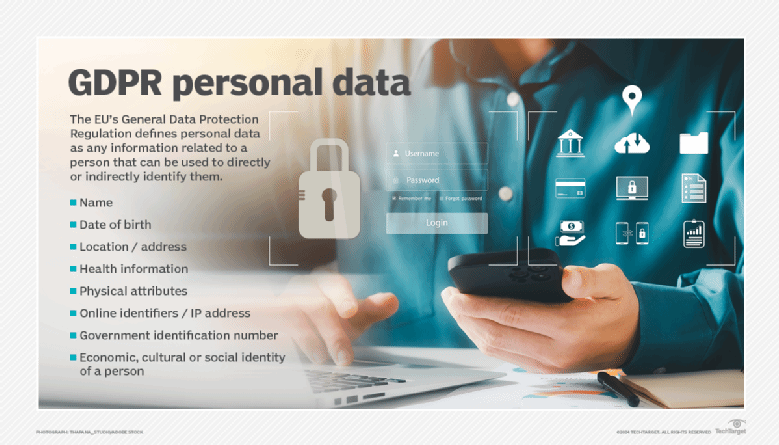

Simplifying Cybersecurity: It’s All About The Data

The most effective way to secure data is to encrypt it and then decrypt it only when an authorized entity (person or app) requests access. Data moves between being at rest in storage, in transit across a network and in use by applications. The first step is to encrypt data at rest and in motion everywhere, which makes data security pervasive within the organization. If you do not encrypt your network traffic inside your “perimeter,” you aren’t fully protecting your data. If you encrypt your primary storage and then leave secondary storage unencrypted, you are not fully protecting data. While data is often encrypted at rest and in transit, it is rarely encrypted while in use by applications. Any application or cybercriminal with access to the server can see Social Security numbers, credit card numbers and private healthcare data by looking at the memory of the server when the application is using it. A new technology called confidential computing makes it possible to encrypt data and applications while they are in use. Confidential computing uses hardware-based trusted execution environments (TEEs), called enclaves, to isolate and secure the CPU and memory used by the code and data from potentially compromised software, operating systems or other VMs running on the same server.

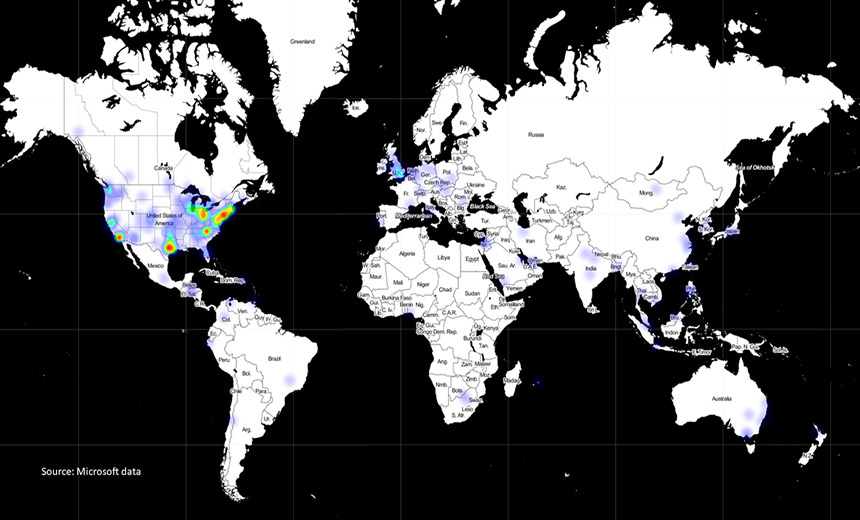

Why the US government hack is literally keeping security experts awake at night

One reason the attack is so concerning is who may have been victimized by the spying campaign. At least two US agencies have publicly confirmed they were compromised: the Department of Commerce and the Agriculture Department. The Department of Homeland Security's cyber arm was also compromised, CNN previously reported. But the range of potential victims is much, much larger, raising the troubling prospect that the US military, the White House or public health agencies responding to the pandemic may have been targeted by the foreign spying, too. The Justice Department, the National Security Agency and even the US Postal Service have all been cited by security experts as potentially vulnerable. All federal civilian agencies have been told by DHS officials, in an emergency directive, to review their systems. It's only the fifth such directive to be issued by the Cybersecurity and Infrastructure Security Agency since the directive program was created in 2015. It isn't just the US government in the crosshairs: the elite cybersecurity firm FireEye, which itself was a victim of the attack, said companies across the broader economy were vulnerable to the spying, too.

Creating the Corporate Future

With the move into the later 20th century, the post-industrial age began, with systems thinking at its core. The initiating dilemma for this change was that not all problems could be solved by the prevailing world view, analysis. It is unfortunate to think about the number of MBAs who graduated with callous analysis at their core. As enterprise architects know, when a system is taken apart, it loses its essential properties. A system is a whole that cannot be understood through analysis. What is needed instead is synthesis, or the putting of things together. In sum, analysis focuses on structure, whereas synthesis focuses on why things operate as they do. At the beginning of the industrial age, the corporation was viewed as a legal mechanism and as a machine. However, in the post-industrial age, Ackoff suggests a new view of the corporation: a purposeful system that is part of larger purposeful systems, and whose parts, people, have purposes of their own. Here leaders need to be aware of the interactions of corporations at the societal, organizational and individual levels. At the same time, they need to realize how an organization’s parts affect the system and how external systems affect the system.

Microservices vs. Monoliths: An Operational Comparison

There are a number of factors at play when considering complexity: the complexity of development and the complexity of running the software. On the development side, the size of the codebase can quickly grow when building microservice-based software. Multiple codebases are involved, using different frameworks and even different languages. Since microservices need to be independent of one another, there will often be code duplication. Also, different services may use different versions of libraries, as their release schedules are not in sync. For the running and monitoring aspect, the number of affected services is highly relevant. A monolith only talks to itself, which means it has one potential partner in its processing flow. A single call in a microservice architecture can hit multiple services. These can be on different servers or even in different geographic locations. In a monolith, logging is as simple as viewing a single log file. For microservices, however, tracking an issue may involve checking multiple log files. Not only is it necessary to find all the relevant log outputs, but they must also be put together in the correct order. Microservices use a unique ID, or span, for each call so that related log entries can be correlated across services.
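Correlating those log files is the part that tracing IDs make tractable. The sketch below gathers the lines for one request from several services and orders them by timestamp. The `LogLine` record, service names and IDs are hypothetical; the field names loosely follow common distributed-tracing terminology (as in OpenTelemetry, where all spans of one request share a trace ID and each span has its own span ID). It also assumes server clocks are reasonably synchronized, since ordering by timestamp alone can mislead under clock skew.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class LogLine:
    service: str
    timestamp: datetime
    trace_id: str  # shared by every call made on behalf of one request
    span_id: str   # identifies one call (one unit of work) within the trace
    message: str


def trace_timeline(logs: dict[str, list[LogLine]], trace_id: str) -> list[LogLine]:
    """Collect one trace's lines from every service's log, sorted chronologically."""
    matching = [
        line
        for service_lines in logs.values()
        for line in service_lines
        if line.trace_id == trace_id
    ]
    return sorted(matching, key=lambda line: line.timestamp)


BASE = datetime(2021, 1, 5, 10, 0, 0)


def at(ms: int) -> datetime:
    """Hypothetical timestamp helper: BASE plus `ms` milliseconds."""
    return BASE + timedelta(milliseconds=ms)


# Hypothetical per-service log files.
LOGS = {
    "gateway": [
        LogLine("gateway", at(0), "t1", "s1", "GET /orders/42"),
        LogLine("gateway", at(90), "t1", "s1", "200 OK"),
    ],
    "orders": [
        LogLine("orders", at(10), "t1", "s2", "load order 42"),
        LogLine("orders", at(20), "t2", "s9", "unrelated request"),
    ],
    "payments": [
        LogLine("payments", at(50), "t1", "s3", "charge card"),
    ],
}

if __name__ == "__main__":
    for line in trace_timeline(LOGS, "t1"):
        print(line.timestamp.time(), line.service, line.span_id, line.message)
```

Filtering on the shared trace ID and then sorting is what turns four separate log files into one readable request path; in a monolith the same view would come from a single file.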
