Being a responsible CTO isn’t just about moving to the cloud

The reasons for needing to be a responsible CTO are just as strong as the need to be a tech-savvy one if a company wants to thrive in a digital economy. There are many facets to being a responsible CTO, such as making sure that code is being written in a diverse way, and that citizen data is being used appropriately. In a BCS webinar, IBM fellow and vice-president for technology in EMEA, Rashik Parmar, summarised that the three biggest forces driving unprecedented change today included post-pandemic work; digitalisation; and the climate emergency. With many organisations turning to technology to help solve some of the biggest challenges they’re facing today, it’s clear that there will need to be answers about how this tech-heavy economy will impact the environment. It makes sense that this is often the first place that a CTO will start when deciding how to drive a more responsible future. ... If we focus on the environmental considerations, it’s becoming more commonly known that whilst a move to the cloud may be better for reducing an organisation’s carbon emissions than running multiple on-premises systems, the initiative alone isn’t going to spell good news for climate change.

Frozen Neon Invention Jolts Quantum Computer Race

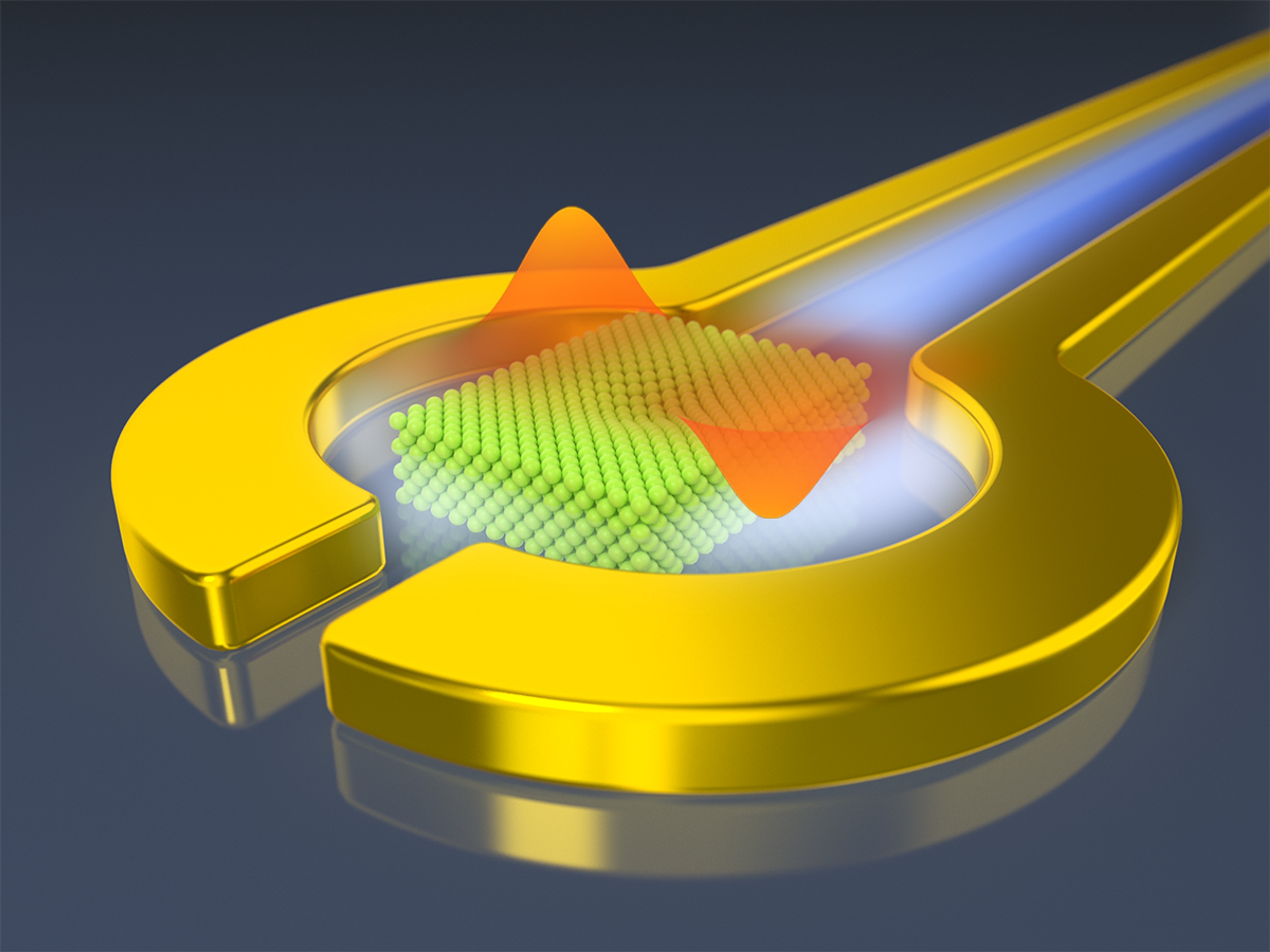

The group's experiments reveal that within optimization, the new qubit can already stay in superposition for 220 nanoseconds and change state in only a few nanoseconds, figures that outperform qubits based on electric charge that scientists have worked on for 20 years. "This is a completely new qubit platform," Jin says. "It adds itself to the existing qubit family and has big potential to be improved and to compete with currently well-known qubits." The researchers suggest that by developing qubits based on an electron's spin instead of its charge, they could develop qubits with coherence times exceeding one second. They add that the relative simplicity of the device may lend itself to easy manufacture at low cost. The new qubit resembles previous work creating qubits from electrons on liquid helium. However, the researchers note frozen neon is far more rigid than liquid helium, which suppresses surface vibrations that can disrupt the qubits. It remains uncertain how scalable this new system is: whether it can incorporate hundreds, thousands or millions of qubits.

AI for Cybersecurity Shimmers With Promise, but Challenges Abound

There are definitely differences in opinions between business executives, who largely consider AI to be a perfect solution, and security analysts on the ground, who have to deal with the day-to-day reality, says Devo's Ollmann. "In the trenches, the AI part is not fulfilling the expectations and the hopes of better triaging, and in the meantime, the AI that is being used to detect threats is working almost too well," he says. "We see the net volume of alerts and incidents that are making it into the SOC analysts' hands is continuing to increase, while the capacity to investigate and close those cases has remained static." The continuing challenges that come with AI features mean that companies still do not trust the technology. A majority of companies (57%) are relying on AI features more or much more than they should, compared with only 14% who do not use AI enough, according to respondents to the survey. In addition, few security teams have turned on automated response, partly because of this lack of trust, but also because automated response requires a tighter integration between products that just is not there yet, says Ollmann.

Concerned about cloud costs? Have you tried using newer virtual machines?

“Customers are willing to pay more for newer GPU instances if they deliver value in being able to solve complex problems quicker,” he wrote. Some of this can be chalked up to the fact that, until recently, customers looking to deploy workloads on these instances have had to do so on dedicated GPUs, as opposed to renting smaller virtual processing units. And while Rogers notes that customers, in large part, prefer to run their workloads this way, that may be changing. Over the past few years, Nvidia, which dominates the cloud GPU market, has introduced features that allow customers to split GPUs into multiple independent virtual processing units using a technology called Multi-instance GPU, or MIG for short. Debuted alongside Nvidia’s Ampere architecture in early 2020, the technology enables customers to split each physical GPU into up to seven individually addressable instances. And with the chipmaker’s Hopper architecture and H100 GPUs, announced at GTC this spring, MIG gained per-instance isolation, I/O virtualization, and multi-tenancy, which open the door to their use in confidential computing environments.

Attackers Use Event Logs to Hide Fileless Malware

The ability to inject malware into a system’s memory classifies it as fileless. As the name suggests, fileless malware infects targeted computers leaving behind no artifacts on the local hard drive, making it easy to sidestep traditional signature-based security and forensics tools. The technique, where attackers hide their activities in a computer’s random-access memory and use native Windows tools such as PowerShell and Windows Management Instrumentation (WMI), isn’t new. What is new, however, is how the encrypted shellcode containing the malicious payload is embedded into Windows event logs. To avoid detection, the code “is divided into 8 KB blocks and saved in the binary part of event logs.” Legezo said, “The dropper not only puts the launcher on disk for side-loading, but also writes information messages with shellcode into existing Windows KMS event log.” “The dropped wer.dll is a loader and wouldn’t do any harm without the shellcode hidden in Windows event logs,” he continues. “The dropper searches the event logs for records with category 0x4142 (“AB” in ASCII) and having the Key Management Service as a source.”
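
From a defender's point of view, the two indicators quoted above (category 0x4142 and a Key Management Service source) can be turned into a simple filter. The following is a minimal Python sketch, not the researchers' tooling: the `EventRecord` class and its field names are hypothetical stand-ins for however your log collector exposes event records, and a match is only a lead for investigation, not proof of compromise.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Indicators taken from the excerpt above.
SUSPICIOUS_CATEGORY = 0x4142          # the two bytes 0x41 0x42
KMS_SOURCE = "Key Management Service"
BLOCK_SIZE = 8 * 1024                 # 8 KB chunk size quoted in the excerpt


@dataclass
class EventRecord:
    """Hypothetical, simplified view of one event log record."""
    source: str
    category: int
    binary_data: bytes


def category_as_ascii(category: int) -> str:
    """Render a 16-bit category value as its two ASCII characters."""
    return category.to_bytes(2, "big").decode("ascii")


def is_suspicious(record: EventRecord) -> bool:
    """True when a record matches both indicators from the excerpt."""
    return record.category == SUSPICIOUS_CATEGORY and record.source == KMS_SOURCE


def find_suspicious(records: Iterable[EventRecord]) -> List[EventRecord]:
    """Return every record worth a closer look."""
    return [r for r in records if is_suspicious(r)]


if __name__ == "__main__":
    sample = [
        EventRecord("Key Management Service", 0x4142, b"\x00" * BLOCK_SIZE),
        EventRecord("Key Management Service", 0x0001, b""),
        EventRecord("Application Error", 0x4142, b""),
    ]
    print(category_as_ascii(SUSPICIOUS_CATEGORY))
    print(len(find_suspicious(sample)))
```

Requiring both indicators together keeps the filter narrow; checking the size of `binary_data` against `BLOCK_SIZE` could be added as a further, equally assumed, heuristic.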

Fortinet CEO Ken Xie: OT Business Will Be Bigger Than SD-WAN

"We definitely see OT as a bigger market going forward, probably bigger than SD-WAN," Xie tells investors Wednesday. "The growth is very, very strong. We do see a lot of potential, and we also have invested a lot in this area to meet the demand." Despite its potential, Fortinet's OT practice today is considerably smaller than its SD-WAN business, which has been a company priority for years. SD-WAN accounted for 16% of Fortinet's total billings in the quarter ended Dec. 31 while OT accounted for just 8% of total billings over that same time period. Fortinet last summer had the second-largest SD-WAN market share in the world, trailing only Cisco. Fortinet's OT success coincides with growing demand from manufacturers, which CFO Keith Jensen says is the one vertical that continues to stand out for the company. ... "The strength in manufacturing really speaks to the threat environment, ransomware, OT, and things of that nature," Jensen says. "Manufacturing is trying desperately to break into the top five of our verticals and it's getting closer and closer every quarter."

Meta has built a massive new language AI—and it’s giving it away for free

Meta AI says it wants to change that. “Many of us have been university researchers,” says Pineau. “We know the gap that exists between universities and industry in terms of the ability to build these models. Making this one available to researchers was a no-brainer.” She hopes that others will pore over their work and pull it apart or build on it. Breakthroughs come faster when more people are involved, she says. Meta is making its model, called Open Pretrained Transformer (OPT), available for non-commercial use. It is also releasing its code and a logbook that documents the training process. The logbook contains daily updates from members of the team about the training data: how it was added to the model and when, what worked and what didn’t. In more than 100 pages of notes, the researchers log every bug, crash, and reboot in a three-month training process that ran nonstop from October 2021 to January 2022. With 175 billion parameters (the values in a neural network that get tweaked during training), OPT is the same size as GPT-3. This was by design, says Pineau.
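
To give the 175 billion figure some scale, here is a rough back-of-the-envelope sketch in Python. It is an illustration under stated assumptions, not a description of how Meta stores or serves OPT: it counts only the raw weights, assumes every parameter uses the same numeric precision, and ignores optimizer state, activations, and any compression. The function name is hypothetical.

```python
OPT_PARAMETERS = 175_000_000_000  # parameter count quoted in the excerpt


def weights_size_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Approximate size of the raw weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


if __name__ == "__main__":
    # Assumed precisions: 2 bytes (16-bit floats) and 4 bytes (32-bit floats).
    for label, width in (("16-bit", 2), ("32-bit", 4)):
        print(f"{label}: {weights_size_gb(OPT_PARAMETERS, width):.0f} GB")
```

Under these assumptions the weights alone come to roughly 350 GB at 16-bit precision, which helps explain the gap between universities and industry that Pineau describes.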

Tackling the threats posed by shadow IT

Shadow IT can be tough to mitigate, given the embedded culture of hybrid working in many organizations, in addition to a general lack of engagement from employees with their IT teams. For staff to continue accessing apps securely from anywhere, at any time, and from any device, businesses must evolve their approach to organizational security. Given the modern-day working environment moves at such a fast pace, employees have turned en masse to shadow IT when the experience isn’t quick or accurate enough. This leads to the bypassing of secure networks and best practices and can leave IT departments out of the process. A way of controlling this is by deploying corporate managed devices that provide remote access, giving IT teams most of the control and removing the temptation for employees to use unsanctioned hardware. Providing them with compelling apps, data, and services with a good user experience should see a reduced dependence on shadow IT, putting IT teams back in the driving seat and restoring security.

5 AI adoption mistakes to avoid

Every AI-related business goal begins with data – it is the fuel that enables AI engines to run. One of the biggest mistakes companies make is not taking care of their data. This begins with the misconception that data is solely the responsibility of the IT department. Before data is captured and input into AI systems, business subject matter experts and data scientists should be looped in, and executives should provide oversight to ensure the right data is being captured and maintained appropriately. It’s important for non-IT personnel to realize they not only benefit from good data in yielding quality AI recommendations, but their expertise is a critical input to the AI system. Make sure that all teams have a shared sense of responsibility for curating, vetting, and maintaining data. Data management procedures are also a key component of data care. ... AI requires intervention to sustain it as an effective solution over time. For example, if AI is malfunctioning or if business objectives change, AI processes need to change. Doing nothing or not implementing adequate intervention could result in AI recommendations that hinder or act contrary to business objectives.

SEC Doubles Cyber Unit Staff to Protect Crypto Users

The SEC says that the newly named Crypto Assets and Cyber Unit, formerly known as the Cyber Unit, in the Division of Enforcement, will grow to 50 dedicated positions. "The U.S. has the greatest capital markets because investors have faith in them, and as more investors access the crypto markets, it is increasingly important to dedicate more resources to protecting them," says SEC Chair Gary Gensler. This dedicated unit has successfully brought dozens of cases against those seeking to take advantage of investors in crypto markets, he says. ... "This is great news! A lot of the cryptocurrency market is against any regulations, including those that would safeguard their own value, but that's not the vast majority of the rest of the world. The cryptocurrency world is full of outright scams, criminals and ne'er-do-well-ers," says Roger Grimes, data-driven defense evangelist at cybersecurity firm KnowBe4. Grimes adds that even legal and very sophisticated financiers and investors are taking advantage of the immaturity of the cryptocurrency market.

Quote for the day:

"The very essence of leadership is that you have to have vision. You can't blow an uncertain trumpet." -- Theodore M. Hesburgh