The 5-step plan for better Fraud and Risk management in the payments industry

The overall complexity and size of the digital payments industry make it

extremely difficult to detect fraud. In this context, merchants and payment

companies can introduce fraud monitoring and anti-fraud mechanisms that verify

every transaction in real time. AI-based systems can weigh several attributes

of a transaction, for example the amount, the unique bank card token, the

user’s digital fingerprint, and the payer’s IP address, to flag suspicious

activity and evaluate authenticity. Today, OTPs are synonymous with two-factor

authentication and are thought to augment existing passwords with an extra layer

of security. Yet fraudsters manage to circumvent them every day. With Out-of-Band

Authentication solutions in combination with real-time Fraud Risk management

solutions, the service provider can choose one of many multi-factor

authentication options available during adaptive authentication, depending on

their preference and risk profile. Just like 3D Secure, this is another

internationally accepted compliance mechanism that requires all the

intermediaries involved in the payments system to take special care of the

sensitive client information.
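
As a rough illustration of the idea above, the sketch below scores a transaction from the attributes the excerpt lists (amount, card token, digital fingerprint, payer IP) and maps the score to an authentication step. It is a rule-based stand-in rather than an AI model, and every name, weight, and threshold in it (`Transaction`, `risk_score`, `choose_authentication`, the 1000 limit, the 40/30/30 points) is an assumption made for the example, not something taken from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    amount: float
    card_token: str
    device_fingerprint: str
    ip_address: str


def risk_score(tx, known_devices, blocked_ips, amount_limit=1000.0):
    """Return an integer score from 0 to 100 (weights are illustrative)."""
    score = 0
    # Unusually large amounts add risk (the limit is an assumed value).
    if tx.amount > amount_limit:
        score += 40
    # A device never seen before with this card token adds risk.
    if tx.device_fingerprint not in known_devices.get(tx.card_token, set()):
        score += 30
    # A payer IP on a block list adds risk.
    if tx.ip_address in blocked_ips:
        score += 30
    return score


def choose_authentication(score):
    """Map a score to a hypothetical adaptive-authentication step."""
    if score >= 70:
        return "decline"
    if score >= 30:
        return "out_of_band"
    return "none"
```

A low-risk payment passes without extra friction, while a mid-range score triggers an out-of-band challenge. A real system would learn the weights from data rather than hard-code them.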

The Importance of Pipeline Quality Gates and How to Implement Them

There is no doubt that CI/CD pipelines have become a vital part of the modern

development ecosystem that allows teams to get fast feedback on the quality of

the code before it gets deployed. At least that is the idea in principle. The

sad truth is that companies too often waste the opportunity a CI/CD pipeline

offers for rapid test feedback and good quality control, because they fail to

implement effective quality gates in their pipelines. A quality gate is an enforced measure

built into your pipeline that the software needs to meet before it can proceed

to the next step. This measure enforces certain rules and best practices that

the code needs to adhere to, so that poor quality does not creep into the codebase. It

can also drive the adoption of test automation, as it requires testing to be

executed in an automated manner across the pipeline. This has a knock-on effect

of reducing the need for manual regression testing in the development cycle,

which drives rapid delivery across the project.
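
To make the notion of a quality gate concrete, here is a minimal sketch of a check that a pipeline step could run before letting a build proceed. The `Gate` type, the metric names, and the thresholds are hypothetical. A real pipeline would read the metrics from its own test and analysis reports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    name: str
    metric: str
    threshold: float
    higher_is_better: bool = True


def evaluate_gates(metrics, gates):
    """Return the names of failed gates; a missing metric counts as a failure."""
    failures = []
    for gate in gates:
        value = metrics.get(gate.metric)
        if value is None:
            failures.append(gate.name)
        elif gate.higher_is_better and value < gate.threshold:
            failures.append(gate.name)
        elif not gate.higher_is_better and value > gate.threshold:
            failures.append(gate.name)
    return failures
```

A pipeline step could exit with a non-zero status whenever `evaluate_gates` returns a non-empty list, which is what stops the build from moving to the next stage. Treating a missing metric as a failure is a deliberate choice here: a gate that silently passes when its report is absent enforces nothing.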

Best of 2022: Measuring Technical Debt

Of the different forms of technical debt, security and organizational debt are

the ones most often overlooked and excluded in the definition. These are also

the ones that often have the largest impact. It is important to recognize that

security vulnerabilities that remain unmitigated are technical debt just as much

as unfixed software defects. The question becomes more interesting when we look

at emerging vulnerabilities or low-priority vulnerabilities. While most will

agree that known, unaddressed vulnerabilities are a type of technical debt, it

is questionable if a newly discovered vulnerability is also technical debt. The

key here is whether the security risk needs to be addressed and, for that

answer, we can look at an organization’s service level agreements (SLAs) for

vulnerability management. If an organization sets an SLA that requires all

high-severity vulnerabilities be addressed within one day, then we can say that

high-severity vulnerabilities older than one day are debt. This is not to say that

vulnerabilities that do not exceed the SLA do not need to be addressed; only

that vulnerabilities within the SLA represent new work and only become debt when

they have exceeded the SLA.
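
The SLA rule described above can be expressed as a small check. This is only a sketch: the one-day window for high-severity findings comes from the example in the text, while the 30-day window for medium severity and the names `SLA_BY_SEVERITY` and `is_debt` are assumptions added for illustration.

```python
from datetime import datetime, timedelta

# One day for "high" mirrors the example in the text; "medium" is an assumed value.
SLA_BY_SEVERITY = {
    "high": timedelta(days=1),
    "medium": timedelta(days=30),
}


def is_debt(severity, discovered_at, now, sla=SLA_BY_SEVERITY):
    """Return True once an open vulnerability has exceeded its SLA window."""
    limit = sla.get(severity)
    if limit is None:
        # No SLA is defined for this severity, so it is never classed as debt here.
        return False
    return now - discovered_at > limit
```

Under this rule, a high-severity finding discovered 12 hours ago is still new work, and the same finding left open for two days has become debt.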

DevOps Trends for Developers in 2023

Security automation is the concept of automating security processes and tasks

to ensure that your applications and systems remain secure and free from

malicious threats. In the context of CI/CD, security automation ensures that

your code is tested for vulnerabilities and other security issues before it

gets deployed to production. In addition, by deploying security automation in

your CI/CD pipeline, you can ensure that only code that has passed all

security checks is released to the public/customers. This helps to reduce the

risk of vulnerabilities and other security issues in your applications and

systems. The goal of security automation in CI/CD is to create a secure

pipeline that allows you to quickly and efficiently deploy code without

compromising security. Since manual testing can consume a lot of developers'

time, many organizations are integrating security automation into

their CI/CD pipeline today. ... Also, the introduction of AI/ML in the

software development lifecycle (SDLC) is getting attention as the models are

trained to detect irregularities in the code and give suggestions to enhance

or rewrite it.

What Brands Get Wrong About Customer Authentication

When weighing friction for customers against account security and practical

security needs, one of the main challenges is convincing the revenue side of a

business of the need for best practice from a security standpoint.

Cybersecurity teams must demonstrate that the financial risks of not putting

security in place - i.e., fraud, account takeover, reputation loss, regulatory

fines, lawsuits, etc. - outweigh the loss of revenue and abandonment of

transactions on the other side. There are always costs associated with

security systems, but comparing the costs associated with fraud to those of

implementing new security measures will justify the purchase. There is a fine

balance between having effective security and operating a business. Customers

quickly become frustrated by jumping through hoops to log in, and the password

route is unsustainable. It’s time to look at the relationship between security

and authentication and develop solutions for both. Taking authentication to

the next level requires thinking outside the box. If you want to implement an

authentication strategy that doesn’t drive away customers, you need to make

customer experience the focal point.

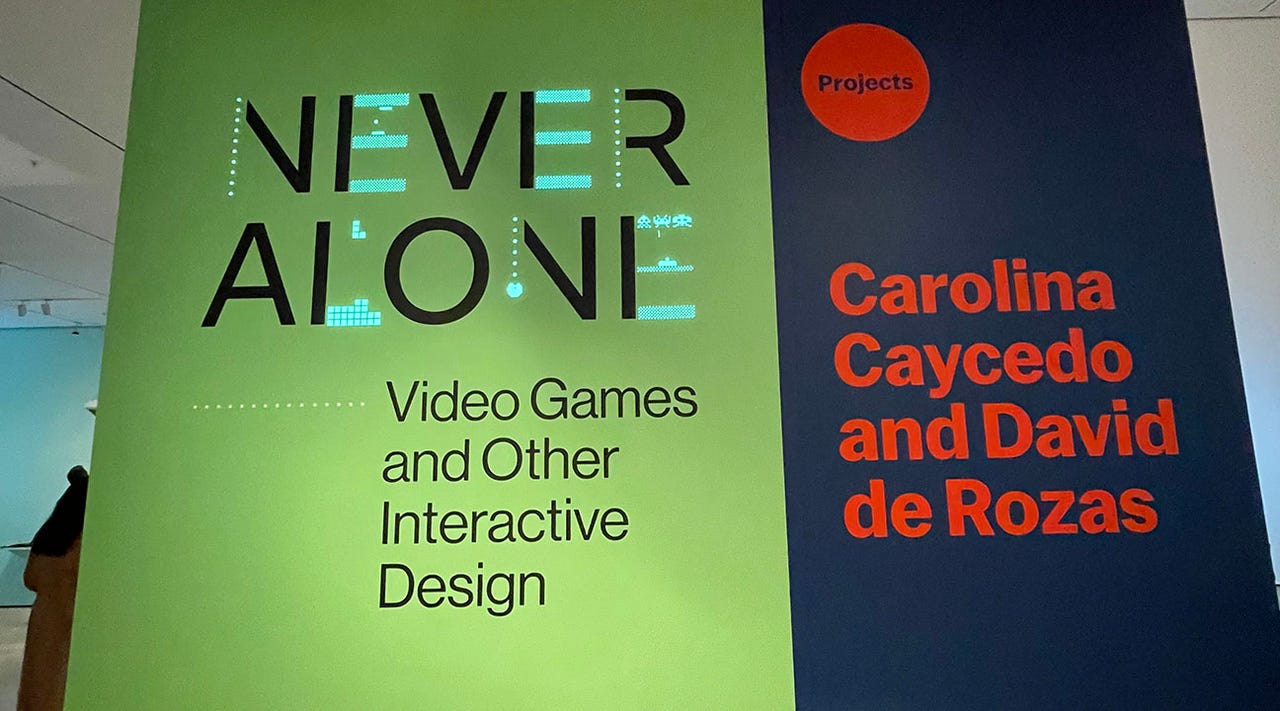

Video games and robots want to teach us a surprising lesson. We just have to listen

The speedy, colorful ghosts zooming their way around the maze greeted me as I

stared at the screen of a Pac-Man machine, a part of the 'Never Alone: Video

Games and Other Interactive Design' exhibit of the Museum of Modern Art in New

York City. Using the tiniest amount of RAM and code, each ghost is programmed

with its own specific behaviors, which combine to create the masterpiece work,

according to Paul Galloway, collection specialist for the Architecture and

Design Department. This was the first time I'd seen video games inside a

museum, and I had come to this exhibit to see if I could glean some insight

into technology through the lens of art. It's an exhibit that is more timely

now than ever, as technology has been absorbed into nearly every

facet of our lives both at work and at home -- and what I learnt is that our

empathy with technology is leading to new kinds of relationships between

ourselves and our robot friends. ... According to Galloway, the Never Alone

exhibit is linked to an Iñupiaq video game included in the exhibit called

Never Alone (Kisima Ingitchuna).

The increasing impact of ransomware on operational technology

To protect against initial intrusion of networks, organisations must

consistently find and remediate key vulnerabilities and known exploits, while

monitoring the network for attack attempts. Also, wherever possible equipment

should be kept up-to-date. VPNs in particular need close attention from cyber

security personnel; new VPN keys and certificates must be created, with

logging of activity over VPNs being enabled. Access to OT environments via

VPNs calls for architecture reviews, multi-factor authentication (MFA) and

jump hosts. In addition, users should read emails in plain text only, as

opposed to rendering HTML, and disable Microsoft Office macros. For network

access attempts from threat actors, organisations should perform an

architecture review for routing protocols involving OT, and monitor for the

use of open source tools. MFA should be implemented to access OT systems, and

intelligence sources utilised for threat and communication identification and

tracking.

The security risks of Robotic Process Automation and what you can do about it

RPA credentials are often shared so they can be used repeatedly. Because these

accounts and credentials are left unchanged and unsecured, a cyber attacker

can steal them, use them to elevate privileges, and move laterally to gain

access to critical systems, applications, and data. In addition, users with

administrator privileges can retrieve credentials stored in locations that are

not secured. As many enterprises leveraging RPA have numerous bots in

production at any given time, the potential risk is very high. Securing the

privileged credentials utilised by this emerging digital workforce is an

essential step in securing RPA workflows. ... The explosion in identities is

putting more pressure on security teams since it leads to the creation of more

vulnerabilities. The management of machine identities, in particular, poses

the biggest problem, given that they can be generated quickly without

consideration for security protocols. Further, while credentials used by

humans often come with organisational policy that mandates regular updates,

those used by robots remain unchanged and unmanaged.
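
One way to bring bot credentials under the same kind of rotation policy that human credentials follow is to flag any that have not been changed within a set window. The sketch below assumes a simple mapping of bot names to last-rotation dates. The 90-day window and the function name `stale_credentials` are illustrative assumptions, not taken from the article or from any RPA product.

```python
from datetime import date, timedelta


def stale_credentials(last_rotated, today, max_age=timedelta(days=90)):
    """Return the sorted bot names whose credential is older than max_age."""
    return sorted(
        name
        for name, rotated in last_rotated.items()
        if today - rotated > max_age
    )
```

Running a check like this on a schedule gives security teams a list of machine identities to rotate, rather than leaving them unchanged indefinitely.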

Best of 2022: Using Event-Driven Architecture With Microservices

Most existing systems live on-premises, while microservices live in private

and public clouds, so moving data across the often unstable and

unpredictable world of wide area networks (WANs) is tricky and time-consuming.

There are mismatches everywhere: updates to legacy systems are slow, but

microservices need to be fast and agile. Legacy systems use old communication

mediums, but microservices use modern open protocols and APIs. Legacy systems

are nearly always on-premises and at best use virtualization, but microservices

rely on clouds and IaaS abstraction. The case becomes clear – organizations

need an event-driven architecture to bridge all these mismatches between legacy

systems and microservices. ... Orchestration is a good description –

composers create scores containing sheets of music that will be played by

musicians with differing instruments. Each score and its musician are like a

microservice. In a complex symphony with a hundred musicians playing a wide

range of instruments – like any enterprise with complex applications – far

more orchestration is required.
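
The core of an event-driven design is that producers publish events without knowing who consumes them. The in-process sketch below shows only that decoupling. A production setup would use a message broker that can cope with unreliable WAN links, and the `EventBus` class and topic names here are hypothetical.

```python
class EventBus:
    """A minimal in-memory publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, handler):
        # Register a callable to be invoked for every event on this topic.
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic, payload):
        # Deliver the payload to each subscriber and return how many received it.
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

In this picture, an adapter in front of a legacy system would call `publish("order.updated", {...})`, and each microservice would `subscribe` to the topics it cares about. Neither side needs to know the other's protocol or location.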

Scope 3 is coming: CIOs take note

Many companies in Europe have built teams to address IT sustainability and

have appointed directors to lead the effort. Gülay Stelzmüllner, CIO of

Allianz Technology, recently hired Rainer Karcher as head of IT

sustainability. “My job is to automate the whole process as much as possible,”

says Karcher, who was previously director of IT sustainability at Siemens.

“This includes getting source data directly from suppliers and feeding that

into data cubes and data meshes that go into the reporting system on the front

end. Because it’s hard to get independent and science-based measurements from

IT suppliers, we started working with external partners and startups who can

make an estimate for us. So if I can’t get carbon emissions data directly from

a cloud provider, I take my invoices containing consumption data, and then

take the location of the data center and the kinds of equipment used. I put

all that information into a REST API provided by a Berlin-based company, and

using a transparent algorithm, they give me carbon emissions per service.”

Internally speaking, the head of IT sustainability role has become more common

in Europe—and some of the more forward-thinking US CIOs are starting to see

the need in their own organizations.

Quote for the day:

"The only way to follow your path is

to take the lead." -- Joe Peterson
