Nvidia still crushing the data center market

EVGA CEO Andy Han cited several grievances with Nvidia, not least of which was that EVGA had to compete with Nvidia itself. Nvidia makes graphics cards and sells them to consumers under the Founders Edition brand, something AMD and Intel do little of, or not at all. In addition, Nvidia sold its own cards for less than licensees charged for theirs. So not only was Nvidia competing with its licensees, it was also undercutting them.

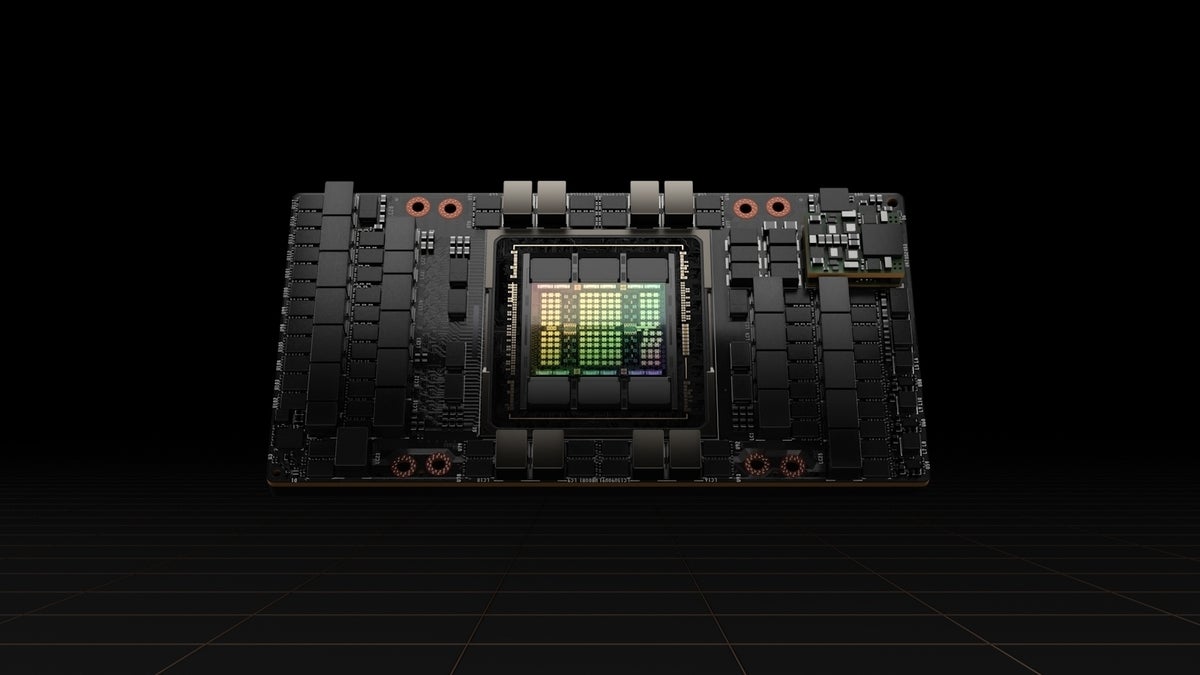

Nvidia does the same on the enterprise side, selling DGX server units (rack-mounted servers packed with eight A100 GPUs) in competition with OEM partners like HPE and Supermicro. Das defends this practice. “DGX for us has always been sort of the AI innovation vehicle where we do a lot of item testing,” he says, adding that building the DGX servers gives Nvidia the chance to shake out the bugs in the system, knowledge it passes on to OEMs. “Our work with DGX gives the OEMs a big head-start in getting their systems ready and out there. So it's actually an enabler for them.” But both Snell and Sag think Nvidia should not be competing against its partners. “I'm highly skeptical of that strategy,” Snell says.

A Look Ahead: Cybersecurity Trends to Watch in 2023

Multifactor authentication was once considered the gold standard of identity management, providing a crucial backstop for passwords. All that changed this year with a series of highly successful attacks using MFA bypass and MFA fatigue tactics, combined with tried-and-true phishing and social engineering. That success won’t go unnoticed: attackers will almost certainly increase multifactor authentication exploits. "Headline news attracts the next wave of also-rans and other bad actors that want to jump on the newest methods to exploit an attack," Bird says. "We're going to see a lot of situations where MFA strong authentication is exploited and bypassed, but it's just unfortunately a reminder to us all that tech is only a certain percentage of the solution."

Ransomware attacks have proliferated across public and private sectors, and tactics to pressure victims into paying ransoms have expanded to double and even triple extortion. Because many victims are reluctant to report the crime, no one really knows whether things are getting better or worse.

Why zero knowledge matters

In a sense, zero knowledge proofs are a natural elaboration on trends in complexity theory and cryptography. Much of modern cryptography (of the asymmetric kind) depends on complexity theory, because asymmetric security relies on one-way functions: functions that are easy to compute in one direction but infeasible to reverse. We can do a myriad of interesting things with such one-way functions. In particular, we can establish shared secrets on open networks, a capability that modern secure communications are built upon.

Much of cryptography is built on exploring the fringes of math (especially factorization and modular arithmetic) for useful properties. It follows that the great barrier to understanding ZKP is the math. Fortunately, it is possible to understand conceptually how zero knowledge proofs work without necessarily knowing what a quadratic residue is. For those of us who do care, a value $y$ is a quadratic residue modulo $z$ if there exists an integer $x$ such that $x^2 \equiv y \pmod{z}$. This rather esoteric concept was used in one of the original zero knowledge papers. Encapsulating ZKP's complex mathematical computations in libraries that are easy to use will be key to widespread adoption.
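To make the quadratic residue idea concrete, here is a minimal Python sketch of the classic interactive proof of quadratic residuosity, the kind of protocol the early zero knowledge literature was built on. A value y is a quadratic residue modulo n when some x satisfies x^2 ≡ y (mod n). In the sketch, the prover convinces a verifier that it knows such an x without revealing it. The function names and the toy modulus 77 are assumptions for illustration only. A real system would use a modulus that is the product of large secret primes and would rely on a vetted library rather than code like this.

```python
import math
import secrets


def is_quadratic_residue(y: int, n: int) -> bool:
    """Brute-force check for some x with x^2 ≡ y (mod n); only feasible for tiny n."""
    return any((x * x - y) % n == 0 for x in range(n))


def prover_commit(n: int) -> tuple[int, int]:
    """Prover picks a random r coprime to n and sends the commitment a = r^2 mod n."""
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            return r, (r * r) % n


def prover_respond(r: int, x: int, b: int, n: int) -> int:
    """Send r (challenge b == 0) or r*x (b == 1). If x is coprime to n, each
    response on its own is a uniformly random unit mod n, so it reveals nothing about x."""
    return (r * pow(x, b, n)) % n


def verifier_check(a: int, s: int, y: int, b: int, n: int) -> bool:
    """Accept the round if s^2 ≡ a * y^b (mod n)."""
    return (s * s) % n == (a * pow(y, b, n)) % n


def run_protocol(n: int, y: int, x: int, rounds: int = 20) -> bool:
    """Repeat commit/challenge/response. A prover who does not know a square
    root of y passes each round with probability at most 1/2."""
    for _ in range(rounds):
        r, a = prover_commit(n)
        b = secrets.randbelow(2)  # verifier's random challenge bit
        s = prover_respond(r, x, b, n)
        if not verifier_check(a, s, y, b, n):
            return False
    return True


if __name__ == "__main__":
    n = 7 * 11                      # toy modulus; real moduli are enormous
    secret_x = 10
    y = (secret_x * secret_x) % n   # 23, the public claim
    print(is_quadratic_residue(y, n))    # True
    print(run_protocol(n, y, secret_x))  # True: an honest prover always passes
    print(run_protocol(n, y, 3))         # almost certainly False, since 3^2 is not 23 mod 77
```

The verifier ends up convinced because a cheater can answer at most one of the two challenges for a given commitment. It learns nothing about x because a transcript containing only r^2, r or r*x could have been produced without knowing x.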

Rust Microservices in Server-side WebAssembly

Rust enables developers to write correct and memory-safe programs that are as fast and as small as C programs. It is ideally suited for infrastructure software, including server-side applications, that requires high reliability and performance. However, Rust also presents some challenges for server-side applications. Rust programs are compiled into native machine code, which is not portable and is unsafe in multi-tenant cloud environments. We also lack tools to manage and orchestrate native applications in the cloud. Hence, server-side Rust applications commonly run inside VMs or Linux containers, which bring significant memory and CPU overhead. This diminishes Rust’s efficiency advantages and makes it hard to deploy services in resource-constrained environments, such as edge data centers and edge clouds.

The solution to this problem is WebAssembly (Wasm). Wasm started as a secure runtime inside web browsers, and Wasm programs can be securely isolated in their own sandbox. With a new generation of Wasm runtimes, such as the Cloud Native Computing Foundation’s WasmEdge Runtime, you can now run Wasm applications on the server.

How to automate data migration testing

Testing, with plenty of time before the official cutover deadline, is usually the bulk of the hard work involved in data migration. The testing might be brief or extended, but it should be thoroughly conducted and confirmed before the process moves forward into the “live” phase. An automated data migration approach is a key element here: you want the process to work seamlessly while running in the background with minimal human intervention. This is why I favor continuous or frequent replication to keep things in sync. One common strategy is to run automated data synchronizations in the background via a scheduler or cron job that syncs only new data. Each time the process runs, less data needs to be transferred. ... Identify the automation techniques and principles that will ensure the data migration runs on its own. These should be applied across the board, regardless of data source or criticality, for consistency and simplicity’s sake. Monitoring and alerts that notify your team of data migration progress are key elements to consider now.
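A scheduled sync that copies only new data, followed by an automated check, can be sketched in Python with the standard-library `sqlite3` module. This is a minimal sketch under stated assumptions. The `customers` table and its `updated_at` change counter are hypothetical. The caller is assumed to store the watermark between runs. A row-count-plus-checksum comparison stands in for the verification step. A cron job or other scheduler would call `incremental_sync` on each run. Deletes, clock skew, and late-committed rows that share the watermark value are not handled.

```python
import hashlib
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("migration")

# Hypothetical schema, used for illustration only.
SCHEMA = "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"


def incremental_sync(source: sqlite3.Connection, target: sqlite3.Connection,
                     watermark: int) -> tuple[int, int]:
    """Copy only rows changed since `watermark`; return (rows_copied, new_watermark)."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE makes re-running the same batch harmless.
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)", rows
    )
    target.commit()
    new_watermark = rows[-1][2] if rows else watermark
    log.info("synced %d rows, watermark %d -> %d", len(rows), watermark, new_watermark)
    return len(rows), new_watermark


def table_checksum(conn: sqlite3.Connection) -> tuple[int, str]:
    """Row count plus a SHA-256 digest over all rows in primary-key order."""
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute("SELECT id, name, updated_at FROM customers ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def verify_migration(source: sqlite3.Connection, target: sqlite3.Connection) -> bool:
    """Automated test: source and target must match in row count and content."""
    matches = table_checksum(source) == table_checksum(target)
    if not matches:
        log.warning("source and target differ; alert the team before cutover")
    return matches


if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (src, dst):
        conn.execute(SCHEMA)
    src.executemany("INSERT INTO customers VALUES (?, ?, ?)", [(1, "Ada", 100), (2, "Lin", 101)])
    src.commit()
    copied, mark = incremental_sync(src, dst, watermark=0)     # first run copies 2 rows
    src.execute("INSERT INTO customers VALUES (3, 'Sam', 102)")
    src.commit()
    copied, mark = incremental_sync(src, dst, watermark=mark)  # next run copies only 1
    print(copied, mark, verify_migration(src, dst))            # 1 102 True
```

The watermark is what makes each run smaller than the last. The log lines and the warning in `verify_migration` are natural hooks for the monitoring and alerts mentioned above.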

Clean Code: Writing maintainable, readable and testable code

Clean code makes it easier for developers to understand, modify, and maintain a software system. When code is clean, bugs are easier to find and fix, and the code is less likely to break when changes are made. One of the key principles of clean code is readability: code should be easy to understand, even for someone who is not familiar with the system. To achieve this, developers should, for example, use meaningful names for variables, functions, and classes. Another important principle is simplicity: code should be as simple as possible, without unnecessary complexity. To achieve this, developers should avoid complex data structures or algorithms unless they are necessary, and should avoid adding unnecessary features or functionality. In addition to being readable and simple, clean code should be maintainable, meaning it should be easy to modify and update without breaking it. To achieve this, developers should write modular code organized into small, focused functions, and should avoid duplicating code. Finally, clean code should be well documented.
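As a small, hypothetical illustration of these principles, the two Python versions below compute the same order total. The first uses cryptic names and a magic number, and it writes the price calculation twice. The second uses meaningful names, small single-purpose functions, a named constant, and docstrings. The order data and the 5% book discount are invented for the example.

```python
from dataclasses import dataclass


# Before: cryptic names, a magic number, and the price calculation written twice.
def calc(d):
    t = 0
    for i in d:
        if i["type"] == "book":
            t += i["price"] * i["qty"] * 0.95
        else:
            t += i["price"] * i["qty"]
    return t


# After: the same logic, split into small functions that are easy to read and test.
BOOK_DISCOUNT_RATE = 0.05  # hypothetical business rule


@dataclass
class OrderLine:
    category: str
    unit_price: float
    quantity: int


def line_subtotal(line: OrderLine) -> float:
    """Price of one order line before any discount."""
    return line.unit_price * line.quantity


def discount_rate(line: OrderLine) -> float:
    """Fraction taken off this line's subtotal."""
    return BOOK_DISCOUNT_RATE if line.category == "book" else 0.0


def order_total(lines: list[OrderLine]) -> float:
    """Sum of discounted line subtotals."""
    return sum(line_subtotal(line) * (1 - discount_rate(line)) for line in lines)
```

Each small function can now be unit-tested on its own, and changing the discount rule touches exactly one place instead of a branch buried in a loop.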

Artificial intelligence predictions 2023

Synthetic data (data artificially generated by a computer simulation) will grow exponentially in 2023, says Steve Harris, CEO of Mindtech. “Big companies that have already adopted synthetic data will continue to expand and invest as they know it is the future,” says Harris. Harris gives the example of crash testing in the automotive industry. It would be infeasible to keep rehearsing the same car crash again and again using crash test dummies, but with synthetic data you can do just that. The virtual world is not limited in the same way, which has led to heavy adoption of synthetic data for AI road safety testing. Harris says synthetic data is now being used in industries he never expected, to improve development, services, and innovation. ...
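As a toy illustration of data "artificially generated by a computer simulation", the Python sketch below simulates thousands of emergency-braking scenarios that would be impractical to stage physically. It is an assumption-laden example, not Mindtech's method. It uses the textbook stopping-distance model d = v·t_r + v²/(2μg) with invented parameter ranges, and it labels each scenario by whether the car stops before an obstacle. Seeding the generator makes every simulated "crash" exactly repeatable.

```python
import random
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass(frozen=True)
class BrakingScenario:
    speed_mps: float    # vehicle speed, m/s
    friction: float     # tyre-road friction coefficient (mu)
    reaction_s: float   # driver reaction time, s
    obstacle_m: float   # distance to the obstacle, m
    stopping_m: float   # simulated stopping distance, m
    collision: bool     # label: did the car fail to stop in time?


def stopping_distance(speed_mps: float, friction: float, reaction_s: float) -> float:
    """Distance covered while reacting plus braking distance v^2 / (2 * mu * g)."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * friction * G)


def generate_scenarios(count: int, seed: int = 0) -> list[BrakingScenario]:
    """Generate labelled synthetic scenarios; the same seed reproduces the same data."""
    rng = random.Random(seed)
    scenarios = []
    for _ in range(count):
        # Illustrative parameter ranges, assumed rather than taken from any real test programme.
        speed = rng.uniform(5.0, 35.0)
        friction = rng.uniform(0.3, 0.9)
        reaction = rng.uniform(0.5, 2.0)
        obstacle = rng.uniform(10.0, 120.0)
        stopping = stopping_distance(speed, friction, reaction)
        scenarios.append(
            BrakingScenario(speed, friction, reaction, obstacle, stopping, stopping > obstacle)
        )
    return scenarios


if __name__ == "__main__":
    data = generate_scenarios(10_000, seed=42)
    crash_rate = sum(s.collision for s in data) / len(data)
    print(f"{len(data)} scenarios, {crash_rate:.1%} end in a collision")
```

Production simulators model far more (vehicle dynamics, sensors, rendered imagery), but the principle is the same: cheap, repeatable, automatically labelled examples.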

Banks will use AI more heavily to analyse capital markets and spot opportunities, giving them a competitive advantage. “2023 is going to be the year the rubber meets the road for AI in capital markets,” says Matthew Hodgson, founder and CEO of Mosaic Smart Data. “Amidst the backdrop of volatility and economic uncertainty across the globe, the most precious resource for a bank is its transaction records – and within this is its guide to where opportunity resides.”

Group Coaching - Extending Growth Opportunity Beyond Individual Coaching

First, because as coaches our focus is on the relationships and interactions between individuals, we don’t coach individuals in separate sessions. Instead, we bring them together as the group or team they are part of and coach the entire group. Anything said by one member of the team is heard by everyone right there and then. The second building block is holding the mirror to the intangible entity mentioned above. To be accurate, holding the mirror is not a new skill for practitioners of individual coaching, but it takes a significantly different form in group coaching and has a more pronounced impact here. Holding the mirror here means picking up the intangibles and making the implicit explicit: for example, sensing the mood in the room or reading body language, a drop or rise in energy, head nods, smiles, dropped shoulders, emotions, and so on, and playing your observations back to the room (sans judgement, obviously). Making the intangibles explicit is an important step in group coaching: name it to tame it, if you will. The third building block is believing and trusting that the group system is intelligent and self-healing.

Hybrid cloud in 2023: 5 predictions from IT leaders

Hood says this trend is fundamentally about operators accelerating their 5G network deployments while simultaneously delivering innovative edge services to their enterprise customers, especially in key verticals like retail, manufacturing, and energy. He also expects growing use of AI/ML at the edge to help optimize telco networks and hybrid edge clouds. “Many operators have been consuming services from multiple hyperscalers while building out their on-premise deployment to support their different lines of business,” Hood says. “The ability to securely distribute applications with access to data acceleration and AI/ML GPU resources while meeting data sovereignty regulations is opening up a new era in building application clouds independent of the underlying network infrastructure.” ... “Given a background of low margins, limited budgets, and the complexity of IT systems required to keep their businesses operating, many retailers now understandably rely on a hybrid cloud approach to help reduce costs whilst delivering value to their customers,” says Ian Boyle, Red Hat chief architect for retail.

Looking ahead to the network technologies of 2023

The growth in Internet dependence is really what’s been driving the cloud, because high-quality, interactive user interfaces are critical, and the cloud’s technology is far better for those things, not to mention easier to adopt than changing a data center application would be. A lot of cloud interactivity, though, adds latency, which further underscores the need to improve Internet latency. Interactivity and latency sensitivity tend to drive two cloud impacts that then become network impacts. The first is that as you move interactive components to the cloud via the Internet, you’re creating a new network in and to the cloud that parallels traditional MPLS VPNs. The second is that you’re encouraging cloud hosting to move closer to the edge to reduce application latency. ... What about security? The Internet and cloud combination changes that too. You can’t rely on fixed security devices inside the cloud, so more and more applications will use cloud-hosted instances of security tools. Today, only about 7% of security is handled that way, but that share will triple (to roughly 21%) by the end of 2023 as SASE, SSE, and other cloud-hosted security elements explode.

Quote for the day:

"Leadership is unlocking people's potential to become better." -- Bill Bradley
