7 ways CIOs can build a high-performance team

“People want to grow and change, and good business leaders are willing to give

them the opportunity to do so,” adds Cohn. Here, you can get HR involved,

encouraging them to bring their expertise and ideas to the table to help you

come up with the right approach to training and employee development. In

addition, it’s important to remember that an empathetic leader understands that

people come from different places and therefore won’t grow and develop in the

same manner. Modern CIOs must approach upskilling and training with this reality

in mind, advises Benjamin Marais, CIO at financial services company Liberty

Group SA. You also need to create opportunities that expose your employees to

what’s happening outside the business, suggests van den Berg. This is especially

true where it pertains to future technologies and skills because if teams know

what’s out there, they better understand what they need to do to keep up. Given

the rise in competition for skills in the market, you have to demonstrate your

best when trying to attract and retain top talent, stresses Cohn.

10 Trends in DevOps, Automated Testing and More for 2023

Developers and QA professionals are some of the most sought-after skilled

laborers, and they are acutely aware of the value they provide to organizations. As

we head into next year, this group will continue to leverage the demand for

their skills in pursuit of their ideal work environment. Companies that do not

consider their developer experience and force pre-pandemic systems onto a

hybrid-first world set themselves up for failure, especially when tools for

remote and virtual testing and quality assurance are readily available.

Developer teams also need to be equally equipped for success through the tools

and opportunities that can help ensure an innate sense of value to the

organization – and if they don’t have the tools they need, these developers

will find them elsewhere. ... We’re starting to see consolidation in both the

market and in the user personas we’re all chasing. Testing companies are

offering monitoring, and monitoring companies are offering testing. This is a

natural outcome of the industry’s desire to move toward true observability:

deep understanding of real-world user behavior, synthetic user testing,

passively watching for signals and doing real-time root cause analysis—all in

service of perfecting the customer experience.

The beautiful intersection of simulation and AI

Simulation models can synthesize real-world data that is difficult or

expensive to collect into good, clean and cataloged data. While most AI models

run using fixed parameter values, they are constantly exposed to new data that

may not be captured in the training set. If unnoticed, these models will

generate inaccurate insights or fail outright, causing engineers to spend

hours trying to determine why the model is not working. ... Businesses have

always struggled with time-to-market. Organizations that push a buggy or

defective solution to customers risk irreparable harm to their brand,

particularly startups. The opposite is also true: “also-rans” in an established

market have difficulty gaining traction. Simulations were an important design

innovation when they were first introduced, but their steady improvement and

ability to create realistic scenarios can slow perfectionist engineers. Too

often, organizations try to build “perfect” simulation models that take a

significant amount of time to build, which introduces the risk that the market

will have moved on.

What is VPN split tunneling and should I be using it?

The ability to choose which apps and services use your VPN of choice and which

don't is incredibly powerful. Activities like remote work, browsing your

bank's website, or online shopping via public Wi-Fi can definitely benefit

from the added security of a VPN, but other pursuits, like playing online

games or streaming readily available content, can be hurt by the slight delay

VPNs may add to your traffic. The modest decrease to your connection speed is

barely noticeable for browsing, but can be disastrous for online games. Being

able to simultaneously connect to sensitive sites and services through your

secure VPN, and to non-sensitive games and apps directly, means you won't constantly

need to enable and disable your VPN connection when switching tasks. This is

important as forgetting to enable it at the wrong time could leave you exposed

to security risks. ... Split tunneling divides your network traffic in two.

Your standard, unencrypted traffic continues to flow unimpeded down one path,

while your sensitive and secured data gets encrypted and routed through the

VPN's private network. It's like having a second network connection that's

completely separate, a tiny bit slower, but also far more secure.
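
To make the two paths concrete, here is a minimal sketch of the routing decision in Python. It assumes a destination-based policy (real VPN clients often split by app instead), and the network ranges and the `choose_path` helper are hypothetical names used only for illustration.

```python
import ipaddress

# Hypothetical policy: destinations inside these networks use the VPN tunnel.
# Both ranges are placeholders chosen for illustration, not real services.
VPN_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),      # assumed corporate range
    ipaddress.ip_network("203.0.113.0/24"),  # assumed sensitive service range
]


def choose_path(destination: str) -> str:
    """Return "vpn" for destinations covered by the policy, else "direct"."""
    addr = ipaddress.ip_address(destination)
    if any(addr in network for network in VPN_NETWORKS):
        return "vpn"
    return "direct"


if __name__ == "__main__":
    for ip in ("10.1.2.3", "198.51.100.7"):
        print(ip, "->", choose_path(ip))
```

Everything that matches the policy is encrypted and tunneled, and everything else takes the ordinary connection, which is why games and streaming avoid the added delay.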

Why don’t cloud providers integrate?

Although it’s not an apples-to-apples comparison, Google’s Anthos enables

enterprises to run applications across clouds and other operating

environments, including ones Google doesn’t control. As with Amazon DataZone,

it’s very possible to manage third-party data sources. One senior IT executive

from a large travel and hospitality company told me on condition of anonymity,

“I’m sure [cloud vendors] can integrate with third-party services, but I

suspect that’s not a choice they’re willing to make. For instance, they could

publish some interfaces for third parties to integrate with their control

plane as well as other means in the data plane.” Integration is possible, in

other words, but vendors don’t always seem to want it. This desire to control

sometimes leads vendors down roads that aren’t optimal for customers. As this

IT executive said, “The ecosystem is being broken. Instead of interoperating

with third-party services, [cloud vendors often] choose to create

API-compatible competing services.” He continued, “There is a zero-sum game

mindset here.” Namely, if a customer runs a third-party database and not the

vendor’s preferred first-party database, the vendor has lost.

How RegTech helps financial services providers overcome regulation challenges

Two main types of RegTech capabilities are helping financial service

institutions stay compliant: software that encompasses the whole system — for

example a full client onboarding cycle — and software that manages a

particular process, such as reporting or document management. Hugo Larguinho

Brás explains: “The technologies that handle the whole process from A to Z are

typically heavier to deploy, but they will allow you to cover most of your

needs. These are also more expensive and often more difficult to adapt in line

with a company’s specificities.” “Meanwhile, those technologies that treat

part of the process can be combined with other tools. While this brings more

agility, the need to find and combine several tools can also turn your target

model more complex to run.” “We see more and more cloud and on-premises

solutions available to asset management and securities companies, from

software-as-a-service (SaaS) and platform-as-a-service (PaaS) deployed

in-house, to solutions combined to outsourced capabilities ...”

What You Need to Know About Hyperscalers

Current hyperscaler adopters are primarily large enterprises. “The speed,

efficiencies, and global reach hyperscalers can provide will surpass what most

enterprise organizations can build within their own data centers,” Drobisewski

says. He predicts that the partnerships being built today between hyperscalers

and large enterprises are strategic and will continue to grow in value. “As

hyperscalers maintain their focus on lifecycle, performance, and resiliency,

businesses can consume hyperscaler services to thrive and accelerate the

creation of new digital experiences for their customers,” Drobisewski says.

... Many adopters begin their hyperscaler migration by selecting the software

applications that are best suited to run within a cloud environment, Hoecker

says. Over time, these organizations will continue to migrate workloads to the

cloud as their business goals evolve, he adds. Many hyperscaler adopters, as

they become increasingly comfortable with the approach, are beginning to

establish multi-cloud estates. “The decision criteria is typically based on

performance, cost, security, access to skills, and regulatory and compliance

factors,” Hoecker notes.

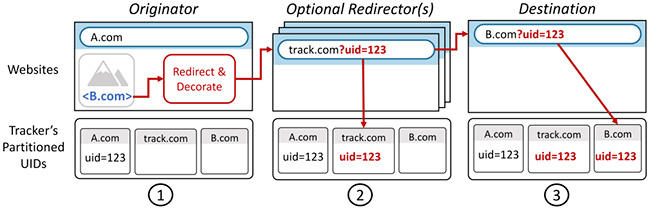

UID smuggling: A new technique for tracking users online

Researchers at UC San Diego have for the first time sought to quantify the

frequency of UID smuggling in the wild, by developing a measurement tool

called CrumbCruncher. CrumbCruncher navigates the Web like an ordinary user,

but along the way, it keeps track of how many times it has been tracked using

UID smuggling. The researchers found that UID smuggling was present in about 8

percent of the navigations that CrumbCruncher made. The team is also releasing

both their complete dataset and their measurement pipeline for use by browser

developers. The team’s main goal is to raise awareness of the issue with

browser developers, said first author Audrey Randall, a computer science Ph.D.

student at UC San Diego. “UID smuggling is more widely used than we

anticipated,” she said. “But we don’t know how much of it is a threat to user

privacy.” ... UID smuggling can have legitimate uses, the researchers say. For

example, embedding user IDs in URLs can allow a website to realize a user is

already logged in, which means they can skip the login page and navigate

directly to content.
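
As a rough illustration of what an identifier carried in a URL looks like, the Python sketch below scans a chain of navigation URLs for long query-parameter values that show up on more than one host. This is not how CrumbCruncher works; the `shared_query_values` helper, the length threshold, and the example URLs are all assumptions made for illustration.

```python
from urllib.parse import parse_qsl, urlsplit


def shared_query_values(urls, min_length=8):
    """Return query-parameter values that appear on more than one host.

    Short values are ignored because they are unlikely to be unique IDs.
    The threshold is an arbitrary assumption, not a measured cutoff.
    """
    hosts_by_value = {}
    for url in urls:
        parts = urlsplit(url)
        for _name, value in parse_qsl(parts.query):
            if len(value) >= min_length:
                hosts_by_value.setdefault(value, set()).add(parts.hostname)
    return {value for value, hosts in hosts_by_value.items() if len(hosts) > 1}


if __name__ == "__main__":
    # Hypothetical navigation chain: article, redirector, landing page.
    chain = [
        "https://news.example/article?id=42",
        "https://tracker.example/r?uid=a1b2c3d4e5f6&next=shop",
        "https://shop.example/landing?ref=a1b2c3d4e5f6",
    ]
    print(shared_query_values(chain))
```

A value that crosses hosts is only a candidate. As the researchers note, the same mechanism can serve legitimate purposes such as skipping a login page.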

Bring Sanity to Managing Database Proliferation

How can you avoid being a victim of the bow wave of database proliferation?

Recognize that you can allocate your resources in a way that benefits both

your bottom line and your stress level by consolidating how you run and manage

modern databases. Investing heavily in self-managing the legacy databases used

in high volume by many of your people makes a lot of sense. Database workloads

that are typically used for mission-critical transaction processing, such as

IBM DB2 in financial services, are subject to performance tuning, regular

patching and upgrading by specialized database administrators in a kind of

siloed sanctum sanctorum. Many organizations will hire an in-house Oracle or

SAP Hana expert and create a team, ... But what about the 40 other highly

functional, highly desirable cloud databases in your enterprise that aren’t

used as often? Do you need another 20 people to manage them? Open source

databases like MySQL, MongoDB, Cassandra, PostgreSQL and many others have

gained wide adoption, and many of their use cases are considered

mission-critical.

An Ode to Unit Tests: In Defense of the Testing Pyramid

What does the unit in unit tests mean? It means a unit of behavior. There's

nothing in that definition dictating that a test has to focus on a single

file, object, or function. Why is it difficult to write unit tests focused on

behavior? A common problem with many types of testing comes from a tight

connection between software structure and tests. That happens when the

developer loses sight of the test goal and approaches it in a clear-box

(sometimes referred to as white-box) way. Clear-box testing means testing with

the internal design in mind to guarantee the system works correctly. This is

really common in unit tests. The problem with clear-box testing is that tests

tend to become too granular, and you end up with a huge number of tests that

are hard to maintain due to their tight coupling to the underlying structure.

Part of the unhappiness around unit tests stems from this fact. Integration

tests, being more removed from the underlying design, tend to be impacted less

by refactoring than unit tests. I like to look at things differently. Is this

a benefit of integration tests or a problem caused by the clear-box testing

approach? What if we had approached unit tests in an opaque-box way?
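
One way to picture an opaque-box unit test is the Python sketch below. The `Cart` class is a hypothetical example, not taken from the article. The tests exercise only its public behavior (`add` and `total`), so the internal storage could change from a dict to a list without breaking them.

```python
class Cart:
    """Hypothetical shopping cart used only to illustrate the idea."""

    def __init__(self):
        # Internal detail: maps item name to (unit_price, count).
        # The tests below never look at this attribute.
        self._items = {}

    def add(self, name, unit_price, quantity=1):
        _, count = self._items.get(name, (unit_price, 0))
        self._items[name] = (unit_price, count + quantity)

    def total(self):
        return sum(price * count for price, count in self._items.values())


def test_total_reflects_added_items():
    cart = Cart()
    cart.add("pen", 2, quantity=3)
    cart.add("notebook", 5)
    assert cart.total() == 11


def test_adding_same_item_twice_accumulates():
    cart = Cart()
    cart.add("pen", 2)
    cart.add("pen", 2, quantity=2)
    assert cart.total() == 6


if __name__ == "__main__":
    test_total_reflects_added_items()
    test_adding_same_item_twice_accumulates()
    print("all behavior tests passed")
```

A clear-box version would instead assert on the contents of `_items`, which ties the test to the current structure and makes refactoring more costly.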

Quote for the day:

"Strategy is not really a solo sport

even if you're the CEO." -- Max McKeown
