Digitalization: Welcome to the City 4.0

Applied to cities, digitalization can not only improve efficiency by minimizing wasted time and resources but also improve a city’s productivity, secure growth, and drive economic activity. The Finnish capital of Helsinki is currently in the process of proving this. An early adopter of smart city technology and modeling, it launched the Helsinki 3D+ project to create a three-dimensional representation of the city using reality capture technology provided by the software company Bentley Systems for geocoordination, evaluation of options, modeling, and visualization. The project’s aim is to improve the city’s internal services and processes and provide data for further smart city development. Upon completion, Helsinki’s 3-D city model will be shared as open data to encourage commercial and academic research and development. Thanks to the available data and analytics, the city will be able to drive its green agenda in a way that is much more focused on sustainable consumption of natural resources and a healthy environment.

How to decommission a data center

"They need to know what they have. That’s the most basic. What equipment do you have? What apps live on what device? And what data lives on each device?” says Ralph Schwarzbach, who worked as a security and decommissioning expert with Verisign and Symantec before retiring. All that information should be in a configuration management database (CMDB), which serves as a repository for configuration data pertaining to physical and virtual IT assets. A CMDB “is a popular tool, but having the tool and processes in place to maintain data accuracy are two distinct things," Schwarzbach says. A CMDB is a necessity for asset inventory, but “any good CMDB is only as good as the data you put in it,” says Al DeRose, a senior IT director responsible for infrastructure design, implementation and management at a large media firm. “If your asset management department is very good at entering data, your CMDB is great. [In] my experience, smaller companies will do a better job of assets. Larger companies, because of the breadth of their space, aren’t so good at knowing what their assets are, but they are getting better.”

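The three questions Schwarzbach asks (what equipment, which apps on which device, which data on each device) map naturally onto a small inventory structure. The following is only a minimal sketch of that idea, not how any particular CMDB product works; the `Asset` and `Inventory` classes and all field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Asset:
    """One device record. All field names are hypothetical."""
    asset_id: str
    kind: str  # e.g. "server", "switch", "vm"
    apps: set[str] = field(default_factory=set)
    datasets: set[str] = field(default_factory=set)


class Inventory:
    """A toy stand-in for a CMDB, keyed by asset ID."""

    def __init__(self) -> None:
        self._assets: dict[str, Asset] = {}

    def add(self, asset: Asset) -> None:
        self._assets[asset.asset_id] = asset

    def devices_running(self, app: str) -> list[str]:
        """Which devices does this app live on?"""
        return sorted(a.asset_id for a in self._assets.values() if app in a.apps)

    def data_on(self, asset_id: str) -> set[str]:
        """Which data lives on this device?"""
        return set(self._assets[asset_id].datasets)

    def incomplete_records(self) -> list[str]:
        """Assets with no apps and no data recorded: likely data-entry gaps."""
        return sorted(
            a.asset_id
            for a in self._assets.values()
            if not a.apps and not a.datasets
        )
```

A check like `incomplete_records()` reflects DeRose's point that the inventory is only as good as the data entered into it: empty records are worth reviewing before any equipment is decommissioned.
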
The Problem With “Cloud Native”

The problem is thinking about and creating a common understanding around a change that big. Here the industry does itself no favors. For years, many people thought cloud technology was somehow part of the atmosphere itself. In reality, few things are so very physical: Big public cloud computing vendors like Amazon Web Services, Microsoft Azure, and Google Cloud each operate globe-spanning systems, with millions of computer servers connected by hundreds of thousands of miles of fiber-optic cable. Most people now know the basics of cloud computing, but understanding it remains a problem. Take a current popular term, “cloud native.” Information technologists use it to describe strategies, people, teams, and companies that “get” the cloud and use it for maximum utility. Others use it to describe an approach to building, deploying, and managing things in a cloud computing environment. People differ. Whether it’s referring to people or software, “cloud native” is shorthand for operating with the fullest power of the cloud.

Why You Need a Cyber Hygiene Program

Well-known campaigns and breaches either begin or are accelerated by breakdowns in the most mundane areas of security and system management. Unpatched systems, misconfigured protections, overprivileged accounts and pervasively interconnected internal networks all make the initial intrusion easier and make the lateral spread of an attack almost inevitable. I use the phrase “cyber hygiene” to describe the simple but overlooked security housekeeping that ensures visibility across the organization’s estate, that highlights latent vulnerability in unpatched systems and that encourages periodic review of network topologies and account or role permissions. These are not complex security tasks like threat hunting or forensic root cause analysis; they are simple, administrative functions that can provide value far in excess of more expensive and intrusive later-stage security investments. ... The execution of most cyber hygiene falls squarely on the shoulders of the IT, network and support teams.

A Beginner's Guide to Microsegmentation

Security experts overwhelmingly agree that visibility issues are the biggest obstacles that stand in the way of successful microsegmentation deployments. The more granularly segments are broken down, the better the IT organization needs to understand exactly how data flows and how systems, applications, and services communicate with one another. "You not only need to know what flows are going through your route gateways, but you also need to see down to the individual host, whether physical or virtualized," says Jarrod Stenberg, director and chief information security architect at Entrust Datacard. "You must have the infrastructure and tooling in place to get this information, or your implementation is likely to fail." This is why any successful microsegmentation needs to start with a thorough discovery and mapping process. As a part of that, organizations should either dig up or develop thorough documentation of their applications, says Stenberg, who explains that documentation will be needed to support all future microsegmentation policy decisions to ensure the app keeps working the way it is supposed to function.
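
The discovery and mapping step described above can be pictured as turning observed host-to-host flows into candidate segment-to-segment rules. This is a minimal sketch under stated assumptions: the `Flow` record, the `segment_of` mapping, and `build_allowlist` are all hypothetical names, and real tooling would gather flows from network telemetry rather than a hand-written list.

```python
from collections import defaultdict
from typing import NamedTuple


class Flow(NamedTuple):
    """One observed connection (hypothetical record shape)."""
    src: str
    dst: str
    port: int


def build_allowlist(flows, segment_of):
    """Derive candidate allow rules between segments from observed flows.

    Returns (rules, unmapped): rules maps (src_segment, dst_segment) to the
    set of ports seen, and unmapped lists hosts with no known segment.
    """
    rules = defaultdict(set)
    unmapped = set()
    for flow in flows:
        src_seg = segment_of.get(flow.src)
        dst_seg = segment_of.get(flow.dst)
        if src_seg is None or dst_seg is None:
            # A host we cannot place is a visibility gap, not a rule.
            unmapped.update(h for h in (flow.src, flow.dst) if h not in segment_of)
            continue
        if src_seg != dst_seg:
            # Only traffic crossing a segment boundary needs an explicit rule.
            rules[(src_seg, dst_seg)].add(flow.port)
    return dict(rules), unmapped
```

Hosts that end up in `unmapped` are exactly the visibility gaps Stenberg warns about: they need to be identified and documented before any policy is enforced.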

Cryptomining Botnet Smominru Returns With a Vengeance

Smominru uses a number of methods to compromise devices. For example, in addition to exploiting the EternalBlue vulnerability found in certain versions of Windows, it uses brute-force attacks against MS-SQL, Remote Desktop Protocol and Telnet, according to the Guardicore report. Once the botnet compromises the system, a PowerShell script named blueps.txt is downloaded onto the machine to run a number of operations, including downloading and executing three binary files: a worm downloader, a Trojan and a Master Boot Record (MBR) rootkit, Guardicore researchers found. The worm module moves malicious payloads through the network. The PcShare open-source Trojan has a number of jobs, including acting as the command-and-control, capturing screenshots and stealing information, and most likely downloading a Monero cryptominer, the report notes. The group behind the botnet uses almost 20 scripts and binary payloads in its attacks. Plus, it uses various backdoors in different parts of the attack, the researchers report. These include newly created users, scheduled tasks, Windows Management Instrumentation objects and services set to run when the system boots, Guardicore reports.

How to prevent lingering software quality issues

To build in quality, he advocates that IT undertake systematic approaches to software testing. In manufacturing, building in quality entails designing a process that helps improve the final product, while in IT that approach is about producing a higher-quality application. Yet, software quality and usability issues are, in many ways, harder to diagnose than problems in physical goods manufacturing. "In manufacturing, we can watch a product coming together and see if there's going to be interference between different parts," Gruver writes in the book. "In software, it's hard to see quality issues. The primary way that we start to see the product quality in software is with testing. Even then, it is difficult to find the source of the problem." Gruver recommends that software teams put together a repeatable deployment pipeline, which enables them to have a "stable quality signal" that informs the relevant parties as to whether the amount of variation in performance and quality between software builds is acceptable.
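
One way to picture a "stable quality signal" is as a check on how much a quality metric varies between builds. The sketch below assumes the metric is a per-build test pass rate and that an acceptable spread is chosen by the team; the function name, the metric, and the `max_spread` threshold are illustrative assumptions, not something Gruver prescribes.

```python
from statistics import mean, pstdev


def quality_signal(pass_rates, max_spread=0.02):
    """Summarize variation in test pass rate across recent builds.

    pass_rates: fraction of tests passing per build, e.g. 0.98.
    max_spread: hypothetical threshold for acceptable variation.
    """
    if len(pass_rates) < 2:
        raise ValueError("need at least two builds to measure variation")
    spread = pstdev(pass_rates)  # population standard deviation
    return {
        "mean": mean(pass_rates),
        "spread": spread,
        "stable": spread <= max_spread,
    }
```

A pipeline could run a check like this after every build and notify the relevant parties only when `stable` turns false, so that attention goes to real shifts in quality rather than to every individual test failure.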

The arrival of 'multicloud 2.0'

What’s helpful about the federated Kubernetes approach is that the architecture makes it easy to deal with multiple clusters running on multiple clouds. It does so with two major building blocks. The first is the capability of syncing resources across clusters. As you may expect, this is the core challenge for those deploying multicloud Kubernetes. Mechanisms within Kubernetes can automatically sync deployments across multiple clusters running on many public clouds. The second is intercluster discovery: the capability of automatically configuring DNS servers and load balancers with backends supporting all clusters running across many public clouds. The benefits of leveraging multicloud/federated Kubernetes include high availability, since you can replicate active/active clusters across multiple public clouds. Thus, if one has an outage, the other can pick up the processing without missing a beat. You also avoid that dreaded provider lock-in, because Kubernetes is the abstraction layer that removes you from the complexities and native details of each public cloud provider.

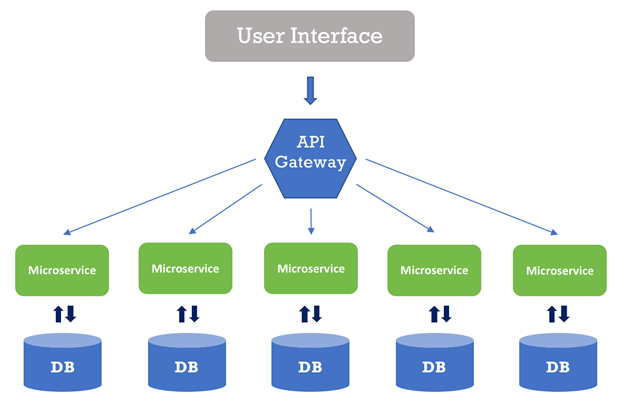

Microservices With Node.js: Scalable, Superior, and Secure Apps

Node.js is designed to make building highly scalable apps easier through non-blocking I/O and an event-driven model, which makes it suitable for data-centric and real-time apps. It is well suited to real-time collaboration tools, streaming and networking apps, and data-intensive applications. Microservices, on the other hand, make it easy for developers to create smaller services that are scalable, independent, loosely coupled, and well suited to complex, large enterprise applications. The nature and goal of the two concepts are identical at the core, which makes them a good fit for each other. Used together, they can power highly scalable applications and handle thousands of concurrent requests without slowing down the system. Microservices and Node.js have given rise to cultures like DevOps, where frequent and faster deliveries are valued more than the traditional long development cycle. Microservices are closely associated with container orchestration; they are managed by a container platform, offering a modern way to design, develop, and deploy software.

Supply Chain Attacks: Hackers Hit IT Providers

Symantec says the group has hit at least 11 organizations, mostly in Saudi Arabia, and appears to have gained admin-level access to at least two organizations as part of its efforts to parlay hacks of IT providers into the ability to hack their many customers. In those two networks, it notes, attackers had managed to infect several hundred PCs with malware called Backdoor.Syskit. "This is an unusually large number of computers to be compromised in a targeted attack," Symantec's security researchers say in a report. "It is possible that the attackers were forced to infect many machines before finding those that were of most interest to them." Backdoor.Syskit is a Trojan, written in Delphi and .NET, that's designed to phone home to a command-and-control server and give attackers remote access to the infected system so they can push and execute additional malware on the endpoint, according to Symantec. The security firm first rolled out an anti-virus signature for the malware on Aug. 21. Symantec says attackers have in some cases also used PowerShell backdoors, a technique known as living off the land, since it's tough to spot attackers' use of legitimate tools.

Quote for the day:

"A culture of discipline is not a principle of business; it is a principle of greatness." -- Jim Collins
