Optimization Techniques For Edge AI

Edge devices often have limited computational power, memory, and storage

compared to centralised servers. As a result, cloud-centric ML models need

to be retargeted so that they fit within the available resource budget. Further,

many edge devices run on batteries, making energy efficiency a critical

consideration. The hardware diversity of edge devices, ranging from

microcontrollers to powerful edge servers, each with different capabilities

and architectures, requires different model refinement and retargeting

strategies. ... Many use cases involve the distributed deployment of numerous

IoT or edge devices, such as CCTV cameras, working collaboratively towards

specific objectives. These applications often have built-in redundancy, making

them tolerant to failures, malfunctions, or less accurate inference results

from a subset of edge devices. Algorithms can be employed to recover from

missing, incorrect, or less accurate inputs by utilising the global

information available. This approach allows for the combination of high and

low accuracy models to optimise resource costs while maintaining the required

global accuracy through the available redundancy.
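
As a rough illustration of how redundancy can absorb weaker models, the sketch below fuses labels reported by several devices with a weighted vote. The function name `fuse_detections`, the per-device accuracy figures, and the log-odds weighting are all assumptions made for this example, not something the article specifies.

```python
from collections import defaultdict
from math import log


def fuse_detections(reports):
    """Fuse per-device labels with a weighted vote.

    reports: list of (label, accuracy) pairs, where accuracy is the
    assumed standalone accuracy of that device's model (0.5 < accuracy < 1).
    Devices that failed to report are simply absent from the list.
    """
    scores = defaultdict(float)
    for label, accuracy in reports:
        # Log-odds weight: a more accurate model gets a larger say.
        scores[label] += log(accuracy / (1.0 - accuracy))
    if not scores:
        return None  # no device reported anything
    return max(scores, key=scores.get)


# Hypothetical scene: one high-accuracy camera and three cheaper ones,
# one of which disagrees with the rest.
reports = [
    ("person", 0.95),
    ("person", 0.70),
    ("vehicle", 0.70),
    ("person", 0.70),
]
fused = fuse_detections(reports)
```

Under these assumptions a single wrong low-accuracy report is outvoted, which is the kind of tolerance the redundancy is meant to provide.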

The Cyber Resilience Act: A New Era for Mobile App Developers

Collaboration is key for mobile app developers to prepare for the CRA. They

should first conduct a thorough security audit of their apps, identifying and

addressing any vulnerabilities. Then, they’ll want to implement a structured

plan to integrate the needed security features, based on the CRA’s checklist.

It may also make sense to partner with cybersecurity experts

who can provide deeper insight and help streamline the process.

Developers cannot be expected to become top-notch security

experts overnight. Working with cybersecurity firms, legal advisors and

compliance experts can clarify the CRA, simplify the path to compliance, and

provide critical insights into best practices, regulatory jargon and tech

solutions, helping apps meet CRA standards without sacrificing innovation.

Keeping comprehensive records of compliance

efforts is also essential under the CRA. Developers should establish a clear

process for documenting security measures, vulnerabilities addressed, and any

breaches or other incidents that were identified and remediated.

Sometimes the cybersecurity tech industry is its own worst enemy

One of the fundamental infosec problems facing most organizations is that

strong cybersecurity depends on an army of disconnected tools and

technologies. That’s nothing new — we’ve been talking about this for years.

But it’s still omnipresent. ... To a large enterprise, “platform” is a code

word for vendor lock-in, something organizations tend to avoid. Okay, but

let’s say an organization was platform curious. It could also take many months

or years for a large organization to migrate from distributed tools to a

central platform. Given this, platform vendors need to convince a lot of

different people that the effort will be worth it — a tall task with skeptical

cybersecurity professionals. ... Fear not, for the security technology

industry has another arrow in its quiver — application programming interfaces

(APIs). Disparate technologies can interoperate by connecting via their APIs,

thus cybersecurity harmony reigns supreme, right? Wrong! In theory, API

connectivity sounds good, but it is extremely limited in practice. For it to

work well, vendors have to open their APIs to other vendors.

How to Apply Microservice Architecture to Embedded Systems

In short, the process of deploying and upgrading microservices for an embedded

system has a strong dependency on the physical state of the system’s hardware.

But there’s another significant constraint as well: data exchange. Data

exchange between embedded devices is best implemented using a binary data

format. Space and bandwidth capacity are limited in an embedded processor, so

text-based formats such as XML and JSON won’t work well. Rather, a binary

format such as protocol buffers or a custom binary format is better suited for

communication in a microservice-oriented architecture (MOA) scenario in which each microservice in the

architecture is hosted on an embedded processor. ... Many traditional

distributed applications can operate without each microservice in the

application being immediately aware of the overall state of the application.

However, knowing the system’s overall state is important for microservices

running within an embedded system. ... The important thing to understand is

that any embedded system will need a routing mechanism to coordinate traffic

and data exchange among the various devices that make up the system.
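
To make the point about binary formats concrete, here is a minimal sketch that encodes the same reading as JSON and as a fixed-layout binary record using Python's standard `struct` module. The message fields (`device_id`, `timestamp`, `temperature_c`) and the layout are hypothetical; a real system might use protocol buffers instead of a hand-rolled layout.

```python
import json
import struct

# Assumed layout, little-endian with no padding:
# uint16 device id, uint32 Unix timestamp, float32 temperature.
READING_FORMAT = "<HIf"


def encode_binary(device_id, timestamp, temperature_c):
    """Pack one reading into a fixed-size binary record."""
    return struct.pack(READING_FORMAT, device_id, timestamp, temperature_c)


def decode_binary(payload):
    """Unpack a record produced by encode_binary into a tuple."""
    return struct.unpack(READING_FORMAT, payload)


def encode_json(device_id, timestamp, temperature_c):
    """Encode the same reading as UTF-8 JSON text for comparison."""
    message = {
        "device_id": device_id,
        "timestamp": timestamp,
        "temperature_c": temperature_c,
    }
    return json.dumps(message).encode("utf-8")


binary_payload = encode_binary(7, 1700000000, 21.5)
json_payload = encode_json(7, 1700000000, 21.5)
binary_size = len(binary_payload)
json_size = len(json_payload)
```

The binary record has a fixed size given by `struct.calcsize(READING_FORMAT)`, while the JSON version also carries field names and punctuation in every message, which is the overhead the passage warns about.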

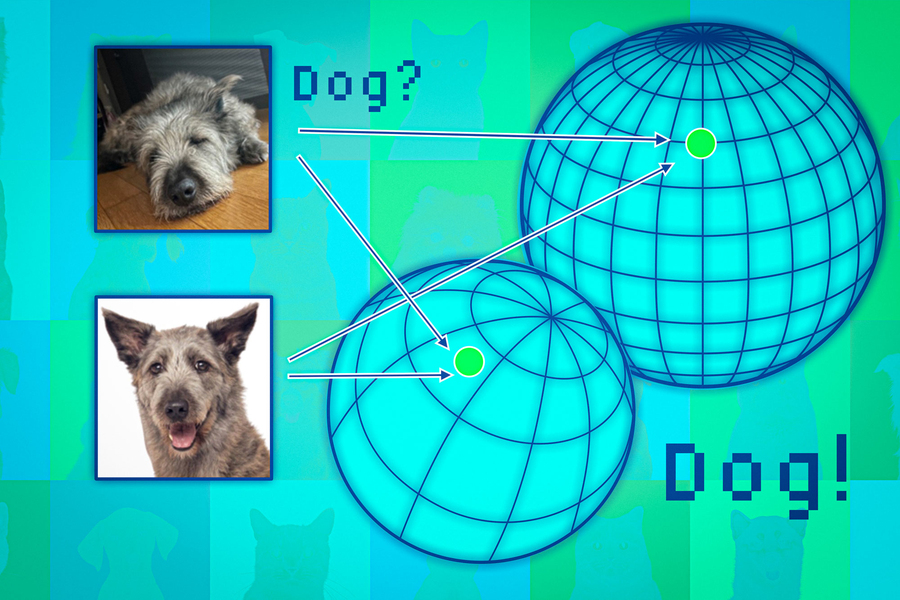

How to assess a general-purpose AI model’s reliability before it’s deployed

Foundation models, which serve as the backbone for powerful artificial

intelligence tools like ChatGPT and DALL-E, can offer up incorrect or

misleading information. In a safety-critical situation, such as a pedestrian

approaching a self-driving car, these mistakes could have serious

consequences. To help prevent such mistakes, researchers from MIT and the

MIT-IBM Watson AI Lab developed a technique to estimate the reliability of

foundation models before they are deployed to a specific task. They do this by

considering a set of foundation models that are slightly different from one

another. Then they use their algorithm to assess the consistency of the

representations each model learns about the same test data point. If the

representations are consistent, it means the model is reliable. When they

compared their technique to state-of-the-art baseline methods, it was better

at capturing the reliability of foundation models on a variety of downstream

classification tasks. Someone could use this technique to decide if a model

should be applied in a certain setting, without the need to test it on a

real-world dataset.
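
The idea of checking whether slightly different models represent a test point consistently can be sketched as follows. This is only an illustration under assumptions, not the researchers' algorithm: it supposes each model embeds the same set of reference points, and it scores agreement as the overlap between the test point's nearest neighbours in each model's embedding space. The function names and the choice of Jaccard overlap are hypothetical.

```python
def nearest_neighbors(embeddings, query_index, k):
    """Indices of the k points closest to the query in one model's space."""
    query = embeddings[query_index]
    distances = []
    for index, point in enumerate(embeddings):
        if index == query_index:
            continue
        squared = sum((a - b) ** 2 for a, b in zip(query, point))
        distances.append((squared, index))
    distances.sort()
    return {index for _, index in distances[:k]}


def consistency_score(models_embeddings, query_index, k=2):
    """Mean pairwise Jaccard overlap of neighbour sets (needs >= 2 models)."""
    neighbor_sets = [
        nearest_neighbors(embeddings, query_index, k)
        for embeddings in models_embeddings
    ]
    overlaps = []
    for i in range(len(neighbor_sets)):
        for j in range(i + 1, len(neighbor_sets)):
            a, b = neighbor_sets[i], neighbor_sets[j]
            overlaps.append(len(a & b) / len(a | b))
    return sum(overlaps) / len(overlaps)


# Toy 2-D embeddings of the same four points from three hypothetical models.
model_a = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0)]
model_b = [(1.0, 1.0), (1.2, 1.0), (1.0, 1.1), (9.0, 9.0)]
model_c = [(0.0, 0.0), (4.0, 4.0), (0.1, 0.0), (0.2, 0.0)]

agree = consistency_score([model_a, model_b], query_index=0)
disagree = consistency_score([model_a, model_c], query_index=0)
```

A score near 1 means the models place the test point among the same neighbours, which this sketch treats as a sign of a reliable representation; a low score flags disagreement. No labelled real-world data is needed to compute it.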

The Role of Technology in Modern Product Engineering

Product engineering has seen a significant transformation with the integration

of advanced technologies. Tools like Computer-Aided Design (CAD),

Computer-Aided Manufacturing (CAM), and Computer-Aided Engineering (CAE) have

paved the way for more efficient and precise engineering processes. The early

adoption of these technologies has enabled businesses to develop multi-million

dollar operations, demonstrating the profound impact of technological

advancements in the field. ... Deploying complex software solutions often

involves customization and integration challenges. Addressing these challenges

requires close client engagement, offering configurable options, and

implementing phased customization. ... The future of product engineering is

being shaped by technology integration, strategic geographic diversification,

and the adoption of advanced methodologies like DevSecOps. As the tech

landscape evolves with trends such as AI, Augmented Reality (AR), Virtual

Reality (VR), IoT, and sustainable technology, continuous innovation and

adaptation are essential.

A New Approach To Multicloud For The AI Era

The evolution from cost-focused to value-driven multicloud strategies marks a

significant shift. Investing in multicloud is not just about cost efficiency;

it's about creating an infrastructure that advances AI initiatives, spurs

innovation and secures a competitive advantage. Unlike single-cloud or hybrid

approaches, multicloud offers unparalleled adaptability and resource

diversity, which are essential in the AI-driven business environment. Here are

a few factors to consider. ... The challenge of multicloud is not simply to

utilize a variety of cloud services but to do so in a way that each

contributes its best features without compromising the overall efficiency and

security of the AI infrastructure. To achieve this, businesses must first

identify the unique strengths and offerings of each cloud provider. For

instance, one platform might offer superior data analytics tools, another

might excel in machine learning performance and a third might provide the most

robust security features. The task is to integrate these disparate elements

into a seamless whole.

How Can Organisations Stay Secure In The Face Of Increasingly Powerful AI Attacks

One of the first steps any organisation should take when it comes to staying

secure in the face of AI-generated attacks is to acknowledge a significant

top-down disparity between the volume and strength of cyberattacks, and the

ability of most organisations to handle them. Our latest report shows that

just 58% of companies are addressing every security alert. Without the right

defences in place, the growing power of AI as a cybersecurity threat could see

that number slip even lower. ... Fortunately, there is a solution: low-code

security automation. This technology gives security teams the power to

automate tedious and manual tasks, allowing them to focus on establishing an

advanced threat defence. ... There are other benefits too. These include the

ability to scale implementations based on the team’s existing experience,

with less reliance on coding skills. And unlike no-code tools that can be

useful for smaller organisations that are severely resource-constrained,

low-code platforms are more robust and customisable. This can result in easier

adaptation to the needs of the business.

Time for reality check on AI in software testing

Given that AI-augmented testing tools are derived from data used to train AI

models, IT leaders will also be more responsible for the security and privacy

of that data. Compliance with regulations like GDPR is essential, and robust

data governance practices should be implemented to mitigate the risk of data

breaches or unauthorized access. Algorithmic bias introduced by skewed or

unrepresentative training data must also be addressed to mitigate bias within

AI-augmented testing as much as possible. But maybe we’re getting ahead of

ourselves here. Because even as AI continues to evolve and autonomous

testing becomes more commonplace, we will still need human assistance and

validation. The interpretation of AI-generated results and the ability to make

informed decisions based on those results will remain a responsibility of

testers. AI will change software testing for the better. But don’t treat any

tool using AI as a straight-up upgrade. They all have different merits within

the software development life cycle.

Overlooked essentials: API security best practices

In my experience, there are six important indicators organizations should

focus on to detect and respond to API security threats effectively: shadow

APIs, APIs exposed to the internet, APIs handling sensitive data,

unauthenticated APIs, APIs with authorization flaws, and APIs with improper rate

limiting. Let me expand on this further.

Shadow APIs: Firstly, it’s important to identify and monitor shadow APIs. These are undocumented or unmanaged APIs that can pose significant security risks.

Internet-exposed APIs: Limit and closely track the number of APIs accessible publicly. These are more prone to external threats.

APIs handling sensitive data: APIs that process sensitive data and are also publicly accessible are among the most vulnerable. They should be prioritized for security measures.

Unauthenticated APIs: An API lacking proper authentication is an open invitation to threats. Always have a catalog of unauthenticated APIs and ensure they are not vulnerable to data leaks.

APIs with authorization flaws: Maintain an inventory of APIs with authorization vulnerabilities. These APIs are susceptible to unauthorized access and misuse. Implement a process to fix these vulnerabilities as a priority.
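
One way to track the six indicators is to flag each API in an inventory and review the most-flagged entries first. The sketch below assumes a hypothetical `ApiRecord` structure and simple boolean attributes; real inventories would be populated from gateway logs, scanners, or documentation, and the ranking rule (count of flags) is an illustrative choice rather than a recommendation from the article.

```python
from dataclasses import dataclass


@dataclass
class ApiRecord:
    """Hypothetical inventory entry for a single API."""
    name: str
    documented: bool
    internet_exposed: bool
    handles_sensitive_data: bool
    requires_auth: bool
    has_authz_flaw: bool
    rate_limited: bool


def risk_flags(api):
    """Return the names of the six indicators that apply to this API."""
    checks = [
        ("shadow", not api.documented),
        ("internet-exposed", api.internet_exposed),
        ("sensitive-data", api.handles_sensitive_data),
        ("unauthenticated", not api.requires_auth),
        ("authorization-flaw", api.has_authz_flaw),
        ("no-rate-limit", not api.rate_limited),
    ]
    return [name for name, hit in checks if hit]


def prioritize(inventory):
    """Order APIs so that those with the most flags come first."""
    return sorted(inventory, key=lambda api: len(risk_flags(api)), reverse=True)


inventory = [
    ApiRecord(name="billing", documented=True, internet_exposed=True,
              handles_sensitive_data=True, requires_auth=True,
              has_authz_flaw=False, rate_limited=True),
    ApiRecord(name="legacy-export", documented=False, internet_exposed=True,
              handles_sensitive_data=True, requires_auth=False,
              has_authz_flaw=False, rate_limited=False),
    ApiRecord(name="health", documented=True, internet_exposed=False,
              handles_sensitive_data=False, requires_auth=False,
              has_authz_flaw=False, rate_limited=True),
]
ranked_names = [api.name for api in prioritize(inventory)]
```

Here the undocumented, unauthenticated `legacy-export` API rises to the top of the review queue, ahead of the documented and authenticated `billing` API.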

Quote for the day:

"The successful man doesn't use

others. Other people use the successful man. For above all the success is of

service" -- Mark Kainee
