How Good Is ChatGPT at Coding, Really?

A study published in the June issue of IEEE Transactions on Software Engineering

evaluated the code produced by OpenAI’s ChatGPT in terms of functionality,

complexity and security. The results show that ChatGPT has an extremely broad

range of success when it comes to producing functional code—with a success rate

ranging from as poor as 0.66 percent to as good as 89

percent—depending on the difficulty of the task, the programming language, and a

number of other factors. While in some cases the AI generator could produce

better code than humans, the analysis also reveals some security concerns with

AI-generated code. ... Overall, ChatGPT was fairly good at solving problems in

the different coding languages—but especially when attempting to solve coding

problems that existed on LeetCode before 2021. For instance, it was able to

produce functional code for easy, medium, and hard problems with success rates

of about 89, 71, and 40 percent, respectively. “However, when it comes to the

algorithm problems after 2021, ChatGPT’s ability to generate functionally

correct code is affected. It sometimes fails to understand the meaning of

questions, even for easy level problems,” Tang notes.

What can devs do about code review anxiety?

A lot of folks reported that they would completely avoid picking up code

reviews, for example. So maybe someone's like, “Hey, I need a review,” and folks

are like, “I'm just going to pretend I didn't see that request. Maybe somebody

else will pick it up.” So just kind of completely avoiding it because this

anxiety refers to not just getting your work reviewed, but also reviewing other

people's work. And then folks might also procrastinate, they might just kind of

put things off, or someone was like, “I always wait until Friday so I don't have

to deal with it all weekend and I just push all of that until the very last

minute.” So definitely you see a lot of avoidance. ... there is this

misconception that only junior developers or folks just starting out experience

code review anxiety, with the assumption that it's only because you're

experiencing the anxiety when your work is being reviewed. But if you think

about it, anytime you are a reviewer, you're essentially asked to contribute

your expertise and so there is an element of, “If I mess up this review, I was

the gatekeeper of this code. And if I mess it up, that might be my fault.” So

there's a lot of pressure there.

Securing the Growing IoT Threat Landscape

What’s clear is that there should be greater collective responsibility between

stakeholders to improve IoT security outlooks. A multi-stakeholder response is

necessary, ranging from manufacturers prioritising security from the design phase,

to governments implementing legislation to mandate responsibility. Currently,

some of the leading IoT issues relate to deployment problems. Alex suggests that

IT teams also need to ensure default device passwords are updated and complex

enough to not be easily broken. Likewise, he highlights the need for monitoring

to detect malicious activity. “Software and hardware hygiene is essential,

especially as IoT devices are often built on open source software, without any

convenient, at scale, security hardening and update mechanisms,” he highlights.

“Identifying new or known vulnerabilities and having an optimised testing and

deployment loop is vital to plug gaps and prevent entry from bad actors.” A

secure-by-design approach should ensure more robust protections are in place,

alongside patching and regular maintenance. Alongside this, security features

should be integrated from the start of the development process.
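The advice on default device passwords can be illustrated with a minimal sketch. It assumes a small inventory of device names and their current passwords; the `KNOWN_DEFAULTS` list, the 12-character minimum, and the three-character-class rule are illustrative assumptions, not recommendations taken from the article.

```python
import string

# Hypothetical list of factory-default credentials (assumption for illustration).
# A real audit would use a maintained list for the devices actually deployed.
KNOWN_DEFAULTS = {"admin", "password", "12345", "root", "default"}


def password_issues(password: str, min_length: int = 12) -> list[str]:
    """Return the reasons a password looks weak (empty list if none found)."""
    issues = []
    if password.lower() in KNOWN_DEFAULTS:
        issues.append("matches a known default")
    if len(password) < min_length:
        issues.append(f"shorter than {min_length} characters")
    # Count how many character classes the password draws from.
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    if sum(classes) < 3:
        issues.append("uses fewer than three character classes")
    return issues


def audit(devices: dict[str, str]) -> dict[str, list[str]]:
    """Map each flagged device name to its list of password issues."""
    report = {}
    for name, password in devices.items():
        issues = password_issues(password)
        if issues:
            report[name] = issues
    return report
```

Calling `audit({"cam": "admin", "hub": "Tr1cky-Horse-Battery"})` would flag only `cam`. A check like this covers one deployment issue only; it does not replace the monitoring and patching the article also calls for.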

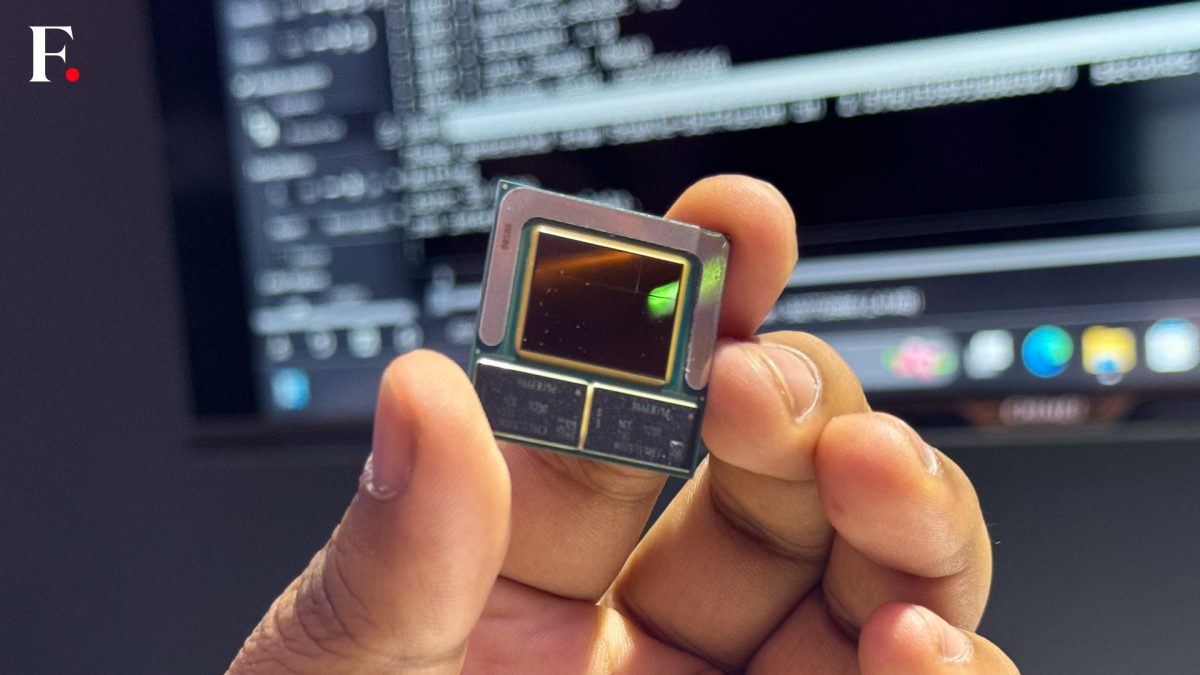

Beyond GPUs: Innatera and the quiet uprising in AI hardware

“Our neuromorphic solutions can perform computations with 500 times less

energy compared to conventional approaches,” Kumar stated. “And we’re seeing

pattern recognition speeds about 100 times faster than competitors.” Kumar

illustrated this point with a compelling real-world application. ... Kumar

envisions a future where neuromorphic chips increasingly handle AI workloads

at the edge, while larger foundational models remain in the cloud. “There’s a

natural complementarity,” he said. “Neuromorphics excel at fast, efficient

processing of real-world sensor data, while large language models are better

suited for reasoning and knowledge-intensive tasks.” “It’s not just about raw

computing power,” Kumar observed. “The brain achieves remarkable feats of

intelligence with a fraction of the energy our current AI systems require.

That’s the promise of neuromorphic computing – AI that’s not only more capable

but dramatically more efficient.” ... As AI continues to diffuse into every

facet of our lives, the need for more efficient hardware solutions will only

grow. Neuromorphic computing represents one of the most exciting frontiers in

chip design today, with the potential to enable a new generation of

intelligent devices that are both more capable and more sustainable.

Artificial intelligence in cybersecurity and privacy: A blessing or a curse?

AI helps cybersecurity and privacy professionals in many ways, enhancing their

ability to protect systems, data, and users from various threats. For

instance, it can analyse large volumes of data, spot anomalies, and identify

suspicious patterns for threat detection, which helps to find unknown or

sophisticated attacks. AI can also defend against cyber-attacks by analysing

and classifying network data, detecting malware, and predicting

vulnerabilities. ... The harmful effects of AI may be fewer than the positive

ones, but they can have a serious impact on organisations that suffer from

them. Clearly, as AI technology advances, so do the strategies for both

protecting and compromising digital systems. Security professionals should not

ignore the risks of AI, but rather prepare for them by using AI to enhance

their capabilities and reduce their vulnerabilities. ... “As attackers are

increasingly leveraging AI, integrating AI defences is crucial to stay ahead

in the cybersecurity game. Without it, we risk falling behind.” Consequently,

cybersecurity and privacy professionals, and their organisations, should

prepare for AI-driven cyber threats by adopting a multi-faceted approach to

enhance their defences while minimising risks and ensuring ethical use of

technology.
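As a rough illustration of what spotting anomalies in large volumes of data can mean, here is a minimal sketch that flags outliers with a plain z-score. This is a simple statistical stand-in, not the AI-based detection the article describes, and the function name, the threshold of 3.0, and the sample values in the usage note are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(values: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices of values more than `threshold` sample standard
    deviations away from the mean."""
    if len(values) < 2:
        return []  # stdev needs at least two data points
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

For example, with twenty readings of 100 followed by a single reading of 5000 (say, requests per minute from one host), only the last index is flagged. Production systems typically use learned models and far richer features; the sketch only shows the basic idea of measuring distance from normal behaviour.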

Intel is betting big on its upcoming Lunar Lake XPUs to change how we think of AI in our PCs

Designed with power efficiency in mind, the Lunar Lake architecture is ideal

for portable devices such as laptops and notebooks. These processors balance

performance and efficiency by integrating Performance Cores (P-cores) and

Efficiency Cores (E-cores). This combination allows the processors to handle

both demanding tasks and less intensive operations without draining the

battery. The Lunar Lake processors will feature a configuration of up to eight

cores, split equally between P-cores and E-cores. This design aims to improve

battery life by up to 60 per cent, positioning Lunar Lake as a strong

competitor to ARM-based CPUs in the laptop market. Intel anticipates that

these will be the most efficient x86 processors it has ever developed. ... A

major highlight of the Lunar Lake processors is the inclusion of the new Xe2

GPUs as integrated graphics. These GPUs are expected to deliver up to 80 per

cent better gaming performance compared to previous generations. With up to

eight second-generation Xe-cores, the Xe2 GPUs are designed to support

high-resolution gaming and multimedia tasks, including handling up to three 4K

displays at 60 frames per second with HDR.

Cyber Threats And The Growing Complexity Of Cybersecurity

Irvine envisions a future where the cybersecurity industry undergoes

significant disruption, with a greater emphasis on data-driven risk

management. “The cybersecurity industry is going to be disrupted severely. We

start to think about cybersecurity more as a risk and we start to put more

data and more dollars and cents around some of these analyses,” she predicted.

As the industry matures, Dr. Irvine anticipates a shift towards more

transparent and effective cybersecurity solutions, reducing the prevalence of

smoke and mirrors in the marketplace. She also claims that “AI and LLMs will

take over jobs. There will be automation, and we're going to need to upskill

individuals to solve some of these hard problems. It's just a challenge for

all of us to figure out how.” Kosmowski also remarked that the industry must

remain on top of what will continue to be a definitive risk to organizations:

“Over 86% of companies are hybrid and expect to remain hybrid for the

foreseeable future, plus we know IT proliferation is continuing to happen at a

pace that we have never seen before.”

The blueprint for data center success: Documentation and training

In any data center, knowledge is a priceless asset. Documenting

configurations, network topologies, hardware specifications, decommissioning

regulations, and other items mentioned above ensures that institutional

knowledge is not lost when individuals leave the organization. So, no need to

panic once the facility veteran retires, as you’ll already have all the

information they have! This information becomes crucial for staff, maintenance

personnel, and external consultants to understand every facet of the systems

quickly and accurately. It provides a more structured learning path,

facilitates a deeper understanding of the data center's infrastructure and

operations, and allows facilities to keep up with critical technological

advances. By creating a well-documented environment, facilities can rest

assured knowing that authorized personnel are adequately trained, and vital

knowledge is not lost in the shuffle, contributing to overall operational

efficiency and effectiveness, and further mitigating future risks or

compliance violations.

Why Knowledge Is Power in the Clash of Big Tech’s AI Titans

The advanced AI models currently under development across big tech -- models

designed to drive the next class of intelligent applications -- must learn

from more extensive datasets than the internet can provide. In response, some

AI developers have turned to experimenting with AI-generated synthetic data, a

risky proposition that could potentially put an entire engine at risk if even

a small semblance of the learning model is inaccurate. Others have pivoted to

content licensing deals for access to useful, albeit limited, proprietary

training data. ... The real differentiating edge lies in who can develop a

systemic means of achieving GenAI data validation, integrity, and reliability

with a certificated or “trusted” designation, in addition to acquiring expert

knowledge from trusted external data and content sources. These twin

pillars of AI trust, coupled with the raw computing and computational power of

new and emerging data centers, will likely be the markers of which big tech

brands gain the immediate upper hand.

Should Sustainability be a Network Issue?

The beauty of replacing existing network hardware components with

energy-efficient, eco-friendly, small form factor infrastructure elements

wherever possible is that no adjustments have to be made to network

configurations and topology. In most cases, you're simply swapping out

routers, switches, etc. The need for these equipment upgrades naturally occurs

with the move to Wi-Fi 6, which requires new network switches, routers, etc.,

in order to run at full capacity. Hardware replacements can be performed on a

phased plan that commits a portion of the annual budget each year for network

hardware upgrades ... There is a need in some cases to have discrete computer

networks that are dedicated to specific business functions, but there are

other cases where networks can be consolidated so that resources such as

storage and processing can be shared. ... Network managers aren’t professional

sustainability experts—but local utility companies are. In some areas of the

U.S., utility companies offer free onsite energy audits that can help identify

areas of potential energy and waste reduction.

Quote for the day:

"It takes courage and maturity to know

the difference between a hoping and a wishing." --

Rashida Jourdain
