AI success: Real or hallucination?

The biggest problem may not be compliance muster, but financial muster. If AI is consuming hundreds of thousands of GPUs per year, requiring that those running AI data centers canvass frantically in search of the power needed to drive these GPUs and to cool them, somebody is paying to build AI, and paying a lot. Users report that the great majority of the AI tools they use are free. Let me try to grasp this: AI providers are spending big to…give stuff away? That’s an interesting business model, one I personally wish was more broadly accepted. But let’s be realistic. Vendors may be willing to pay today for AI candy, but at some point AI has to earn its place in the wallets of both supplier and user CFOs, not just in their hearts. We have AI projects that have done that, but most CIOs and CFOs aren’t hearing about them, and that’s making it harder to develop the applications that would truly make the AI business case. So the reality of AI is buried in hype? It sure sounds like AI is more hallucination than reality, but there’s a qualifier. Millions of workers are using AI, and while what they’re currently doing with it isn’t making a real business case, that’s a lot of activity.

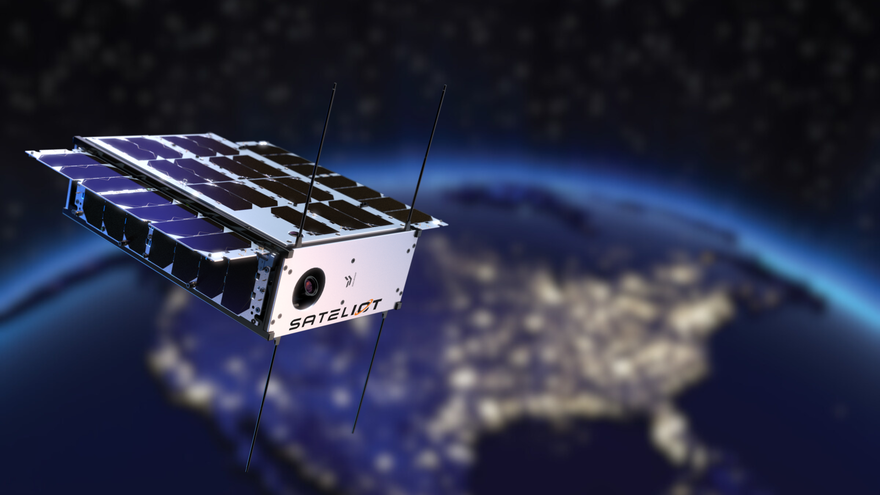

Space: The Final Frontier for Cyberattacks


"Since failing to imagine a full range of threats can be disastrous for any security planning, we need more than the usual scenarios that are typically considered in space-cybersecurity discussions," Lin says. "Our ICARUS matrix fills that 'imagineering' gap." Lin and the other authors of the report (Keith Abney, Bruce DeBruhl, Kira Abercromby, Henry Danielson, and Ryan Jenkins) identified several factors as increasing the potential for outer space-related cyberattacks over the next several years and decades. Among them are the rapid congestion of outer space in recent years as the result of nations and private companies racing to deploy space technologies; the remoteness of space; and technological complexity. ... The remoteness and vastness of space also make it more challenging for stakeholders, both government and private, to address vulnerabilities in space technologies. Numerous objects were deployed into space long before cybersecurity became a mainstream concern, and they could become targets for attacks.

The perils of overengineering generative AI systems

Overengineering any system, whether AI or cloud, happens through easy access to resources and no limitations on using those resources. It is easy to find and allocate cloud services, so it’s tempting for an AI designer or engineer to add things that may be viewed as “nice to have” more so than “need to have.” Making a bunch of these decisions leads to many more databases, middleware layers, security systems, and governance systems than needed. ... “We need to account for future growth,” but this can often be handled by adjusting the architecture as it evolves. It should never mean tossing money at the problems from the start. This tendency to include too many services also amplifies technical debt. Maintaining and upgrading complex systems becomes increasingly difficult and costly. If data is fragmented and siloed across various cloud services, it can further exacerbate these issues, making data integration and optimization a daunting task. Enterprises often find themselves trapped in a cycle where their generative AI solutions are not just overengineered but also need to be more optimized, leading to diminished returns on investment.

Data fabric is a design concept for integrating and managing data. Through flexible, reusable, augmented, and sometimes automated data integration, or copying of data into a desired target database, it facilitates data access across the business and data analysts. ... Physically moving data can be tedious, involving planning, modeling, and developing ETL/ELT pipelines, along with associated costs. However, a data fabric abstracts these steps, providing capabilities to copy data to a target database. Analysts can then replicate the data with minimal planning, reduced data silos, and enhanced data accessibility and discovery. Data fabric is an abstracted semantic-based data capability that provides the flexibility to add new data sources, applications, and data services without disrupting existing infrastructure. ... As the data volume increases, the fabric adapts without compromising efficiency. Data fabric empowers organizations to leverage multiple cloud providers. It facilitates flexibility, avoids vendor lock-in, and accommodates future expansion across different cloud environments.

DFIR and its role in modern cybersecurity

In incident response, digital forensics provides detailed insights to highlight the cause and sequence of events in breaches. This data is vital for successful containment, eradication of the danger, and recovery. Conducting post-incident forensic reports can similarly enhance security by pinpointing system vulnerabilities and suggesting actions to prevent future breaches. Incorporating digital forensics into incident response essentially allows you to examine incidents thoroughly, leading to faster recovery, enhanced security measures, and increased resilience to cyber threats. This partnership improves your ability to identify, evaluate, and address cyber threats thoroughly. ... Emerging trends and technologies are shaping the future of DFIR in cybersecurity. Artificial intelligence and machine learning are increasing the speed and effectiveness of threat detection and response. Cloud computing is revolutionising processes with its scalable options for storing and analysing data. Additionally, improved coordination with other cybersecurity sectors, such as threat intelligence and network security, leads to a more cohesive defence plan.

Ensuring Application Security from Design to Operation with DevSecOps

DevSecOps is as much about cultural transformation as it is about tools and processes. Before diving into technical integrations, ensure your team’s mindset aligns with DevSecOps principles. Underestimating the cultural aspects, such as resistance to change, fear of increased workload or misunderstanding the value of security, can impede adoption. You can address these challenges by highlighting the benefits of DevSecOps, celebrating successes and promoting a culture of learning and continuous improvement. Developers should be familiar with the nuances of the security tools in use and how to interpret their outputs. ... DevSecOps is a journey, not a destination. Regularly review the effectiveness of your tool integrations and workflows. Gather feedback from all stakeholders and define metrics to measure the effectiveness of your DevSecOps practices, such as the number of vulnerabilities identified and remediated, the time taken to fix critical issues and the frequency of zero-day attacks and other security incidents.
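
As a rough illustration of the metrics mentioned in the excerpt, here is a minimal Python sketch that counts vulnerabilities identified and remediated and averages the time taken to fix critical ones. The `Finding` record, its field names, the `summarize` helper, and the sample dates are all hypothetical and made up for this example; a real pipeline would pull equivalent data from whatever scanner or issue tracker is in use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Finding:
    """One vulnerability record (hypothetical schema, for illustration only)."""
    severity: str                     # e.g. "critical", "high", "low"
    opened: datetime                  # when the issue was identified
    fixed: Optional[datetime] = None  # None while the issue is still open


def summarize(findings: list[Finding]) -> dict:
    """Count identified/remediated findings and average the critical fix time."""
    remediated = [f for f in findings if f.fixed is not None]
    critical_fix_times = [
        f.fixed - f.opened for f in remediated if f.severity == "critical"
    ]
    # Average only when at least one critical finding has been fixed.
    mean_critical = (
        sum(critical_fix_times, timedelta()) / len(critical_fix_times)
        if critical_fix_times
        else None
    )
    return {
        "identified": len(findings),
        "remediated": len(remediated),
        "mean_time_to_fix_critical": mean_critical,
    }


# Made-up sample data: two fixed critical findings and one open low finding.
sample = [
    Finding("critical", datetime(2024, 7, 1), datetime(2024, 7, 3)),
    Finding("critical", datetime(2024, 7, 2), datetime(2024, 7, 6)),
    Finding("low", datetime(2024, 7, 2)),
]
report = summarize(sample)
print(report)
```

Tracking these numbers per release or per sprint, rather than as a single total, is one way to see whether the practices are actually improving over time.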

Essential skills for leaders in industry 4.0

Agility enables swift adaptation to new technologies and market shifts, keeping your organisation competitive and innovative. Digital leaders must capitalise on emerging opportunities and navigate disruptions such as technological advancements, shifting consumer preferences, and increased global competition. ... Effective communication is vital for digital leadership, especially when implementing organisational change. Inspiring positive, incremental change requires empowering your team to work towards common business goals and objectives. Key communication skills include clarity, precision, active listening, and transparency. ... Empathy is essential for guiding your team through digital transformation. True adoption demands conviction from top leaders and a determined spirit throughout the organisation. Success lies in integrating these concepts into the company’s operations and culture. Acknowledge that change can be overwhelming, and by addressing employees' stressors proactively, you can secure their support for strategic initiatives. ... Courage is indispensable for digital leaders, requiring the embrace of risk to ensure success.

Platform as a Runtime - The Next Step in Platform Engineering

It is almost impossible to ensure that all developers 100% comply with all the system's non-functional requirements. Even a simple thing like input validations may vary between developers. For instance, some will not allow Nulls in a string field, while others allow Nulls, causing inconsistency in what is implemented across the entire system. Usually, the first step to aligning all developers on best practices and non-functional requirements is documentation, build and lint rules, and education. However, in a complex world, we can’t build perfect systems. When developers need to implement new functionality, they are faced with trade-offs they need to make. The need for standardization comes to mitigate scaling challenges. Microservices is another solution to try and handle scaling issues, but as the number of microservices grows, you will start to face the complexity of a Large-Scale Microservices environment. In distributed systems, requests may fail due to network issues. Performance is degraded since requests flow across multiple services via network communication as opposed to in-process method calls in a Monolith.
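
To make the Null-handling inconsistency concrete, the following Python sketch shows one way a platform team might centralise string validation so every service applies the same rule. The helper name `validate_string_field`, the `ValidationError` class, and the policy itself (reject Nulls, trim whitespace, cap length at 255) are assumptions chosen for illustration, not something the excerpted article prescribes.

```python
from typing import Optional


class ValidationError(ValueError):
    """Raised when a field breaks the shared (hypothetical) platform rule."""


def validate_string_field(
    name: str, value: Optional[str], *, max_length: int = 255
) -> str:
    """Apply one agreed policy to every string field.

    Assumed policy: Null is never allowed, surrounding whitespace is
    trimmed, and the trimmed value must be non-empty and within max_length.
    """
    if value is None:
        raise ValidationError(f"{name} must not be null")
    if not isinstance(value, str):
        raise ValidationError(f"{name} must be a string")
    cleaned = value.strip()
    if not cleaned:
        raise ValidationError(f"{name} must not be empty")
    if len(cleaned) > max_length:
        raise ValidationError(f"{name} exceeds {max_length} characters")
    return cleaned


# Every service calls the same helper instead of writing its own check.
username = validate_string_field("username", "  alice ")
print(username)

try:
    validate_string_field("username", None)
except ValidationError as err:
    print(f"rejected: {err}")
```

Shipping a helper like this as part of the platform, rather than documenting the rule and hoping each team re-implements it identically, removes the per-developer decision about whether Nulls are acceptable.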

The distant world of satellite-connected IoT

The vision is that IoT devices, and mobile phones, will be designed so that as they cross out of terrestrial connectivity, they can automatically switch to satellite. Devices will no longer be either/or; they will be both, offering a much more reliable network, because when a device loses contact with the terrestrial network a permanently available alternative can be used. “Satellite is wonderful from a coverage perspective,” says Nuttall. “Anytime you see the sky, you have satellite connectivity. The challenge lies in it being a separate device, and that ecosystem has not really proliferated or grown at scale.” Getting to that point, MacLeod predicts that we will first see people using 3GPP-type standards over satellite links, but they won’t immediately be interoperating. “Things can change, but in order to make the space segment super efficient, it currently uses a data protocol that's referred to as NIDD - non-IP-based data delivery - which is optimized for trickier links,” explains MacLeod. “But NB-IoT doesn’t use it, so the current style of addressing data communication in space isn’t mirrored by that on the ground network. Of course, that will change, but none of us knows exactly how long it will take.”

Navigating the cloud: How SMBs can mitigate risks and maximise benefits

SMBs often make several common mistakes when it comes to cloud security. By recognizing and addressing these blind spots, organizations can significantly enhance their cybersecurity. One major mistake is placing too much trust in the cloud provider. Many IT leaders assume that investing in cloud services means fully outsourcing security to a third party. However, security responsibilities are shared between the cloud service provider (CSP) and the customer. The specific responsibilities depend on the type of cloud service and the provider. Another common error is failing to back up data. Organisations should not assume that their cloud provider will automatically handle backups. It's essential to prepare for worst-case scenarios, such as system failures or cyberattacks, as lost data can lead to significant downtime and to losses in productivity and reputation. Neglecting regular patching also exposes cloud systems to vulnerabilities. Unpatched systems can be exploited, leading to malware infections, data breaches, and other security issues. Regular patch management is crucial for maintaining cloud security, just as it is for on-premises systems.

Quote for the day:

"What seems to us as bitter trials are often blessings in disguise." -- Oscar Wilde
