The shift towards a combined framework for API threat detection and the protection of vital business applications signals a move to proactive and responsive security. ... Companies cannot afford to underestimate the threat bots pose to their API-driven applications and infrastructure. Traditional silos between fraud and security teams create dangerous blind spots. Fraud detection often lacks visibility into API-level attacks, while API security tools may overlook fraudulent behavior disguised as legitimate traffic. This disconnect leaves businesses vulnerable. By integrating fraud detection, API security, and advanced bot protection, organizations create a more adaptive defense. This proactive approach offers crucial advantages: swift threat response, the ability to anticipate and mitigate vulnerabilities exploited by bots and other malicious techniques, and an in-depth understanding of application abuse patterns. These advantages lead to more effective threat identification and neutralization, combating both low-and-slow attacks and sudden volumetric attacks from bots.

Fortifying the Software Supply Chain

Firstly, it enhances security and compliance by consolidating code repositories in a single, cloud-based platform. This allows organizations to gain better control over access permissions and enforce consistent security policies across the entire codebase. Centralized environments can be configured to comply with industry standards and regulations automatically, reducing the risk of breaches that could disrupt the supply chain. As Jen Easterly, Director of the U.S. Cybersecurity and Infrastructure Security Agency (CISA), emphasizes, centralizing source code in the cloud aligns with the goal of working with the open source community to ensure secure software while reaping its benefits. Secondly, cloud-based centralization fosters improved collaboration and efficiency among development teams. With a centralized platform, teams can collaborate in real-time, regardless of their geographical location, facilitating faster decision-making and problem-solving. ... Thirdly, centralized cloud environments offer enhanced reliability and disaster recovery capabilities. Cloud providers typically replicate data across multiple locations, ensuring that a failure in one area does not result in data loss.

GenAI can enhance security awareness training

Social engineering is fundamentally about psychology and putting the victim in a situation where they feel under pressure to make a decision. Therefore, any form of communication that imparts a sense of urgency and makes an unusual request needs to be flagged, not immediately responded to, and subjected to a rigorous verification process. Much like the concept of zero trust, the approach should be “never trust, always verify”, and the education process should outline the steps that should be taken following an unusual request. For instance, in relation to CFO fraud, the accounts department should have a set limit for payments, and exceeding it should trigger a verification process. This might see staff use a token-based system or authenticator to verify that the request is legitimate. Secondly, users need to be aware of oversharing. Is there a company policy to prevent information being divulged over the phone? Are there restrictions on the posting of company photos that an attacker could exploit? Could social media posts be used to guess passwords or an individual’s security questions? Such steps can reduce the likelihood of digital details being mined.

AI and Human Capital Management: Bridging the Gap Between HR and Technology

Even as technology advances, the human factor remains central for human resource professionals. They grapple with the challenge of seamlessly blending advanced technology with the distinctive human elements that define their workforce. In this landscape, HR assumes a new role as a vital link between the adoption of technology and the preservation of human relations. The shift to this new way of working requires an appropriate use of technology to support and enhance existing HR capabilities, thereby increasing their flexibility and effectiveness. ... Ethical considerations are among the most significant challenges in applying AI in HR, and they call for mechanisms that ensure equal opportunity, fair decision-making, and transparency in how AI is used. Additionally, integrating AI into HR operations requires adapting change management processes to provide reassurance, organize skills preparation and training programs, and cultivate acceptance of AI in the organization.

Differential Privacy and Federated Learning for Medical Data

Federated learning is a key strategy for building that trust on technology, not only on contracts and faith in the ethics of particular employees and partners of the organizations forming consortia. First of all, the data remains at the source, never leaves the hospital, and is not centralized in a single, potentially vulnerable location. A federated approach means there are no external copies of the data that may be hard to remove after the research is completed. The technology blocks access to raw data through multiple techniques that follow the defense-in-depth principle. Each of them reduces the risk of data exposure and patient re-identification by tens or thousands of times. All of this is meant to make it economically unviable to discover or reconstruct raw-level data. Data is minimized first to expose only the necessary properties to machine learning agents running locally, PII is stripped, and we also use anonymization techniques. Then local nodes protect local data against the so-called too-curious data scientist threat by allowing only the code and operations accepted by local data owners to run against their data.
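A toy sketch can make the layering above concrete: each node runs only an approved operation on its own records, bounds each record's influence, adds noise, and returns a summary, while the server averages summaries without seeing raw rows. Everything named here (`ALLOWED_OPS`, `local_update`, `aggregate`, the clipping norm, the noise scale) is an assumption for illustration, not the authors' system, and the noise is not calibrated to any formal privacy budget.

```python
import math
import random

# Hypothetical allowlist of operations approved by the local data owner.
ALLOWED_OPS = {"mean_update"}


def clip(vec, max_norm):
    """Scale vec down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm <= max_norm:
        return list(vec)
    return [x * max_norm / norm for x in vec]


def local_update(op, records, max_norm=1.0, noise_std=0.1, rng=random):
    """Run an approved operation locally and return only a noisy summary."""
    if op not in ALLOWED_OPS:
        raise PermissionError(f"operation {op!r} not approved by data owner")
    clipped = [clip(r, max_norm) for r in records]  # bound each record's influence
    dim = len(clipped[0])
    mean = [sum(r[i] for r in clipped) / len(clipped) for i in range(dim)]
    return [x + rng.gauss(0.0, noise_std) for x in mean]  # Gaussian noise


def aggregate(updates):
    """Server side: average node summaries; raw records never arrive here."""
    dim = len(updates[0])
    return [sum(u[i] for u in updates) / len(updates) for i in range(dim)]
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible. A production system would derive `noise_std` from a chosen privacy budget and the clipping norm, and would typically exchange model gradients or weights, not feature means.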

CIO risk-taking 101: Playing it safe isn’t safe

As CIO, you’re in the risk business. Or rather, every part of your responsibilities entails risk, whether you’re paying attention to it or not. And in spite of the spate of books that extol risk-taking as the only smart path, it’s worth remembering that their authors don’t face what might be the biggest risk CIOs have to deal with every day: executive teams adept at preaching risk-taking without actually supporting it. ... Put the staff members and sponsor who annoy you the most in charge of these initiatives. Worst case they succeed, and people you don’t like now owe you a favor or two. Best case they fail and will be held accountable. You can’t lose. ... Those who encourage risk-taking often ignore its polysemy. One meaning: initiatives that, as outlined above, have potential benefit but a high probability of failure. The other: structural risks, situations that might become real and would cause serious damage to the IT organization and its business collaborators if they do. You can choose to not charter a risky initiative, ignoring and eschewing its potential benefits. When it comes to structural risks you can ignore them as well, but you can’t make them go away by doing so and will be blamed if they’re “realized.”

Digital Personal Data Protection Act, 2023 - Impact on Banking Sector Outsourced Services

Regulated Entities must design their own privacy compliance program for application-based services and not rely solely on a package provided by the SP. While the SP may add value and save costs, any solution it provides will likely be optimized for its own efficiency. Customer data management practices can differentiate a business from competitors, enhance customer trust, and provide a competitive advantage. Also, financial penalties under the DPDP are high, extending up to INR 250 crores (on the Regulated Entity as the ‘data fiduciary’ and not on the processor), apart from the reputational damage a breach or prosecution can cause, making it critical to have thorough oversight over the SP vis-à-vis privacy protection. ... For consumer-facing services, in addition to security, SPs must technically ensure that the client can comply with its DPDP obligations such as data access requests, erasure, correction and updating of personal data, and consent withdrawal. Also, the platform should be capable of integrating with consent managers.
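As a rough illustration of what "technically ensure" could mean, the sketch below models the four request types named above (access, correction and updating, erasure, consent withdrawal) against an in-memory store. The class and method names are hypothetical, and the sketch does not attempt to capture the Act's full requirements or any consent-manager integration.

```python
from dataclasses import dataclass, field


@dataclass
class PrincipalRecord:
    data: dict = field(default_factory=dict)
    consent: bool = True


class PersonalDataStore:
    """In-memory stand-in for an SP platform's personal-data layer (hypothetical)."""

    def __init__(self):
        self._records = {}

    def upsert(self, principal_id, **fields):
        """Create, correct, or update personal data for one data principal."""
        rec = self._records.setdefault(principal_id, PrincipalRecord())
        rec.data.update(fields)

    def access(self, principal_id):
        """Return a copy of everything held about the data principal."""
        rec = self._records.get(principal_id)
        return dict(rec.data) if rec else {}

    def erase(self, principal_id):
        """Delete the record; report whether anything was removed."""
        return self._records.pop(principal_id, None) is not None

    def withdraw_consent(self, principal_id):
        """Record consent withdrawal; report whether the principal was known."""
        rec = self._records.get(principal_id)
        if rec is None:
            return False
        rec.consent = False
        return True

    def may_process(self, principal_id):
        """Processing is allowed only while a record exists and consent stands."""
        rec = self._records.get(principal_id)
        return bool(rec and rec.consent)
```

Keeping these operations behind one interface gives the Regulated Entity a single place to audit, which fits the article's point that oversight should not be left entirely to the SP's packaged solution.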

Why Is a Data-Driven Culture Important?

Trust and commitment are two important features in a data-driven culture. Trust in the data is exceptionally important, but trust in other staff, for purposes of collaboration and teamwork, is also quite important. Dealing with internal conflicts and misinformation disrupts the smooth flow of doing business. There are a number of issues to consider when creating a data-driven culture. ... In a data-driven culture, everyone should be involved, and this should be communicated to staff and management (exceptions are allowed, for example, the janitor). Everyone using data in doing their job should understand they are also creating data that can be used later for research. When people understand their roles, they can work together as an efficient team to find and eliminate sources of bad data. The process of locating and repairing sources of poor-quality data acts as an educational process for staff and empowers them to be proactive, taking responsibility when they notice a data flow problem. Shifting to a data-driven culture may result in having to hire a few specialists – individuals who are skilled in Data Management, data visualization, and data analysis.

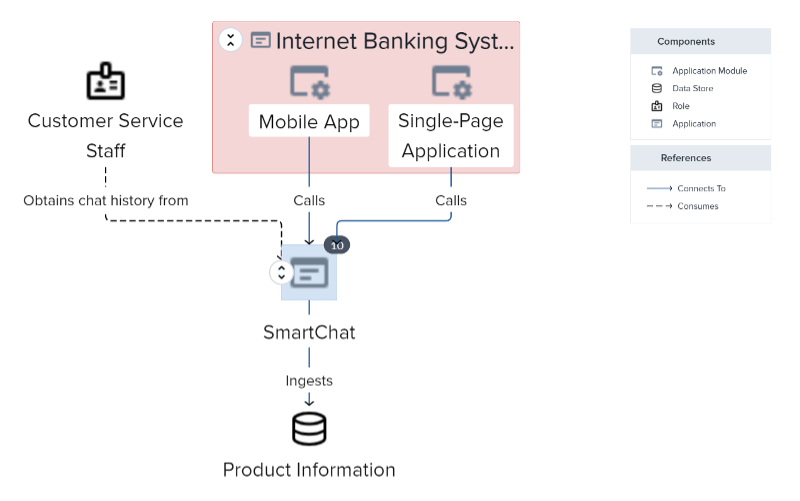

Harnessing the Collective Ignorance of Solution Architects

One key benefit of adopting an architecture platform, and having different development teams contribute and maintain their designs in a shared model, is that higher levels of abstraction can gain a wide-angled view of the resulting picture. At the context level, the view becomes enterprise-wide, with an abstracted map of the entire application landscape, and how it is joined up, both from an IT perspective, and to its consuming organizational units, revealing its contribution to business products and services, value streams and business capabilities. ... by combining the abstracting power of a modern EA platform with the consistency and integrity of the C4 model approach, I have removed from their workload the Sisyphean task of hand-crafting an enterprise IT model and replaced it with the “collective ignorance” of an army of supporters who will construct and maintain that enterprise view out of their own interest. Guidance and encouragement are all that is required. The model will remain consistent with the aggregated truth of all solution designs because they are one and the same model, viewed from different angles with different degrees of “selective ignorance”.

5 Hard Truths About the State of Cloud Security 2024

"There's a fundamental misunderstanding of the cloud that somehow there's more security natively built into it, that you're more secure by going to the cloud just by the act of going to the cloud," he says. The problem is that while hyperscale cloud providers may be very good at protecting infrastructure, the control and responsibility they have over their customers' security posture is very limited. "A lot of people think they're outsourcing security to the cloud provider. They think they're transferring the risk," he says. "In cybersecurity, you can never transfer the risk. If you are the custodian of that data, you are always the custodian of the data, no matter who's holding it for you." ... "So much of the zero trust narrative is about identity, identity, identity," Kindervag says. "Identity is important, but we consume identity in policy in zero trust. It's not the end-all, be-all. It doesn't solve all the problems." What Kindervag means is that with a zero trust model, credentials don't automatically give users access to anything under the sun within a given cloud or network. The policy limits exactly what access is given to specific assets, and when.
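The idea that identity is "consumed in policy" can be sketched as a default-deny check in which identity is only one of several fields a rule must match. The `Rule` fields, the `is_allowed` function, and the sample asset names are assumptions made for this illustration and are not taken from any zero trust product.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Rule:
    identity: str  # who is asking
    asset: str     # which specific asset
    action: str    # what they may do, e.g. "read"
    start: time    # when the access window opens
    end: time      # when the access window closes


def is_allowed(rules, identity, asset, action, at):
    """Default deny: allow only if an explicit rule matches who, what, and when."""
    return any(
        r.identity == identity
        and r.asset == asset
        and r.action == action
        and r.start <= at <= r.end
        for r in rules
    )


# Example policy: one identity, one asset, one action, office hours only.
rules = [Rule("alice", "billing-db", "read", time(9, 0), time(17, 0))]
```

With this policy, valid credentials for `alice` grant a read on `billing-db` during the window and nothing else: a write, a different asset, or a request outside the window is refused because no rule matches.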

Quote for the day:

"Great achievers are driven, not so much by the pursuit of success, but by the fear of failure." -- Larry Ellison
