Get the most value from your data with data lakehouse architecture

A data lakehouse is essentially the next breed of cloud data lake and

warehousing architecture that combines the best of both worlds. It is an

architectural approach for managing all data formats (structured,

semi-structured, or unstructured) as well as supporting multiple data workloads

(data warehouse, BI, AI/ML, and streaming). Data lakehouses are underpinned by a

new open system architecture that allows data teams to implement data structures

through smart data management features similar to data warehouses over a

low-cost storage platform that is similar to the ones used in data lakes. ... A

data lakehouse architecture allows data teams to glean insights faster as they

have the opportunity to harness data without accessing multiple systems. A data

lakehouse architecture can also help companies ensure that data teams have the

most accurate and updated data at their disposal for mission-critical machine

learning, enterprise analytics initiatives, and reporting purposes. There are

several reasons to look at modern data lakehouse architecture in order to drive

sustainable data management practices.

A CISO’s guide to discussing cybersecurity with the board

When you get a chance to speak with executives, you typically don’t have much

time to discuss details. And frankly, that’s not what executives are looking

for, anyway. It’s important to phrase cybersecurity conversations in a way that

resonates with the leaders. Messaging starts with understanding the C-suite’s and

board’s priorities. Usually, they are interested in big picture initiatives, so

explain why cyber investment is critical to the success of these initiatives.

For example, if the CEO wants to increase total revenue by 5% in the next year,

explain how they can prevent major unnecessary losses from a cyber attack with

an investment in cybersecurity. Once you know the executive team and board’s

goals, look to specific members, and identify a potential ally. Has one team

recently had a workplace security breach? Does one leader have a difficult time

getting his or her team to understand the makings of a phishing scheme? These

interests and experiences can help guide the explanation of the security

solution. If you’re a CISO, you’re well-versed in cybersecurity, but remember

that not everyone is as involved in the subject as you are, and business leaders

probably will not understand technical jargon.

Best of 2021 – Containers vs. Bare Metal, VMs and Serverless for DevOps

A bare metal machine is a dedicated server using dedicated hardware. Data

centers have many bare metal servers that are racked and stacked in clusters,

all interconnected through switches and routers. Human and automated users of a

data center access the machines through access servers, high-security firewalls

and load balancers. The virtual machine introduced an operating system

simulation layer between the bare metal server’s operating system and the

application, so one bare metal server can support more than one application

stack with a variety of operating systems. This provides a layer of abstraction

that allows the servers in a data center to be software-configured and

repurposed on demand. In this way, a virtual machine can be scaled horizontally,

by configuring multiple parallel machines, or vertically, by configuring

machines to allocate more power to a virtual machine. One of the problems with

virtual machines is that the virtual operating system simulation layer is quite

“thick,” and loading and configuring each VM typically takes

some time. In a DevOps environment, changes occur frequently.

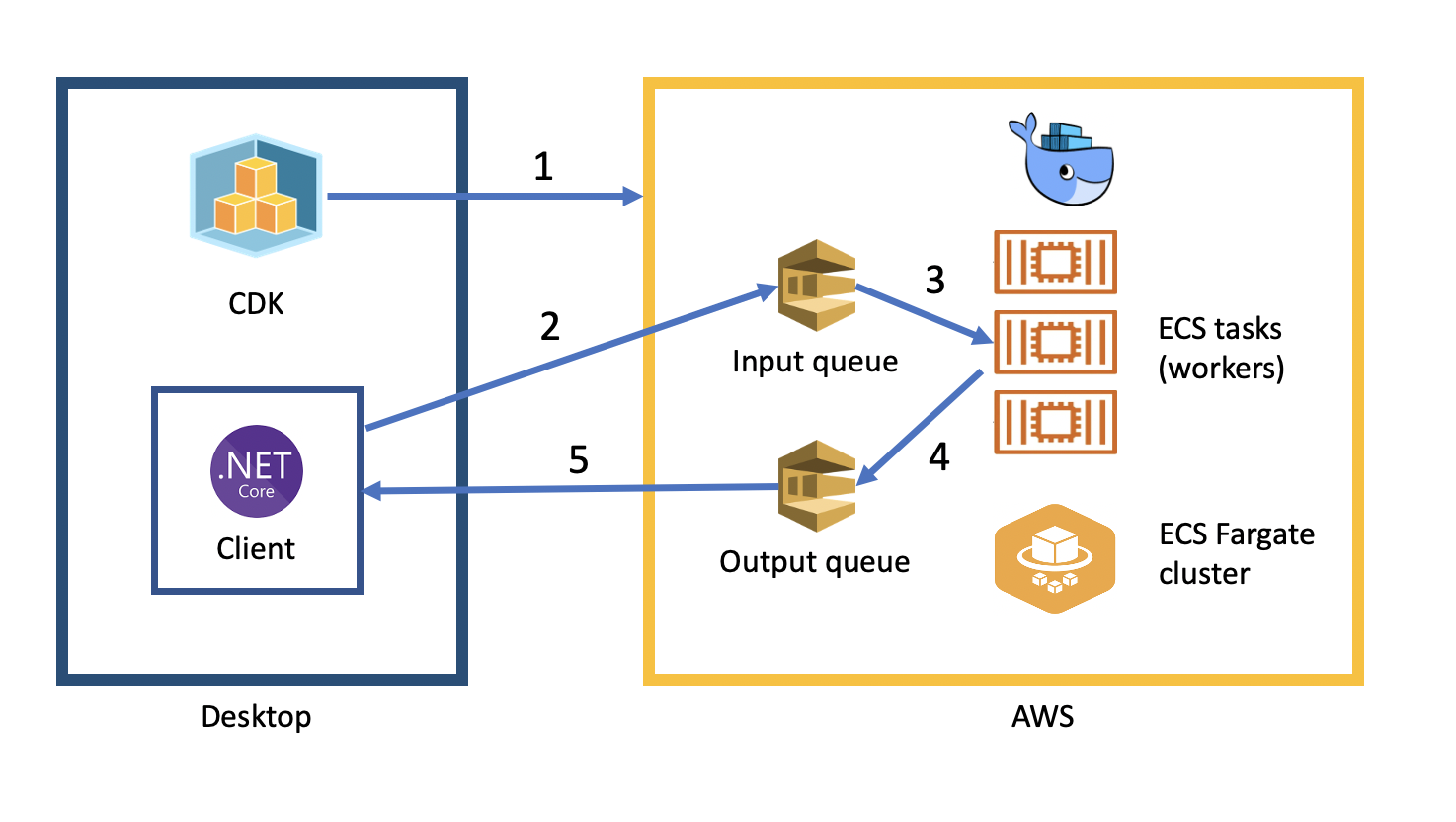

Desktop High-Performance Computing

Many engineering teams rely on desktop products that only run on Microsoft

Windows. Desktop engineering tools that perform tasks such as optical ray

tracing, genome sequencing, or computational fluid dynamics often couple

graphical user interfaces with complex algorithms that can take many hours to

run on traditional workstations, even when powerful CPUs and large amounts of

RAM are available. Until recently, there has been no convenient way to scale

complex desktop computational engineering workloads seamlessly to the cloud.

Fortunately, the advent of AWS Cloud Development Kit (CDK), AWS Elastic

Container Service (ECS), and Docker finally makes it easy to scale desktop

engineering workloads written in C# and other languages to the cloud. ... The

desktop component first builds and packages a Docker image that can perform the

engineering workload (factor an integer). AWS CDK, executing on the desktop,

deploys the Docker image to AWS and stands up cloud infrastructure consisting of

input/output worker queues and a serverless ECS Fargate cluster.
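As a rough illustration of the worker pattern described above, the sketch below factors integers pulled from an input queue and posts the results to an output queue. It is not the article's code: the original workload is written in C#, and here Python's in-process `queue.Queue` merely stands in for the cloud worker queues. The names `factor` and `run_worker` are hypothetical.

```python
import queue


def factor(n: int) -> list[int]:
    """Return the prime factors of n (n >= 2) in ascending order, by trial division."""
    if n < 2:
        raise ValueError("n must be >= 2")
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        # Whatever remains after dividing out small factors is itself prime.
        factors.append(n)
    return factors


def run_worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Drain the input queue, posting (number, factors) pairs to the output queue."""
    while True:
        try:
            n = inbox.get_nowait()
        except queue.Empty:
            return
        outbox.put((n, factor(n)))


# Local stand-ins for the input/output worker queues (assumption for this sketch).
inbox, outbox = queue.Queue(), queue.Queue()
for n in (84, 97, 1001):
    inbox.put(n)
run_worker(inbox, outbox)
results = [outbox.get() for _ in range(3)]
```

In a deployment like the one described, each container would presumably run a loop similar to `run_worker` against the real queues, so adding containers adds throughput without changing the workload code.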

Micromanagement is not the answer

Neuroscience also reveals why micromanaging is counterproductive. Donna

Volpitta, an expert in “brain-based mental health literacy,” explained to me

that the two most fundamental needs of the human brain are security and

autonomy, both of which are built on trust. Leaders who instill a sense of trust

in their employees foster that sense of security and autonomy and, in turn,

loyalty. When leaders micromanage their employees, they undermine that sense of

trust, which tends to breed evasion behaviors in employees. It’s a natural brain

response. “Our brains have two basic operating modes—short-term and long-term,”

Volpitta says. “Short-term is about survival. It’s the freeze-flight-fight

response or, as I call it, the ‘grasshopper’ brain that is jumping all over.

Long-term thinking considers consequences [and] relationships, and is necessary

for complex problem solving. It’s the ‘ant’ brain, slower and steadier.” She

says micromanagement constantly triggers short-term, survival thinking

detrimental to both social interactions and task completion.

Unblocking the bottlenecks: 2022 predictions for AI and computer vision

One of the key challenges of deep learning is the need for huge amounts of

annotated data to train large neural networks. While this is the conventional

way to train computer vision models, the latest generation of technology

providers are taking an innovative approach that enables machine learning with

comparatively less training data. This includes moving away from supervised

learning to self-supervised and weakly supervised learning, where data

availability is less of an issue. This approach, also known as few-shot

learning, detects objects as well as new concepts with considerably less input

data. In many cases the algorithm can be trained with as little as 20 images.

... Privacy remains a major concern in the AI sector. In most cases, a business

must share its data assets with the AI provider via third party servers or

platforms when training computer vision models. Under such arrangements there is

always the risk that the third party could be hacked or even exploit valuable

metadata for its own projects. As a result, we’re seeing the rise of Privacy

Enhancing Computation, which enables data to be shared between different

ecosystems in order to create value, while maintaining data confidentiality.
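To make the idea of learning from a handful of examples more concrete, here is a minimal sketch of one common few-shot technique, a nearest-prototype classifier. It is not necessarily what the providers mentioned in this excerpt use. It assumes each image has already been turned into a feature vector by some pretrained model; the tiny hard-coded vectors and the names `fit_prototypes` and `predict` are purely illustrative.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def fit_prototypes(support):
    """Build one prototype per class from a few labeled examples.

    support maps a class label to a short list of feature vectors.
    """
    return {label: centroid(vecs) for label, vecs in support.items()}


def predict(prototypes, x):
    """Return the label whose prototype is closest to x (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], x))


# Three toy "embeddings" per class (assumed values, not real image features).
support = {
    "cat": [[0.9, 0.1], [0.8, 0.2], [1.0, 0.0]],
    "dog": [[0.1, 0.9], [0.2, 0.8], [0.0, 1.0]],
}
prototypes = fit_prototypes(support)
label = predict(prototypes, [0.7, 0.3])
```

Because only the class prototypes are computed from the labeled examples, a new class can be added with a few samples and no retraining of the underlying feature extractor.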

How Automation Can Solve the Security Talent Shortage Puzzle

Supporting remote and hybrid work requires organizations to invest in and

implement new technologies that facilitate needs such as remote access, secure

file-sharing, real-time collaboration and videoconferencing. Businesses must

also hire professionals to configure, implement and maintain these tools with an

eye towards security – a primary concern here, as businesses of all sizes now

live or die by the availability and integrity of their data. The increasing

complexity of IT environments – many of which are now pressured to support

bring-your-own-device (BYOD) policies – has only intensified the need for

competent cybersecurity talent. It’s not surprising that the ongoing shortage of

trained professionals makes it difficult for organizations to expand their

business and adopt new technologies. Almost half a million cybersecurity jobs

remain open in the U.S. alone, forcing businesses to compete aggressively to

fill these roles. Yet, economic pressures make it particularly difficult for

small- to mid-sized businesses (SMBs) to play this game. Most cannot hope to

match the high salaries that large enterprises offer.

HIPAA Privacy and Security: At a Crossroads in 2022?

The likely expansion of OCR’s mission into protecting the confidentiality of

SUD data comes as actions to enforce the HITECH Breach Notification and HIPAA

Security Rule appear to be at a standstill. According to the data compiled by

OCR, in 2021 there were more than 660 breaches involving the unauthorized disclosure

of unsecured PHI reported by HIPAA-covered entities and their business

associates that compromised the health information of over 40 million people.

A significant number of the breaches reported to OCR appear to show violations

of the HIPAA standards due to late reporting and failure to adequately secure

information systems or train workforce members on safeguarding PHI. In 2021,

OCR announced settlements in two enforcement actions involving compliance with

the HIPAA Security Rule standards. OCR has been mum on its approach to

enforcement of the HIPAA breach and security rules. One explanation could be

the impact of the 5th Circuit Court of Appeals decision overturning

an enforcement action against the University of Texas MD Anderson Cancer

Center.

Will we see GPT-3 moment for computer vision?

It is truly the age of large models. Each of these models is bigger and more

advanced than the previous one. Take, for example, GPT-3 – when it was

introduced in 2020, it was the largest language model, with 175 billion

parameters. Fast forward one year, and we already have the GLaM model, which

is a trillion-weight model. Transformer models like GPT-3 and GLaM are

transforming natural language processing. We are having active conversations

around these models making job roles like writers and even programmers

obsolete. While these can be dismissed as speculation for now, one cannot

deny that these large language models have truly transformed the field of NLP.

Could this innovation be extended to other fields – like computer vision? Can

we have a GPT-3 moment for computer vision? OpenAI recently released GLIDE, a

text-to-image generator, where the researchers applied guided diffusion to the

problem of text-conditional image synthesis. For GLIDE, the researchers

trained a 3.5 billion parameter diffusion model that uses a text encoder.

Next, they compared CLIP (Contrastive Language-Image Pre-training) guidance

and classifier-free guidance.

What is Legacy Modernization

To achieve a good level of agility, the systems supporting the organization

also have to react and adapt quickly to the surrounding environment. Legacy

systems place a constraint on agility since they are often difficult to change

or provide inefficient support to business activities. This is not unusual: at

the time of the system design there were perhaps technology constraints that

no longer exist, or the system was designed for a particular way of working

that is no longer relevant. Legacy Modernization changes or replaces legacy

systems, making the organization more efficient and cost-effective. Not only

can this optimize existing business processes, but it can open new business

opportunities. Security is an important driver for Legacy Modernization.

Cyber-attacks on organizations are common and become more sophisticated over

time. The security of a system degrades over time, and legacy systems may no

longer have the support or the technologies required to deter modern attack

methods, which makes them an easy target for hackers. This represents a

significant business risk to an organization.

Quote for the day:

"Leadership is particularly necessary

to ensure ready acceptance of the unfamiliar and that which is contrary to

tradition." -- Cyril Falls
