Open source and mental health: the biggest challenges facing developers

The very nature of open source projects means their products are readily available

and ripe for use. Technological freedom is something to be celebrated. However,

it should not come at the expense of an individual’s mental health. Open source

is set up for collaboration. But in reality, a collaborative approach does not

always materialise. The accessibility of these projects means that many

effective pieces of coding start as small ventures by individual developers,

only to snowball into substantial projects on which companies rely but to which

they rarely contribute back. Open source is for everyone, but responsibility comes

along with that. If we want open source projects to stay around, any company

using them should dedicate substantial time to contributing back, so that

maintaining them does not place unreasonable strain on individual

developers. Sadly, 45% of developers report a lack of support with

their open source work. Without sufficient support, the workload to maintain

such projects can place developers under enormous pressure, reducing confidence

in their ability and increasing anxiety.

Chaos Engineering - The Practice Behind Controlling Chaos

I always tell people that Chaos Engineering is a bit of a misnomer because it’s

actually as far from chaotic as you can get. When performed correctly, everything

is under the operator’s control. That mentality is the reason our core product

principles at Gremlin are: safety, simplicity and security. True chaos can be

daunting and can cause harm. But controlled chaos fosters confidence in the

resilience of systems and allows operators to sleep a little easier knowing

they’ve tested their assumptions. After all, the laws of entropy guarantee the

world will consistently keep throwing randomness at you and your systems. You

shouldn’t have to help with that. One of the most common questions I receive is:

“I want to get started with Chaos Engineering, where do I begin?” There is no

one-size-fits-all answer, unfortunately. You could start by validating your

observability tooling, ensuring auto-scaling works, testing failover conditions,

or one of a myriad of other use cases. The one thing that does apply across all

of these use cases is this: start slow, but do not be slow to start.
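
One way to picture "start slow" is an experiment that widens its blast radius in stages and stops the moment the system misbehaves. The following is only a minimal sketch under stated assumptions: `run_staged_experiment`, `simulated_fault`, the stage percentages, and the error-rate threshold are all hypothetical names and values chosen for illustration, and none of this is Gremlin's API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StageResult:
    blast_radius_pct: int  # share of hosts targeted in this stage
    error_rate: float      # error rate observed while the fault was active
    passed: bool           # True if the steady-state hypothesis held


def run_staged_experiment(
    inject_fault: Callable[[int], float],
    stages: List[int],
    max_error_rate: float,
) -> List[StageResult]:
    """Run one fault at growing blast radii, halting at the first failed stage."""
    results: List[StageResult] = []
    for pct in stages:
        observed = inject_fault(pct)
        passed = observed <= max_error_rate
        results.append(StageResult(pct, observed, passed))
        if not passed:
            break  # abort: never widen the blast radius after a failure
    return results


def simulated_fault(pct: int) -> float:
    """Hypothetical stand-in for real fault injection; returns an error rate."""
    return 0.001 * pct  # assumption: errors grow linearly with blast radius


if __name__ == "__main__":
    for result in run_staged_experiment(
        simulated_fault, [1, 5, 25, 50], max_error_rate=0.02
    ):
        print(result)
```

In a real setup, `inject_fault` would trigger the fault through whatever tooling you use and read the error rate back from your observability stack. The point of the design is that the abort condition is decided before the experiment starts.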

How to ward off the Great Resignation in financial services IT

The upshot for CIOs in financial services: You must adapt to recruit and keep

talent – and build a culture that retains industry-leading talent. After

recently interviewing more than 20 former financial services IT leaders who

departed for other companies, I learned that it isn’t about a bad boss or poor

pay. They all fondly remembered their time at the firms, yet that wasn’t

enough to keep them. ... It is a journey that begins with small steps. Find

something small to prove out and get teams to start working in this new way.

Build a contest for ideas – assign numbers to submissions so executives have

no idea who or what level submitted, and put money behind it. Have your teams

vote on the training offered. This allows them to become an active participant

and feel their opinions matter. It can also improve the perception that

technology is prioritized, as you not only give teams access to learn

new technologies but also encourage them to do so. ... The better these leaders

work together, the more that impact, feeling of involvement, and innovation

across teams can grow.

DataOps or Data Fabric: Which Should Your Business Adopt First?

Every organization is unique, so every Data Strategy is equally unique. There

are benefits to both approaches that organizations can adopt, although

starting with a DataOps approach is likely to show the largest benefit in the

shortest amount of time. DataOps and data fabric both correlate to maturity.

It’s best to implement DataOps first if your enterprise has identified

setbacks and roadblocks with data and analytics across the organization.

DataOps can help streamline the manual processes or fragile integration points

enterprises and data teams experience daily. If your organization’s data

delivery process is slow to reach customers, then a more flexible, rapid, and

reliable data delivery method may be necessary, signifying an organization may

need to add on a data fabric approach. Adding elements of a data fabric is a

sign that the organization has reached a high level of maturity in its data

projects. However, an organization should start by implementing a data

fabric rather than DataOps if it has many different and unique integration styles,

and more sources and needs than traditional Data Management can address.

How to Repurpose an Obsolete On-Premises Data Center

Once a data center has been decommissioned, remaining servers and storage

resources can be repurposed for applications further down the chain of

business criticality. “Servers that no longer offer critical core functions

may still serve other departments within the organization as backups,” Carlini

says. Administrators can then migrate less important applications to the older

hardware and the IT hardware itself can be located, powered, and cooled in a

less redundant and less secure way. “The older hardware can continue on as

backup/recovery systems, or spare systems that are ready for use should the

main cloud-based systems go off-line,” he suggests. Besides reducing the need

to purchase new hardware, reassigning last-generation data center equipment

within the organization also raises the enterprise's green profile. It shows

that the enterprise cares about the environment and doesn’t want to add to the

already existing data equipment in data centers, says Ruben Gamez, CEO of

electronic signature tool developer SignWell. “It's also very sustainable.”

Mitigating Insider Security Threats with Zero Trust

Zero Trust aims to minimise the lateral movement of attacks within an organisation,

which is the most common cause of threat duplication and the spread of malware and

viruses. When organising capture the flag events, we often

set exercises that involve working with Metasploit and DDoS attacks and understanding attack

vectors and how attacks move. For example, one exercise used a phishing email targeting a

user; it carried a false memo that each employee was instructed to forward

to their peers. That email had Microsoft PowerShell malware embedded,

and it was used to show how often good-looking emails are too good to be

genuine. Because attack vectors like this one are often aimed at

the inside of organisations, Zero Trust suggests always verifying all network

borders with equal scrutiny. Now, as with every new technology, Zero Trust is

not built in a day, so it might sound like a lot of work for many small

businesses, as security sometimes comes across as an expensive

investment.
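
The idea of verifying every border with equal scrutiny can be sketched as an authorisation check that gives no credit for being on the internal network. This is an illustrative sketch only: the `Request` fields, the `ALLOWED` table, and the `authorize` function are hypothetical names invented for the example, not part of any Zero Trust product or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    resource: str
    token_valid: bool            # outcome of verifying the caller's credential
    device_trusted: bool         # outcome of a device posture check
    from_internal_network: bool  # recorded, but never used to grant access


# Hypothetical allow-list of (user, resource) pairs.
ALLOWED = {("alice", "payroll-db"), ("bob", "wiki")}


def authorize(req: Request) -> bool:
    """Apply the same checks to every request; network origin grants nothing."""
    return (
        req.token_valid
        and req.device_trusted
        and (req.user, req.resource) in ALLOWED
    )


if __name__ == "__main__":
    # An internal request with a bad token is refused like any other.
    print(authorize(Request("alice", "payroll-db", False, True, True)))
    # A verified external request to an allowed resource is accepted.
    print(authorize(Request("alice", "payroll-db", True, True, False)))
```

Because `from_internal_network` is deliberately ignored, a compromised internal account still has to pass the same credential, device, and per-resource checks, which is what limits lateral movement.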

Trends in Blockchain for 2022

Blockchain is ushering in major economic shifts. But the cryptocurrency market

is still a ‘wild west’ with little regulation. According to recent reports, it

appears the U.S. Securities and Exchange Commission is gearing up to more

closely regulate the cryptocurrency industry in 2022. “More investment in

blockchain is bringing it into the mainstream, but what’s holding back a lot

of adoption is regulatory uncertainty,” said Parlikar. Forbes similarly

reports regulatory uncertainty as the biggest challenge facing blockchain

entrepreneurs. Blockchain is no longer relegated to the startup domain,

either; well-established financial institutions also want to participate in

the massive prosperity, said Parlikar. This excitement is causing a

development-first, law-later mindset, similar to the legal grey area that

followed Uber as it first expanded its rideshare business. “[Blockchain]

businesses are trying to hedge risk,” Parlikar explained. “We want to comply

and aren’t doing nefarious things intentionally—there’s just a tremendous

opportunity to innovate and streamline operations and increase the end-user

experience.”

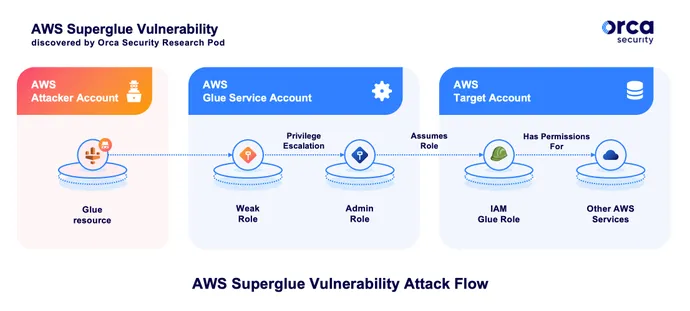

New Vulnerabilities Highlight Risks of Trust in Public Cloud

The more significant of the two vulnerabilities occurred in AWS Glue, a

serverless integration service that allows AWS users to manage, clean, and

transform data, and makes the datastore available to the user's other

services. Using this flaw, attackers could compromise the service and become

an administrator — and because the Glue service is trusted, they could use

their role to access other users' environments. The exploit allowed Orca's

researchers to "escalate privileges within the account to the point where we

had unrestricted access to all resources for the service in the region,

including full administrative privileges," the company stated in its advisory.

Orca's researchers could assume roles in other AWS customers' accounts that

have a trusted relationship with the Glue service. Orca maintains that every

account that uses the Glue service has at least one role that trusts the Glue

service. A second vulnerability in the CloudFormation (CF) service, which

allows users to provision resources and cloud assets, allowed the researchers

to compromise a CF server and run as an AWS infrastructure service.

Why Saying Digital Transformation Is No Longer Right

Technology is multiplicative; it doesn't know whether it's multiplying a

positive or a negative. So, if you have bad customer service at the front

counter, and you add technological enablement - voila! You're now able to

deliver bad service faster, and to more people than ever before! The term

‘Digital Transformation’ implies a potentially perilous approach of focusing on

technology first. In my career as a technology professional, I’ve seen my

share of project successes and failures. The key differentiator between success

and failure is the clarity of the desired outcome right from the start of the

initiative. I had a colleague who used to say: “Projects fail at the start, most

people only notice at the end.” Looking back at the successful initiatives which

I was a part of, they possessed several common key ingredients: the clarity of a

compelling goal, the engagement of people, and a discipline for designing

enablement processes. With those ingredients in place, a simple and reliable

enabling tool (the technology), developed using clear requirements, acts like an

unbelievable accelerant.

Four key lessons for overhauling IT management using enterprise AI

One of the greatest challenges for CIOs and IT leaders these days is managing

tech assets that are spread across the globe geographically and across the

internet in multi-cloud environments. On the one hand, there’s pressure to increase

access for those people who need to be on your network via their computers,

smartphones and other devices. On the other hand, each internet-connected device

is another asset to be monitored and updated, a potential new entry point for

bad actors, etc. That’s where the scalability of automation and machine learning

is essential. As your organisation grows and becomes more spread out, there’s no

need to expand your IT department. A unified IT management system, powered by

AI, will keep communication lines open while continually alerting you to

threats, triggering appropriate responses to input and making updates across the

organisation. It is never distracted or overworked. ... When it comes to these

enterprise AI solutions, integration can be more challenging. And in some cases,

businesses end up spending as much on customising the solution as they did on

the initial investment.

Quote for the day:

"Strong leaders encourage you to do

things for your own benefit, not just theirs." -- Tim Tebow
