Updating Data Governance: Set Up a Cohesive Plan First

Just because a company has a Data Governance framework that worked for a mature technology project, such as a data warehouse, does not mean that framework is sufficient for newer technology initiatives, such as machine learning. New business requirements need to be considered, especially where system integration is necessary. For example, Data Quality must be good for all data sets across the entire enterprise before machine learning can be applied to a new venture. Danette McGilvray, President and Principal at Granite Falls Consulting, said, “The cold brutal reality is that the data is not good enough to support machine learning in practically every company.” This is only one of many business needs that crop up before such an undertaking can succeed. Revisiting Data Governance before starting a new data project reduces the risk of overlooking prerequisites and moves the organization toward a unified Data Management approach. Rethinking older Data Governance plans alone, however, does not necessarily lead to more coherent Data Governance.
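One way to make "Data Quality must be good for all data sets" operational is a quality gate that scores every dataset and blocks machine learning work until all of them pass. The sketch below is a minimal illustration only, assuming two simple metrics (completeness and rule-based validity); the dataset names, field rules, and thresholds are hypothetical and are not part of any particular governance framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class QualityReport:
    dataset: str
    completeness: float  # share of required fields that are present (not None)
    validity: float      # share of present values that pass their field rule
    passed: bool


def check_quality(name: str,
                  records: List[Dict[str, Any]],
                  rules: Dict[str, Callable[[Any], bool]],
                  min_completeness: float = 0.98,
                  min_validity: float = 0.99) -> QualityReport:
    """Score one dataset against per-field rules; thresholds are illustrative."""
    total = present = valid = 0
    for row in records:
        for field, rule in rules.items():
            total += 1
            value = row.get(field)
            if value is None:
                continue  # a missing value counts against completeness only
            present += 1
            if rule(value):
                valid += 1
    completeness = present / total if total else 0.0
    validity = valid / present if present else 0.0
    passed = completeness >= min_completeness and validity >= min_validity
    return QualityReport(name, completeness, validity, passed)


def ready_for_ml(reports: List[QualityReport]) -> bool:
    """Enterprise-wide gate: every dataset must pass, not just the one feeding the model."""
    return bool(reports) and all(r.passed for r in reports)


# Hypothetical datasets and validation rules.
customers = [{"id": 1, "age": 34}, {"id": 2, "age": None}, {"id": 3, "age": 41}]
orders = [{"id": 10, "amount": 25.0}, {"id": 11, "amount": -5.0}]
reports = [
    check_quality("customers", customers,
                  {"id": lambda v: isinstance(v, int), "age": lambda v: 0 <= v <= 120}),
    check_quality("orders", orders,
                  {"id": lambda v: isinstance(v, int), "amount": lambda v: v >= 0}),
]
for r in reports:
    print(r.dataset, round(r.completeness, 3), round(r.validity, 3), r.passed)
print(ready_for_ml(reports))  # False
```

Here one dataset fails on a missing value and the other on an invalid (negative) amount, so the gate stays closed even though each problem is confined to a single table.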

Just because a company has a Data Governance framework it used with a mature

technology project, like a data warehouse, does not mean it is sufficient for

newer technology initiatives, like machine learning. New business requirements

need to be considered, especially where system integration is necessary. For

example, Data Quality must be good for all data sets, across the entire

enterprise, before machine learning can be applied to a new

venture. Danette McGilvray, President and Principal at Granite Falls

Consulting, said, “The cold brutal reality is that the data is not good enough

to support machine learning in practically every company.” This is only one of

many business needs that crop up before succeeding at such an undertaking.

Revisiting Data Governance prior to starting a new data project reduces exposure

to mistakenly overlooking prerequisites, and moves toward a unified Data

Management approach. Rethinking older Data Governance plans alone does not

necessarily lead to a more coherent Data Governance.

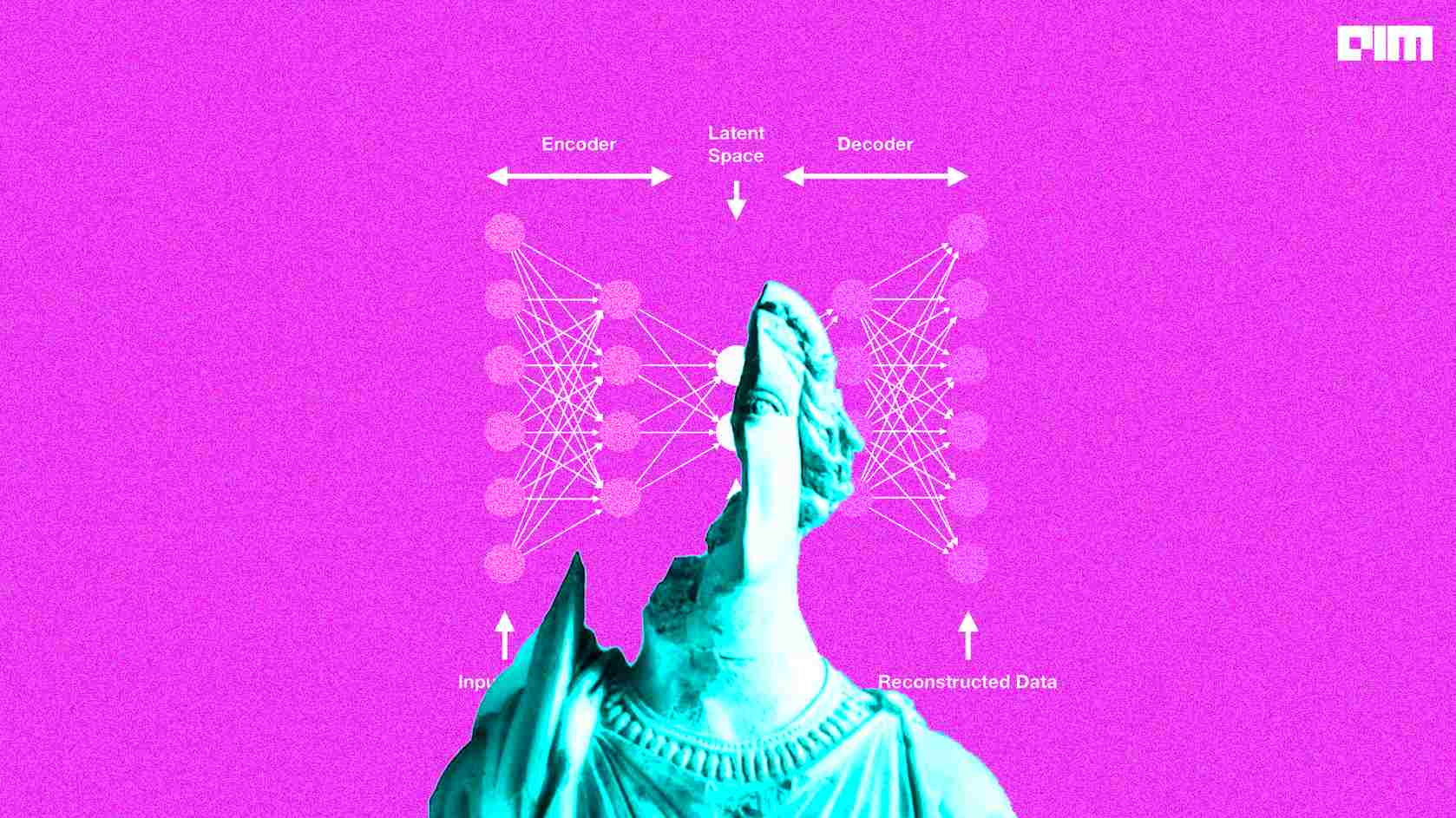

A Hands-On Guide to Outlier Detection with Alibi Detect

Data points that lie unusually far from the rest of the observations in a dataset are known as outliers. They are primarily caused either by data errors (measurement or experimental errors, data collection or processing errors, and so on) or by genuinely rare behaviour that differs sharply from the norm. In medical applications, for example, very few people have an upper (systolic) blood pressure greater than 200, so if we keep such records in the dataset, our statistical analysis and modelling conclusions can be skewed: outliers can, for instance, distort the mean and standard deviation. As a result, it is critical to accurately detect and handle outliers, either by removing them or by capping them at a predefined value. Outlier detection is also critical for identifying anomalous inputs whose model predictions we cannot trust and should not use in production. The type of outlier detector appropriate for a given application depends on the data's modality and dimensionality, on the availability of labelled normal and outlier data, and on whether the detector is pre-trained (offline) or updated online.
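To make the blood-pressure example concrete, here is a minimal sketch on made-up systolic readings. It uses a simple interquartile-range rule (Tukey fences) from Python's standard library rather than Alibi Detect, whose detectors target richer, higher-dimensional data; treat it only as an illustration of how one extreme value skews the mean and standard deviation, and of the two handling options (removal or capping).

```python
import statistics

# Hypothetical systolic blood-pressure readings (mmHg); 230 is the extreme value.
readings = [118, 122, 125, 130, 128, 135, 121, 119, 127, 230]


def iqr_bounds(values, k=1.5):
    """Tukey fences: values outside [Q1 - k*IQR, Q3 + k*IQR] are treated as outliers."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(values, k=1.5):
    low, high = iqr_bounds(values, k)
    return [v for v in values if v < low or v > high]


def cap_outliers(values, k=1.5):
    """Handle outliers by clipping them to the nearest fence instead of dropping them."""
    low, high = iqr_bounds(values, k)
    return [min(max(v, low), high) for v in values]


outliers = flag_outliers(readings)
clean = [v for v in readings if v not in outliers]
print(outliers)                                           # [230]
print(statistics.mean(readings), statistics.mean(clean))  # 135.5 125
print(round(statistics.stdev(readings), 1), round(statistics.stdev(clean), 1))
print(max(cap_outliers(readings)))                        # 147.375
```

A single reading moves the mean by more than 10 mmHg and inflates the standard deviation several-fold; capping keeps the record but limits its influence.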

How a startup uses AI to put worker safety first

Computer vision has progressed from an experimental technology to one that can interpret patterns in images and classify them at scale using machine learning algorithms. Advances in deep learning and neural networks are expanding the ways enterprises use computer vision, improving worker safety in the process. Computer vision techniques to reduce worker injuries and improve in-plant safety are based on unsupervised machine learning algorithms that excel at identifying patterns and anomalies in images. Computer vision platforms, including Everguard’s SENTRI360, rely on convolutional neural networks to categorize images and industrial workflows at scale. The accuracy of both supervised and unsupervised machine learning algorithms depends on the quality of the datasets used to train them. Convolutional neural networks also require large amounts of data to improve their precision in predicting events, and they are fine-tuned through iterative training cycles. In each iteration, the model extracts specific attributes of an image and, over time, learns to classify those attributes.
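The "extracts specific attributes of an image" step is, at its core, a convolution: a small filter slides over the image and responds strongly where a pattern appears. The sketch below shows one such filter in plain Python, assuming a hypothetical 5x5 grayscale patch and a hand-picked vertical-edge kernel; in a trained CNN the kernel weights are learned from data, and nothing here reflects how SENTRI360 is actually implemented.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (stride 1, no padding), the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep only positive responses, as a typical CNN activation does."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 5x5 grayscale patch: dark on the left, bright on the right.
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Hand-picked vertical-edge filter; in a trained CNN these weights are learned.
kernel = [[-1, 0, 1] for _ in range(3)]

print(relu(conv2d(image, kernel)))  # [[27, 27, 0], [27, 27, 0], [27, 27, 0]]
```

The feature map lights up only where brightness changes; stacking many learned filters like this, layer after layer, is what lets a CNN build up from edges to objects such as a missing helmet.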

‘Work’ in 2022: What’s next?

Undoubtedly, existing policies need a complete overhaul, but we must not forget that in an evolving environment nothing can be treated as constant. Swift revision of new policies is therefore very important to keep pace with changing scenarios, while maintaining people-centricity as the central thought. Knowing the employee pulse will be key to creating or revising policies, and regular surveys, town halls, and leadership connections are extremely important for that. Employee safety and well-being will remain top of mind, and workplace culture will continue to shift toward empathy and flexibility. Organizations will, however, need to solve the challenge of ‘how much is too much’: they will have to rally together to find the sweet spot between maintaining productivity and not hampering employees’ work-life balance. ... If data is considered the new oil of the 21st century, ‘Trust’ will become its equivalent in the post-pandemic world, and the relationship between employer and employee will go through a gradual transition in which managing expectations on both sides will be essential.


Blockchain technology developments help elevate food safety protocols

Blockchain technologies, which we have been discussing for a few years, are closer than we think. Transparency, traceability, and sustainability are vital to everyone in the industry. The FDA has outlined four core elements in its New Era of Smarter Food Safety Blueprint, and the first of these is tech-enabled traceability. Traceability processes are critical to ensure that all food items are traced and tracked throughout the supply chain, and traceability is essential for operational efficiency as well as for food safety. With a solid traceability program, it is possible to locate a product at any stage of the supply chain, literally from farm to table. For this technology to work well, it must be user-friendly and affordable for all, small businesses and large corporations alike. Once it is available and widely used, it will help minimize foodborne illness outbreaks and significantly speed up finding the source when an outbreak does occur. Affordable digital technology that connects buyers with validated, verified sellers is at the forefront.
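The traceability property blockchains offer comes from hash chaining: each record commits to the hash of the one before it, so a product's history can be replayed in order and any later edit to an earlier record is detectable. The sketch below is a deliberately simplified, single-process hash chain, assuming hypothetical lot IDs and supply-chain stages; a real blockchain adds replication across parties and a consensus mechanism, which this omits.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def make_block(event, prev_hash):
    """Wrap one supply-chain event in a record that commits to the previous record's hash."""
    body = {"event": event, "prev_hash": prev_hash}
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return {**body, "hash": digest}


def build_chain(events):
    chain, prev = [], GENESIS
    for event in events:
        block = make_block(event, prev)
        chain.append(block)
        prev = block["hash"]
    return chain


def verify(chain):
    """Recompute every hash; editing any earlier event breaks the links after it."""
    prev = GENESIS
    for block in chain:
        expected = make_block(block["event"], prev)["hash"]
        if block["prev_hash"] != prev or block["hash"] != expected:
            return False
        prev = block["hash"]
    return True


def trace(chain, lot):
    """Return every recorded stage for one lot, in order (farm to table)."""
    return [b["event"]["stage"] for b in chain if b["event"]["lot"] == lot]


# Hypothetical events for two produce lots.
events = [
    {"lot": "L-001", "stage": "farm"},
    {"lot": "L-002", "stage": "farm"},
    {"lot": "L-001", "stage": "packer"},
    {"lot": "L-001", "stage": "distributor"},
    {"lot": "L-001", "stage": "retailer"},
]
chain = build_chain(events)
print(trace(chain, "L-001"))  # ['farm', 'packer', 'distributor', 'retailer']
print(verify(chain))          # True
chain[2]["event"]["stage"] = "unknown"  # tamper with one stored record
print(verify(chain))          # False
```

During an outbreak investigation, `trace` is the "find the source" query; `verify` is what lets buyers trust that the history they are reading has not been rewritten.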

I followed my dreams and got demoted to software developer

I was just a UX person, not a coder. Surrounded by only the most freakishly good developers at Facebook (and then at Stack Overflow), I pushed aside whatever fantasies I had about coding professionally. During the few years in which I’ve been coding in earnest on the side, I also found myself regularly discouraged and confused by the sheer number of things I could learn or do. I can’t count the number of quarter-finished games and barely-started projects I have in my private GitHub repos (actually, I can. It’s 15, and those are just the ones that made it there). Without much formal education in this field, I’d frequently get lost down documentation holes and find myself drowning in the 800 ways of maybe solving the problem I had. Finally, I came to the conclusion that I needed more structure, and that I wouldn’t be able to get that structure in the hour of useful-brain-time I had after work each day. I started researching bootcamps, doing budget calculations, and making plans to leave Stack Overflow.


Quantum Computing In 2022: A Leap Into The Tremendous Future Ahead

The various research efforts and initiatives in quantum computing by big tech and other organizations are opening up a sea of opportunities for CIOs and IT departments to apply the technology in real-world settings. Quantum computers are well suited to solving complex optimization problems and performing fast searches of unstructured data. As Prashanth Kaddi, Partner, Deloitte India, notes, quantum computing has the potential to bring disruptive change across sectors, including finance, drug research, supply chain distribution, traffic flow, energy optimization, and many more. Quantum computing can also significantly reduce time to market and help improve customer delivery; for instance, a pharmaceutical company may significantly shorten the time it takes to bring new drugs to market. In finance, it could enable faster, more complex Monte Carlo simulations for applications such as trading, trajectory optimisation, market volatility, and price optimisation strategies, and more.
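For context on the Monte Carlo point, here is a minimal classical baseline, not a quantum algorithm: pricing a European call option under geometric Brownian motion with made-up parameters and checking it against the closed-form Black-Scholes price. The statistical error of classical Monte Carlo shrinks only as roughly 1/sqrt(N) in the number of simulated paths, which is why faster simulation matters; quantum amplitude estimation is, in theory, expected to improve that scaling to roughly 1/N.

```python
import math
import random


def mc_european_call(s0, strike, rate, vol, t, n_paths, seed=0):
    """Classical Monte Carlo price of a European call under geometric Brownian motion.

    Returns (estimate, standard_error); the error falls roughly as 1/sqrt(n_paths).
    """
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol * vol) * t
    diffusion = vol * math.sqrt(t)
    discount = math.exp(-rate * t)
    payoffs = []
    for _ in range(n_paths):
        z = rng.gauss(0.0, 1.0)  # one standard-normal draw per simulated path
        s_t = s0 * math.exp(drift + diffusion * z)
        payoffs.append(discount * max(s_t - strike, 0.0))
    mean = sum(payoffs) / n_paths
    var = sum((p - mean) ** 2 for p in payoffs) / (n_paths - 1)
    return mean, math.sqrt(var / n_paths)


def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(s0, strike, rate, vol, t):
    """Closed-form price, used here only to check the simulation."""
    d1 = (math.log(s0 / strike) + (rate + 0.5 * vol * vol) * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    return s0 * normal_cdf(d1) - strike * math.exp(-rate * t) * normal_cdf(d2)


# Hypothetical contract: spot 100, strike 100, 5% rate, 20% volatility, 1 year.
for n in (1_000, 100_000):
    est, se = mc_european_call(100, 100, 0.05, 0.2, 1.0, n)
    print(n, round(est, 3), round(se, 3))
print(round(black_scholes_call(100, 100, 0.05, 0.2, 1.0), 3))  # 10.451
```

Multiplying the number of paths by 100 only cuts the standard error by about 10x; for portfolios that need many such valuations, that square-root scaling is the cost a quantum speedup would target.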

New data-decoding approach could lead to faster, smaller digital tech

There is just one trifling issue: encoding or decoding data in antiferromagnets can be a bit like trying to write with a dried-up pen or decipher the scribblings of a toddler. "The difficulty—and it's a significant difficulty—is how to write and read information," said Tsymbal, George Holmes University Professor of physics and astronomy. The same antiferromagnetic property that is a pro in one context (the lack of a net magnetic field, which prevents data corruption) becomes a con when it comes to actually recording data, Tsymbal said. Writing a 1 or 0 in a ferromagnet is a simple matter of flipping its spin orientation, or magnetization, via another magnetic field. That's not possible in an antiferromagnet. And whereas reading the spin state of a ferromagnet is similarly straightforward, it's not easy to distinguish between the two spin states of an antiferromagnet (up-down vs. down-up), because neither produces a net magnetization that would yield discernible differences in the flow of electrons. Together, those facts have impeded efforts to develop antiferromagnetic tunnel junctions of practical use in actual devices.
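The read-out problem can be seen in a toy two-sublattice model where each spin is +1 (up) or -1 (down). This is only an illustration of the argument above, not the new decoding approach, whose details the excerpt does not give. A read-out that senses net magnetization tells the two ferromagnetic bits apart (+2 vs. -2) but sees 0 for both antiferromagnetic states; those differ only in the Néel vector, the difference between the sublattice moments (+2 vs. -2).

```python
# Toy two-sublattice model: each spin is +1 (up) or -1 (down).
STATES = {
    "ferromagnet, bit 1": (+1, +1),
    "ferromagnet, bit 0": (-1, -1),
    "antiferromagnet, up-down": (+1, -1),
    "antiferromagnet, down-up": (-1, +1),
}


def net_magnetization(spins):
    """Sum of the sublattice moments: what a conventional magnetic read-out senses."""
    return spins[0] + spins[1]


def neel_vector(spins):
    """Difference of the sublattice moments (staggered magnetization)."""
    return spins[0] - spins[1]


for name, spins in STATES.items():
    print(f"{name:26s} net={net_magnetization(spins):+d} neel={neel_vector(spins):+d}")
```

Any practical antiferromagnetic memory therefore needs a read-out that is sensitive to the Néel vector rather than to net magnetization.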


IoT & AI Applications in Fisheries Industry Can Bring Another Blue Revolution, Read How?

IoT devices and AI are helping fisheries optimize where and when they fish: sensors identify fish and measure catch size, and onboard cameras help sort the catch. The data can also help the wild-fishing industry cut expenses by providing insights on reducing fuel usage and on improving fleet maintenance through AI-based predictive maintenance. According to McKinsey, if large-scale fishing enterprises around the world adopted this approach, they could save $11 billion in annual operating costs. Because feed accounts for a considerable share of operating costs, and both under- and overfeeding have severe consequences for fish health and size as well as for water quality, feeding optimization can deliver significant savings and benefits. Many smart fish farms, such as eFishery, use feeders that rely on vibration and auditory cues to feed more accurately. The use of technology in aquaculture management is also boosting efficiency and reducing manpower demands, which is a significant financial and safety benefit for remote marine farms.
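The article does not describe how such feeders work internally. As a rough illustration of the idea of cue-driven feeding, here is a hypothetical closed-loop rule: dispense a small dose, read a normalized feeding-activity signal (for example, from vibration or acoustic sensors), and stop as soon as activity falls below a threshold. The dose size, threshold, cap, and readings are all made up.

```python
def demand_feeding(activity_readings, dose_g=50, threshold=0.4, max_doses=20):
    """Hypothetical demand-driven feeder.

    activity_readings: normalized 0..1 feeding-activity intensity measured after each dose.
    Returns the grams of feed dispensed in the session.
    """
    dispensed_g = 0
    for doses_given, activity in enumerate(activity_readings):
        if doses_given >= max_doses or activity < threshold:
            break  # fish have stopped feeding actively, or the safety cap is reached
        dispensed_g += dose_g
    return dispensed_g


# Hypothetical readings: appetite fades as the fish fill up.
session = [0.9, 0.85, 0.7, 0.55, 0.3, 0.8]
print(demand_feeding(session))  # 200
```

The loop deliberately stops at the first low reading rather than resuming on a later spike, erring toward underfeeding by a dose instead of leaving uneaten pellets to degrade water quality; a fixed schedule, by contrast, dispenses the same ration regardless of appetite.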


Advanced Analytics: A Look Back at 2021 and What's Ahead for 2022

Companies want to become more advanced in analytics in order to compete better. Yet they are struggling both to retain in-house talent and to develop new talent to perform more advanced analytics. In other words, organizations need to build literacy to use tools such as self-service BI, and they need to either retain or grow talent to move forward with data science. Data literacy was a priority in 2021, and it will continue to be one in 2022. Data literacy describes how well users understand and interact with data and analytics, and how well they communicate the results to achieve business goals. It includes understanding data elements, understanding the business, framing analytics, critical interpretation, and communication skills. As part of this, we expect to see more organizations building literacy enablement teams to help educate their people. We also expect modern analytics tools with more advanced, augmented features, such as natural language search and the ability to surface descriptive insights, to become more popular.

Quote for the day:

"The best leader brings out the best in those he has stewardship over." -- J. Richard Clarke
