There’s a month for cyber awareness, but what about data literacy?

Just as there’s currently a month devoted to raising cybersecurity awareness, we need a data literacy month. Ideally, we should aim for not just one month but a comprehensive educational push across all industries that could benefit from data-driven decision-making. But the journey of a thousand miles starts with a single step, and an awareness month would serve as a perfect springboard. When planning such initiatives, we must make sure they do not descend into another boring PowerPoint presentation. Instead, we need to clearly demonstrate how data can help employees with the tasks they perform every day.

When tailoring the training sessions to the needs of individual teams or departments, businesses must first and foremost think of the situations specific employees find themselves in on a regular basis. Take a content marketing or demand generation team, for example: a simple comparison of the conversion rates of several landing pages, which they most likely work on frequently, is a good way not just to figure out the optimal language and layout but also to introduce statistical concepts such as population, sample, and p-value.
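
To make the landing-page example concrete, here is a minimal sketch of the kind of comparison such a session might walk through: a two-proportion z-test on the conversion rates of two pages. The function name and the visit and conversion counts are hypothetical. Each page's visitors are treated as a sample drawn from the population of all potential visitors, and the p-value is the probability of seeing a difference at least this large if both pages actually converted equally well. The test assumes independent visitors and samples large enough for the normal approximation.

```python
import math


def two_proportion_z_test(conv_a: int, visits_a: int, conv_b: int, visits_b: int) -> tuple[float, float]:
    """Compare the conversion rates of two landing pages (hypothetical helper).

    Returns (z statistic, two-sided p-value). Assumes independent visitors and
    sample sizes large enough for the normal approximation.
    """
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    # Pooled conversion rate under the null hypothesis that both pages perform equally
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: page A converts 120 of 2,400 visits, page B 156 of 2,400
    z, p = two_proportion_z_test(120, 2400, 156, 2400)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
```

Here page B converts at 6.5% versus 5% for page A, and the p-value comes out below the conventional 0.05 threshold, suggesting the difference is unlikely to be noise alone. The threshold itself is a convention the team should agree on before looking at the results.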

Encouraging more women within cyber security

To begin with, women must be encouraged into the field of cyber security, whether through school studies from a younger age or university courses, by offering varied entry pathways into the industry, or by making it easier to return after a break. The hurdles that keep them out of the sector have to be addressed. Each business has a part to play in ensuring that its organisation meets the requirements of all of its employees. Whether through remote or hybrid working, reduced hours, or adequate maternity and paternity support, working arrangements should be more flexible to suit the needs of the employee. Women would also benefit greatly if companies implemented a “return to work scheme”. Such a scheme helps those who have had a break from the industry get back into work, and this doesn’t necessarily mean limiting them to roles such as customer support, sales and marketing. HR teams must also do better when it comes to job descriptions, ensuring they appeal to a wider audience and offer flexibility, and that the recruitment pool is as diverse as possible.

Misconfiguration on the Cloud is as Common as it is Costly

Very few companies had the IT systems in place to handle even 10% of employees working remotely at any given time. They definitely were not built to handle a 100% remote workforce. To solve this problem and keep the business running, organizations of all sizes turned to the public cloud. The public cloud was built to be always on, available from anywhere, and able to handle the surges in capacity that legacy infrastructure could not. Cloud applications became the way to enable remote workers and continue business operations. With that transition came new risks: organizations were forced to rapidly adopt new access policies, deploy new applications, onboard more users to the cloud, and support them remotely. To make matters worse, years of investment in “defense in depth” security for corporate networks suddenly became obsolete. No one was prepared for this, so it should come as no surprise that the leading causes of data breaches in the cloud can be traced back to mistakes made by the customer, not to a security failure by the cloud provider. Add the IT staff’s relative unfamiliarity with SaaS, and the opportunities to misconfigure key settings proliferate.
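
Because most cloud breaches trace back to customer-side settings, one pragmatic response is to check configurations automatically against a baseline rather than relying on staff familiarity. The sketch below is a minimal illustration under stated assumptions: the setting names (`storage_public_access`, `mfa_required`, `admin_allowed_cidrs`, `audit_logging`) and the rules are hypothetical, not any provider's real API, and in practice the configuration would first have to be exported from the cloud or SaaS platform.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Rule:
    setting: str                     # hypothetical setting name
    is_risky: Callable[[Any], bool]  # True when the value counts as a misconfiguration
    message: str


# Hypothetical baseline; real setting names differ per cloud provider and SaaS product
RULES = [
    Rule("storage_public_access", lambda v: v is True, "storage is publicly readable"),
    Rule("mfa_required", lambda v: v is not True, "MFA is not enforced"),
    Rule("admin_allowed_cidrs", lambda v: "0.0.0.0/0" in (v or []), "admin console is reachable from any IP"),
    Rule("audit_logging", lambda v: v is not True, "audit logging is disabled"),
]


def find_misconfigurations(config: dict[str, Any]) -> list[str]:
    """Return one finding per rule that the exported configuration violates.

    A missing setting is passed to its rule as None, so controls that must be
    switched on explicitly (MFA, audit logging) are flagged when absent.
    """
    return [f"{rule.setting}: {rule.message}" for rule in RULES if rule.is_risky(config.get(rule.setting))]


if __name__ == "__main__":
    tenant = {  # hypothetical export of a tenant's settings
        "storage_public_access": True,
        "mfa_required": False,
        "admin_allowed_cidrs": ["10.0.0.0/8"],
        "audit_logging": True,
    }
    for finding in find_misconfigurations(tenant):
        print(finding)
```

Treating an absent value as unsafe for controls that must be enabled is a deliberate choice: a setting nobody has reviewed is exactly the kind of gap that rushed migrations leave behind.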

From fragmented encryption chaos to uniform data protection

Encrypting data at runtime, while it is in use, has only recently become feasible. This type of technology is built directly into current-generation public cloud infrastructure (including clouds from Amazon, Microsoft, and others), so that runtime data can be fully protected even if an attacker gains root access. The technology shuts out unauthorized data access using hardware-level memory encryption, memory isolation, or both. It’s a seemingly small step that paves the way for a quantum leap in data security, especially in the cloud. Unfortunately, this protection for runtime data has limited efficacy for enterprise IT. Using it alone requires each application to be modified to run on each public cloud’s particular implementation. Generally, this involves re-coding and re-compilation, a fundamental roadblock to adoption for already stressed application delivery teams. In the end, this becomes yet another encryption and data security silo to manage on each host, adding to the encryption chaos.

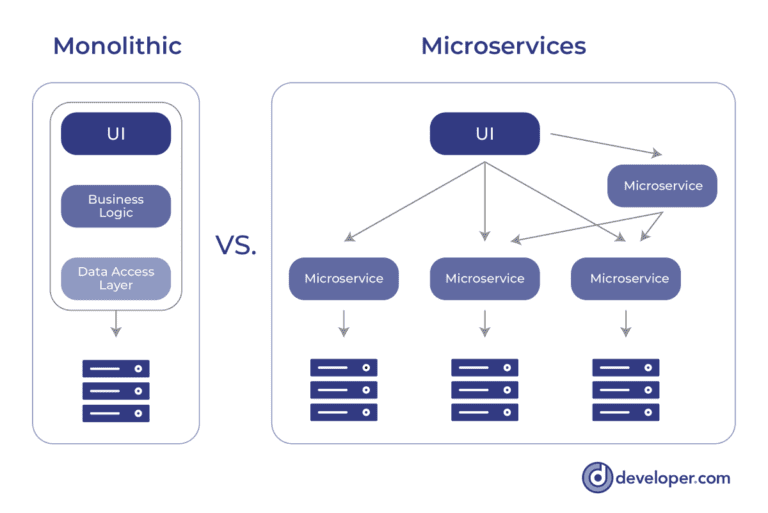

An Introduction to Event Driven Microservices

Event-driven microservices also help in developing responsive applications. Let us understand this with an example. Consider the notification service we just talked about. Suppose the notification service needs to inform the user when a new notification is generated and stored in the queue, and assume that several concurrent users are using the application and checking which notifications have been processed. In the event-driven model, all alerts are queued before being forwarded to the appropriate user, so the user does not have to wait while the notification (email, text message, etc.) is being processed. The user can continue to use the application while the notification is processed asynchronously. This is how event-driven design makes an application responsive and loosely coupled. Although traditional applications are useful for a variety of use cases, they face availability, scalability, and reliability challenges. A monolithic application typically has a single database, which makes it difficult to support polyglot persistence (using different storage technologies for different kinds of data).
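
The notification flow described above can be sketched with Python's standard `asyncio` library, using an in-process `asyncio.Queue` as a stand-in for a real message broker. This is a minimal illustration, not a production design: the function names are hypothetical, and in an actual microservices deployment the queue would be an external broker and the worker a separate service. The key point is that `handle_user_action` responds as soon as the event is queued, while delivery happens in the background.

```python
import asyncio


async def notification_worker(queue: asyncio.Queue, delivered: list[str]) -> None:
    """Consume notification events and deliver them in the background."""
    while True:
        user, message = await queue.get()
        await asyncio.sleep(0.01)  # stands in for slow email/SMS delivery
        delivered.append(f"{user}: {message}")
        queue.task_done()


async def handle_user_action(queue: asyncio.Queue, user: str, message: str) -> str:
    """Publish a notification event and respond right away; the user does not wait for delivery."""
    await queue.put((user, message))
    return "ok"


async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    delivered: list[str] = []
    worker = asyncio.create_task(notification_worker(queue, delivered))

    # Several concurrent users trigger notifications at the same time
    responses = await asyncio.gather(
        *(handle_user_action(queue, f"user{i}", "order shipped") for i in range(3))
    )
    print(responses)  # each request was answered as soon as its event was queued

    await queue.join()  # for the demo only: wait until the worker has drained the queue
    worker.cancel()
    return delivered


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because the producer and the consumer share only the queue, either side can be scaled or replaced independently, which is the loose coupling the paragraph refers to.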

Software Engineering Best Practices That High-Performing Teams Follow

There's a legacy trope of the (usually male) virtuoso coder, a loner maverick who works in isolation, speaks to no one ... He gets a pass for all his misdeeds because his code is so good it could be read as a bedtime story. I'm here to say those days are over. I cringe a bit when I hear the phrase "coding is a team sport," but it's true. Being a good engineer is about being a good team member. General good work practices like being reliable and honest are important, as are owning up to your mistakes and not taking credit for someone else's work. It's about being able to prioritize your own tasks and meet deadlines. But it's also about how you relate to others on your team. Do people like working with you? If you aren't sociable, you can at least be respectful. Is your colleague stuck? Help them! You might feel smug that your knowledge or skills exceed theirs, but it's a bad look for the whole team if something ships with a bug or there's a huge delay. Support newbies, do your share of the boring work, and embrace practices like pair programming.

Top 10 DevOps Things to Be Thankful for in 2021

Low-code platforms: As the demand for applications to drive digital workflows spiked in the wake of the pandemic, professional developers relied more on low-code platforms to decrease the time required to build an application. ...

Microservices: The core concept behind microservices as an architecture, employing loosely coupled services to construct an application, goes all the way back to the 1990s, when service-oriented architecture (SOA) was expected to be the next big thing. Microservices have, of course, been around for several years themselves. ...

Observability: As a concept, observability traces its lineage to the control theory of linear dynamic systems. In its most basic form, observability measures how well the internal states of a system can be inferred from knowledge of its external outputs. In the past year, a wide range of IT vendors introduced various types of observability platforms. These make it easier for DevOps teams to query machine data in a way that enables them to proactively discover the root cause of issues before they cause further disruption.
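
For readers curious about the control-theory roots mentioned above: for a linear system with state equation x' = Ax and output y = Cx, the classical Kalman test says the internal state can be fully inferred from the outputs exactly when the observability matrix [C; CA; ...; CA^(n-1)] has rank n, where n is the number of state variables. The pure-Python sketch below applies that test using exact fractions. The function names are illustrative, and this is the textbook definition, not anything an observability platform computes. In the example, a double integrator (position and velocity) is observable when position is measured, because velocity can be inferred from how position changes, but not when only velocity is measured, since the starting position can never be recovered.

```python
from fractions import Fraction

Matrix = list[list[Fraction]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    return [
        [sum((a[i][k] * b[k][j] for k in range(len(b))), Fraction(0)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def rank(m: Matrix) -> int:
    """Matrix rank via Gauss-Jordan elimination over exact fractions."""
    rows = [row[:] for row in m]
    r = 0
    for col in range(len(rows[0])):
        # Find a row at or below r with a non-zero entry in this column
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def is_observable(a: list[list[int]], c: list[list[int]]) -> bool:
    """Kalman rank test for x' = Ax, y = Cx: stack C, CA, ..., CA^(n-1) and check for rank n."""
    a_frac = [[Fraction(x) for x in row] for row in a]
    block = [[Fraction(x) for x in row] for row in c]
    n = len(a_frac)
    obs: Matrix = []
    for _ in range(n):
        obs.extend(block)
        block = matmul(block, a_frac)
    return rank(obs) == n


if __name__ == "__main__":
    a = [[0, 1], [0, 0]]  # double integrator: state = (position, velocity)
    print(is_observable(a, [[1, 0]]))  # measure position only -> True
    print(is_observable(a, [[0, 1]]))  # measure velocity only -> False
```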

How to Save Money on Pen Testing - Part 1

The quality of the report is the most important criterion for me when choosing a pen test vendor, provided they have adequately skilled testers. It's the report that your organization will be left with when the testers have moved on to their next engagement. Penetration testing is expensive, and the pre-canned "advice" delivered in a pen test report is often worthless and alarmist. I know; I've written my fair share of pen test reports in the past. Terms like "implement best practice" do nothing to drive the change needed to uplift an organization's security posture. Look for reports that deliver pragmatic remediation advice, including configuration and code snippets. Most importantly, review sample reports for alarmist findings such as cookie flag issues rated as "High Risk", a pet hate of mine. ... Also, look for vendors that take reporting further by integrating with your ticketing system to raise tickets for the issues they find, or that provide videos of their hacks, which can show how simply an attacker can exploit technical security issues.
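
As an illustration of the kind of pragmatic remediation snippet worth looking for, the sketch below sets the `Secure`, `HttpOnly` and `SameSite` attributes on a session cookie using Python's standard `http.cookies` module (the `samesite` attribute requires Python 3.8 or later). It assumes, hypothetically, that the application builds its own `Set-Cookie` headers; web frameworks usually expose equivalent settings in their configuration. The cookie name, value, and helper function are made up for the example.

```python
from http.cookies import SimpleCookie


def hardened_session_cookie(session_id: str) -> str:
    """Build a Set-Cookie header for a hypothetical session cookie with protective flags set."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    morsel = cookie["session"]
    morsel["path"] = "/"
    morsel["secure"] = True     # only sent over HTTPS
    morsel["httponly"] = True   # not readable from JavaScript
    morsel["samesite"] = "Lax"  # withheld from most cross-site requests
    return morsel.output()      # full "Set-Cookie: ..." header line


if __name__ == "__main__":
    print(hardened_session_cookie("abc123"))
```

A concrete fix of this size is easy to turn into a ticket, which is exactly what vague advice such as "implement best practice" fails to provide.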

AI will soon oversee its own data management

AI brings unique capabilities to each step of the data management process, not just by virtue of its ability to sift through massive volumes looking for salient bits and bytes, but by the way it can adapt to changing environments and shifting data flows. For instance, according to David Mariani, founder and chief technology officer of AtScale, in the area of data preparation alone, AI can automate key functions like matching, tagging, joining, and annotating. From there, it is adept at checking data quality and improving integrity before scanning volumes to identify trends and patterns that would otherwise go unnoticed. All of this is particularly useful when the data is unstructured. One of the most data-intensive industries is health care, with medical research generating a good share of the load. Small wonder, then, that clinical research organizations (CROs) are at the forefront of AI-driven data management, according to Anju Life Sciences Software. For one thing, it’s important that data sets are not overlooked or simply discarded, since doing so can throw off the results of extremely important research.

Why digital transformation success depends on good governance

Since digital innovation is by its very nature new, business leaders should ensure that the policies, processes and governance models they use support digitalisation rather than block it, and are commensurate with the technologies being utilised. Appointing a core team of accountable leaders will help create focus and clarity around governance responsibilities. As governance champions, they can ensure every transformation project begins with a governance mindset and is guided by behaviours that include a desire to ‘do the right thing’. As part of this process, digitalising governance processes and control mechanisms will help reduce the risk of compliance failures. Today’s governance platforms can help remove the guesswork from digital governance programmes, making it possible to devise highly structured frameworks that reduce systemic risk. This enables organisations to rise to the challenge of becoming digital-first in a truly ethical and streamlined way.

Quote for the day:

"A leader should demonstrate his thoughts and opinions through his actions, not through his words." -- Jack Weatherford
