A New Quantum Computing Method Is 2,500 Percent More Efficient

Today, most quantum computers can only handle the simplest and shortest algorithms, since they are so wildly error-prone. In recent algorithmic benchmarking experiments run by the U.S. Quantum Economic Development Consortium, the errors observed in hardware systems were so serious that the computers produced outputs statistically indistinguishable from random chance. That's not something you want from your computer. But by using specialized software to alter the building blocks of quantum algorithms, known as "quantum logic gates," the company Q-CTRL found a way to reduce computational errors to an unprecedented degree, according to the release. The new results were obtained on several IBM quantum computers. They also showed that the new quantum logic gates were more than 400 times more effective at suppressing computational errors than any previously reported method. It's difficult to overstate how much this simplifies the path for users to achieve vastly improved performance on quantum devices.

Design Patterns for Machine Learning Pipelines

Design patterns for ML pipelines have evolved several times in the past decade. These changes are usually driven by imbalances between memory and CPU performance. ML pipelines are also distinct from traditional data processing pipelines (such as MapReduce) because they need to support the execution of long-running, stateful tasks associated with deep learning. As dataset sizes outpace memory availability, we have seen more ETL pipelines designed with distributed training and distributed storage as first-class principles. Not only can these pipelines train models in parallel using multiple accelerators, but they can also replace traditional distributed file systems with cloud object stores. Along with our partners from the AI Infrastructure Alliance, we at Activeloop are actively building tools, such as the open-source dataset format for AI, to help researchers train arbitrarily large models over arbitrarily large datasets. ... Even though the transfer-speed problem remained, this design pattern is widely considered the most feasible technique for working with petascale datasets.
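The storage side of this pattern can be sketched in Python. This is a minimal illustration, not Activeloop's implementation: a plain dict stands in for a cloud object store, and `fetch_shard`, `stream_batches`, and `shard_keys_for_worker` are hypothetical names. Each distributed-training worker streams only its own shards and prefetches a few ahead, so the full dataset never has to fit in memory.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterator, List

# Hypothetical stand-in for a cloud object store: object key -> samples.
# A real pipeline would issue GET requests against S3, GCS, etc. instead.
ObjectStore = Dict[str, List[int]]


def shard_keys_for_worker(keys: List[str], rank: int, world_size: int) -> List[str]:
    """Give each distributed-training worker a disjoint subset of shards."""
    return keys[rank::world_size]


def fetch_shard(store: ObjectStore, key: str) -> List[int]:
    """Simulate downloading one shard (network-bound in practice)."""
    return list(store[key])


def stream_batches(store: ObjectStore, keys: List[str], batch_size: int,
                   prefetch: int = 2) -> Iterator[List[int]]:
    """Yield fixed-size batches while downloading up to `prefetch` shards
    ahead, so only a few shards are held in memory at any time."""
    buffer: List[int] = []
    with ThreadPoolExecutor(max_workers=prefetch) as pool:
        # Fill the prefetch window, then top it up as each shard is consumed.
        pending = [pool.submit(fetch_shard, store, k) for k in keys[:prefetch]]
        next_idx = len(pending)
        while pending:
            shard = pending.pop(0).result()  # consume shards in key order
            if next_idx < len(keys):
                pending.append(pool.submit(fetch_shard, store, keys[next_idx]))
                next_idx += 1
            buffer.extend(shard)
            while len(buffer) >= batch_size:
                yield buffer[:batch_size]
                buffer = buffer[batch_size:]
    if buffer:
        yield buffer  # final, possibly smaller, batch


if __name__ == "__main__":
    store = {f"shard-{i}": list(range(i * 4, i * 4 + 4)) for i in range(4)}
    my_keys = shard_keys_for_worker(sorted(store), rank=0, world_size=2)
    for batch in stream_batches(store, my_keys, batch_size=3):
        print(batch)
```

Overlapping downloads with consumption is what keeps accelerators busy despite the transfer-speed problem mentioned above; the prefetch depth trades memory for latency hiding.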

Daily Standup Meetings are useless

Standups are not about technical details, even though some technical context can help frame emerging complexity and allow the PjM and Leads to take the steps needed to help you achieve your tasks (additional meetings, extending the deadline, re-estimating the task within the sprint, setting up pair programming sessions, etc.). According to the official Scrum Guide, the Daily Scrum is a 15-minute event for the Developers of the Scrum Team. The purpose of the Daily Scrum is to inspect progress toward the Sprint Goal and produce an actionable plan for the next day of work. This creates focus and improves self-management. Daily Scrums improve communications, identify impediments, promote quick decision-making, and consequently eliminate the need for other meetings.

Honestly, I am not so sure about the last point. Because of the short time allocated to it, the Daily Scrum can indeed generate other meetings: follow-ups between the Tech Lead and (some of) the developers, between the team and the stakeholders, or among developers who decide to tackle an issue with pair programming.

When Is the Waterfall Methodology Better Than Agile?

Is waterfall ever better? The short answer is yes. Waterfall is more efficient, more streamlined, and faster for specific types of projects like these:

- Generally speaking, the smaller the project, the better suited it is to waterfall development. If you’re only working with a few hundred lines of code, or if the scope of the project is limited, there’s no reason to take the continuous phased approach.
- Low-priority projects, those with minimal impact, don’t need much outside attention or group coordination. They can easily be planned and knocked out with a waterfall methodology.
- One of the biggest advantages of agile development is that your clients get to be an active part of the development process. But if you don’t have any clients, that advantage disappears. If you’re working internally, there are fewer voices and opinions to worry about, which means waterfall might be a better fit.
- Similarly, if the project has few stakeholders, waterfall can work better than agile. If you’re working with a council of managers or an entire team of decision makers, agile is almost a prerequisite.

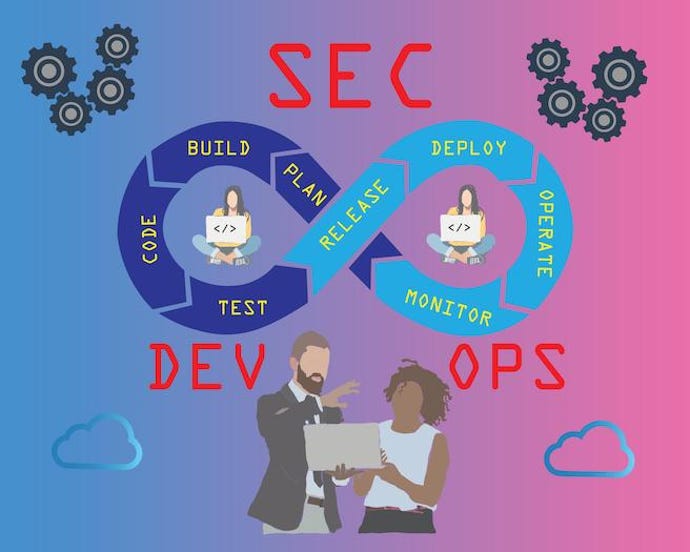

To Secure DevOps, Security Teams Must be Agile

Focusing on a pipeline using infrastructure-as-code allows security teams to build in static analysis tools to catch vulnerabilities early, dynamic analysis tools to catch issues in staging and production, and policy enforcement tools to continuously validate that the infrastructure is compliant, Leitersdorf said. "If you think about how security can be done now, instead of doing security at the tail end of the process ... you can now do security from the beginning through every step in the process all the way to the end. Most security issues will be caught very early on, and then a handful of them will be caught in the live environment and then remediated very quickly," he said. Developers get to retain their speed of development and deployment of applications and, at the same time, reduce the time to remediate security issues. And security teams get to collaborate more closely with DevOps teams, he said. "From a security team perspective, you feel better, you feel more confident, you have guardrails around your developers to reduce the chance of making mistakes along the way and building insecure infrastructure and you now have visibility into their DevOps process, a huge bonus," Leitersdorf said.
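As a rough illustration of the policy-enforcement step, here is a minimal policy-as-code sketch in Python. It assumes the infrastructure definitions have already been parsed into plain dictionaries; the resource fields and rule names are hypothetical, and dedicated policy engines such as Open Policy Agent use their own policy languages rather than code like this.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical, simplified view of parsed infrastructure-as-code resources.
Resource = Dict[str, Any]


@dataclass(frozen=True)
class Violation:
    resource: str
    rule: str


def bucket_not_public(r: Resource) -> bool:
    return not (r["type"] == "bucket" and r.get("public", False))


def bucket_encrypted(r: Resource) -> bool:
    return r["type"] != "bucket" or bool(r.get("encrypted", False))


def no_ssh_from_anywhere(r: Resource) -> bool:
    return not (r["type"] == "firewall"
                and 22 in r.get("open_ports", [])
                and "0.0.0.0/0" in r.get("source_ranges", []))


# Each rule returns True when the resource complies.
RULES: Dict[str, Callable[[Resource], bool]] = {
    "bucket-not-public": bucket_not_public,
    "bucket-encrypted": bucket_encrypted,
    "no-ssh-from-anywhere": no_ssh_from_anywhere,
}


def check(resources: List[Resource]) -> List[Violation]:
    """Run every rule against every resource; an empty list means compliant."""
    return [Violation(r["name"], rule)
            for r in resources
            for rule, passes in RULES.items()
            if not passes(r)]


if __name__ == "__main__":
    plan = [
        {"name": "logs", "type": "bucket", "public": True, "encrypted": True},
        {"name": "admin-fw", "type": "firewall", "open_ports": [22],
         "source_ranges": ["0.0.0.0/0"]},
    ]
    for v in check(plan):
        print(f"{v.resource}: {v.rule}")
    # A CI job would fail the build here if check(plan) were non-empty.
```

Running the same checks on every commit is what turns them into the "guardrails" Leitersdorf describes: a misconfiguration is reported before it reaches the live environment.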

How to Avoid Vulnerabilities in Your Code

In general, the "minor problems" are precisely the ones that lead to the most extensive security disasters, and failures usually stem from two gaps:

- Code vulnerabilities, introduced intentionally or unintentionally: flaws such as insecure design, injection, and security misconfiguration, all of which appear in the OWASP Top 10.
- Operational vulnerabilities: among the most common problems are "weak passwords", default passwords, or no password at all. A second common failure is the mismanagement of people's permissions to a document or system.

These types of problems, unfortunately, are pretty common. Not by chance, 75% of Redis servers have issues of this type. As an analogy, security flaws are like the case of the Titanic. The Titanic is considered one of the biggest shipwrecks, yet most people are unaware that the ship had a "small problem": the lack of a simple key that could have opened the compartment holding the binoculars and other devices that would have helped the crew spot the iceberg in time and avoid the collision.
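To make the injection category concrete, here is a minimal Python sketch using the standard-library `sqlite3` module; the `users` table and both function names are hypothetical. It contrasts building SQL by string formatting with passing user input through a parameter placeholder.

```python
import sqlite3
from typing import List, Tuple


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> List[Tuple[int, str]]:
    # VULNERABLE: user input is pasted into the SQL text, so a crafted
    # value can change the query's logic (injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}' ORDER BY id"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Tuple[int, str]]:
    # SAFE: the "?" placeholder makes the driver bind `name` as a value,
    # never as SQL, whatever characters it contains.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ? ORDER BY id", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row leaks
    print(find_user_safe(conn, payload))    # no rows match
```

The unsafe version turns the payload into `WHERE name = 'x' OR '1'='1'`, which matches every row; the parameterized version simply looks for a user literally named `x' OR '1'='1`.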

Taking Threat Detection and Response to the Next Level with Open XDR

XDR fundamentally brings all the anchor tenets required to detect and respond to threats into a simple, seamless user experience for analysts that automates repetitive work. Bringing together all the required context enables analysts to take action quickly, without getting lost in a myriad of use cases, screens, workflows, and search languages. It can also help security analysts respond quickly without creating endless playbooks to cover every possible scenario. XDR unifies insights from endpoint detection and response (EDR), network data, and security analytics logs and events, as well as other solutions such as cloud workload and data protection, to provide a complete picture of potential threats. XDR incorporates automation for root cause analysis and recommended response, which is critical for responding quickly and with confidence across a complex IT and security infrastructure. Whether your primary challenge is the complexity of tools, data, and workflows or preventing a ransomware actor from moving laterally across your environment, quickly detecting and containing threats is of the essence.
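The cross-source correlation idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular XDR product works: the `Event` schema, the source labels, and the 300-second window are assumptions. Alerts from different tools are first normalized into one schema, then grouped per host, and a host is flagged when several independent sources report activity close together in time.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    """One alert normalized into a shared schema (hypothetical fields)."""
    source: str  # e.g. "edr", "network", "cloud"
    host: str
    ts: int      # Unix timestamp, seconds
    kind: str


def correlate(events: List[Event], window: int = 300,
              min_sources: int = 2) -> Dict[str, List[Event]]:
    """Flag a host when at least `min_sources` different telemetry sources
    report activity on it within `window` seconds; return the first such
    group of events per host as the incident's context."""
    by_host: Dict[str, List[Event]] = defaultdict(list)
    for e in events:
        by_host[e.host].append(e)

    incidents: Dict[str, List[Event]] = {}
    for host, evs in by_host.items():
        evs.sort(key=lambda e: e.ts)
        start = 0
        for end in range(len(evs)):
            # Shrink the window from the left until it spans <= `window` seconds.
            while evs[end].ts - evs[start].ts > window:
                start += 1
            group = evs[start:end + 1]
            if len({e.source for e in group}) >= min_sources:
                incidents[host] = group
                break
    return incidents


if __name__ == "__main__":
    alerts = [
        Event("edr", "web-1", 100, "suspicious_process"),
        Event("network", "web-1", 250, "connection_to_rare_ip"),
        Event("edr", "db-1", 100, "suspicious_process"),
        Event("network", "db-1", 1000, "connection_to_rare_ip"),
    ]
    for host, group in correlate(alerts).items():
        print(host, [f"{e.source}:{e.kind}" for e in group])
```

Requiring agreement between independent sources is one simple way to cut noise: a lone EDR alert on `db-1` is not escalated, while EDR plus network activity on `web-1` within five minutes is.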

Why Your Code Needs Abstraction Layers

By creating your abstraction in one layer, everything related to it is centralized, so any changes can be made in one place. Centralization is related to the “Don’t repeat yourself” (DRY) principle, which is easily misunderstood. DRY is not only about duplication of code but also of knowledge. Sometimes it’s fine for two different entities to have the same code duplicated, because this achieves isolation and allows those entities to evolve separately in the future. ... By creating the abstraction layer, you expose a specific piece of functionality and hide implementation details. Code can now interact directly with your interface and avoid dealing with irrelevant implementation details. This improves readability and reduces the cognitive load on developers reading the code. Why? Because policy is less complex than its details, so interacting with it is more straightforward. ... Abstraction layers are great for testing, as you get the ability to replace one set of details with another, which helps isolate the areas being tested and makes it easy to create proper test doubles.
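Here is a minimal Python sketch of these ideas, assuming a hypothetical user-lookup feature: `typing.Protocol` defines the abstraction, a SQLite-backed class supplies one set of details, and an in-memory class supplies another that doubles as a test double. The business logic in `welcome_message` never touches SQL.

```python
import sqlite3
from typing import Dict, Optional, Protocol


class UserDirectory(Protocol):
    """The abstraction: the one capability callers need, no storage details."""
    def email_for(self, user_id: int) -> Optional[str]: ...


class SqliteUserDirectory:
    """A concrete set of details backed by SQLite."""
    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn

    def email_for(self, user_id: int) -> Optional[str]:
        row = self._conn.execute(
            "SELECT email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


class FakeUserDirectory:
    """A test double: same interface, in-memory details."""
    def __init__(self, emails: Dict[int, str]) -> None:
        self._emails = dict(emails)

    def email_for(self, user_id: int) -> Optional[str]:
        return self._emails.get(user_id)


def welcome_message(directory: UserDirectory, user_id: int) -> str:
    """Business logic written only against the abstraction."""
    email = directory.email_for(user_id)
    return f"Welcome, {email}!" if email else "Unknown user"


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.execute("INSERT INTO users (id, email) VALUES (1, 'ada@example.com')")
    print(welcome_message(SqliteUserDirectory(conn), 1))
    print(welcome_message(FakeUserDirectory({1: "ada@example.com"}), 2))
```

Because `Protocol` uses structural typing, neither implementation has to inherit from `UserDirectory`; any class with a matching `email_for` method satisfies the interface, which keeps test doubles lightweight.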

How to get more women in tech and retain them

When it comes to the demand side, although much progress has been made in moving diversity up the corporate agenda, there still needs to be a seismic shift in employment practices. Specifically, there needs to be a sweeping change in mindset amongst hiring managers, who still often hire based on ‘cultural fit’, as well as a new approach to how companies help women break the glass ceiling, through mentoring, career development support and family-friendly policies. In recruitment, hiring for cultural fit tends to favour the status quo in a company, whether that relates to race, gender, age, socioeconomic level and so on. That makes it harder for anyone who doesn’t ‘fit the mould’ to get into sectors where they are currently under-represented. Instead, hiring managers ought to integrate ‘value fit’ into their hiring process. The values of a business are what drive the way it works, and can include everything from teamwork and collaboration to problem-solving and customer focus. Yes, you need to ensure your employees don’t clash with one another, but a value-fit approach will often lead to this outcome too.

How to Reduce Burnout in IT Security Teams

When our work and personal lives start to blur together, that is when we have to look at our setup. Working from home gives employees more flexibility and lets them focus on their work. It also cuts down on commute hours. However, throughout the pandemic, some of us have still been struggling to separate our work life from our personal life. Think about it: we sometimes take calls in our kitchen or bedroom. The kitchen and bedroom are personal spaces, not work spaces. Having a separate space for work helps a lot with balance. But not everyone has this privilege; as a consequence, the blurring of work and personal life can affect us, and burnout can creep in. However, if you section off part of a room as a work space and use only that spot for work, it does help. Lastly, no matter what, set work boundaries, such as turning off work equipment at 6 PM during the week and keeping it off all weekend, and keep those boundaries in place.

Quote for the day:

"The actions of a responsible executive are contagious." - Joe D. Batton
