Creating Time Crystals Using New Quantum Computing Architectures

“A time crystal is perhaps the simplest example of a non-equilibrium phase of matter,” said Yao, an associate professor of physics at UC Berkeley. “The QuTech system is perfectly poised to explore other out-of-equilibrium phenomena including, for example, Floquet topological phases.” These results follow on the heels of another time crystal sighting, also involving Yao’s group, published in Science several months ago. There, researchers observed a so-called prethermal time crystal, in which the subharmonic oscillations are stabilized by high-frequency driving. The experiments were performed in Monroe’s lab at the University of Maryland using a one-dimensional chain of trapped atomic ions, the same system in which the first signatures of time-crystalline dynamics were observed over five years ago. Interestingly, unlike the many-body localized time crystal, which represents an innately quantum Floquet phase, prethermal time crystals can exist as either quantum or classical phases of matter. Many open questions remain. Are there practical applications for time crystals? Can dissipation help extend a time crystal’s lifetime? And, more generally, how and when do driven quantum systems equilibrate?

Why Are Tree-Based Models Preferred in Credit Risk Modeling?

Credit risk models are used by financial organizations to assess the credit risk of potential borrowers. Based on the model's assessment, they decide whether to approve a loan and what interest rate to charge. As technology has progressed, new means of estimating credit risk have emerged, such as credit risk modelling in R and Python; applying up-to-date analytics and big data techniques is one of them. Other factors, such as economic growth and the creation of various categories of credit risk, have also shaped credit risk modelling. Machine learning makes more advanced modelling approaches, such as decision trees and neural networks, practical. These introduce nonlinearities into the model, allowing it to capture more complex relationships between variables. We chose to use an XGBoost model trained on features selected with the permutation importance technique. ML models, however, are often so complex that they are difficult to interpret. Because interpretability is critical in a highly regulated industry like credit risk assessment, we chose to combine XGBoost with logistic regression.
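The two-stage idea above (score features by permutation importance, then fit an interpretable logistic regression on the survivors) can be sketched without any third-party libraries. This is a minimal illustration under stated assumptions, not the authors' pipeline: permutation importance only needs a predict function, so in the described setup you would pass the fitted XGBoost model's predictor; here, to stay dependency-free, a hand-rolled logistic regression trained on all features stands in for it. The synthetic "loan" data, the 0.05 importance threshold, and every helper name are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(X, y, lr=0.5, epochs=200):
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def make_predict(w, b):
    # Hard 0/1 label at a 0.5 probability cut-off.
    return lambda xi: 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 0.5 else 0

def accuracy(predict, X, y):
    return sum(predict(xi) == yi for xi, yi in zip(X, y)) / len(y)

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when a single feature column is shuffled (model-agnostic)."""
    rng = random.Random(seed)
    base = accuracy(predict, X, y)
    scores = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            col = [xi[j] for xi in X]
            rng.shuffle(col)
            X_perm = [xi[:j] + [v] + xi[j + 1:] for xi, v in zip(X, col)]
            drops.append(base - accuracy(predict, X_perm, y))
        scores.append(sum(drops) / n_repeats)
    return scores

def synthetic_loans(n, rng):
    """Hypothetical data: features 0 and 1 drive default; features 2 and 3 are pure noise."""
    X, y = [], []
    for _ in range(n):
        xi = [rng.gauss(0, 1) for _ in range(4)]
        X.append(xi)
        y.append(1 if rng.random() < sigmoid(4 * xi[0] - 3 * xi[1]) else 0)
    return X, y

def pick(X, columns):
    return [[xi[j] for j in columns] for xi in X]

rng = random.Random(42)
X_train, y_train = synthetic_loans(600, rng)
X_test, y_test = synthetic_loans(300, rng)

# Stage 1: score every feature with the stand-in "complex" model.
w, b = fit_logistic(X_train, y_train)
importances = permutation_importance(make_predict(w, b), X_test, y_test)
selected = [j for j, s in enumerate(importances) if s > 0.05]

# Stage 2: refit an interpretable logistic regression on the selected features only.
w_sel, b_sel = fit_logistic(pick(X_train, selected), y_train)
for j, coef in zip(selected, w_sel):
    # exp(coef) is the change in default odds per one-unit increase in the feature.
    print(f"feature {j}: coef={coef:+.2f}, odds ratio={math.exp(coef):.2f}")
```

The payoff of the second stage is that each surviving coefficient converts directly into an odds ratio, which is the kind of explanation a regulator or credit officer can audit.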

Intelligent Automation: What’s the Missing Piece of AIOps?

Up until now, AIOps has mostly been used in the context of monitoring. Because the term has become a buzzword, people tend to associate it with creating baselines for your data and then alerting on any deviations, or with connecting multiple sources of information to find the root cause of a problem. These are powerful use cases, and they are allowing businesses to find correlations that they might not have found without AI. For example, you might find that poor bandwidth in a specific region led to an increase in tickets from customers in that location, or that idle cloud resources are quietly costing you storage or compute dollars, allowing you to make manual changes to optimize costs. However, in many ways, categorizing AIOps as just monitoring and detection doesn’t make it very different from the previous category of IT operations analytics, in which companies looked at operational data, including logs and security feeds, and aggregated it to make smarter decisions. To get more out of AIOps, companies need to move past simple monitoring use cases and look toward managing the cloud with the help of automation.
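The "create a baseline, then alert on deviations" pattern mentioned above can be illustrated with a trailing-window z-score. This is a deliberately simple sketch, assuming a single metric sampled at regular intervals; real AIOps platforms typically use richer models (seasonality, multiple correlated signals). The window size, threshold, and hourly ticket counts are all hypothetical.

```python
from statistics import mean, stdev

def detect_anomalies(series, window=12, threshold=3.0):
    """Flag points deviating from a trailing baseline by more than `threshold` std devs.

    Returns a list of (index, value, z_score) tuples.
    """
    alerts = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu = mean(baseline)
        # Floor the spread so a perfectly flat baseline does not cause division by zero.
        sigma = max(stdev(baseline), 1e-9)
        z = (series[i] - mu) / sigma
        if abs(z) > threshold:
            alerts.append((i, series[i], z))
    return alerts

# Hypothetical support tickets per hour for one region.
tickets_per_hour = [20, 22, 19, 21, 23, 20, 18, 22, 21, 19, 20, 22, 21, 58, 22, 20]
alerts = detect_anomalies(tickets_per_hour)
for i, value, z in alerts:
    print(f"hour {i}: {value} tickets (z = {z:.1f})")  # flags only hour 13, the spike to 58
```

Note that once the spike enters the trailing window it inflates the baseline's spread, so the hours right after it are not flagged; that trade-off is one reason production systems use more robust baselines.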

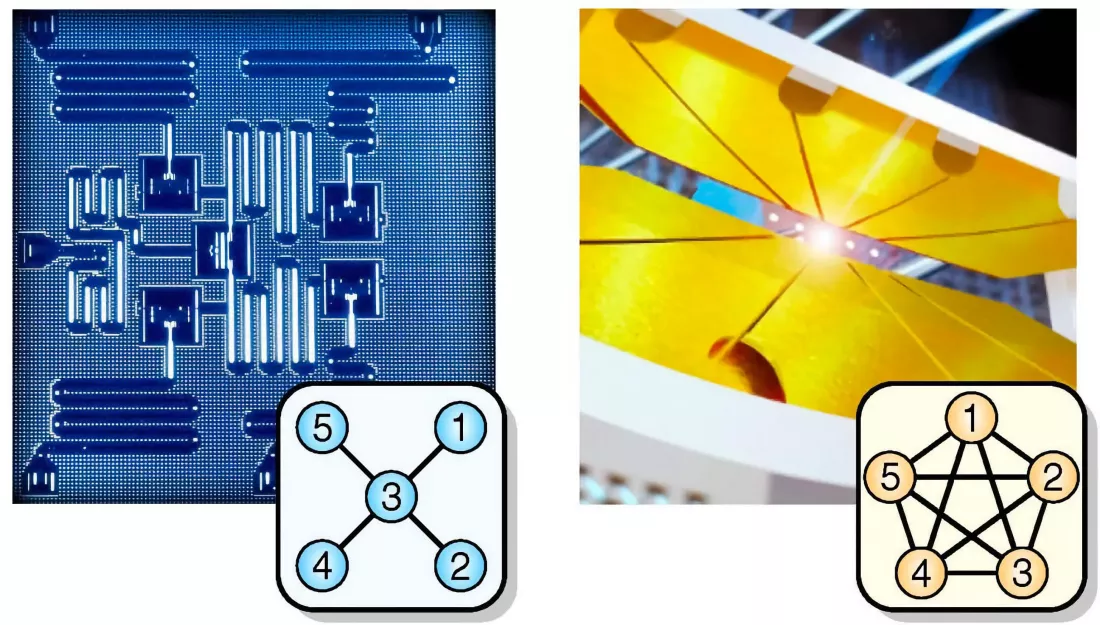

The State of Quantum Computing Systems

Quantum computing “systems” are still in development, and as such the entire system paradigm is in flux. While the race to quantum supremacy among nations and companies is picking up pace, it is still too early to call it a “competition.” Only a few potential qubit technologies are deemed practical, the programming environment is nascent, with abstractions that have not yet been fully developed, and relatively few (albeit extremely exciting) quantum algorithms are known to scientists and practitioners. Part of the challenge is that simulating quantum applications and technology on classical computers is very difficult and, at scale, nearly impractical -- doing so efficiently would imply that classical computers had themselves outperformed their quantum counterparts! Nevertheless, governments are pouring funding into this field to help push humanity into the next big era of computing. The past decade has brought impressive gains in qubit technologies, quantum circuits and compilation techniques are being realized, and this progress is leading to even more (good) competition toward the realization of full-fledged quantum computers.
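One concrete way to see why classical simulation becomes impractical: a brute-force state-vector simulator must store one complex amplitude for each of the 2^n basis states of n qubits, so memory doubles with every added qubit. The sketch below assumes double-precision complex numbers (16 bytes per amplitude, i.e. complex128); other simulation methods, such as tensor networks, can do better on some circuits, so treat this as the worst-case baseline.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    # A full state vector holds 2**n complex amplitudes; 16 bytes assumes complex128.
    return (2 ** num_qubits) * bytes_per_amplitude

def human_readable(nbytes: float) -> str:
    # Binary (1024-based) units, rounded to a whole number.
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if nbytes < 1024:
            return f"{nbytes:.0f} {unit}"
        nbytes /= 1024
    return f"{nbytes:.0f} EiB"

for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {human_readable(statevector_bytes(n))}")
```

This prints 16 KiB, 16 MiB, 16 GiB, 16 TiB, and 16 PiB respectively: every ten extra qubits multiply the memory by 1024, which is why a 50-qubit state vector is already far beyond a single machine.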

Build Your First Machine Learning Model With Zero Configuration — Exploring Google Colab

You may be wondering what the point of training an ML model is. Well, different use cases have different purposes. But in general, the purpose of training an ML model is to make predictions on data it has never seen. A model captures how to make good predictions, and the process of creating one is called training: using existing data to learn a proper way to make predictions. There are many different ways to build a model, such as k-nearest neighbors, SVC, random forest, and gradient boosting, just to name a few. For the purpose of this tutorial, which shows you how to build an ML model using Google Colab, let’s just use a model that’s readily available in sklearn: the random forest classifier. One thing to note is that we have only one dataset, so to test the model’s performance, we’ll split it into two parts, one for training and the other for testing. We can simply use sklearn's train_test_split function for this. The training dataset has 142 records, while the test dataset has 36 records, a ratio of approximately 4:1.
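The 142/36 split is consistent with a test share of 20%: in sklearn, `train_test_split(X, y, test_size=0.2)` rounds the number of test samples up, and ceil(0.2 × 178) = 36. The dependency-free sketch below mimics that shuffle-and-cut behaviour so the arithmetic is easy to check; `split_train_test` is a hypothetical helper, not part of sklearn, and the integer "records" stand in for the dataset's rows. In Colab itself you would simply call sklearn's function.

```python
import math
import random

def split_train_test(records, test_size=0.2, seed=42):
    """Shuffle a copy of `records` and cut it into (train, test).

    The test count is rounded up, as sklearn does for a fractional test_size.
    """
    n_test = math.ceil(test_size * len(records))
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    return shuffled[n_test:], shuffled[:n_test]

records = list(range(178))  # stand-in for 178 labeled rows
train, test = split_train_test(records)
print(len(train), len(test))  # 142 36
```

Shuffling before cutting matters: many bundled datasets are sorted by class, and slicing them without a shuffle would leave some classes absent from the test set.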

Have you traded on-premise lock-in for in-cloud lock-in?

In a global marketplace, being tied to one cloud service can be either impossible to achieve or intolerable to the business, so cloud vendor neutrality becomes important. Practicality is one driver for always being open to multiple cloud solutions. There’s also vendor strategy: each vendor will push you, hard, to opt for its version of key parts of the software stack, such as its cloud database. Amazon, say, doesn’t want you to move your Oracle apps onto Amazon; it wants you to use the Amazon database products instead. In and of itself, that’s not a crazy or risky decision; there are advantages to doing that if you are already an Amazon customer, and there will probably be economies of scale. It might even be cheaper (although that isn’t always the case with cloud contracts). This may feel like an easier solution to adopt, but it could be a real headache if you have committed to microservices and have all kinds of open source apps running all over the place (as you will want to, since it’s horses for courses here), and the database at the back is an Amazon database.

A CIO's Introduction to the Metaverse

Collaboration is one of three primary use cases for a metaverse in the enterprise right now, according to Forrester VP J.P. Gownder. Another primary use case is one championed by chip giant Nvidia -- simulations and digital twins. Nvidia CEO Jensen Huang announced Nvidia Omniverse Enterprise during his keynote address at the company’s GTC 2021 online AI conference this month and offered several use cases that focused on simulations and digital twins in industrial settings such as warehouses, plants, and factories. If you are an organization in an industry with expensive assets -- for instance oil and gas, manufacturing, or logistics -- it makes sense to have this use case on your radar, according to Gartner’s Nguyen. “That’s where augmented reality is benefiting enterprise right now,” he says. As an example, during his keynote address, Huang showed a video of a virtual warehouse, created with Nvidia Omniverse Enterprise, that enables an organization to visualize the impact of optimized routing in an automated order-picking scenario. That’s an example of one particular use case, but Omniverse itself is Nvidia’s platform for enabling organizations to create their own simulations or virtual worlds.

Misconfigured FBI Email System Abused to Run Hoax Campaign

The FBI says the misconfiguration involved the Law Enforcement Enterprise Portal, or LEEP, which allows state, local and federal agencies to share information, including sensitive documents. The portal also supports a Virtual Command Center, which allows law enforcement agencies to share real-time information about events such as shootings and child abductions. Although the abused email server is operated by the FBI, the bureau issued an updated statement Sunday noting that the server is not part of the bureau's corporate email service, and that no classified systems or personally identifiable information was compromised. "No actor was able to access or compromise any data or PII on the FBI's network," the FBI says. "Once we learned of the incident, we quickly remediated the software vulnerability, warned partners to disregard the fake emails, and confirmed the integrity of our networks." The hacker-crafted note, a copy of which has been released by Spamhaus, warned that data had potentially been exfiltrated.

Change management: 9 ways to build resilient teams

Resilience may be misunderstood as the ability to bounce back instantly from difficulties or to roll with any manner of punches. Defining resilience as mindless acceptance of workplace stressors, or encouraging such acceptance, is a recipe for burnout. “The problem is when leadership focuses on building a team’s resilience as a way to avoid addressing unnecessary causes of stress that are part of the organization’s culture,” says David R. Caruso, Ph.D., author of A Leader’s Guide to Solving Challenges with Emotional Intelligence. “We will not, nor should we, try to meditate our way out of a toxic culture. Buying everyone a yoga mat while failing to address sources of unnecessary stress is a problem.” Therefore, it’s important that IT leaders understand the state of affairs within the IT organization and actively address issues and drivers of burnout. “Burnout and change fatigue – disengagement that comes from constant or poorly managed change – are very real risks for team members and organizations,” says Noelle Akins, leadership coach and founder of Akins & Associates. “Resilience is not just non-stop adaptability.”

How Happiness Technology Is Affecting Our Everyday Lives

Improving employee happiness helps businesses, too. Utilizing this AI technology allows companies to track what's called "psychological capital," a phrase coined by professor Fred Luthans. Helping employees improve their scores not only makes people happier but also significantly increases productivity and profit for companies. But what about using AI to make us happier outside of work? Happiness technologies have their place in our personal lives as well. ... One problem that the business world has been working on for years is finding a reliable, discreet way to analyze the day-to-day activities of employees to increase productivity and engagement. Research clearly shows that happiness is correlated with better performance, so this trend of tracking and improving employee happiness has gained popularity. On the back of this trend, AI is being used for robotic process automation (RPA) and content intelligence.

Quote for the day:

"Leadership is a matter of having people look at you and gain confidence, seeing how you react. If you're in control, they're in control." -- Tom Landry
