Thoughtfully training SRE apprentices: Establishing Padawan and Jedi matches

Learning via osmosis is very powerful. Much of the jargon and many of the technical terms in this field are best learned just by hearing others use them in context. For example, if you ask someone who doesn’t work in technology to pronounce nginx, they will likely get it wrong. This is very common for new engineers too. It’s not a problem; it just means there is a lot to learn that experienced engineers may take for granted. What if you asked a group of people who don’t work in technology to spell nginx? I’m sure you’d get many different answers. How does this change in a remote world? Really, it doesn’t. You’ll still attend meetings and hear new terms, you can still attend standup, and you can still google the terms you don’t know to build your vocabulary. For example, imagine you are in a meeting on incident management and the team is reviewing metrics. As a new SRE apprentice, you might wonder what MTTD means. If you hear or see this term in a meeting, you can quickly google it and learn on the job.
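MTTD is commonly expanded as mean time to detect: the average time between an incident starting and the team noticing it. The Python below is a minimal sketch of the arithmetic behind such a metric. It computes MTTD from a small, hypothetical incident log. The field names `started` and `detected` and the timestamps are illustrative assumptions, not data from any real team.

```python
from datetime import datetime, timedelta

# Hypothetical incident log: when each incident started and when it was detected.
incidents = [
    {"started": datetime(2021, 5, 1, 10, 0), "detected": datetime(2021, 5, 1, 10, 12)},
    {"started": datetime(2021, 5, 3, 22, 30), "detected": datetime(2021, 5, 3, 22, 34)},
    {"started": datetime(2021, 5, 7, 8, 15), "detected": datetime(2021, 5, 7, 8, 35)},
]


def mean_time_to_detect(records):
    """Average of (detected - started) across all incidents."""
    if not records:
        raise ValueError("MTTD is undefined for an empty incident list")
    # Sum the detection gaps, starting from a zero timedelta.
    total = sum((r["detected"] - r["started"] for r in records), timedelta())
    return total / len(records)


mttd = mean_time_to_detect(incidents)
print(f"MTTD: {mttd.total_seconds() / 60:.1f} minutes")  # MTTD: 12.0 minutes
```

The three detection gaps are 12, 4 and 20 minutes, so the mean is 12 minutes. A team would usually track this over time rather than as a single number, since a falling MTTD suggests alerting is improving.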

Blockchain applications that will change the world in the next 5 years

Decentralized finance (DeFi) is another fast-growing application of blockchain technology that is set to gain significant momentum in the next five years. In Q1 2021, the dollar value of assets under management by DeFi applications grew from roughly $20 billion to $50 billion. DeFi is a form of finance that removes central intermediaries, such as banks, and offers traditional financial instruments through smart contracts on blockchains. One example of DeFi in action is the plethora of new decentralized applications offering easier access to digital loans: users can bypass the strict requirements of banks and engage in peer-to-peer lending with other people around the world. The next five years are vital for DeFi and will see dramatic growth in its applications, in regulatory compliance around the technology, and in its overall use. Celebrity investor Mark Cuban, who has become prominent in the blockchain industry through his advocacy of NFTs, has suggested that “banks should be scared” of DeFi’s rising popularity.

The remote-working challenge: ‘There are huge issues’

From an employee’s perspective, “WFH (Working From Home) has the potential to reduce commute time, provide more flexible working hours, increase job satisfaction, and improve work-life balance,” noted a recent University of Chicago study entitled Work from home & productivity: evidence from personnel & analytics data on IT professionals. That’s the theory, but it doesn’t always work out like that. The researchers tracked the activity of more than 10,000 employees at an Asian services company between April 2019 and August 2020. They found that the employees were working 30 per cent more hours than before the pandemic, and 18 per cent more unpaid overtime hours. But there was no corresponding increase in their workload, and their overall productivity per hour fell by 20 per cent. Employees with children, perhaps predictably, were the most affected: they worked 20 minutes more per day than those without. More surprisingly, the employees had less focus time than before the pandemic, and many more meetings. “Time spent on co-ordination activities and meetings increased, but uninterrupted work hours shrank considerably.”
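The study's headline figures can be sanity-checked with simple arithmetic. This treats total output as hours worked multiplied by output per hour, which is a simplifying assumption and not the study's own model. Under that assumption, a 30 per cent rise in hours combined with a 20 per cent fall in productivity per hour leaves output roughly flat:

```latex
\frac{\text{output}_{\text{pandemic}}}{\text{output}_{\text{before}}}
  = \frac{\text{hours}_{\text{pandemic}}}{\text{hours}_{\text{before}}}
    \times \frac{\text{output per hour}_{\text{pandemic}}}{\text{output per hour}_{\text{before}}}
  = 1.30 \times 0.80 = 1.04
```

In other words, under this simplified model roughly 30 per cent more time produced only about 4 per cent more output. That is in line with the study's finding that the extra hours came with no corresponding increase in workload.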

Quantum Computing: What’s it All About

Cluster API Offers a Way to Manage Multiple Kubernetes Deployments

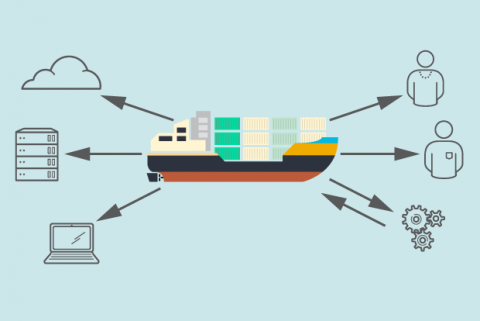

Initially, Cluster API is focused on projects that create tooling and on managed Kubernetes platforms, but in the long run it will become increasingly useful for organizations that want to build out their own Kubernetes platforms, Burns suggested. “It facilitates the infrastructure admin being able to provision a cluster for a user, in an automated fashion or even build the tooling to allow that user to self-service and say ‘hey, I want a cluster’ and press a button and the cluster pops out. By combining Cluster API with something like Logic Apps on Arc, they can come up to a portal, press a button, provision a Kubernetes cluster, get a no-code environment and start building their applications, all through a web browser.” ... “We’re maturing to a place where you don’t have to be an expert; where the person who just wants to put together a data source and a little bit of a function transformation and an output can actually achieve all of that in the environment where it needs to run, whether that’s an airstrip, or an oil rig or a ship or factory,” Burns said.

Behind the scenes: A day in the life of a cybersecurity expert

"My biggest challenge is how to determine what we need to work on next," Engel said. "There's only so much time in the world and you only have so much manpower. We have so many ideas that we want to execute and deliver to ensure security and privacy, and we don't like to rest on our laurels. We're not just going to say, 'oh, this is an eight out of 10, so we don't need to touch it anymore.' We want to be 10 out of 10 everywhere." As for the fun part, "analysis on security events is a lot of fun because it's my background," he said. "I love that kind of thing." He can't go into detail for security reasons, but it's an around-the-clock job. The automated systems can contact Engel at any time if something highly critical occurs, "like having a burglar alarm at your house or something like that," he said. "Someone's attempting to break in. And in this example, we are the police."

"A common misconception about cybersecurity is that it's literally just two people sitting in a dark room waiting for a screen to turn red, and then they maybe flick a couple buttons," Engel said. "It couldn't be further from the truth."

What is DataSecOps and why it matters

Security needs to be built into DataOps, not added as an afterthought. This means building cross-team, ongoing collaboration between security engineering, data engineering and other relevant stakeholders, and not just at the end of a big project. It also means that the security of data stores needs to be transparent to, and understood by, security teams. Third, in the ever-changing data world, and with limited resources, prioritization is key: you should plan and focus on the biggest risks first. In data, that often means knowing where your sensitive data is, which is not trivial, and prioritizing it much higher in terms of projects and resources. Fourth, data access needs a clear and simple policy. If data access permissions become too complicated or non-deterministic (by non-deterministic, I mean that sometimes you request access and get it, and sometimes you don't), you’re either blocking the business’s use of data or exposing it to security risks.

Improving microservice architecture with GraphQL API gateways

API gateways are nothing new to microservices. I’ve seen many developers use them to provide a single interface (and protocol) for client apps to get data from multiple sources. They can solve the problems previously described by providing a single API protocol and a single auth mechanism, and by ensuring that clients only need to speak to one team when developing new features. GraphQL API gateways, on the other hand, are a relatively new concept that has become popular lately. This is because GraphQL has a few properties that lend themselves beautifully to API gateways. GraphQL Mesh will act not only as our GraphQL API gateway but also as our data mapper. It supports different data sources, such as OpenAPI/Swagger REST APIs, gRPC APIs, databases, GraphQL (obviously), and more. It will take these data sources, transform them into GraphQL APIs, and then stitch them together. To demonstrate the power of a library like this, we will create a simple SpaceX Flight Journal API. Our app will record all the SpaceX launches we attended over the years.
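GraphQL Mesh performs the transformation and stitching for you. The plain-Python sketch below only illustrates the underlying gateway idea described above: one entry point, one auth check, and data merged from several backends. It is not GraphQL and not the GraphQL Mesh API. The two backend functions, the token table and the launch records are hypothetical stand-ins invented for illustration.

```python
from typing import Any, Dict, List

# Hypothetical stand-ins for two independent services. In a real system these
# would be network calls (for example, a REST API and a database query).
LAUNCH_CATALOGUE: Dict[str, Dict[str, Any]] = {
    "launch-1": {"mission": "Example Mission A", "year": 2019},
    "launch-2": {"mission": "Example Mission B", "year": 2020},
}
JOURNAL: Dict[str, List[str]] = {"alice": ["launch-2", "launch-1"]}


def launch_service(launch_id: str) -> Dict[str, Any]:
    """Hypothetical launch-details backend."""
    return LAUNCH_CATALOGUE[launch_id]


def journal_service(user: str) -> List[str]:
    """Hypothetical flight-journal backend: launch IDs a user attended."""
    return JOURNAL.get(user, [])


class Gateway:
    """Single entry point: one auth check, then data stitched from both services."""

    def __init__(self, tokens: Dict[str, str]) -> None:
        self.tokens = tokens  # hypothetical token -> user table

    def attended_launches(self, token: str) -> List[Dict[str, Any]]:
        user = self.tokens.get(token)
        if user is None:
            raise PermissionError("invalid token")
        # Ask the journal service which launches the user attended, then
        # enrich each ID with details from the launch service.
        return [{"id": lid, **launch_service(lid)} for lid in journal_service(user)]


gateway = Gateway({"token-123": "alice"})
print(gateway.attended_launches("token-123"))
```

Because authentication happens once at the gateway, the backends in this sketch never handle tokens themselves. In a GraphQL gateway, the client would also choose which fields to receive through its query instead of calling a fixed method like `attended_launches`.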

IT modernization: 5 truths now

Digital transformation got a lot of CIO attention this past year at events like the MIT Sloan CIO Symposium, and it still isn’t a product or a solution that anyone can buy. Rather, it’s best described as a continuous process involving new technologies, new ways of working, and a culture of experimentation. Fostering that culture leads to faster and more frequent experimentation and the ability to arrive at better outcomes through continuous improvement. But just because the technology component is often not front and center (and shouldn’t be) in digital transformation projects doesn’t mean that a technology toolbox, including a foundational platform, is unimportant. Anything but. If you look back at some of the words in that definition of digital transformation, it’s easy to see why traditional, rigid platforms, often intended to support monolithic, long-lived applications, might not fit the bill. Digital transformation is responsible in no small part for the accelerating adoption of both containerized environments and cloud services.

Building future-proof tech products and long-lasting customer relationships

When designing a new product, it is essential not only to look at current needs but also to anticipate changes in business and in computing models. A timeless design must leave room for expansion and adaptation as the industry evolves. Products designed in a non-portable manner are limited to specific deployment scenarios, for example on-prem only or cloud only. Of course, predicting movements in the industry is not easy. Many people bet on tape storage going away, yet it is still found in many data centres today. Some jumped on a new trend too soon and failed. Others did not recognise the value of what they had created. Take Xerox, for example, which created and then ignored the first personal computer. So, how do you go about creating enduring technology that makes a meaningful difference in people’s lives and businesses? First, stay close to analysts whose job is to analyse the market and identify major trends. Second, engage in deep conversations with end users to truly understand their objectives and challenges. Here, it is essential to discuss customers’ evolving needs and future projects, then work to create a product that solves for both the short and the long term.

Quote for the day:

"I think leadership's always been about two main things: imagination and courage." -- Paul Keating
