Scientists removed major obstacles in making quantum computers a reality

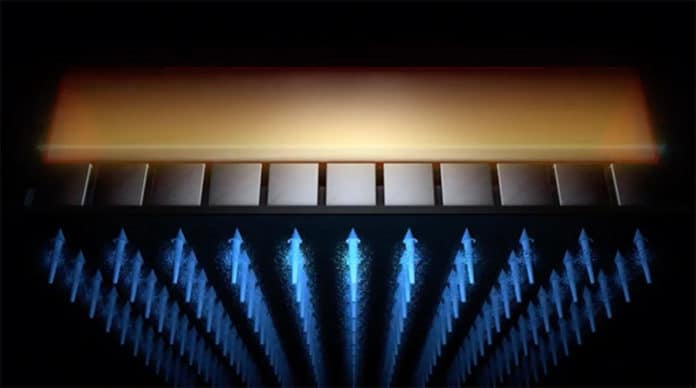

Spin-based silicon quantum electronic circuits offer a scalable platform for

quantum computation. They combine the manufacturability of semiconductor devices

with the long coherence times afforded by spins in silicon. Advancing from

current few-qubit devices to silicon quantum processors with upward of a million

qubits, as required for fault-tolerant operation, presents several unique

challenges. One of the most demanding is the ability to deliver microwave

signals for large-scale qubit control. ... Completely reimagining the silicon chip

structure is the solution to the problem. Scientists started by removing the

wire next to the qubits. They then applied a novel way to deliver

microwave-frequency magnetic control fields across the entire system. This

approach could provide control fields to up to four million qubits. Scientists

added a newly developed component: a crystal prism called a dielectric

resonator. When microwaves are directed into the resonator, it focuses the

wavelength of the microwaves down to a much smaller size.

Agile strategy: 3 hard truths

One of the primary challenges is that leadership can often be a barrier when an

organization is seeking to become more agile. According to last year’s Business

Agility Report from Scrum Alliance and the Business Agility Institute, this is

the most prevalent challenge that agile coaches report. Some reasons for this

include a lack of buy-in and support, resistance to change, having a mindset

that’s not conducive to agility, a lack of alignment between agile teams and

leadership, lack of understanding, and a deeply rooted organizational legacy

regarding management styles. Overcoming legacy structures, cultures, and

mindsets can be difficult. Some coaches have reported that leaders view agile as

being “for their staff” and not for them. Additionally, leaders may have

competing priorities – such as retaining control – which can hinder

organization-wide adoption of agile methodologies. Any leader considering an

agile transformation must understand that in order to succeed, full executive

buy-in is needed and that they too will need to change their way of working and

thinking.

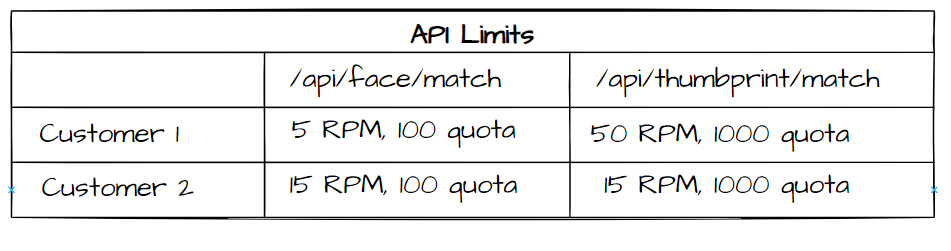

Custom Rate Limiting for Microservices

API providers use rate limit design patterns to enforce API usage limits on

their clients. This allows API providers to offer reliable service to their

clients. It also allows a client to control its API consumption. Rate

limiting, being a cross-cutting concern, is often implemented at the API

Gateway fronting the microservices. There are a number of API Gateway

solutions that offer rate-limiting features. In many cases, the custom

requirements expected of the API Gateway require developers to build their

own API Gateway. The Spring Cloud Gateway project provides a library for

developers to build an API Gateway to meet any specific needs. In this

article, we will demonstrate how to build an API Gateway using the Spring

Cloud Gateway library and develop custom rate limiting solutions. Consider a SaaS

provider that offers APIs to verify the credentials of a person through different

factors. Any organization that utilizes the services may invoke APIs to verify

credentials obtained from national ID cards, face images, thumbprints, etc.

The service provider may have a number of enterprise customers that have been

offered a rate limit (requests per minute) and a quota (requests per day),

depending on their contracts.
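
The contract described above, a per-minute rate limit plus a per-day quota, can be sketched with two fixed-window counters per client. This is a minimal illustration in plain Python, not Spring Cloud Gateway code; `ClientLimits`, `FixedWindowLimiter`, and `allow` are hypothetical names, and the sketch assumes a single process with in-memory state and that rejected requests do not count against either limit.

```python
from dataclasses import dataclass


@dataclass
class ClientLimits:
    """Hypothetical per-customer contract values."""
    per_minute: int  # rate limit: requests allowed in one minute window
    per_day: int     # quota: requests allowed in one day window


@dataclass
class FixedWindowLimiter:
    """Fixed-window counters for one client (assumed in-memory, single process)."""
    limits: ClientLimits
    _minute_window: int = -1
    _minute_count: int = 0
    _day_window: int = -1
    _day_count: int = 0

    def allow(self, now_seconds: float) -> bool:
        """Return True and count the request if both limits have room."""
        minute = int(now_seconds // 60)
        day = int(now_seconds // 86400)

        # Reset a counter whenever the clock has moved into a new window.
        if minute != self._minute_window:
            self._minute_window, self._minute_count = minute, 0
        if day != self._day_window:
            self._day_window, self._day_count = day, 0

        # Reject without counting if either limit is already used up.
        if self._minute_count >= self.limits.per_minute:
            return False
        if self._day_count >= self.limits.per_day:
            return False

        self._minute_count += 1
        self._day_count += 1
        return True
```

A gateway would typically keep one such limiter per customer key and call `allow(time.time())` before forwarding a request. Passing the timestamp in explicitly keeps the logic easy to test. A deployment with several gateway instances would need shared storage for the counters, which this sketch leaves out.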

Google Introduces Two New Datasets For Improved Conversational NLP

Conversational agents are dialogue systems that use NLP to respond to a given

query in human language. They leverage advanced deep learning methods and

natural language understanding to reach a point where conversational agents

can transcend simple chatbot responses and become more contextual.

Conversational AI encompasses three main areas of artificial intelligence

research — automatic speech recognition (ASR), natural language processing

(NLP), and text-to-speech (TTS or speech synthesis). These dialogue systems

are utilised to read from the input channel and then reply with the relevant

response in graphics, speech, or haptic-assisted physical gestures via the

output channel. Modern conversational models often struggle when confronted

with temporal relationships or disfluencies. The capability of temporal

reasoning in dialogs in massive pre-trained language models like T5 and GPT-3

is still largely under-explored. The progress on improving their performance

has been slow, in part, because of the lack of datasets that involve these

conversational and speech phenomena.

Addressing the cybersecurity skills gap through neurodiversity

Having a career in cybersecurity typically requires logic, discipline,

curiosity and the ability to solve problems and find patterns. This is an

industry that offers a wide spectrum of positions and career paths for people

who are neurodivergent, particularly for roles in threat analysis, threat

intelligence and threat hunting. Neurodiverse minds are usually great at

finding the needle in the haystack, the small red flags and minute details

that are critical for hunting down and analyzing potential threats. Other

strengths include pattern recognition, thinking outside the box, attention to

detail, a keen sense of focus, methodical thinking and integrity. The more

diverse your teams are, the more productive, creative and successful they will

be. And not only can neurodiverse talent help strengthen cybersecurity,

employing different minds and perspectives can also solve communication

problems and create a positive impact for both your team and your company.

According to the Bureau of Labor Statistics, the demand for Information

Security Analysts — one of the common career paths for cybersecurity

professionals — is expected to grow 31% by 2029, much higher than the average

growth rate of 4% for other occupations.

Realizing IoT’s potential with AI and machine learning

Propagating algorithms across an IIoT/IoT network to the device level is

essential for an entire network to achieve and maintain real-time

synchronization. However, updating IIoT/IoT devices with algorithms is

problematic, especially for legacy devices and the networks supporting them.

It’s essential to overcome this challenge in any IIoT/IoT network because

algorithms are core to AI edge succeeding as a strategy. Across manufacturing

floors globally today, there are millions of programmable logic controllers

(PLCs) in use, supporting control algorithms and ladder logic. Statistical

process control (SPC) logic embedded in IIoT devices provides real-time process

and product data integral to quality management succeeding. IIoT is actively

being adopted for machine maintenance and monitoring, given how accurate sensors

are at detecting sounds and any variation in the process performance of

a given machine. Ultimately, the goal is to predict machine downtimes better and

prolong the life of an asset.
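
The excerpt does not say which SPC rule such devices run. As one illustration, a simplified three-sigma check derives control limits from a baseline of in-control readings and flags later readings that fall outside them. The function names and the sample values below are hypothetical, and production control charts usually apply additional rules.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, upper) limits as mean +/- sigmas * sample std deviation."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(readings, limits):
    """Return the indices of readings that fall outside the control limits."""
    lower, upper = limits
    return [i for i, x in enumerate(readings) if x < lower or x > upper]


# Illustrative sensor values only (not from the article).
baseline = [10.0, 10.2, 9.8, 10.1, 9.9]
limits = control_limits(baseline)
flagged = out_of_control([10.0, 10.6, 9.4, 10.3], limits)
```

Here `flagged` holds the positions of the readings a maintenance system might treat as early warning signs.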

Understanding and applying robotic process automation

RPA can allow businesses to reallocate their employees, removing them from

repetitive tasks and engaging them in projects that support true growth, both

for the company and the individual. Work where human strengths such as emotional

intelligence, reasoning and judgment are required typically brings greater value

to the company, and it is also often more personally rewarding. This can

raise job satisfaction and help retain employees. Further, the ability to

reallocate employees can enable a business to apply their useful company

knowledge to other value-adding areas, supplement talent gaps and more. Of

course, there’s the attraction of being able to do one’s job more efficiently,

without manual processes that can make time drag. For instance, let’s say you’re

at that same investment firm and there is a rapidly growing hedge fund, requiring

human resources (HR) to onboard a lot of people fast. Between provisioning

accounts, providing access to the right tools, sending out emails and more,

there’s a lot of work involved. With an RPA bot, 20 new people could be processed

at once, with the HR person monitoring progress through a window in the corner

of their screen, which also notifies them if anything needs their attention.

It's time for AI to explain itself

Ultimately, organizations may not have much choice but to adopt XAI. Regulators

have taken notice. The European Union's General Data Protection Regulation

(GDPR) demands that decisions based on AI be explainable. Last year, the U.S.

Federal Trade Commission issued stringent guidelines around how such technology

should be used. Companies found to have bias embedded in their decision-making

algorithms risk violating multiple federal statutes, including the Fair Credit

Reporting Act, the Equal Credit Opportunity Act, and antitrust laws. "It is

critical for businesses to ensure that the AI algorithms they rely on are

explainable to regulators, particularly in the antitrust and consumer protection

space," says Dee Bansal, a partner at Cooley LLP, which specializes in antitrust

litigation. "If a company can't explain how its algorithms work [and] the

contours of the data on which they rely … it risks being unable to adequately

defend against claims regulators may assert that [its] algorithms are unfair,

deceptive, or harm competition." It's also just a good idea, notes James Hodson,

CEO of the nonprofit organization AI for Good.

AI ethics in the real world: FTC commissioner shows a path toward economic justice

The value of a machine learning algorithm is inherently related to the quality

of the data used to develop it, and faulty inputs can produce thoroughly

problematic outcomes. This broad concept is captured in the familiar phrase:

"Garbage in, garbage out." The data used to develop a machine-learning algorithm

might be skewed because individual data points reflect problematic human biases

or because the overall dataset is not adequately representative. Often skewed

training data reflect historical and enduring patterns of prejudice or

inequality, and when they do, these faulty inputs can create biased algorithms

that exacerbate injustice, Slaughter notes. She cites some high-profile examples

of faulty inputs, such as Amazon's failed attempt to develop a hiring algorithm

driven by machine learning, and the International Baccalaureate's and UK's

A-Level exams. In all of those cases, the algorithms introduced to automate

decisions kept identifying patterns of bias in the data used to train them and

attempted to reproduce them. ...

How to navigate technology costs and complexity with enterprise architecture

The modern business world is increasingly driven by technology. As we move to a

more interconnected and complex environment, the demand for suitable

technologies is increasing, so much so that an average enterprise pays

for approximately 1,516 applications. With a shift to remote working, we’re also

seeing an overwhelming imperative to migrate to the cloud, and today,

application costs are estimated to make up 80 per cent of the entire IT budget.

Industry analyst Gartner has even forecasted that worldwide IT spending will

reach $4 trillion in 2021. The modern chief information officer (CIO) is

responsible for understanding these technology costs and bringing them under

control – and a key enabler of this is enterprise architecture (EA). By

providing a strategic view of change, EA ensures alignment of the business and

IT operations, facilitating agility, speed and the ability to make real-time

decisions based on reliable and consistent data. So, what are the common

challenges of spiralling technology costs and how can EA help to reduce this

pressure for CIOs?

Quote for the day:

“Patience is the calm acceptance that

things can happen in a different order than the one you have in mind.” --

David G. Allen
