DevOps requires a modern approach to application security

As software development has picked up speed, organizations have deployed

automation to keep up, but many are having trouble working out the security

testing aspect of it. Current application security testing tools tend to scan

everything all the time, overwhelming and overloading teams with too much

information. If you look at all the tools within a CI pipeline, there are tools

from multiple vendors, including open-source tools that are able to work

separately, but together in an automated fashion while integrating with other

systems like ticketing tools. “Application security really needs to make that

shift in the same manner to be more more fine-grained, more service-oriented,

more modular and more automated,” said Carey. Intelligent orchestration and

correlation is a new approach being used to manage security tests, reduce the

overwhelming amount of information and let developers focus on what really

matters: the application. While the use of orchestration and correlation

solutions are not uncommon on the IT operations side for things like network

security and runtime security, they are just beginning to cross into the

application development and security side of things, Carey explained.

Databricks cofounder’s next act: Shining a Ray on serverless autoscaling

Simply stated, Ray provides an API for building distributed applications. It

enables any developer working on a laptop to deploy a model on a serverless

environment, where deployment and autoscaling are automated under the covers. It

delivers a serverless experience without requiring the developer to sign up for

a specific cloud serverless service or know anything about setting up and

running such infrastructure. A Ray cluster consists of a head node and a set of

worker nodes that can work on any infrastructure, on-premises or in a public

cloud. Its capabilities include an autoscaler that introspects pending tasks,

and then activates the minimum number of nodes to run them, and monitors

execution to ramp up more nodes or close them down. There is some assembly

required, however, as the developer needs to register to compute instance types.

Ray can start and stop VMs in the cloud of choice; the ray docs provide

information about how to do this in each of the major clouds and Kubernetes. One

would be forgiven for getting a sense that Ray is déjà vu all over again.

Stoica, who was instrumental in fostering Spark's emergence, is taking on a

similar role with Ray.

Akka Serverless is really the first of its kind

Akka Serverless provides a data-centric backend application architecture that

can handle the huge volume of data required to support today’s cloud native

applications with extremely high performance. The result is a new developer

model, providing increased velocity for the business in a highly cost-effective

manner leveraging existing developers and serverless cloud infrastructure.

Another huge bonus of this new distributed state architecture is that, in the

same way as serverless infrastructure offerings allow businesses to not worry

about servers, Akka Serverless eliminates the need for databases, caches, and

message brokers to be developer-level concerns. ... Developers can express their

data structure in code and the way Akka Serverless works makes it very

straightforward to think about the “bounded context” and model their services in

that way too. With Akka Serverless we tightly integrate the building blocks to

build highly scalable and extremely performant services, but we do so in a way

that allows developers to write “what” they want to connect to and let the

platform handle the “how”. As a best practice you want microservices to

communicate asynchronously using message brokers, but you don’t want all

developers to have to figure out how to connect to them and interact with

them.

Windows 11 enables security by design from the chip to the cloud

The Trusted Platform Module (TPM) is a chip that is either integrated into your

PC’s motherboard or added separately into the CPU. Its purpose is to help

protect encryption keys, user credentials, and other sensitive data behind a

hardware barrier so that malware and attackers can’t access or tamper with that

data. PCs of the future need this modern hardware root-of-trust to help protect

from both common and sophisticated attacks like ransomware and more

sophisticated attacks from nation-states. Requiring the TPM 2.0 elevates the

standard for hardware security by requiring that built-in root-of-trust. TPM 2.0

is a critical building block for providing security with Windows Hello and

BitLocker to help customers better protect their identities and data. In

addition, for many enterprise customers, TPMs help facilitate Zero Trust

security by providing a secure element for attesting to the health of devices.

Windows 11 also has out of the box support for Azure-based Microsoft Azure

Attestation (MAA) bringing hardware-based Zero Trust to the forefront of

security, allowing customers to enforce Zero Trust policies when accessing

sensitive resources in the cloud with supported mobile device managements (MDMs)

like Intune or on-premises.

Switcheo — Zilliqa bridge will be a game-changer for BUILDers & HODlers!

Currently, a vast majority of blockchains operate in silos. This means that many

blockchains can only read, transact, and access data within a singular

blockchain. This limits blockchain user experience and hinders user adoption.

Without interoperability, we have individual ecosystems where users and

developers have to choose which blockchain to interact with. Once they choose a

blockchain, they are limited to using its features and offerings. Not the most

decentralised environment to build on right? No blockchain should be an island —

and working alone doesn’t end well. We need to stay connected to different

protocols so ideas, dApps and users can travel across platforms conveniently.

With interoperability, users and developers can seamlessly transact with

multiple blockchains and benefit from those cross-chain ecosystems, application

offerings in areas like decentralised finance (DeFi), gaming, supply chain

logistics, etc. The list goes on. Interoperability creates the ability for users

and developers to not be stuck having to choose one blockchain over another, but

rather, they can benefit from multiple chains being able to interlink.

JSON vs. XML: Is One Really Better Than the Other?

Despite resolving very similar purposes, there are some critical differences

between JSON and XML. Distinguishing both can help decide when to opt for one or

the other and understand which is the best alternative according to specific

needs and goals. First, as previously mentioned, while XML is a markup language,

JSON, on the other hand, is a data format. One of the most significant

advantages of using JSON is that the file size is smaller; thus, transferring

data is faster than XML. Moreover, since JSON is compact and very easy to read,

the files look cleaner and more organized without empty tags and data. The

simplicity of its structure and minimal syntax makes JSON easier to be used and

read by humans. Contrarily, XML is often characterized for its complexity and

old-fashioned standard due to the tag structure that makes files bigger and

harder to read. However, JSON vs. XML is not entirely a fair comparison. JSON is

often wrongly perceived as a substitute for XML, but while JSON is a great

choice to make simple data transfers, it does not perform any processing or

computation.

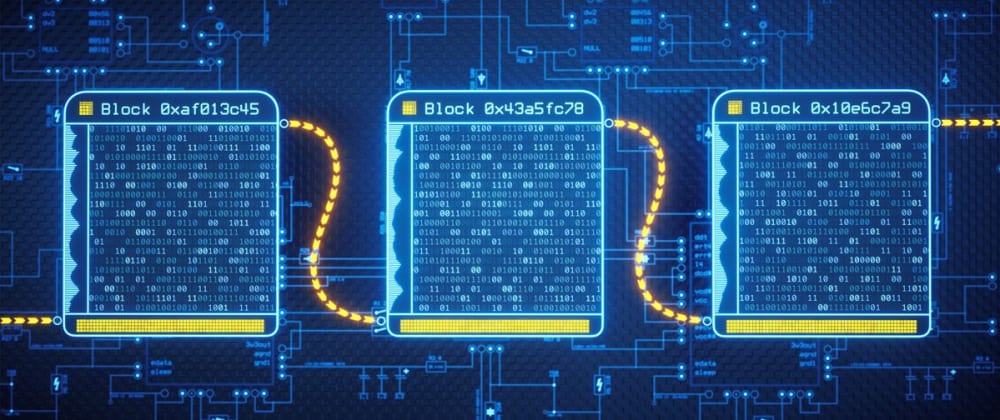

How to Build Your Own Blockchain in NodeJS

It can be helpful to think of blockchains as augmented linked lists, or arrays

in which each element points to the preceding array. Within each block

(equivalent to an element in an array) of the blockchain, there contains at

least the following: A timestamp of when the block was added to the

chain; Some sort of relevant data. In the case of a cryptocurrency, this data

would store transactions, but blockchains can be helpful in storing much more

than just transactions for a cryptocurrency; The encrypted hash of the block

that precedes it; and An encrypted hash based on the data contained

within the block(Including the hash of the previous block). The key component

that makes a blockchain so powerful is that embedded in each block's hash is

the data of the previous block (stored through the previous block's hash).

This means that if you alter the data of a block, you will alter its hash, and

therefore invalidate the hashes of all future blocks. While this can probably

be done with vanilla Javascript, for the sake of simplicity we are going to be

making a Node.js script and be taking advantage of Node.js's built-in Crypto

package to calculate our hashes.

5 Practices to Improve Your Programming Skills

Programmers have to write better code to impress hardware and other

programmers (by writing clean code). We have to write code that will perform

well in time and space factors to impress hardware. There are indeed several

approaches to solve the same software engineering problem. The

performance-first way motivates you to select the most practical and

well-performing solution. Performance is still crucial regardless of modern

hardware because accumulated minor performance issues may affect badly for the

whole software system in the future. Implementing hardware-friendly solutions

requires computer science fundamentals knowledge. The reason is that computer

science fundamentals teach us about how to use the right data structures and

algorithms. Choosing the right data structures and algorithms is the key to

success behind every complex software engineering project. Some performance

problems could stay hidden in the codebase. Besides, your performance test

suite may not cover those scenarios. Your goal should be to apply performance

patches when you spot such a problem always.

Containers Vs. Bare Metal, VMs and Serverless for DevOps

The workhorse of IT is the computer server on which software application

stacks run. The server consists of an operating system, computing, memory,

storage and network access capabilities; often referred to as a computer

machine or just “machine.” A bare metal machine is a dedicated server using

dedicated hardware. Data centers have many bare metal servers that are racked

and stacked in clusters, all interconnected through switches and routers.

Human and automated users of a data center access the machines through access

servers, high security firewalls and load balancers. The virtual machine

introduced an operating system simulation layer between the bare metal

server’s operating system and the application, so one bare metal server can

support more than one application stack with a variety of operating systems.

This provides a layer of abstraction that allows the servers in a data center

to be software-configured and repurposed on demand. In this way, a virtual

machine can be scaled horizontally, by configuring multiple parallel machines,

or vertically, by configuring machines to allocate more power to a virtual

machine.

Debunking Three Myths About Event-Driven Architecture

Event-driven applications are often criticized for being hard to understand

when it comes to execution flow. Their asynchronous and loosely coupled nature

made it difficult to trace the control flow of an application. For example, an

event producer does not know where the events it is producing will end up.

Similarly, the event consumer has no idea who produced the event. Without the

right documentation, it is hard to understand the architecture as a whole.

Standards like AsyncAPI and CloudEvents help document event-driven

applications in terms of listing exposed asynchronous operations with the

structure of messages they produce or consume and the event brokers they are

associated with. The AsyncAPI specification produces machine-readable

documentation for event-driven APIs, just as the Open API Specification does

for REST-based APIs. It documents event producers and consumers of an

application, along with the events they exchange. This provides a single

source of truth for the application in terms of control flow. Apart from that,

the specification can be used to generate the implementation code and the

validation logic.

Quote for the day:

"Leadership is being the first egg in

the omelet." -- Jarod Kintz

No comments:

Post a Comment