How the public sector can accelerate digital discovery

Firstly, public bodies should focus on outcomes rather than output. By identifying where an immediate impact can be made to address the challenges of legacy technology – rather than trying to fix everything at once – you can empower digital partners and discovery teams to identify issues and make key decisions without blockers from other teams, existing structures or business areas. Removing this red tape means decisions and actions can be taken at pace, delivering greater value and results rather than complicated services that users struggle to navigate.

The next focus for enhancing digital discovery should be diversity: building and working with project teams that cover a wide range of disciplines and skill sets, as well as ages, races and genders. Increased diversity means that a discovery team benefits from different experiences and frames of reference. This helps the team avoid conformity and groupthink, which can cause issues to be missed or solutions to go unconsidered when everyone is thinking along the same lines. For example, including people from non-digital backgrounds in a discovery team, such as service users, helps to identify problems that might otherwise be missed.

Delivering the Highest Quality of Experience in a Multi-Cloud World

The global pandemic has accelerated enterprise IT teams’ desire to simplify the management of complex multi-cloud and edge environments and to operate them holistically as a single WAN. It is also driving IT requirements for the highest levels of application performance for all cloud-hosted business applications, from any network, in the emerging post-pandemic environment. This shift is intensifying the urgency to transform conventional data-center-centric, MPLS- and VPN-based networks into a more modern hybrid SD-WAN environment that combines MPLS and internet connectivity with secure, managed, internet-based cloud services. In a hybrid WAN environment, application performance can vary considerably from site to site or region to region. Underlying factors such as latency, packet loss and jitter must be taken into account, especially when mixing MPLS and broadband connectivity services. The Aruba EdgeConnect SD-WAN edge platform, which came from the acquisition of Silver Peak, supports advanced visibility, routing, control and intent-based policy management for any application. It improves the performance and availability of business applications by dynamically routing traffic to virtually any site and automatically adapting to real-time network conditions.
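The next sketch shows what "dynamically routing traffic ... adapting to real-time network conditions" can look like. It is a minimal Python version of per-application path selection and assumes each WAN path reports measured latency, packet loss and jitter. The `PathStats` and `AppPolicy` names, the thresholds and the scoring weights are hypothetical illustrations. They are not Aruba EdgeConnect's actual algorithm or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PathStats:
    """Hypothetical real-time measurements for one WAN path (e.g. MPLS, broadband, LTE)."""
    name: str
    latency_ms: float
    loss_pct: float
    jitter_ms: float


@dataclass
class AppPolicy:
    """Hypothetical intent-based policy: the worst conditions an application tolerates."""
    max_latency_ms: float
    max_loss_pct: float
    max_jitter_ms: float


def meets_policy(path: PathStats, policy: AppPolicy) -> bool:
    return (path.latency_ms <= policy.max_latency_ms
            and path.loss_pct <= policy.max_loss_pct
            and path.jitter_ms <= policy.max_jitter_ms)


def score(path: PathStats) -> float:
    # Lower is better; the weights are illustrative assumptions, not vendor values.
    return path.latency_ms + 2 * path.jitter_ms + 50 * path.loss_pct


def select_path(paths: List[PathStats], policy: AppPolicy) -> PathStats:
    """Pick the best path that satisfies the policy, falling back to best effort."""
    eligible = [p for p in paths if meets_policy(p, policy)]
    return min(eligible or paths, key=score)
```

Re-running `select_path` whenever new measurements arrive is what makes the routing adaptive. For example, if MPLS latency spikes past a voice policy's limit, traffic shifts to whichever other path still satisfies the policy.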

Intelligence gathering: Bringing AI technology into strategic planning

While theories governing corporate strategy have been debated (and sometimes overthrown) over the years, real-time strategy focuses on modernizing an aspect that has been left practically untouched: methodology. AI techniques, including machine learning, can ingest data from many sources, identify patterns and trends, and supply insights for decision-makers. In the process, AI-enabled planning upends traditional processes that depend on (and are affected by) human bias. Too often, the authors point out, strategic decisions are based on information that is flawed across multiple dimensions (e.g., completeness, accuracy) and end up unduly influenced by intuition and experience. During the exhaustive process of devising a plan, many assumptions and hypotheses are undeservedly promoted to “facts,” especially if they help to dampen uncertainty. The result: strategic plans that gain consensus but emerge as bland as vision statements, with no mechanism for consistent follow-up. Without alignment among business units on how each defines success, even companies that have embraced AI can end up stalled on the AI maturity curve, unable to progress beyond early wins in cost reduction and productivity.

Unique TTPs link Hades ransomware to new threat group

Researchers claim to have discovered the identity of the operators of Hades ransomware, exposing the distinctive tactics, techniques, and procedures (TTPs) they employ in their attacks. Hades ransomware first appeared in December 2020 following attacks on a number of organizations, but to date there has been limited information about the perpetrators. ... The findings are the result of incident response engagements carried out by Secureworks in the first quarter of 2021. “Some third-party reporting attributes Hades to the Hafnium threat group, but CTU research does not support that attribution,” the Secureworks Counter Threat Unit (CTU) researchers wrote. “Other reporting attributes Hades to the financially motivated Gold Drake threat group based on similarities to that group’s WastedLocker ransomware. Despite use of similar application programming interface (API) calls, the CryptOne crypter, and some of the same commands, CTU researchers attribute Hades and WastedLocker to two distinct groups as of this publication.”

... “Typically, when we see a variety of playbooks used around a particular ransomware, it points to the ransomware being delivered as ransomware-as-a-service (RaaS) with different pockets of threat actors using their own methods,” Marcelle Lee, senior security researcher, CTU-CIC at Secureworks, tells CSO.

AI: It’s Not Just For the Big FAANG Dogs Anymore

“Previously, building models, building features, was extremely difficult” and typically required a data scientist. “But today, particularly for SMEs, this type of automation tool can help those aspects a lot… Our type of automation is definitely helping them to ramp up the speed of their AI journey.”

Fujimaki noted how one of dotData’s smaller customers was able to build AI solutions without a huge investment. The company, Sticky.io, develops a subscription management service that it provides to other businesses as a SaaS offering. It wanted to add a predictive capability to identify payments that were likely to fail. “For them, the biggest barrier was…skill,” Fujimaki tells Datanami. “They are a cloud-native company, so the data is stored in AWS. On the AI side, they didn’t have data scientists, so they needed AutoML functionality.” Sticky.io’s product manager was able to use dotData to comb through the company’s data and identify the right features for the predictive model. Even though he had no prior data science expertise, the pilot was a success, and Sticky.io’s leadership recognized the value it brought. “The most important skill that [customers] have to have is the input side and output side,” Fujimaki says.
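The next sketch gives a rough idea of what automated feature engineering does. It derives candidate per-customer features from raw payment rows and ranks them by absolute Pearson correlation with a failed-payment label. This is a deliberately simple stand-in and not dotData's method. The `(customer_id, amount, failed)` row layout, the feature names and the correlation-based ranking are all assumptions for illustration.

```python
from collections import defaultdict
from math import sqrt


def generate_features(payments):
    """Derive candidate per-customer aggregate features from raw payment rows.

    Each row is assumed (hypothetically) to be (customer_id, amount, failed).
    """
    grouped = defaultdict(list)
    for customer_id, amount, failed in payments:
        grouped[customer_id].append((amount, failed))

    features = {}
    for customer_id, rows in grouped.items():
        amounts = [amount for amount, _ in rows]
        failures = [int(failed) for _, failed in rows]
        features[customer_id] = {
            "payment_count": len(rows),
            "mean_amount": sum(amounts) / len(amounts),
            "max_amount": max(amounts),
            "past_failure_rate": sum(failures) / len(failures),
        }
    return features


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def rank_features(features, labels):
    """Rank candidate features by |correlation| with the label (1 = next payment failed)."""
    customers = sorted(labels)
    y = [labels[c] for c in customers]
    names = list(next(iter(features.values())))
    scores = {
        name: abs(pearson([features[c][name] for c in customers], y))
        for name in names
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

A real AutoML tool explores far larger feature spaces (time windows, joins across tables) and validates features against a held-out model. The point here is only that feature discovery can be automated, leaving the user to supply the inputs and interpret the outputs.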

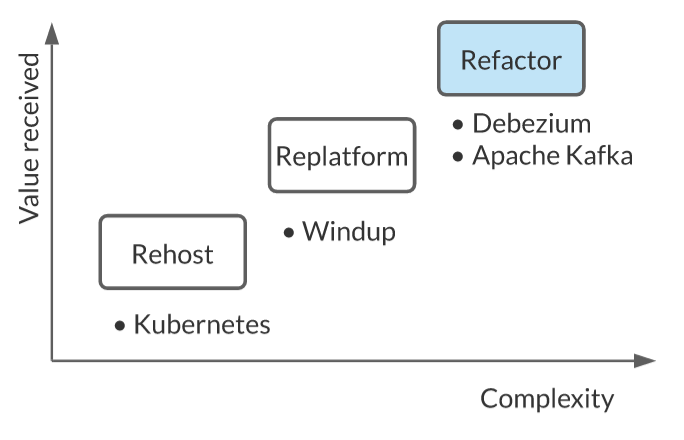

Application modernization patterns with Apache Kafka, Debezium, and Kubernetes

The very first question is where to start the migration. Here, we can use domain-driven design to help us identify aggregates and bounded contexts, each of which represents a potential unit of decomposition and a potential boundary for microservices. Alternatively, we can use the event storming technique created by Alberto Brandolini to gain a shared understanding of the domain model. Other important considerations are how these models interact with the database and what work is required for database decomposition. Once we have a list of these factors, the next step is to identify the relationships and dependencies between the bounded contexts to gauge the relative difficulty of each extraction. Armed with this information, we can turn to the next question: Do we want to start with the service that has the fewest dependencies, for an easy win, or should we start with the most difficult part of the system? A good compromise is to pick a service that is representative of many others and can help us build a good technology foundation. That foundation can then serve as a base for estimating and migrating other modules.
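The dependency analysis described above can be sketched as follows. The sketch assumes the bounded contexts and their dependencies have already been identified, for example through domain-driven design or event storming. The context names are hypothetical. Total coupling (outgoing plus incoming dependencies) is only a rough proxy for extraction difficulty.

```python
from collections import defaultdict


def coupling(deps):
    """Return {context: (outgoing, incoming)} dependency counts for every context."""
    incoming = defaultdict(int)
    for targets in deps.values():
        for target in targets:
            incoming[target] += 1
    contexts = set(deps) | set(incoming)
    return {ctx: (len(deps.get(ctx, ())), incoming[ctx]) for ctx in contexts}


def extraction_order(deps):
    """Order contexts from least to most coupled (ties broken by name)."""
    counts = coupling(deps)
    return sorted(counts, key=lambda ctx: (sum(counts[ctx]), ctx))


# Hypothetical bounded contexts mapped to the contexts they depend on.
deps = {
    "orders": {"customers", "inventory", "payments"},
    "payments": {"customers"},
    "inventory": set(),
    "customers": set(),
    "notifications": set(),
}
print(extraction_order(deps))
# ['notifications', 'inventory', 'customers', 'payments', 'orders']
```

The front of the list shows the easy wins and the back shows the hardest extractions. Choosing a service that is representative of many others remains a judgment call the ordering cannot make for you.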

Understanding AIOps – separating fact from fiction

AIOps is much more than another buzzword or a simple tool for correlating incidents. When implemented properly, AIOps can detect anomalies automatically and help remediate and prevent incidents before they affect end users and customers. Once anomalies or incidents are detected, it goes a step further and provides structured analysis of what the issues are and what their root cause is. This allows the IT team to understand the problem within minutes and fix it faster, preserving the user experience and avoiding disruption to the business. This is observability in action. When working with telemetry data, AIOps can alert the right team about issues it detects early and provide actionable insights, so that operations become more efficient and DevOps teams can focus on innovation rather than spending unproductive time reactively troubleshooting problems.

... There is no real reason why smaller teams could not use AIOps to differentiate their business, correct operational issues and reduce the human burden. In fact, for small teams, AIOps can help to discover issues quickly and relieve pressure on already busy staff who need to eliminate toil to focus on value creation.
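Automatic anomaly detection can take many forms, and one of the simplest is a rolling z-score over a telemetry metric. The sketch below assumes a single numeric series, such as request latency, plus a hypothetical service-to-team ownership map for deciding whom to alert. Production AIOps platforms use far richer models, so treat the window size, threshold and `OWNERS` map as illustrative assumptions.

```python
from collections import deque
from math import sqrt


class RollingZScoreDetector:
    """Flag a sample that deviates from the recent window by more than `threshold` std devs."""

    def __init__(self, window=30, threshold=3.0, min_samples=10):
        self.window = deque(maxlen=window)  # keeps only the most recent samples
        self.threshold = threshold
        self.min_samples = min_samples      # no verdicts until a baseline exists

    def observe(self, value):
        """Return True if `value` is anomalous versus the current window, then record it."""
        anomalous = False
        if len(self.window) >= self.min_samples:
            n = len(self.window)
            mean = sum(self.window) / n
            std = sqrt(sum((x - mean) ** 2 for x in self.window) / n)
            if std > 0:
                anomalous = abs(value - mean) / std > self.threshold
            else:
                anomalous = value != mean  # flat baseline: any change is unusual
        self.window.append(value)
        return anomalous


# Hypothetical ownership map used to pick which team receives the alert.
OWNERS = {"checkout-api": "payments-team", "search-api": "discovery-team"}


def route_alert(service, owners=OWNERS, default="on-call"):
    return owners.get(service, default)
```

In this sketch anomalous samples are still added to the window. Excluding them is an alternative that stops a long incident from shifting the baseline.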

Data Scientists Will be Extinct in 10 Years

Data scientists will be extinct in 10 years (give or take), or at least the role title will be. Going forward, the skill set collectively known as data science will be borne by a new generation of data-savvy business specialists and subject-matter experts who can imbue analysis with their deep domain knowledge, whether or not they can code. Their titles will reflect their expertise rather than the means by which they demonstrate it, whether they are compliance specialists, product managers or investment analysts. We don’t need to look far back to find historical precedents. At the advent of the spreadsheet, data entry specialists were highly coveted, but nowadays, as Cole Nussbaumer Knaflic (the author of “Storytelling With Data”) aptly observes, proficiency with the Microsoft Office suite is the bare minimum. Before that, the ability to touch-type on a typewriter was considered a specialist skill; with the spread of personal computing, it too has become assumed. Lastly, if you are considering a career in data science or starting your studies, it may serve you well to keep referring back to the Venn diagram you will undoubtedly come across.

Cisco bolts together enterprise and industrial edge with new routers

As organizations accelerate digitization, they need a way to simplify management and security across the network and edge devices. "With our new routing portfolio, customers can have a united architecture across their diverse edge use cases from HQ to remote edges. The same rich functionality and robust security models across your entire business – from campuses and branch offices to substations, remote operating locations, fleets, on-the-go connected assets," wrote Butaney. "Now utilities can securely connect their edge to reduce outages, integrate renewables, and improve grid resiliency. Transportation systems providers can optimize routes for first responders and share real-time location and safety information with travelers. Whatever your business, you can connect all your networks with one common architecture and holistic enterprise-wide security approach," Butaney stated. The modular routers, including the Catalyst IR8100, IR8100 Heavy Duty Series and IR8300 Rugged Series Router, can be customized, and all can have storage or CPUs upgraded in the field.

Quick and Seamless Release Management for Java projects with JReleaser

The original mission of JReleaser is to streamline the release and publishing process of Java binaries so that they can be consumed by platform-specific package managers. That is, you provide a ZIP, TAR, or JAR file, and JReleaser figures out the rest, letting you publish binaries to Homebrew, Scoop, Snapcraft, Chocolatey, and more. In other words, JReleaser shortens the distance between your binaries and your consumers by meeting them where they prefer to manage their packages and binaries. Early in JReleaser’s design, it became apparent that splitting the work into several steps that could be invoked individually or as a single unit would be a better approach than what GoReleaser offers today. This design choice allows developers to micromanage every step as needed and hook JReleaser in at a specific point in their release process without having to rewrite everything. For example, you can fire up JReleaser and have it create a Git release (GitHub, GitLab, or Gitea) along with an automatically formatted changelog. Alternatively, you can tell JReleaser to append assets to an existing Git release created by other means. Or you may only be interested in packaging and publishing to Homebrew, regardless of how the Git release was created.
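The step-based design can be illustrated with a small Python sketch. Each release step reads and writes a shared context, so any subset of steps can run alone, or all of them can run in order. The step names, context keys and `run` function are hypothetical. They show the design pattern only and are not JReleaser's actual API or commands.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical shared state passed from step to step.
Context = Dict[str, object]


def make_changelog(ctx: Context) -> None:
    ctx["changelog"] = f"Release notes for {ctx['version']}"


def create_release(ctx: Context) -> None:
    ctx["release"] = f"v{ctx['version']}"


def upload_assets(ctx: Context) -> None:
    # Works whether the release was created by this pipeline or by other means.
    ctx["uploaded"] = list(ctx.get("assets", []))


def publish_packages(ctx: Context) -> None:
    ctx["published"] = ["homebrew"]


STEPS: Dict[str, Callable[[Context], None]] = {
    "changelog": make_changelog,
    "release": create_release,
    "upload": upload_assets,
    "publish": publish_packages,
}
PIPELINE_ORDER: List[str] = list(STEPS)


def run(ctx: Context, only: Optional[List[str]] = None) -> List[str]:
    """Run the selected steps in pipeline order (all of them when `only` is None)."""
    if only is not None:
        unknown = set(only) - set(STEPS)
        if unknown:
            raise ValueError(f"unknown steps: {sorted(unknown)}")
    selected = [name for name in PIPELINE_ORDER if only is None or name in only]
    for name in selected:
        STEPS[name](ctx)
    return selected
```

Calling `run(ctx)` performs the full release. `run(ctx, only=["publish"])` covers the case where the Git release already exists and only packaging and publishing are wanted.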

Quote for the day:

"Whenever you see a successful business, someone once made a courageous decision." -- Peter F. Drucker
