Data Architecture: One Size Does Not Fit All

“There’s no one right way,” said George Yuhasz, VP and Head of Enterprise Data at NewRez, because the demands and the value that Data Architecture practices bring to an organization are as varied as the number of firms trying to get value from data. Yuhasz was speaking at the DATAVERSITY® Data Architecture Online Conference. The very definition of Data Architecture varies as well, he says, so get clarity among stakeholders to understand the constraints and barriers within which Data Architecture needs to fit. Will the organization prioritize process alone? Or process, platforms, and infrastructure? Or will it be folded into a larger enterprise architecture? Without a clear definition, it’s impossible to determine key success criteria, or to know what success is, both in the short term and long term. The definition should be simple enough to be understood by a diverse group of stakeholders, and elegant enough to handle sophistication and nuance. Without it, he said, the tendency will be to “drop everything that even relates to the term ‘data’ onto your plate.”

Chrome zero-day, hot on the heels of Microsoft’s IE zero-day. Patch now!

This bug is listed as a “type confusion in V8”, where V8 is the part of Chrome that runs JavaScript code, and type confusion means that you can feed V8 one sort of data item but trick JavaScript into handling it as if it were something else, possibly bypassing security checks or running unauthorised code as a result. For example, if your code is doing JavaScript calculations on a data object that has a memory block of 16 bytes allocated to it, but you can trick the JavaScript interpreter into thinking that you are working on an object that uses 1024 bytes of memory, you can probably end up sneakily writing data outside the official 16-byte allocation, thus pulling off a buffer overflow attack. And, as you probably know, JavaScript security holes that can be triggered by JavaScript code embedded in a web page often result in RCE exploits, or remote code execution. That’s because you’re relying on your browser’s JavaScript engine to keep control over what is essentially unknown and untrusted programming downloaded and executed automatically from an external source.
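The 16-byte versus 1024-byte scenario can be illustrated with a toy model. The sketch below is not how V8 works internally: it assumes a flat `bytearray` standing in for the heap, and the names `Allocation`, `write_field` and `believed_size` are hypothetical, introduced only for illustration. It shows how a bounds check made against the wrong (confused) size lets a write land in a neighbouring allocation.

```python
# Toy model only: a flat bytearray stands in for the engine's heap.
HEAP_SIZE = 64
heap = bytearray(HEAP_SIZE)


class Allocation:
    """Records where an object lives in the toy heap and how big it really is."""

    def __init__(self, offset, size):
        self.offset = offset
        self.size = size


def write_field(alloc, believed_size, index, value):
    """Write one byte at `index`, bounds-checking against the size the
    engine *believes* the object has, not the size actually allocated."""
    if not 0 <= index < believed_size:
        raise IndexError("index outside the believed object size")
    heap[alloc.offset + index] = value


small = Allocation(offset=0, size=16)        # the real 16-byte object
neighbour = Allocation(offset=16, size=16)   # unrelated data right after it

# Correct type information: a write at index 20 is rejected.
try:
    write_field(small, believed_size=small.size, index=20, value=0x41)
    rejected = False
except IndexError:
    rejected = True

# Confused type information: the engine thinks the object is 1024 bytes wide,
# so the same write passes the check and lands in the neighbour's memory.
write_field(small, believed_size=1024, index=20, value=0x41)
corrupted = heap[neighbour.offset + 4]
```

In this model the first write is refused, while the second one silently overwrites byte 4 of the neighbouring allocation. A real exploit would be far more involved, but the root cause is the same kind of mismatch between believed and actual object layout.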

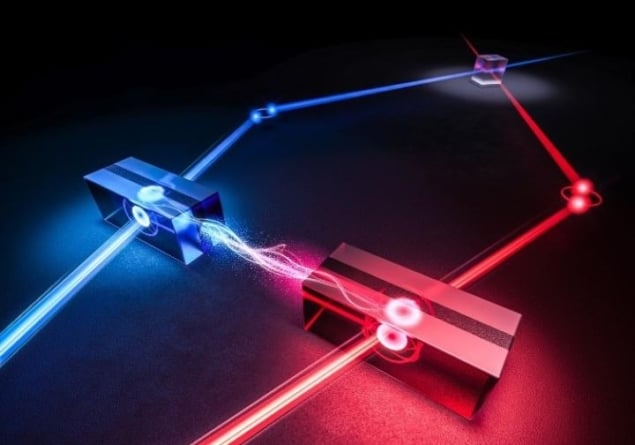

New quantum repeaters could enable a scalable quantum internet

If it can be built, a quantum internet would allow calculations to be distributed between multiple quantum computers, allowing larger and more complex problems to be solved. A quantum internet would also provide secure communications because eavesdropping on the exchange of quantum information can be easily identified. The backbone of such a quantum network would be quantum-mechanically entangled links between different network points, called nodes. However, creating entangled links over long distances at high data rates remains a challenge. A big problem is that quantum information becomes degraded as it is transmitted, and the rules of quantum mechanics do not allow signals to be amplified by conventional repeater nodes. The solution could be quantum repeaters, which can amplify quantum signals while still obeying quantum physics. Now, two independent research groups, one at the Institute of Photonic Sciences (ICFO) in Spain and the other at the University of Science and Technology of China (USTC), have shown how quantum memories (QM) offer a path towards practical quantum repeaters.

3 Mindsets High-Performing Business Leaders Use to Create Growth

Success in life comes from understanding that a lot will happen to you outside of your control. As humans, we have emotions and feelings, and they tend to take over when something happens to us that's outside of our control. When you focus on the things you can't control, you put yourself in a dark place that threatens to spiral your mind. High-performing entrepreneurs don't invest time, energy and emotion into situations that are outside of their control. Growth-focused business leaders make a deliberate effort to optimize their mind, body and spirit. They do the work to operate in a peak state and learn the techniques to get back into a peak state when they feel themselves slipping. ... Authentic business leadership means you create wealth through purposeful work and the desire to build a legacy. You need a vision for where you're going if you plan to get there and experience the benefits of entrepreneurship. Whether it's setting up a vision board or having your goals displayed on your phone's screensaver, you grow when you have a vision and implement growth strategies consistently.

Has Serverless Jumped the Shark?

Today’s hyped technologies (such as functions-as-a-service offerings like Amazon Lambda, serverless frameworks for Kubernetes like Knative and other non-FaaS serverless solutions like database-as-a-service, or DBaaS) are the underpinnings of more advanced delivery systems for digital business. New methods of infrastructure delivery and consumption, such as cloud computing, are as much a cultural innovation as a technological one, most obviously in DevOps. Even with these technological innovations, companies will still consume a combination of legacy application data, modern cloud services and other serverless architectures to accomplish their digital transformation. The lynchpin isn’t a wholesale move to new technologies, but rather the ability to integrate these technologies in a way that eases the delivery of digital experiences and is invisible to the end-user. Serverless hasn’t jumped the shark. Rather, it is maturing. The Gartner Hype Cycle, a graphic representation of the maturity and adoption of technologies and applications, forecast in 2017 that it would take two to five years for serverless to move from a point of inflated expectations to a plateau of productivity.

Opinion: Andreessen Horowitz is dead wrong about cloud

In The Cost of Cloud, a Trillion-Dollar Paradox, Andreessen Horowitz Capital Management’s Sarah Wang and Martin Casado highlighted the case of Dropbox closing down its public cloud deployments and returning to the datacenter. Wang and Casado extrapolated the fact that Dropbox and other enterprises realized savings of 50% or more by bailing on some or all of their cloud deployments to the wider cloud-consuming ecosphere. Wang and Casado’s conclusion? Public cloud is more than doubling infrastructure costs for most enterprises relative to legacy data center environments. ... Well-architected and well-operated cloud deployments will be highly successful compared to datacenter deployments in most cases. However, “highly successful” may or may not mean less expensive. A singular comparison between the cost of cloud versus the cost of a datacenter shouldn’t be made as an isolated analysis. Instead, it’s important to analyze the differential ROI of one set of costs versus the alternative. While this is true for any expenditure, it’s doubly true for public cloud, since migration can have profound impacts on revenue. Indeed, the major benefits of the cloud are often related to revenue, not cost.
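To make the idea of differential ROI concrete, here is a minimal arithmetic sketch. All figures are hypothetical and are not taken from the article or from the Andreessen Horowitz analysis; the `roi` helper is likewise just an illustrative name. It shows how an option that costs twice as much can still come out ahead once revenue is part of the comparison.

```python
def roi(revenue_gain, cost):
    """Return on investment as a fraction: (gain - cost) / cost."""
    return (revenue_gain - cost) / cost


# Hypothetical annual figures, chosen only for illustration.
datacenter = {"cost": 1_000_000, "revenue_gain": 1_500_000}
cloud = {"cost": 2_000_000, "revenue_gain": 3_500_000}

dc_roi = roi(datacenter["revenue_gain"], datacenter["cost"])
cloud_roi = roi(cloud["revenue_gain"], cloud["cost"])

# The differential is what the comparison should look at,
# rather than the two cost lines in isolation.
differential = cloud_roi - dc_roi

print(f"datacenter ROI: {dc_roi:.0%}")
print(f"cloud ROI:      {cloud_roi:.0%}")
print(f"differential:   {differential:+.0%}")
```

With these made-up numbers the cloud option doubles the cost yet yields a higher ROI, which is the kind of outcome a cost-only comparison would miss. Different assumptions could just as easily tip the result the other way.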

What Is Penetration Testing -Strategic Approaches & Its Types?

Social engineering plays a crucial role in penetration testing. It is a test that probes the human network of an organization. This test helps guard against a potential attack from within the organization, whether by an employee looking to start a breach or by an employee tricked into sharing data. This kind of test includes both remote and physical penetration tests, which target the most common social engineering tactics used by ethical hackers, such as phishing attacks, imposters, tailgating, pretexting, gifts, dumpster diving and eavesdropping, to name a few. Organizations need penetration testing professionals, and at least a minimum knowledge of the practice, to secure themselves from cyberattacks. These professionals use different approaches to find attacks and defend against them. There are five types of penetration testing: network, web application, client-side, wireless network, and social engineering penetration tests. EC-Council's Certified Penetration Testing Professional (CPENT) is one of the best courses for learning penetration testing. For those working in flat networks, this course boosts understanding by teaching how to pen test OT and IoT systems ...

Global chip shortage: How manufacturers can cope over the long term

Although it is very important to shift more production to the U.S., there are challenges in doing so, Asaduzzaman said. "Currently the number of semiconductor fabrication foundries in the U.S. is not adequate. If we help overseas-based companies to build factories here, that will be good. But we definitely don't want to send all our technology production overseas and then have no control. That will be a big mistake." However, one ray of hope is that policy provisions may encourage domestic production of semiconductors, Asaduzzaman said. "For instance, regulations could require U.S. companies that buy semiconductors to purchase a certain percentage from domestic producers. Industries have to use locally produced chips to make sure that local chip industries can sustain." Asaduzzaman called it "insulting and incorrect" that some overseas chip manufacturers believe the U.S. doesn't have the skills and cannot keep the cost low to compete with others in the industry. "We are the ones who invented the chip technology; now we are depending on overseas companies for chips," he said.

Complexity is the biggest threat to cloud success and security

Enterprises hit the “complexity wall” soon after deployment when they realize the cost and complexity of operating a complicated and widely distributed cloud solution outpaces its benefits. The number of moving parts quickly becomes too heterogeneous and thus too convoluted. It becomes obvious that organizations can’t keep the skills around to operate and maintain these platforms. Welcome to cloud complexity. Many in IT blame complexity on the new array of choices developers have when they build systems within multicloud deployments. However, enterprises need to empower innovative people to build better systems in order to build a better business. Innovation is just too compelling of an opportunity to give up. If you place limits on what technologies can be employed just to avoid operational complexity, odds are you’re not the best business you can be. Security becomes an issue as well. Security experts have long known that more vulnerabilities exist within a more complex technology solution (the more physically and logically distributed and heterogeneous).

Limits to Blockchain Scalability vs. Holochain

It is worth noting that it would probably take years to get all of Twitter’s users to migrate over to Holochain to host themselves, even if Twitter switched their infrastructure to this kind of decentralized architecture. This is where the Holo hosting framework comes in. Holo enables Holochain apps that would normally be self-hosted to be served to a web user from a peer hosting network. In other words, if your users just expect to open their browser, type in a web address, and have access to your app, you may need to provide them with a hosted version. Holo has a currency which pays hosts for the hosting power they provide for Holochain apps that still need to serve mainstream web users. So instead of paying Amazon or Google to host your app, you pay a network of peer hosting providers in the HoloFuel cryptocurrency. Instead of gas fees costing over 1 billion times what it would cost to host on Amazon (as they do on Ethereum), we expect Holo hosting to be priced more competitively against current cloud providers because of the low demand on system resources as outlined above.

Quote for the day:

“Doing the best at this moment puts you in the best place for the next moment” -- Oprah Winfrey
