Small language models and open source are transforming AI

From an enterprise perspective, the advantages of embracing SLMs are

multifaceted. These models allow businesses to scale their AI deployments

cost-effectively, an essential consideration for startups and midsize

enterprises that need to maximize their technology investments. Enhanced agility

becomes a tangible benefit as shorter deployment times and easier customization

align AI capabilities more closely with evolving business needs. Data privacy

and sovereignty (perennial concerns in the enterprise world) are better

addressed with SLMs hosted on-premises or within private clouds. This approach

satisfies regulatory and compliance requirements while maintaining robust

security. Additionally, the reduced energy consumption of SLMs supports

corporate sustainability initiatives. That’s still important, right? The pivot

to smaller language models, bolstered by open source innovation, reshapes how

enterprises approach AI. By mitigating the cost and complexity of large

generative AI systems, SLMs offer a viable, efficient, and customizable path

forward. This shift enhances the business value of AI investments and supports

sustainable and scalable growth.

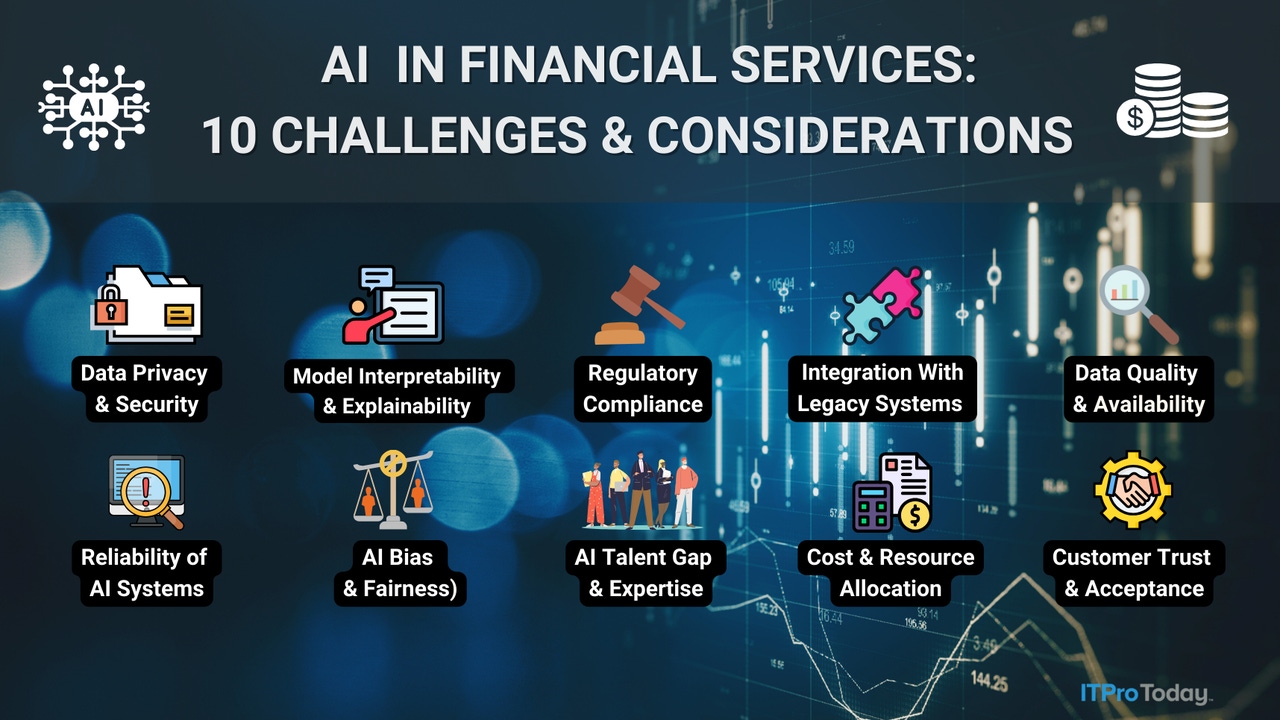

The Impact and Future of AI in Financial Services

Winston noted that AI systems require vast amounts of data, which raises

concerns about data privacy and security. “Financial institutions must ensure

compliance with regulations such as GDPR [General Data Protection Regulation]

and CCPA [California Consumer Privacy Act] while safeguarding sensitive

customer information,” he explained. Simply using general GenAI tools as a

quick fix isn’t enough. “Financial services will need a solution built

specifically for the industry and [that] leverages deep data related to how the

entire industry works,” said Kevin Green, COO of Hapax, a banking AI platform.

“It’s easy for general GenAI tools to identify what changes are made to

regulations, but if it does not understand how those changes impact an

institution, it’s simply just an alert.” According to Green, the next wave of

GenAI technologies should go beyond mere alerts; they must explain how

regulatory changes affect institutions and outline actionable steps. As AI

technology evolves, several emerging technologies could significantly

transform the financial services industry. Ludwig pointed out that quantum

computers, which can solve complex problems much faster than traditional

computers, might revolutionize risk management, portfolio optimization, and

fraud detection.

Is Your Data AI-Ready?

Without proper data contextualization, AI systems may make incorrect

assumptions or draw erroneous conclusions, undermining the reliability and

value of the insights they generate. To avoid such pitfalls, focus on

categorizing and classifying your data with the necessary metadata, such as

timestamps, location information, document classification, and other relevant

contextual details. This will enable your AI to properly understand the

context of the data and generate meaningful, actionable insights.

Additionally, integrating complementary data can significantly enhance the

information’s value, depth, and usefulness for your AI systems to analyze. ...

Although older data may be necessary for compliance or historical purposes, it

may not be relevant or useful for your AI initiatives. Outdated information

can burden your storage systems and compromise the validity of the

AI-generated insights. Imagine an AI system analyzing a decade-old market

report to inform critical business decisions—the insights would likely be

outdated and misleading. That’s why establishing and implementing robust

retention and archiving policies as part of your information life cycle

management is critical.
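
The two ideas above, tagging data with contextual metadata and keeping stale data out of AI analysis, can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `Record` fields, the `split_by_retention` helper, the three-year cutoff, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Record:
    """A document plus the kind of contextual metadata the text recommends."""
    doc_id: str
    created_at: datetime   # timestamp
    location: str          # location information
    classification: str    # document classification, e.g. "market-report"


def split_by_retention(records, now, max_age):
    """Separate records fresh enough for AI analysis from those to archive."""
    active, archive = [], []
    for record in records:
        if now - record.created_at <= max_age:
            active.append(record)
        else:
            archive.append(record)
    return active, archive


# Hypothetical data: one recent report and one decade-old report.
now = datetime(2024, 9, 1, tzinfo=timezone.utc)
records = [
    Record("r1", datetime(2024, 3, 1, tzinfo=timezone.utc), "US", "market-report"),
    Record("r2", datetime(2014, 3, 1, tzinfo=timezone.utc), "US", "market-report"),
]
active, archive = split_by_retention(records, now, max_age=timedelta(days=3 * 365))
print([r.doc_id for r in active], [r.doc_id for r in archive])
```

In practice the cutoff would come from the organization's retention policy rather than a hard-coded value, and archived records would stay available for compliance purposes.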

Generative AI: Good Or Bad News For Software

There are plenty of examples of breaches that started thanks to someone

copying over code and not checking it thoroughly. Think back to the Heartbleed

exploit, a security bug in a popular library that led to the exposure of

hundreds of thousands of websites, servers and other devices which used the

code. Because the library was so widely used, the thought was, of course,

someone had checked it for vulnerabilities. But instead, the vulnerability

persisted for years, quietly used by attackers to exploit vulnerable systems.

This is the darker side to ChatGPT; attackers also have access to the tool.

While OpenAI has built some safeguards to prevent it from answering questions

regarding problematic subjects like code injection, the CyberArk Labs team has

already uncovered some ways in which the tool could be used for malicious

reasons. Breaches have occurred due to blindly incorporating code without

thorough verification. Attackers can exploit ChatGPT, using its capabilities

to create polymorphic malware or produce malicious code more rapidly. Even

with safeguards, developers must exercise caution. ChatGPT generates the code,

but developers are accountable for it.

FinOps Can Turn IT Cost Centers Into a Value Driver

Once FinOps has been successfully implemented within an organization, teams

can begin to automate the practice while building a culture of continuous

improvement. Leaders can now better forecast and plan, leading to more precise

budgeting. Additionally, GenAI can provide unique insights into seasonality.

For example, if resource demand spikes every three days or at other

unpredictable frequencies, AI can help you detect these patterns so you can

optimize by scaling up when required and back down to save costs during lulls

in demand. This kind of pattern detection is difficult without AI. It all goes

back to the concept of understanding value and total cost. With FinOps, IT

leaders can demonstrate exactly what they spend on and why. They can point out

how the budget for software licenses and labor is directly tied to IT

operations outcomes, translating into greater resiliency and higher customer

satisfaction. They can prove that they’ve spent money responsibly and that

they should retain that level of funding because it makes the business run

better. FinOps and AI advancements allow businesses to do more and go further

than they ever could. Almost 65% of CFOs are integrating AI into their

strategy.
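
To make the seasonality example concrete, here is a small sketch of how a recurring demand spike could be detected. It uses plain autocorrelation as a simple statistical stand-in, not the GenAI approach the article refers to; the function names and the sample usage series are hypothetical.

```python
def autocorrelation(series, lag):
    """Correlation of a series with itself shifted by `lag` steps."""
    n = len(series)
    mean = sum(series) / n
    variance = sum((x - mean) ** 2 for x in series)
    if variance == 0:
        return 0.0
    covariance = sum(
        (series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag)
    )
    return covariance / variance


def dominant_period(series, max_lag):
    """Return the lag (1..max_lag) at which the series repeats most strongly."""
    return max(range(1, max_lag + 1), key=lambda lag: autocorrelation(series, lag))


# Hypothetical daily usage: a spike every third day.
daily_usage = [10, 10, 50] * 10
print(dominant_period(daily_usage, max_lag=7))
```

A detected period like this could feed a scaling schedule: scale up just before the expected spike and back down during the lull.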

The convergence of human and machine in transforming business

To achieve a true collaboration between humans and machines, it is crucial to

establish a clear understanding and definition of their respective roles. By

emphasizing the unique strengths of AI while strategically addressing its

limitations, organizations can create a synergy that maximizes the potential

of both human expertise and machine capabilities. AI excels in data

structuring, capable of transforming complex, unstructured information into

easily searchable and accessible content. This makes it an invaluable tool for

sorting through vast online datasets, including news articles,

academic reports and other forms of digital content, extracting meaningful

insights. Moreover, AI systems operate tirelessly, functioning 24/7 without

the need for breaks or downtime. This "always on" nature ensures a constant

state of productivity and responsiveness, enabling organizations to keep pace

with the rapidly changing market. Another key strength of AI lies in its

scalability. As data volumes continue to grow and the complexity of tasks

increases, AI can be integrated into existing workflows and systems, allowing

businesses to process and analyze vast amounts of information efficiently.

The Crucial Role of Real-time Analytics in Modern SOCs

Security analysts often spend considerable time manually correlating diverse

data sources to understand the context of specific alerts. This process leads

to inefficiency, as they must scan various sources, determine if an alert is

genuine or a false positive, assess its priority, and evaluate its potential

impact on the organization. This tedious and lengthy process can lead to

analyst burnout, negatively impacting SOC performance. ... Traditional

Security Information and Event Management (SIEM) systems in SOCs struggle to

effectively track and analyze sophisticated cybersecurity threats. These

legacy systems often burden SOC teams with false positives and negatives.

Their generalized approach to analytics can create vulnerabilities and strain

SOC resources, requiring additional staff to address even a single false

positive. In contrast, real-time analytics or analytics-driven SIEMs offer

superior context for security alerts, sending only genuine threats to security

teams. ... Staying ahead of potential threats is crucial for organizations in

today's landscape. Real-time threat intelligence plays a vital role in

proactively detecting threats. Through continuous monitoring of various threat

vectors, it can identify and stop suspicious activities or anomalies before

they cause harm.
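
The manual correlation step described above can be illustrated with a toy triage function. This is a sketch under stated assumptions, not how any particular SIEM works: the `Alert` fields, the scoring weights, the threshold, and the sample threat-intel and asset lists are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    source_ip: str
    host: str
    severity: int  # 1 (low) to 5 (high), as reported by the detection rule


def triage_score(alert, known_bad_ips, critical_hosts):
    """Combine the raw severity with context from other data sources."""
    score = alert.severity
    if alert.source_ip in known_bad_ips:   # threat-intelligence match
        score += 3
    if alert.host in critical_hosts:       # potential business impact
        score += 2
    return score


def prioritize(alerts, known_bad_ips, critical_hosts, threshold=5):
    """Keep alerts at or above the threshold, highest score first."""
    scored = [(triage_score(a, known_bad_ips, critical_hosts), a) for a in alerts]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [alert for _, alert in kept]


# Hypothetical alerts and context sets.
alerts = [
    Alert("a1", "203.0.113.7", "db-prod-1", 2),
    Alert("a2", "198.51.100.4", "laptop-42", 1),
    Alert("a3", "198.51.100.9", "laptop-17", 5),
]
queue = prioritize(alerts, known_bad_ips={"203.0.113.7"}, critical_hosts={"db-prod-1"})
print([a.alert_id for a in queue])
```

Automating this enrichment is what lets analysts see only the alerts that carry enough context to act on, rather than checking each source by hand.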

Architecting with AI

Every project is different, and understanding the differences between projects

is all about context. Do we have documentation of thousands of corporate IT

projects that we would need to train an AI to understand context? Some of that

documentation probably exists, but it's almost all proprietary. Even that's

optimistic; a lot of the documentation we would need was never captured and

may never have been expressed. Another issue in software design is breaking

larger tasks up into smaller components. That may be the biggest theme of the

history of software design. AI is already useful for refactoring source code.

But the issues change when we consider AI as a component of a software system.

The code used to implement AI is usually surprisingly small — that's not an

issue. However, take a step back and ask why we want software to be composed

of small, modular components. Small isn't "good" in and of itself. ... Small

components reduce risk: it's easier to understand an individual class or

microservice than a multi-million line monolith. There's a well-known

paper that shows a small box, representing a model. The box

is surrounded by many other boxes that represent other software components:

data pipelines, storage, user interfaces, you name it.

Hungry for resources, AI redefines the data center calculus

With data centers near capacity in the US, there’s a critical need for

organizations to consider hardware upgrades, he adds. The shortage is

exacerbated because AI and machine learning workloads will require modern

hardware. “Modern hardware provides enhanced performance, reliability, and

security features, crucial for maintaining a competitive edge and ensuring

data integrity,” Warman says. “High-performance hardware can support more

workloads in less space, addressing the capacity constraints faced by many

data centers.” The demands of AI make for a compelling reason to consider

hardware upgrades, adds Rob Clark, president and CTO at AI tool provider

Seekr. Organizations considering new hardware should pull the trigger based on

factors beyond space considerations, such as price and performance, new

features, and the age of existing hardware, he says. Older GPUs are a prime

target for replacement in the AI era, as memory per card and performance per

chip increase, Clark adds. “It is more efficient to have fewer, larger cards

processing AI workloads,” he says. While AI is driving the demand for data

center expansion and hardware upgrades, it can also be part of the solution,

says Timothy Bates, a professor in the University of Michigan College of

Innovation and Technology.

How to Bake Security into Platform Engineering

A key challenge for platform engineers is modernizing legacy applications,

which include security holes. “Platform engineers and CIOs have a

responsibility to modernize by bridging the gap between the old and new and

understanding the security implications between the old and new,” he says.

When securing the software development lifecycle, organizations should secure

both continuous integration and continuous delivery/continuous deployment

pipelines as well as the software supply chain, Mercer says. Securing

applications entails “integrating security into the CI/CD pipelines in a

seamless manner that does not create unnecessary friction for developers,” he

says. In addition, organizations must prioritize educating employees on how to

secure applications and software supply chains. ... As part of baking security

into the software development process, security responsibility shifts from the

cybersecurity team to the development organization. That means security

becomes as much a part of deliverables as quality or safety, Montenegro says.

“We see an increasing number of organizations adopting a security mindset

within their engineering teams where the responsibility for product security

lies with engineering, not the security team,” he says.

Quote for the day:

“If you really want to do something,

you will work hard for it.” -- Edmund Hillary
