Improving healthcare fraud prevention and patient trust with digital ID

Digital trust involves the use of secure and transparent technologies to protect patient data while enhancing communication and engagement. For example, digital consent forms and secure messaging platforms allow patients to communicate with their healthcare providers conveniently while keeping their data protected. Furthermore, integrating digital trust technology into healthcare systems can streamline administrative processes, reduce paperwork, and minimize the chances of errors, according to a blog post by Five Faces. This not only enhances operational efficiency but also improves the overall patient experience by reducing wait times and simplifying access to medical services. ... These smart cards, embedded with secure microchips, store vital patient information and health insurance details, enabling healthcare providers to access accurate and up-to-date information during consultations. The use of chip-based ID cards reduces the risk of identity theft and fraud, as these cards are difficult to duplicate and require secure authentication methods. This technology helps ensure that only authorized individuals can access patient information, protecting sensitive data from unauthorized access.
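
The excerpt says chip-based cards are hard to duplicate because they "require secure authentication methods." One common pattern behind that kind of claim is challenge-response: the verifier sends a fresh random challenge, and the card proves it holds a secret key without ever revealing it. The Python below is a minimal sketch under simplifying assumptions: a symmetric key shared between a hypothetical `SmartCard` and a hypothetical `CardVerifier`, with HMAC-SHA256 as the response function. Real health ID cards may use different schemes (for example, asymmetric keys in a secure element), so treat this as an illustration of the idea, not any card's actual protocol.

```python
import hashlib
import hmac
import secrets


class SmartCard:
    """Hypothetical chip card: the secret key never leaves the card."""

    def __init__(self, card_id: str, key: bytes) -> None:
        self.card_id = card_id
        self._key = key

    def respond(self, challenge: bytes) -> bytes:
        # Prove possession of the key by MAC-ing the verifier's challenge.
        return hmac.new(self._key, challenge, hashlib.sha256).digest()


class CardVerifier:
    """Hypothetical provider-side verifier holding enrolled card keys."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}

    def enroll(self, card_id: str, key: bytes) -> None:
        self._keys[card_id] = key

    def authenticate(self, card: SmartCard) -> bool:
        key = self._keys.get(card.card_id)
        if key is None:
            return False  # card was never enrolled
        # A fresh random challenge per attempt, so a recorded response cannot be replayed.
        challenge = secrets.token_bytes(32)
        expected = hmac.new(key, challenge, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(card.respond(challenge), expected)


if __name__ == "__main__":
    verifier = CardVerifier()
    key = secrets.token_bytes(32)
    verifier.enroll("patient-001", key)
    print(verifier.authenticate(SmartCard("patient-001", key)))  # True
    print(verifier.authenticate(SmartCard("patient-001", b"cloned-without-key")))  # False
```

Because every challenge is random, a response copied from an earlier session is useless, and a cloned card that lacks the key cannot compute a valid answer.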

A CEO's Take on AI in the Workforce

Those who ignore the AI transformation and do not upskill their staff are not putting themselves in a position to make use of untapped data that can provide insights into other areas of opportunity for their business. Making minimal-to-no investments in emerging technology merely delays the inevitable and puts companies at a disadvantage against their competitors. Conversely, being too aggressive with AI can lead to security vulnerabilities or critical talent loss. While AI integration is critical to accelerating business outputs, doing so without moderators, data safeguards, and regulators to keep organizations in line with data governance and compliance exposes companies to security issues. ... AI should not replace people; rather, it presents an opportunity to make better use of them. AI can help solve time-management and efficiency issues across organizations, allowing skilled people to focus on creative and strategic roles or projects that drive greater business value. The role of AI should be to automate time-consuming, repetitive administrative tasks, leaving individuals free to be more calculated and intentional with their time.

The promise of open banking: How data sharing is changing financial services

The benefits of open banking are multifaceted. Customers gain greater control over their financial data, allowing them to securely share it with authorized providers. This empowers them to explore a wider range of customized financial products and services, ultimately promoting financial stability and well-being. Additionally, open banking fosters innovation within the industry, as fintech companies leverage customer-consented data to develop cutting-edge solutions. The Account Aggregator (AA) framework, regulated by the Reserve Bank of India (RBI), is a cornerstone of open banking in India. AAs act as trusted intermediaries, allowing users to consolidate their financial data from various sources, including banks, mutual funds, and insurance companies, into a single platform. ... APIs enable platforms to aggregate fixed deposit (FD) offerings from a multitude of banks across India. This gives investors a comprehensive view of available options, allowing them to compare interest rates, tenures, minimum deposit requirements, and other features within a single platform. This transparency supports informed decision-making, enabling investors to select the FD that best aligns with their risk appetite and financial goals.
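
Once each bank's offers have been pulled through its API, the comparison described above reduces to filtering and ranking. The sketch below assumes the offers are already fetched; the `FDOffer` type, the bank names, and the rates are hypothetical, and the maturity estimate assumes quarterly compounding, whereas actual compounding and payout terms vary by bank and product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FDOffer:
    bank: str
    annual_rate_pct: float  # nominal annual interest rate, in percent
    tenure_months: int
    min_deposit: int  # minimum deposit, in rupees


def maturity_value(principal: float, annual_rate_pct: float, tenure_months: int,
                   compounding_per_year: int = 4) -> float:
    """Compound-interest estimate; quarterly compounding is an assumption."""
    rate = annual_rate_pct / 100
    periods = compounding_per_year * tenure_months / 12
    return principal * (1 + rate / compounding_per_year) ** periods


def compare_offers(offers: list[FDOffer], amount: int,
                   tenure_months: int) -> list[tuple[FDOffer, float]]:
    """Keep offers the investor qualifies for, best estimated maturity first."""
    eligible = [o for o in offers
                if o.tenure_months == tenure_months and o.min_deposit <= amount]
    ranked = [(o, round(maturity_value(amount, o.annual_rate_pct, o.tenure_months), 2))
              for o in eligible]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical offers, as if already fetched from each bank's API.
    offers = [
        FDOffer("Bank A", 7.0, 12, 10_000),
        FDOffer("Bank B", 7.25, 12, 500_000),  # minimum deposit too high
        FDOffer("Bank C", 6.8, 12, 1_000),
        FDOffer("Bank D", 7.5, 24, 5_000),  # wrong tenure
    ]
    for offer, value in compare_offers(offers, amount=100_000, tenure_months=12):
        print(f"{offer.bank}: {offer.annual_rate_pct}% -> {value:,.2f}")
```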

What are the realistic prospects for grid-independent AI data centers in the UK?

Colocation (colo) companies looking to develop in the UK are already evaluating on-site gas engine power generation and CHP (combined heat and power). To date, UK CHP projects have been hampered by a lack of grid capacity, and microgrid developments are viewed as a solution to this. CHP and microgrids should also make data center developments more appealing to local government planning departments. ... Data center developments have hit front-line politics, with Rachel Reeves, the new UK Labour government’s Chancellor of the Exchequer (finance minister), citing data center infrastructure and planning law reform as critical to growing the country’s economy. Some projects that were denied planning permission look likely to be reconsidered, with reports that Deputy Prime Minister Angela Rayner had already “recovered two planning appeals for data centers in Buckinghamshire and Hertfordshire”. It seems clear that any realistic chance of meeting data center capacity demand for AI, cloud, and other digital services will require on-site power generation in some form.

Why Every IT Leader Needs a Team of Trusted Advisors

When seeking advisors, look for individuals with the time and willingness to join your kitchen cabinet, Kelley says. "Be mindful of their schedules and obligations, since they are doing you a favor," he notes. Additionally, if you're offering any perks, such as paid meals, travel reimbursement, or direct monetary payments, let them know upfront. Such bonuses are relatively rare, however. "More than likely, you’re talking about individual or small-group phone calls or meetings." Above all, be honest and open with your team members. "Let them know what kind of help you need and the time frame you are working under," Kelley says. "If you've heard different or contradictory advice from other sources, bring it up and get their reaction," he recommends. Keep in mind that an advisory team is a two-way relationship. Kelley recommends personalizing each connection with an occasional handwritten note, book, lunch, or ticket to a concert or sporting event. If you decide to ignore their input or advice, however, you need to explain why, he suggests. Otherwise, they might conclude that being a team participant is a waste of time. Also be sure to help your team members whenever they need advice or support.

Why CI and CD Need to Go Their Separate Ways

Continuous promotion is a concept designed to bridge the gap between CI and CD, addressing the limitations of traditional CI/CD pipelines when used with modern technologies like Kubernetes and GitOps. The idea is to insert an intermediary step that focuses on promotion of artifacts based on predefined rules and conditions. This approach allows more granular control over the deployment process, ensuring that artifacts are promoted only when they meet specific criteria, such as passing certain tests or receiving necessary approvals. By doing so, continuous promotion decouples the CI and CD processes, allowing each to focus on its core responsibilities without overextension. ... Introducing a systematic step between CI and CD ensures that only qualified artifacts progress through the pipeline, reducing the risk of faulty deployments. This approach allows the implementation of detailed rule sets, which can include criteria such as successful test completions, manual approvals or compliance checks. As a result, continuous promotion provides greater control over the deployment process, enabling teams to automate complex decision-making processes that would otherwise require manual intervention.

CIOs listen up: either plan to manage fast-changing certificates, or fade away

Even when organizations finally decide to set policies and standardize security for new deployments, mitigating the existing deployments is a huge effort, and in the modern stack, there’s no dedicated operations team, he says. That makes it more important for CIOs to take ownership of the problem, Cairns points out. “Especially in larger, more complex and global organizations, the magnitude of trying to push these things through the organization is often underestimated,” he says. “Some of that is having a good handle on the culture and how to address these things in terms of messaging, communications, enforcement of the right policies and practices, and making sure you’ve got the proper stakeholder buy-in at the various points in this process: a lot of governance aspects.” ... Many large organizations will soon need to revoke and reprovision TLS certificates at scale. One in five Fortune 1000 companies use Entrust as their certificate authority, and from November 1, 2024, Chrome will follow Firefox in no longer trusting TLS certificates from Entrust because of a pattern of compliance failures, which the CA argues were, ironically, sometimes caused by enterprise customers asking for more time to deal with revocation.
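
Reprovisioning at scale starts with knowing which endpoints are affected. As a rough sketch, the Python below triages certificates in the dictionary format returned by the standard library's `ssl.SSLSocket.getpeercert()`. Two assumptions are labeled in the code: an issuer counts as Entrust if its `organizationName` contains "Entrust" (a heuristic), and the certificate's `notBefore` date stands in for its issuance date when compared with the November 1, 2024 cutoff mentioned above. The category names returned by `classify` are hypothetical.

```python
import socket
import ssl
from datetime import datetime, timezone

# Assumption: the distrust applies to certificates issued on or after this instant (UTC).
CUTOFF = datetime(2024, 11, 1, tzinfo=timezone.utc).timestamp()


def issuer_org(cert: dict) -> str:
    """Return the issuer's organizationName from a getpeercert()-style dict."""
    # "issuer" is a tuple of RDNs, each a tuple of (attribute, value) pairs.
    issuer = dict(rdn[0] for rdn in cert.get("issuer", ()))
    return issuer.get("organizationName", "")


def classify(cert: dict) -> str:
    """Heuristic triage: 'ok', 'entrust-existing', or 'entrust-distrusted'."""
    if "entrust" not in issuer_org(cert).lower():
        return "ok"
    # Assumption: notBefore approximates when the certificate was issued.
    issued = ssl.cert_time_to_seconds(cert["notBefore"])
    # 'entrust-existing' certificates still need a new CA at their next renewal.
    return "entrust-distrusted" if issued >= CUTOFF else "entrust-existing"


def fetch_peer_cert(host: str, port: int = 443) -> dict:
    """Fetch a live server's certificate (needs network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Against a live inventory, `classify(fetch_peer_cert(host))` would be run for each hostname; keeping fetching separate from classifying lets the same triage logic run over certificate data already stored in an inventory system.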

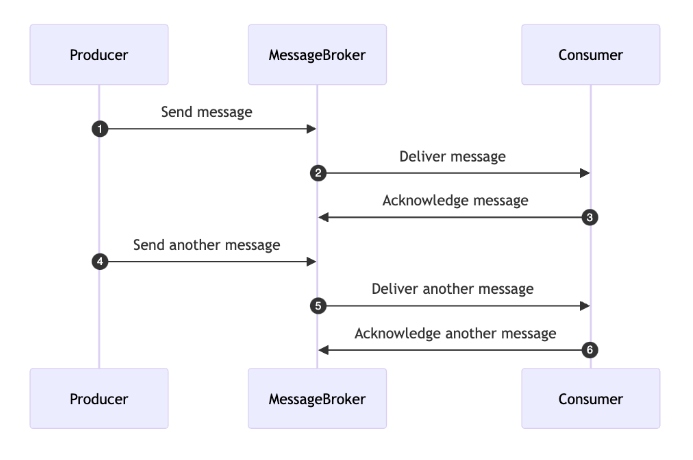

Effortless Concurrency: Leveraging the Actor Model in Financial Transaction Systems

In a financial transaction system, the data flow for handling inbound payments involves multiple steps and checks to ensure compliance, security, and accuracy. However, potential failure points exist throughout this process, particularly when external systems impose restrictions or when the system must dynamically decide on the course of action based on real-time data. ...

Implementing distributed locks is inherently more complex, often requiring external systems like ZooKeeper, Consul, Hazelcast, or Redis to manage the lock state across multiple nodes. These systems need to be highly available and consistent to prevent the distributed lock mechanism from becoming a single point of failure or a bottleneck. ... In this message-based model, communication between different parts of the system occurs through messages. This approach enables asynchronous communication, decoupling components and enhancing flexibility and scalability. Messages are managed through queues and message brokers, which ensure orderly transmission and reception of messages.
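
Distributed locks guard state that many workers can touch; the actor model sidesteps them by giving each piece of state a single owner that processes its mailbox one message at a time. The sketch below uses Python's `asyncio` to model one hypothetical `AccountActor` per account. The message names (`credit`, `debit`, `stop`) and the reply-future ("ask") pattern are illustrative assumptions, not taken from the article, and a production actor system would also distribute actors across nodes, which this single-process sketch does not attempt.

```python
import asyncio


class AccountActor:
    """Hypothetical actor that exclusively owns one account's balance."""

    def __init__(self, balance: int = 0) -> None:
        self._balance = balance
        self._mailbox: asyncio.Queue = asyncio.Queue()

    async def run(self) -> None:
        # Messages are handled strictly one at a time, so the balance never
        # needs a lock: nothing else reads or writes it.
        while True:
            op, amount, reply = await self._mailbox.get()
            if op == "stop":
                reply.set_result(self._balance)
                return
            if op == "credit":
                self._balance += amount
                reply.set_result(True)
            elif op == "debit" and amount <= self._balance:
                self._balance -= amount
                reply.set_result(True)
            else:
                reply.set_result(False)  # overdraft or unknown message

    async def ask(self, op: str, amount: int = 0):
        """Send a message to the mailbox and wait for the actor's reply."""
        reply = asyncio.get_running_loop().create_future()
        await self._mailbox.put((op, amount, reply))
        return await reply


async def demo() -> tuple[int, int]:
    actor = AccountActor(balance=100)
    worker = asyncio.create_task(actor.run())
    # 50 concurrent debits of 10 against a balance of 100.
    results = await asyncio.gather(*(actor.ask("debit", 10) for _ in range(50)))
    final_balance = await actor.ask("stop")
    await worker
    return sum(results), final_balance


if __name__ == "__main__":
    print(asyncio.run(demo()))  # (10, 0)
```

Because the actor serializes the 50 debits, exactly 10 succeed and the balance ends at 0, with no lock anywhere in the code.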

... Ensuring message durability is crucial in financial transaction systems because it allows the system to replay a message if the processor fails to handle the command due to issues like external payment failures, storage failures, or network problems.
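
Durability and replay can be illustrated with an append-only log in which a message leaves the pending set only after it has been acknowledged. The Python below is a simplified sketch: `DurableLog` and `process_pending` are hypothetical names, the log is a local JSON-lines file rather than a real message broker, and torn writes after a crash are not handled. Because a message can be delivered more than once (at-least-once delivery), handlers such as payment processors should be idempotent.

```python
import json
import os
import tempfile
from typing import Callable


class DurableLog:
    """Append-only message log: a message is forgotten only once it is acked."""

    def __init__(self, path: str) -> None:
        self.path = path

    def _append(self, record: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
            f.flush()
            os.fsync(f.fileno())  # force the record to disk before returning

    def publish(self, msg_id: str, payload: dict) -> None:
        self._append({"type": "msg", "id": msg_id, "payload": payload})

    def ack(self, msg_id: str) -> None:
        self._append({"type": "ack", "id": msg_id})

    def pending(self) -> list[dict]:
        """Rebuild state from the log: every published but unacked message."""
        if not os.path.exists(self.path):
            return []
        messages: dict[str, dict] = {}
        acked: set[str] = set()
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                if record["type"] == "msg":
                    messages[record["id"]] = record["payload"]
                else:
                    acked.add(record["id"])
        return [{"id": i, "payload": p} for i, p in messages.items() if i not in acked]


def process_pending(log: DurableLog, handler: Callable[[dict], None]) -> None:
    """Deliver unacked messages in order; ack only after the handler succeeds."""
    for message in log.pending():
        try:
            handler(message["payload"])
        except Exception:
            continue  # leave it unacked so the next run replays it
        log.ack(message["id"])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = DurableLog(os.path.join(tmp, "payments.log"))
        log.publish("p1", {"amount": 100})
        process_pending(log, lambda p: print("settled", p["amount"]))
        print(log.pending())  # []
```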

Hundreds of LLM Servers Expose Corporate, Health & Other Online Data

Flowise is a low-code tool for building all kinds of LLM applications. It's backed by Y Combinator and sports tens of thousands of stars on GitHub. Whether it be a customer support bot or a tool for generating and extracting data for downstream programming and other tasks, the programs that developers build with Flowise tend to access and manage large quantities of data. It's no wonder, then, that the majority of Flowise servers are password-protected. ... Leaky vector databases are even more dangerous than leaky LLM builders, as they can be tampered with in ways that do not alert the users of AI tools that rely on them. For example, instead of just stealing information from an exposed vector database, a hacker can delete or corrupt its data to manipulate its results. One could also plant malware within a vector database such that when an LLM program queries it, it ends up ingesting the malware. ... To mitigate the risk of exposed AI tooling, Deutsch recommends that organizations restrict access to the AI services they rely on, monitor and log the activity associated with those services, protect sensitive data trafficked by LLM apps, and always apply software updates where possible.
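
One way to make tampering with a vector store detectable, rather than silent, is to sign each record when it is written and verify the signature when it is read back. The sketch below is an illustration under stated assumptions, not something the excerpt prescribes: it computes an HMAC-SHA256 over a canonical JSON encoding of each record's ID, text, and embedding, with the key held by the application outside the database. The function names are hypothetical. This approach flags modified or injected records but cannot detect deleted ones, and it protects nothing if the key itself leaks.

```python
import hashlib
import hmac
import json


def record_signature(key: bytes, record_id: str, text: str, embedding: list[float]) -> str:
    """HMAC over a canonical JSON encoding of the record's contents."""
    canonical = json.dumps(
        {"id": record_id, "text": text, "embedding": embedding},
        sort_keys=True, separators=(",", ":"),
    ).encode("utf-8")
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def sign(key: bytes, record: dict) -> dict:
    """Attach a signature before the record is written to the vector store."""
    sig = record_signature(key, record["id"], record["text"], record["embedding"])
    return {**record, "sig": sig}


def verified(key: bytes, results: list[dict]) -> tuple[list[dict], list[str]]:
    """Split query results into trusted records and IDs that fail verification."""
    good, tampered = [], []
    for r in results:
        expected = record_signature(key, r["id"], r["text"], r["embedding"])
        # Compare as bytes so an odd stored value cannot raise inside compare_digest.
        if hmac.compare_digest(expected.encode(), str(r.get("sig", "")).encode("utf-8")):
            good.append(r)
        else:
            tampered.append(r["id"])
    return good, tampered
```

Only records in the first list would be passed to the LLM as context; the second list is a natural thing to log and alert on, in line with the monitoring recommendation above.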

Generative AI vs. Traditional AI

Traditional AI, often referred to as “symbolic AI” or “rule-based AI,” emerged in the mid-20th century. It relies on predefined rules and logical reasoning to solve specific problems. These systems operate within a rigid framework of human-defined guidelines and are adept at tasks like data classification, anomaly detection, and decision-making based on historical data. In sharp contrast, generative AI is a more recent development that leverages advanced machine learning (ML) techniques to create new content. This form of AI does not follow predefined rules but learns patterns from vast datasets to generate novel outputs such as text, images, music, and even code. ... Traditional AI relies heavily on rule-based systems and predefined models to perform specific tasks. These systems operate within narrowly defined parameters, focusing on pattern recognition, classification, and regression through supervised learning techniques. Data fed into these models is typically structured and labeled, allowing for precise predictions or decisions based on historical patterns. In contrast, generative AI uses neural networks and advanced ML models to produce human-like content. This approach leverages unsupervised or semi-supervised learning techniques to understand underlying data distributions.
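
The contrast above, between rules a human writes and patterns a model learns from data, can be made concrete with a toy example. In the sketch below, `rule_based_flag` encodes hypothetical thresholds chosen by hand, while `learn_bigrams` and `generate` learn word-to-word transitions from a small corpus and sample new sequences from them. A bigram model is a drastically simplified stand-in for the neural networks the excerpt describes; it only illustrates "learn a distribution, then sample from it," not how production generative AI works.

```python
import random
from collections import defaultdict


def rule_based_flag(txn: dict) -> bool:
    """Traditional, rule-based: a human wrote these (hypothetical) thresholds."""
    return txn["amount"] > 10_000 or txn["country"] not in {"US", "CA"}


def learn_bigrams(corpus: list[str]) -> dict[str, list[str]]:
    """Learn which word follows which from example text; no rules are written."""
    model: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        words = ["<s>", *sentence.split(), "</s>"]
        for prev, nxt in zip(words, words[1:]):
            model[prev].append(nxt)  # duplicates encode observed frequency
    return dict(model)


def generate(model: dict[str, list[str]], rng: random.Random, max_words: int = 20) -> str:
    """Sample a new word sequence from the learned transition frequencies."""
    word, out = "<s>", []
    while len(out) < max_words:
        word = rng.choice(model[word])
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)


if __name__ == "__main__":
    print(rule_based_flag({"amount": 50_000, "country": "US"}))  # True
    corpus = ["the model writes new text", "the model learns patterns", "new patterns emerge"]
    print(generate(learn_bigrams(corpus), random.Random(0)))
```

The generated sentence may never appear in the corpus, yet every word-to-word step in it was observed in the training data, which is the sense in which such a model produces novel output from learned patterns.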

Quote for the day:

"Opportunities don't happen. You create them." -- Chris Grosser
