U.S. Treasury: Regulators should back off FinTech, allow innovation

"Banks are very adept at innovating and experimenting with new products and services. The catch is the implementation of those products and services to ensure data privacy and security; it may take months or longer to prove data privacy and security efficacy," Steven D’Alfonso, a research director with IDC Financial Insight said. The federal agency, however, specifically identified a need to remove legal and regulatory uncertainties that hold back financial services companies and data aggregators from establishing data-sharing agreements that would effectively move firms away from screen-scraping customer data to more secure and efficient methods of data access. Today, many third-party data aggregators unable to access consumer data via APIs resort to the more arduous method of asking consumers to provide account login credentials (usernames and passwords) in order to use fintech apps. "Consumers may or may not appreciate that they are providing their credentials to a third-party, and not logging in directly to their financial services company," the report noted.

Are microservices about to revolutionize the Internet of Things?

Individual edge IoT devices typically need to be extremely power efficient and resource efficient, with the smallest possible memory footprint and consuming minimal CPU cycles. Microservices promise to help make that possible. “Microservices in an edge IoT environment can also be reused by multiple applications that are running in a virtualized edge,” Ouissal explained. “Video surveillance systems and a facial recognition system running at the edge could both use the microservices on a video camera, for example.” Microservices also bring distinct security advantages to IoT and edge computing, Ouissal claimed. Microservices can be designed to minimize their attack surface by running only specific functions and running them only when needed, so fewer unused functions remain “live” and therefore attackable. Microservices can also provide a higher level of isolation for edge and IoT applications: In the camera function described above, hacking the video streaming microservice on one app would not affect other streaming services, the app, or any other system.

Companies may be fooling themselves that they are GDPR compliant

A closer look at the steps taken by many of these companies reveals a GDPR strategy that is only skin deep and fails to identify, monitor or delete all of the Personally Identifiable Information (PII) data they have stored. Such a shallow approach presents significant risks, as these businesses may be oblivious to much of the PII data that they hold and would have difficulty finding and deleting it, if requested to do so. They would also be unable to provide the regulatory authorities with GDPR-mandated information about data implicated in a breach within 72 hours of its discovery, which is another GDPR requirement. To address these risks, companies need a holistic strategy to manage their data: one that automates the process of profiling, indexing, discovering, monitoring, moving and deleting all of their data as necessary, even if it’s unstructured or perceived to be low-risk. This will significantly reduce their GDPR and other regulatory compliance risks, while simultaneously allowing them to make greater use of the data in ways that create business value.

Banks lead in digital era fraud detection

Banks have recognised the need to have an omni-channel view of the different interactions and do their fraud risk assessments across the various channels, he said, because not only are cyber fraudsters working across multiple channels, but so are ordinary consumers, who may start something on a laptop, continue it on the phone and perhaps complete it through a virtual assistant while travelling in a car. “This is true for all consumer-facing businesses that have different channels through which they interact with consumers, and they should follow the banks’ lead and adopt an omni-channel approach to doing their risk profiling and gain from the visibility you have in each of the channels,” said Cohen. “In enterprise security, we were talking about breaking down channels years ago, and now we are starting to talk about it in the context of fraud, so that fraud assessments are carried out in the light of what is going on across all the available channels of interaction, especially as interactions become increasingly through third parties.”

Over 9 out of 10 people are ready to take orders from robots

Perhaps organisations are not doing enough to prepare the workforce for AI. Almost all (90 percent) of HR leaders and over half of employees (51 percent) reported that they are concerned they will not be able to adjust to the rapid adoption of AI as part of their job, and are not empowered to address an emerging AI skill gap in their organization. Almost three quarters (72 percent) of HR leaders noted that their organization does not provide any form of AI training program. Other major barriers to AI adoption in the enterprise are cost (74 percent), failure of technology (69 percent), and security risks (56 percent). But a failure to adopt AI will have negative consequences too. Almost four out of five (79 percent) HR leaders and 60 percent of employees believe that it will impact their careers, colleagues, and overall organization. Emily He, SVP of Human Capital Management Cloud Business Group at Oracle, said: "To help employees embrace AI, organizations should partner with their HR leaders to address the skill gap and focus their IT strategy on embedding simple and powerful AI innovations into existing business processes."

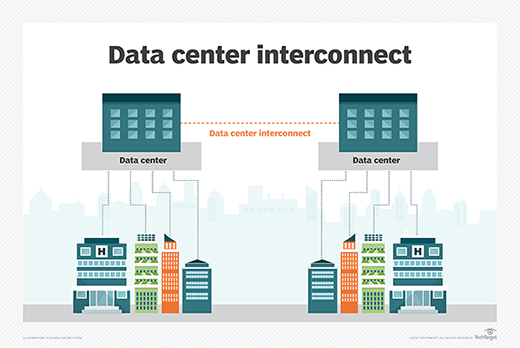

Network capacity planning in the age of unpredictable workloads

When an application or a component of a distributed application moves or scales up, it needs a new IP address and capacity to route traffic to that new address. Every decision around workload portability and elasticity generates traffic on the data center network and the cloud gateway(s) involved. A workload's address determines how workflows through it connect, which defines the pathways and where to focus network capacity plans. To plan realistic capacity requirements, formal network engineers dive into the complex math of the Erlang B formula; if you are inclined to learn it, check out James Martin's older book, Systems Analysis for Data Transmission. However, there are also easier rules of thumb. As a connection congests, it increases the risk of delay and packet loss in a nonlinear fashion. This tenet contributes to network capacity planning fundamentals. Problems ramp up slowly until the network reaches about 50% utilization; issues rise rapidly after that threshold.
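For readers curious about the Erlang B formula mentioned above, here is a minimal Python sketch. It is not taken from the article. Erlang B gives the probability that a new request is blocked when an offered load of E erlangs is served by m channels: B(E, m) = (E^m / m!) / (sum of E^k / k! for k = 0..m). The function names `erlang_b` and `channels_needed` are hypothetical, and treating network connections as "channels" is a simplifying assumption borrowed from the formula's telephony origins.

```python
def erlang_b(offered_load: float, channels: int) -> float:
    """Blocking probability for `offered_load` erlangs on `channels` channels.

    Uses the standard recurrence B(E, 0) = 1 and
    B(E, m) = E * B(E, m-1) / (m + E * B(E, m-1)),
    which avoids computing large factorials directly.
    """
    if offered_load < 0 or channels < 0:
        raise ValueError("offered_load and channels must be non-negative")
    blocking = 1.0  # with zero channels, every request is blocked
    for m in range(1, channels + 1):
        blocking = (offered_load * blocking) / (m + offered_load * blocking)
    return blocking


def channels_needed(offered_load: float, target_blocking: float) -> int:
    """Smallest channel count whose blocking probability is <= target_blocking."""
    if not 0 < target_blocking <= 1:
        raise ValueError("target_blocking must be in (0, 1]")
    m = 0
    while erlang_b(offered_load, m) > target_blocking:
        m += 1
    return m


if __name__ == "__main__":
    # Hypothetical planning question: how many channels keep blocking
    # at or below 1 percent for an offered load of 10 erlangs?
    print(channels_needed(10.0, 0.01))
```

The recurrence form is chosen because the factorial form overflows or loses precision quickly as the channel count grows.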

Data recovery do's and don'ts for IT teams

Many, but not all, modern backup applications perform bit-by-bit checks to ensure the data being read from primary storage does in fact match the data being written to backup storage. Add that to your backup software shopping list, Verma advised. "The other thing I've noticed the industry's heading over to in the last couple of years is away from this notion of always having to do a full restore," Verma said. For example, you could restore a single virtual machine or an individual database table, rather than a whole server or the entire database itself. That's easy to forget when under pressure from angry users who want their data back immediately. "The days of doing a full recovery are gone, if you will. It's get me to what I need as quickly as possible," Verma said. "I would say that's the state of the industry." A virtual machine can actually be booted directly from its backup disk, which is useful for checking to see if the necessary data is there but not very realistic for a large-scale recovery, Verma added.
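The bit-by-bit check described above can be illustrated with a short Python sketch. This is only an illustration under stated assumptions, not how any particular backup product works: it compares a single source file against its backup copy byte for byte, using the standard library's `filecmp.cmp` with `shallow=False` so that file contents, not just size and timestamps, are compared. The function name `backup_matches` is hypothetical.

```python
import filecmp
from pathlib import Path
from typing import Union

PathLike = Union[str, Path]


def backup_matches(source: PathLike, backup: PathLike) -> bool:
    """Return True only if the backup copy is byte-for-byte identical to the source.

    shallow=False forces filecmp to read and compare the file contents
    instead of trusting matching os.stat() signatures.
    """
    return filecmp.cmp(str(source), str(backup), shallow=False)
```

A real backup tool would run a check like this (or a checksum comparison) across every file or block it writes; the single-file form here just shows the idea.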

Web Application Security Thoughts

Web application security is a branch of information security that deals specifically with the security of websites, web applications and web services. At a high level, web application security draws on the principles of application security but applies them specifically to Internet and Web systems. Web applications should follow a system for security testing. ... The company or the project owner has to decide which remediation will be the most effective solution for the application, because each application has a different purpose and different user groups. For a financial application, you have to be more careful about transactions and the money recorded in the database, so both application security and database security are important. If we deal with card data, then we must meet the PCI-recommended guidelines to mitigate or prevent fraud. In this case, the OWASP Top 10 vulnerabilities should be addressed properly. Nowadays, you cannot depend on the application alone to protect it; you also have to depend on a PCI-recommended next-generation firewall or WAF. The next-generation firewall will do the following things for a web application.

DDoS attackers increasingly strike outside of normal business hours

While attack volumes increased, researchers recorded a 36% decrease in the overall number of attacks. There was a total of 9,325 attacks during the quarter: an average of 102 attacks per day. While the number of attacks decreased overall, possibly as a result of DDoS-as-a-service website Webstresser being closed down following an international police operation, both the scale and complexity of the attacks increased. The LSOC registered a 50% increase in hyper-scale attacks (80 Gbps+). The most complex attacks seen used 13 vectors in total. Link11’s Q2 DDoS Report revealed that threat actors targeted organisations most frequently between 4pm CET and midnight Saturday through to Monday, with businesses in the e-commerce, gaming, IT hosting, finance, and entertainment/media sectors being the most affected. The report reveals that high volume attacks were ramped up via Memcached reflection, SSDP reflection and CLDAP, with the peak attack bandwidth recorded at 156 Gbps.

AIOps platforms delve deeper into root cause analysis

The differences lie in the AIOps platforms' deployment architectures and infrastructure focus, said Nancy Gohring, an analyst with 451 Research who specializes in IT monitoring tools and wrote a white paper that analyzes FixStream's approach. "Dynatrace and AppDynamics use an agent on every host that collects app-level information, including code-level details," Gohring said. "FixStream uses data collectors that are deployed once per data center, which means they are more similar to network performance monitoring tools that offer insights into network, storage and compute instead of application performance." FixStream integrates with both Dynatrace and AppDynamics to join its infrastructure data to the APM data those vendors collect. Its strongest differentiation is in the way it digests all that data into easily readable reports for senior IT leaders, Gohring said. "It ties business processes and SLAs [service-level agreements] to the performance of both apps and infrastructure," she said.

Quote for the day:

"It is a terrible thing to look over your shoulder when you are trying to lead and find no one there." -- Franklin D. Roosevelt
