I have been in the space of artificial intelligence for a while and am aware that multiple classifications, distinctions, landscapes, and infographics exist to represent and track the different ways to think about AI. However, I am not a big fan of those categorization exercises, mainly because I tend to think that the effort of classifying dynamic data points into predetermined fixed boxes is often not worth the benefits of having such a “clear” framework. I also believe this landscape is useful for people new to the space to grasp at-a-glance the complexity and depth of this topic, as well as for those more experienced to have a reference point and to create new conversations around specific technologies. What follows is then an effort to draw an architecture to access knowledge on AI and follow emergent dynamics, a gateway of pre-existing knowledge on the topic that will allow you to scout around for additional information and eventually create new knowledge on AI. I call it the AI Knowledge Map (AIKM).

Using innovation labs and accelerators as a form of R&D to learn about certain industries is a great idea, as long as leaders realise that R&D and innovation are not the same thing. Innovation is the combination of clever new ideas and technologies with sustainably profitable business models. So the question still remains - as we work with startups or internal teams to learn about new industries, how are we going to convert those learnings into long-term revenues for the company? We have to design our labs and accelerators to be able to extract insights and create value. Other companies are very explicit about using innovation labs to accomplish their bottom line goals. These leaders are focused on balancing their portfolio, adding new business models and revenues to the company. The biggest challenge these leaders face is what to do with successful innovations. Not every product or service from the innovation lab or accelerator will be successful. But once we have something promising, we need to figure out a way to scale that product or service.

The Case for Work from Home and Flexible Working

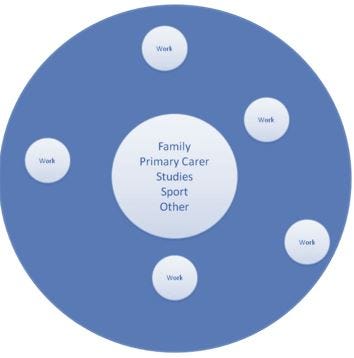

To attract and retain employees under the old paradigm, the business must deliver an EVP that provides career opportunity and professional development, regularly signposted by role expansion and salary growth. ... An alternative operating model is required for the front line: one that supports a new recruitment promise based on flexible working arrangements that allow employees to manage their work-life priorities. ... The flexible working operating models will be designed to reflect our evolving understanding of what motivates employees; this also forms an important part of the EVP. It is based on engaging the intrinsic motivators of autonomy, mastery and purpose. ... Flexible working enables the front line to be deployed dynamically to where customers choose to be, whether that be in store, online or on the phones. Creating opportunities to vary not just “where and when I work” but also “what I do” empowers our employees to genuinely design their own work experience. Role variation provides a unique and highly competitive dimension to the EVP, and it enhances the business's resilience to uncertainty.

Data management: Using NoSQL to drive business transformation

Because of our core architecture, it is very important to our customers, who are deploying applications, that they can do that at near real-time speed. So, when we think about the capabilities that we have layered together inside of our data platform, we're unlocking the power of NoSQL, but doing so in a way that enables application developers to very quickly learn the platform, and helps them become efficient in picking up applications to take advantage of it. Now, that core platform can run at any point in the cloud -- everything from the major public clouds to customers' private data centres -- and it can also run on premise. Now we've extended the power of the platform out to the edge. We have a solution that we have called Couchbase Lite. This is small enough that it can run inside an application on a mobile device, and you still get the full power of the platform, including the data structure and our ability to query and, very soon, you will be able to run operational analytics on top of that.

Balancing innovation and compliance for business success

Whether it is the need for greater transparency with user data, improved reporting methods for the regulator or enhanced security measures, any new technology being introduced will need to be carefully assessed so that the business recognises and understands whether it is compliant with current legislation. While staff trials can often help to raise any last-minute concerns about the functionality of new IT solutions, management also needs to include the IT and compliance teams in this activity. In many cases, the IT department is left out of discussions regarding data management and compliance, making it hard for them to identify any potential conflicts in this area. In order to address this issue, IT needs to have a greater understanding of the wider business. In particular, the IT department needs to be as involved in the company’s wider compliance measures as it is with particular applications or systems, as this will make it much easier to establish what controls need to be put in place.

It’s Time for Token Binding

What is so great about token binding, you might ask? Token binding makes cookies, OAuth access tokens and refresh tokens, and OpenID Connect ID Tokens unusable outside of the client-specific TLS context in which they were issued. Normally such tokens are “bearer” tokens, meaning that whoever possesses the token can exchange the token for resources. Token binding improves on this pattern by layering in a confirmation mechanism that tests cryptographic material collected at the time of token issuance against cryptographic material collected at the time of token use. Only the right client, using the right TLS channel, will pass the test. This process of forcing the entity presenting the token to prove itself is called “proof of possession”. It turns out that cookies and tokens can be used outside of the original TLS context in all sorts of malicious ways: hijacked session cookies, leaked access tokens, or sophisticated man-in-the-middle (MiTM) attacks. This is why the IETF OAuth 2 Security Best Current Practice draft recommends token binding, and why we just recently doubled the rewards on our identity bounty program.
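To make the issuance-versus-use check concrete, here is a minimal Python sketch of the proof-of-possession idea. It is not the Token Binding wire protocol: the names `issue_token`, `verify_token` and `SERVER_SECRET` are hypothetical, and the `client_key` bytes simply stand in for the key material a real server would obtain from the client's TLS connection. In the actual protocol the client also signs data derived from the TLS session to prove it holds the private key; that step is omitted here.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side signing key (an assumption for this sketch).
SERVER_SECRET = secrets.token_bytes(32)


def issue_token(user: str, client_key: bytes) -> str:
    """Issue a token that embeds a hash of the key seen at issuance time."""
    binding = hashlib.sha256(client_key).hexdigest()
    payload = f"{user}.{binding}"
    sig = hmac.new(SERVER_SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{sig}"


def verify_token(token: str, client_key: bytes) -> bool:
    """Accept the token only if it is authentic AND bound to the presented key."""
    try:
        user, binding, sig = token.rsplit(".", 2)
    except ValueError:
        return False  # malformed token
    payload = f"{user}.{binding}"
    expected = hmac.new(SERVER_SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False  # token was tampered with or not issued by this server
    # Compare material recorded at issuance with material presented at use.
    presented = hashlib.sha256(client_key).hexdigest()
    return hmac.compare_digest(binding, presented)


if __name__ == "__main__":
    legit_key = b"client-a-public-key"      # placeholder key bytes
    attacker_key = b"attacker-public-key"   # placeholder key bytes
    token = issue_token("alice", legit_key)
    print(verify_token(token, legit_key))     # same client: accepted
    print(verify_token(token, attacker_key))  # stolen token, other key: rejected
```

A plain bearer token would pass the second check as well, because nothing ties it to the channel it was issued on. The binding hash is what turns a stolen token into a useless one.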

Artificial General Intelligence Is Here, and Impala Is Its Name

AGI is a single intelligence or algorithm that can learn multiple tasks and exhibits positive transfer when doing so, sometimes called meta-learning. During meta-learning, the acquisition of one skill enables the learner to pick up another new skill faster because it applies some of its previous “know-how” to the new task. In other words, one learns how to learn — and can generalize that to acquiring new skills, the way humans do. This has been the holy grail of AI for a long time. As it currently exists, AI shows little ability to transfer learning towards new tasks. Typically, it must be trained anew from scratch. For instance, the same neural network that makes recommendations to you for a Netflix show cannot use that learning to suddenly start making meaningful grocery recommendations. Even these single-instance “narrow” AIs can be impressive, such as IBM’s Watson or Google’s self-driving car tech.

David Chamberlain, general manager of Licensing Dashboard, says IT can sometimes be over-cautious. “IT people can be worried about things like an Exchange server going down, so there is a tendency to over-provision, and then people will forget to decommission the cloud service when it is no longer needed,” he says. “The cloud is very elastic and is easy to throttle up, which is a big change from on-premise servers.” For Witt, virtual machine (VM) sprawl has always been an issue on-premise, even when companies have had good processes in place. “It is always easier to spin a VM up than it is to decommission it,” he says. “Most datacentres will have a significant proportion of unused VMs – in my experience, it’s around 30-40%.” While on-premise, only a fraction of storage and compute is consumed for these unused VMs, in the cloud, the VM is charged per second, he points out. “You’re charged for the VM size regardless of whether it is fully utilised,” he says.

The ultimate goal of quantum information programming – a device capable of being reprogrammed to perform any given function – is one step closer following the design of a new-generation silicon chip that can control two qubits of information simultaneously. The invention, by a team led by Xiaogang Qiang from the Quantum Engineering Technology Labs at the University of Bristol in the UK, represents a significant step towards the development of practical quantum computing. In a paper published in the journal Nature Photonics, Qiang and colleagues report proof-of-concept of a fully programmable two-qubit quantum processor “enabling universal two-qubit quantum information processing in optics”. The invention overcomes one of the primary obstacles facing the development of quantum computers. Using current technology, operations requiring just a single qubit (a unit of information that is in a superposition of simultaneous “0” and “1”) can be carried out with high precision.

How data breaches are affecting the retail industry

From phishing, vishing and smishing to acquiring consumers’ identification details, or full-blown criminal hacking, the flow of fresh news stories detailing the latest attacks clearly demonstrates the scale of this growing issue. Indeed, such are the risks of data breaches that they are no longer viewed as IT issues, but as organisational issues that can derail day-to-day operations and have long-term reputational impact. So, what are the real business costs of a data breach? According to the 2018 Cost of a Data Breach Study by Ponemon Institute, the average cost of a data breach is $3.86 million, which is a 6.4% increase on the 2017 cost of $3.62 million. ... The harsh reality is that no organisation can ever deem itself completely safe and at zero risk of a data breach. However, what you can – and should – do is take a critical look at your infrastructure, processes, systems and controls, and ensure that you have taken steps to address risks and know what to do if you suffer a breach.

Quote for the day:

"Not all readers are leaders, but all leaders are readers." -- Harry S. Truman
