In any organization, you will find two kinds of processes: those that are structured and those that are more open-ended. Structured processes, which are typically followed rigorously, account for roughly two-thirds of all operations at an organization. These are generally the “life support” functions of any company or large group—things like leave management, attendance, and procurement. ... To avoid chaos, this workflow should remain consistent from week to week, and even quarter to quarter. Given the clear structure and obvious objectives, these processes can be handled nicely by a low-code solution. But open-ended processes are not so easy to define, and the goals aren’t always as clear. Imagine hosting a one-time event. You may know a little about what the end result should look like, but you can’t predefine the planning process because you don’t orchestrate these events all the time. These undefined processes, like setting an agenda for an offsite meeting, tend to be much more collaborative, and they often evolve organically as inputs from multiple stakeholders shape the space.

Adopt these continuous delivery principles organization-wide

Upper management should advocate for continuous delivery principles and enforce best practices. Once an organization has set up strong CD pipelines and reaps the benefits, resist any pressure to fall back to older, less automated deployment models just because of team conflicts or a lack of oversight. If a group must work closely together but cannot agree on continuous delivery practices, it's critical that upper management understands CD and its importance to software delivery, pushing the continuous agenda forward and encouraging adoption. Regulation is rarely considered a driver of innovation, so before your team adopts continuous delivery practices, understand any regulatory requirements the organization is under. No one wants to put together a CI/CD pipeline and then have the legal department shut it down. An auditor needs to be informed about and understand, for example, the automated testing procedure in a continuous delivery pipeline. And the simple fact that a process is repeatable does not mean it adheres to the regulatory rules.

Incomplete visibility a top security failing

While many security teams implement good basic protections around administrative privileges, the report said these low-hanging-fruit controls should be in place at more organisations, with 31% of organisations still not requiring default passwords to be changed, and 41% still not using multifactor authentication for accessing administrative accounts. Organisations can start to build up cyber hygiene by following established best practices such as the Critical Security Controls, a prioritised set of steps maintained by the CIS. Although there are 20 controls, the report said implementing just the top six establishes what CIS calls “cyber hygiene.” “Industry standards are one way to leverage the broader community, which is important with the resource constraints that most organisations experience,” said Tim Erlin, vice-president of product management and strategy at Tripwire. “It’s surprising that so many respondents aren’t using established frameworks to provide a baseline for measuring their security posture. It’s vital to get a clear picture of where you are so you can plan a path forward.”

Political Play: Indicting Other Nations' Hackers

While it's impossible to gain a complete view of these operations, FireEye suggested that they were being run much more carefully. For example, one ongoing campaign appeared to target U.S. engineering and maritime targets, and especially those connected to South China Sea issues. "From what we observed, Chinese state actors can gain access to most firms when they need to," Bryce Boland, CTO for Asia-Pacific at FireEye, told the South China Morning Post in April. "It's a matter of when they choose to and also whether or not they steal the information that is within the agreement." Now, of course, the U.S. appears to be trying to bring diplomatic pressure to bear on Russia as U.S. intelligence leaders warn that Moscow's election-interference campaigns have not diminished at all since 2016. "We have been clear in our assessments of Russian meddling in the 2016 election and their ongoing, pervasive efforts to undermine our democracy," Director of National Intelligence Dan Coats said last month

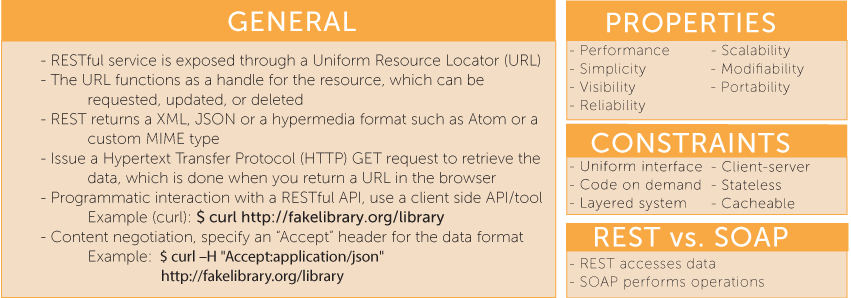

RESTful Architecture 101

When deployed correctly, it provides uniform interoperability between different applications on the internet. The term stateless is a crucial piece of this, as it allows applications to communicate agnostically. A RESTful API service is exposed through a Uniform Resource Locator (URL). This logical name separates the identity of the resource from what is accepted or returned. The URL scheme is defined in RFC 1738. The resource behind a RESTful URL must be capable of being created, requested, updated, or deleted. This set of actions is commonly referred to as CRUD (create, read, update, delete). To request and retrieve the resource, a client would issue a Hypertext Transfer Protocol (HTTP) GET request. This is the most common request and is executed every time you type a URL into a browser and hit return, select a bookmark, or click through an anchor reference link. ... An important aspect of a RESTful request is that each request contains enough state to answer the request. This allows for visibility and statelessness on the server, desirable properties for scaling systems up and for identifying what requests are being made.
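The CRUD-to-HTTP mapping and the statelessness property described above can be illustrated with a minimal Python sketch. It is not taken from the excerpted article: the `handle` function, the in-memory `Store` type, and the simplified mapping (POST creates, GET reads, PUT updates, DELETE deletes) are assumptions made purely for illustration. The handler keeps no per-client session, so every call carries everything needed to answer it.

```python
from typing import Dict, Optional, Tuple

# Hypothetical in-memory resource store keyed by URL path (illustration only).
Store = Dict[str, str]


def handle(store: Store, method: str, path: str,
           body: Optional[str] = None) -> Tuple[int, Optional[str]]:
    """Apply one self-contained request and return (status code, body).

    No session or client state is remembered between calls: the method,
    the path, and the body are the only inputs.
    """
    if method == "POST":  # Create
        if path in store:
            return 409, None  # resource already exists
        store[path] = body or ""
        return 201, store[path]
    if method == "GET":  # Read
        if path in store:
            return 200, store[path]
        return 404, None
    if method == "PUT":  # Update (simplified: only existing resources)
        if path not in store:
            return 404, None
        store[path] = body or ""
        return 200, store[path]
    if method == "DELETE":  # Delete
        if path in store:
            del store[path]
            return 204, None
        return 404, None
    return 405, None  # method not supported by this sketch
```

Because each request is self-describing, any server instance holding the same store could answer it, which is the scaling property the passage points to.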

Oracle's Database Service Offerings Could Be Its Last Best Hope For Cloud Success

All that said, if Oracle could adjust, it has the advantage of having a foothold inside the enterprise. It also claims a painless transition from on-prem Oracle database to its database cloud service, which could be attractive to a company that is considering moving to the cloud. There is also the autonomous aspect of its cloud database offerings, which promise to be self-tuning and self-healing, with automated maintenance and updates and very little downtime. Carl Olofson, an analyst with IDC who covers the database market, sees Oracle’s database service offerings as critical to its cloud aspirations, but expects business could move slowly here. “Certainly, this development (Oracle’s database offerings) looms large for those whose core systems run on Oracle Database, but there are other factors to consider, including any planned or active investment in SaaS on other cloud platforms, the overall future database strategy, the complexity of moving operations from the datacenter to the cloud

Enterprise IT struggles with DevOps for mainframe

"At companies with core back-end mainframe systems, there are monolithic apps -- sometimes 30 to 40 years old -- operated with tribal knowledge," said Ramesh Ganapathy, assistant vice president of DevOps for Mphasis, a consulting firm in New York whose clients include large banks. "Distributed systems, where new developers work in an Agile manner, consume data from the mainframe. And, ultimately, these companies aren't able to reduce their time to market with new applications." Velocity, flexibility and ephemeral apps have become the norm in distributed systems, while mainframe environments remain their polar opposite: stalwart platforms with unmatched reliability, but not designed for rapid change. The obvious answer would be a migration off the mainframe, but it's not quite so simple. "It depends on the client appetite for risk, and affordability also matters," Ganapathy said. "Not all apps can be modernized -- at least, not quickly; any legacy mainframe modernization will go on for years."

Mitigating Cascading Failure at Lyft

Cascading failure is one of the primary causes of unavailability in high throughput distributed systems. Over the past four years, Lyft has transitioned from a monolithic architecture to hundreds of microservices. As the number of microservices grew, so did the number of outages due to cascading failure or accidental internal denial of service. Today, these failure scenarios are largely a solved problem within the Lyft infrastructure. Every service deployed at Lyft gets throughput and concurrency protection automatically. With some targeted configuration changes to our most critical services, there has been a 95% reduction in load-based incidents that impact the user experience. Before we examine specific failure scenarios and the corresponding protection mechanisms, let's first understand how network defense is deployed at Lyft. Envoy is a proxy that originated at Lyft and was later open-sourced and donated to the Cloud Native Computing Foundation. What separates Envoy from many other load balancing solutions is that it was designed to be deployed in a "mesh" configuration.
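To make the idea of concurrency protection concrete, here is a minimal Python sketch of a limiter that sheds requests once too many are already in flight. It is a generic illustration of the technique, not Envoy's implementation or Lyft's configuration, neither of which the excerpt describes. The names `ConcurrencyLimiter` and `call_with_limit`, and the 503 response for shed requests, are assumptions.

```python
import threading
from typing import Callable, Tuple


class ConcurrencyLimiter:
    """Admit at most max_in_flight requests at a time (hypothetical helper)."""

    def __init__(self, max_in_flight: int) -> None:
        self._max = max_in_flight
        self._in_flight = 0
        self._lock = threading.Lock()

    def try_acquire(self) -> bool:
        """Reserve a slot if one is free; never block the caller."""
        with self._lock:
            if self._in_flight >= self._max:
                return False
            self._in_flight += 1
            return True

    def release(self) -> None:
        """Give a slot back once the request has finished."""
        with self._lock:
            if self._in_flight > 0:
                self._in_flight -= 1


def call_with_limit(limiter: ConcurrencyLimiter,
                    handler: Callable[[], str]) -> Tuple[int, str]:
    """Run handler() if there is capacity; otherwise fail fast."""
    if not limiter.try_acquire():
        # Shedding load early keeps a slow dependency from tying up
        # every caller, which is how failures cascade.
        return 503, "overloaded"
    try:
        return 200, handler()
    finally:
        limiter.release()
```

Rejecting immediately instead of queueing is the design choice that matters here: callers get a quick error they can handle, and the overloaded service is left with a bounded amount of work.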

Beyond GDPR: ePrivacy could have an even greater impact on mobile

Metadata can be used in privacy-protective ways to develop innovative services that deliver new societal benefits, such as public transport improvements and traffic congestion management. In many cases, pseudonymisation can be applied to metadata to protect the privacy rights of individuals, while also delivering societal benefits. Pseudonymisation of data means replacing any identifying characteristics of data with a pseudonym, or, in other words, a value which does not allow the data subject to be directly identified. The processing of pseudonymised metadata can enable a wide range of smart city applications. For example, during a snow storm, city governments can work with mobile networks to notify connected car owners to remove their cars from a snowplough path. Using pseudonyms, the mobile network can notify owners to move their cars from a street identified by the city, without the city ever knowing the car owners’ identities.
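One common way to implement pseudonymisation as defined above is a keyed hash. The Python sketch below assumes HMAC-SHA256 with a secret key held only by the mobile network. The excerpt does not name a technique, so the functions `pseudonymise` and `to_city_view` and the record fields `subscriber_id` and `street` are hypothetical.

```python
import hashlib
import hmac
from typing import Dict, List


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable pseudonym via HMAC-SHA256.

    The same identifier and key always give the same pseudonym, so records
    can still be linked, but the identifier cannot be read back from it
    without the key.
    """
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def to_city_view(records: List[Dict[str, str]],
                 secret_key: bytes) -> List[Dict[str, str]]:
    """Build the data set shared with the city: pseudonym plus street only."""
    return [
        {
            "pseudonym": pseudonymise(r["subscriber_id"], secret_key),
            "street": r["street"],
        }
        for r in records
    ]
```

In the snowplough scenario, the city could return the pseudonyms of cars parked on the affected street, and the network, which holds the key and the original identifiers, would map them back and send the notifications.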

Should we add bugs to software to put off attackers?

The effectiveness of the scheme also hinges on making the bugs non-exploitable but realistic (indistinguishable from “real” ones). For the moment, the researchers have chosen to concentrate their research on the first requirement. The researchers have developed two strategies for ensuring non-exploitability and used them to automatically add thousands of non-exploitable stack- and heap-based overflow bugs to real-world software such as nginx, libFLAC and file. “We show that the functionality of the software is not harmed and demonstrate that our bugs look exploitable to current triage tools,” they noted. Checking whether a bug can be exploited and actually writing a working exploit for it is a time-consuming process and currently can’t be automated effectively. Making attackers waste time on non-exploitable bugs should frustrate them and, hopefully, in time, serve as a deterrent. The researchers are the first to point out the limitations of this approach: the aforementioned need for the software to be “ok” with crashing, the fact that they still have to find a way to make these bugs indistinguishable from those occurring “naturally”

Quote for the day:

"Coaching is unlocking a person's potential to maximize their own performance. It is helping them to learn rather than teaching them." -- John Whitmore
